Gcore recognized as a Leader in the 2025 GigaOm Radar for AI Infrastructure

- July 22, 2025

We’re proud to share that Gcore has been named a Leader in the 2025 GigaOm Radar for AI Infrastructure, the only European provider to earn a top-tier spot. GigaOm’s rigorous evaluation highlights our leadership in platform capability and innovation, and our expertise in delivering secure, scalable AI infrastructure.

Inside the GigaOm Radar: what’s behind the Leader status

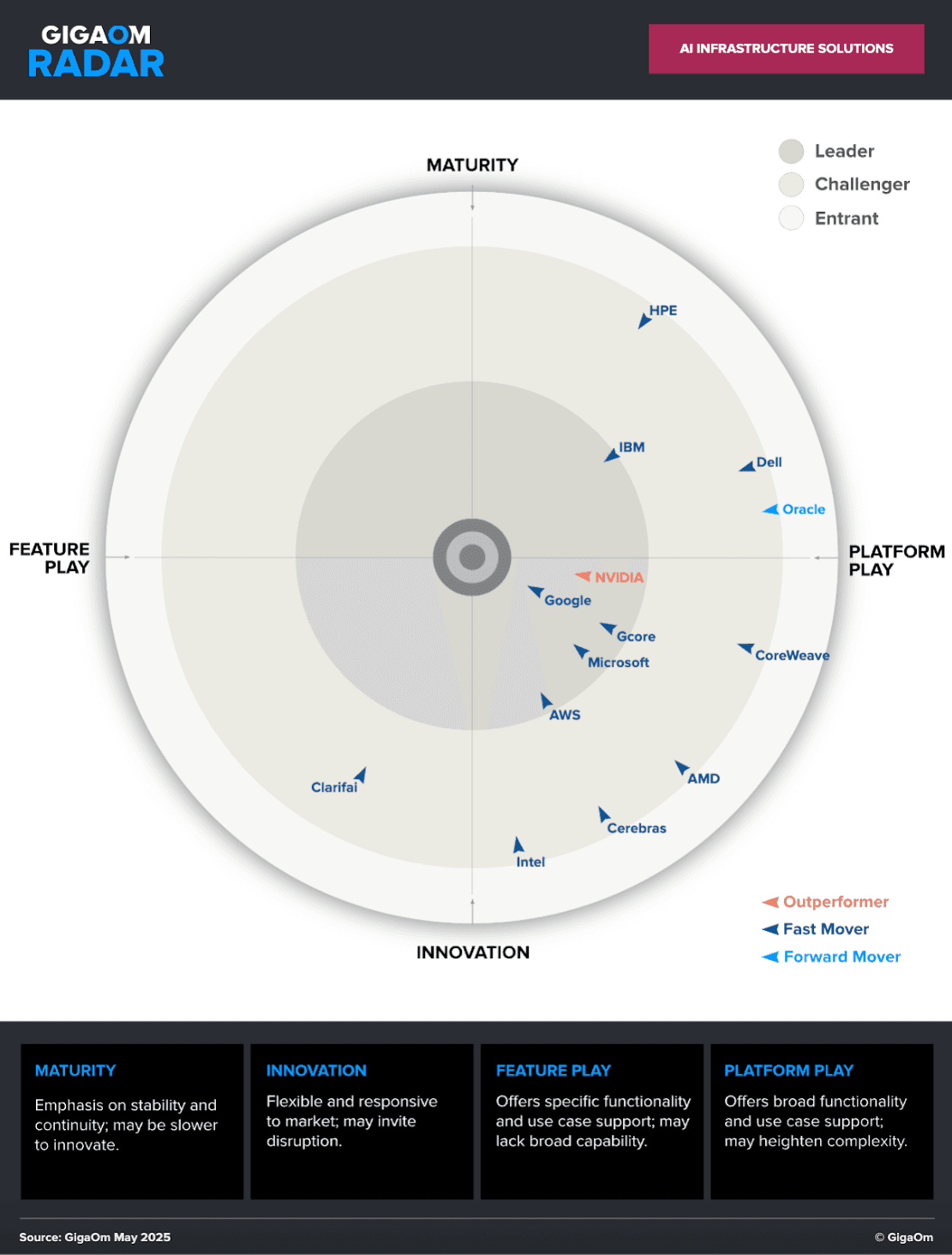

The GigaOm Radar report is a respected industry analysis that evaluates top vendors in critical technology spaces. In this year’s edition, GigaOm assessed 14 of the world’s leading AI infrastructure providers, measuring their strengths across key technical and business metrics. It ranks providers based on factors such as scalability and performance, deployment flexibility, security and compliance, and interoperability.

Alongside the ranking, the report offers valuable insights into the evolving AI infrastructure landscape, including the rise of hybrid AI architectures, advances in accelerated computing, and the increasing adoption of edge deployment to bring AI closer to where data is generated. It also offers strategic takeaways for organizations seeking to build scalable, secure, and sovereign AI capabilities.

Why was Gcore named a top provider?

Gcore stood out and earned its Leader status in the following areas:

- A comprehensive AI platform offering Everywhere Inference and GPU Cloud solutions that support scalable AI from model development to production

- High performance powered by state-of-the-art NVIDIA A100, H100, H200, and GB200 GPUs and a global private network ensuring ultra-low latency

- An extensive model catalogue with flexible deployment options across cloud, on-premises, hybrid, and edge environments, enabling tailored global AI solutions

- Extensive capacity of cutting-edge GPUs and technical support in Europe, supporting European sovereign AI initiatives

“Choosing Gcore AI is a strategic move for organizations prioritizing ultra-low latency, high performance, and flexible deployment options across cloud, on-premises, hybrid, and edge environments. Gcore’s global private network ensures low-latency processing for real-time AI applications, which is a key advantage for businesses with a global footprint.”

GigaOm Radar, 2025

Discover more about the AI infrastructure landscape

At Gcore, we’re dedicated to driving innovation in AI infrastructure. GPU Cloud and Everywhere Inference empower organizations to deploy AI efficiently and securely, on their terms.

If you’re planning your AI infrastructure roadmap or rethinking your current one, this report is a must-read. Explore the report to discover how Gcore can support high-performance AI at scale and help you stay ahead in an AI-driven world.
