Buildah is a tool for building OCI-compatible images through a lower-level coreutils interface. Similar to Podman, Buildah doesn’t depend on a daemon such as Docker or CRI-O, and it doesn’t require root privileges. Buildah provides a command-line tool that replicates all the commands found in a Dockerfile. This allows you to issue Buildah commands from a scripting language such as Bash.

This tutorial shows you how to:

- Use Buildah to package a web-application as a container starting from an existing image, and then run your application with Podman and Docker

- Use Buildah to package a web-application as a container starting from scratch

- Use Buildah to package a web-application as a container starting from a Dockerfile

- Use Buildah to modify an existing container image

- Push images to a public repository

Prerequisites

In this tutorial, we assume basic familiarity with Docker or Podman. To learn about Podman, see our Podman for Docker Users tutorial.

- Buildah. Use the buildah --version command to verify that Buildah is installed:

buildah --version

The following example output shows that Buildah is installed on your computer:

buildah version 1.11.6 (image-spec 1.0.1-dev, runtime-spec 1.0.1-dev)

If Buildah is not installed, follow the instructions from the Buildah Install page.

- Podman. Enter the following command to check if Podman is installed on your system:

podman version

The following example output shows that Podman is installed on your computer:

Version: 1.6.4
RemoteAPI Version: 1
Go Version: go1.12.12
OS/Arch: linux/amd64

Refer to the Podman Installation Instructions page for details on how to install Podman.

- Docker. Use the following command to see if Docker is installed on your system:

docker --version

The following example output shows that Docker is installed on your computer:

Docker version 18.06.3-ce, build d7080c1

For details about installing Docker, refer to the Install Docker page.
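If you would rather check all three prerequisites at once, a minimal Bash sketch along the following lines could work. The is_installed helper is a name made up for this example; it relies only on the shell's built-in command -v lookup and does not verify versions.

```shell
#!/usr/bin/env bash
# Report which of the tools used in this tutorial are available on the PATH.

# is_installed is a helper defined for this sketch, not part of any tool.
is_installed() {
  command -v "$1" >/dev/null 2>&1
}

for tool in buildah podman docker; do
  if is_installed "$tool"; then
    echo "$tool: found at $(command -v "$tool")"
  else
    echo "$tool: not found"
  fi
done
```

Any tool reported as not found can be installed by following the corresponding installation page mentioned above.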

Package a Web-based Application as a Container Starting from an Existing Image

In this section, you’ll use Buildah to package a web-based application as a container, starting from the Alpine Linux image. Then, you’ll run your container image with Podman and Docker.

Alpine Linux is only 5 MB in size, and it lacks several prerequisites that are required to run ExpressJS. Thus, you’ll use apk to install these prerequisites.
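Because Buildah commands are ordinary shell commands, the whole build in this section can also be collected into a single Bash script. The following is only a sketch of that idea, not the tutorial's own tooling: the run helper, the DRY_RUN switch, and the hello-working-container name are assumptions introduced for this example, while the Buildah commands themselves are the ones explained step by step below. By default the script only prints the commands. Set DRY_RUN=0 to execute them, which requires Buildah and a HelloWorld.js file in the current directory. The nodejs-npm package name is taken from this tutorial and may differ on newer Alpine releases.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Names chosen for this sketch; the steps below let Buildah pick the container name.
CONTAINER_NAME="hello-working-container"
IMAGE_NAME="buildah-hello-world"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print a command, and execute it only when DRY_RUN is 0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

build_image() {
  # Create a working container from the alpine image, with a fixed name.
  run buildah from --name "$CONTAINER_NAME" alpine
  # Install Bash, Node.js, and npm inside the container.
  run buildah run "$CONTAINER_NAME" -- apk add bash
  run buildah run "$CONTAINER_NAME" -- apk add --update nodejs nodejs-npm
  # Configure the working directory, then create the project and add Express.
  run buildah config --workingdir /usr/src/app/ "$CONTAINER_NAME"
  run buildah run "$CONTAINER_NAME" -- npm init -y
  run buildah run "$CONTAINER_NAME" -- npm install express --save
  # Copy the application, set the entry point, and write the image.
  run buildah copy "$CONTAINER_NAME" HelloWorld.js
  run buildah config --entrypoint "node HelloWorld.js" "$CONTAINER_NAME"
  run buildah commit "$CONTAINER_NAME" "$IMAGE_NAME"
}

build_image
```

Passing --name to buildah from keeps the container name known in advance, so the script does not need to capture the generated name in a variable.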

- Enter the following command to create a new working container based on the alpine image, and store the name of your new container in an environment variable named container:

container=$(buildah from alpine)
Getting image source signatures
Copying blob c9b1b535fdd9 skipped: already exists
Copying config e7d92cdc71 done
Writing manifest to image destination
Storing signatures

☞ Note that, by default, Buildah constructs the name of the container by appending -working-container to the name of the base image:

echo $container
alpine-working-container

You can override the default behavior by specifying the --name flag with the name of the working container. The following example creates a working container called example-container:

example_container=$(buildah from --name "example-container" alpine)
echo $example_container
example-container

- The Alpine Linux image you just pulled is only 5 MB in size and it lacks basic utilities such as Bash. Run the following command to verify your new container:

buildah run $container bash

The following output shows that the container has been created, but bash is not yet installed:

ERRO[0000] container_linux.go:346: starting container process caused "exec: \"bash\": executable file not found in $PATH"
container_linux.go:346: starting container process caused "exec: \"bash\": executable file not found in $PATH"
error running container: error creating container for [bash]: : exit status 1
ERRO exit status 1

- To install Bash, enter the buildah run command and specify:

  - The name of the container ($container)
  - Two dashes. The commands after -- are passed directly to the container.
  - The command you want to execute inside the container (apk add bash)

buildah run $container -- apk add bash
fetch http://dl-cdn.alpinelinux.org/alpine/v3.11/main/x86_64/APKINDEX.tar.gz
fetch http://dl-cdn.alpinelinux.org/alpine/v3.11/community/x86_64/APKINDEX.tar.gz
(1/5) Installing ncurses-terminfo-base (6.1_p20191130-r0)
(2/5) Installing ncurses-terminfo (6.1_p20191130-r0)
(3/5) Installing ncurses-libs (6.1_p20191130-r0)
(4/5) Installing readline (8.0.1-r0)
(5/5) Installing bash (5.0.11-r1)
Executing bash-5.0.11-r1.post-install
Executing busybox-1.31.1-r9.trigger
OK: 15 MiB in 19 packages

- Similarly to how you've installed bash, run the buildah run command to install node and npm:

buildah run $container -- apk add --update nodejs nodejs-npm
fetch http://dl-cdn.alpinelinux.org/alpine/v3.11/main/x86_64/APKINDEX.tar.gz
fetch http://dl-cdn.alpinelinux.org/alpine/v3.11/community/x86_64/APKINDEX.tar.gz
(1/8) Installing ca-certificates (20191127-r1)
(2/8) Installing c-ares (1.15.0-r0)
(3/8) Installing libgcc (9.2.0-r3)
(4/8) Installing nghttp2-libs (1.40.0-r0)
(5/8) Installing libstdc++ (9.2.0-r3)
(6/8) Installing libuv (1.34.0-r0)
(7/8) Installing nodejs (12.15.0-r1)
(8/8) Installing npm (12.15.0-r1)
Executing busybox-1.31.1-r9.trigger
Executing ca-certificates-20191127-r1.trigger
OK: 73 MiB in 27 packages

- You can use the buildah config command to set the image configuration values. The following command sets the working directory to /usr/src/app/:

buildah config --workingdir /usr/src/app/ $container

- To initialize a new JavaScript project, run the npm init -y command inside the container:

buildah run $container -- npm init -y
Wrote to /package.json:
{
  "name": "",
  "version": "1.0.0",
  "description": "",
  "main": "index.js",
  "directories": {
    "lib": "lib"
  },
  "dependencies": {},
  "devDependencies": {},
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC"
}

- Issue the following command to install Express.JS:

buildah run $container -- npm install express --save
npm WARN @1.0.0 No description
npm WARN @1.0.0 No repository field.
+ express@4.17.1
added 1 package from 8 contributors and audited 126 packages in 1.553s
found 0 vulnerabilities

- Create a file named HelloWorld.js and copy in the following JavaScript source code:

const express = require('express')
const app = express()
const port = 3000
app.get('/', (req, res) => res.send('Hello World!'))
app.listen(port, () => console.log(`Example app listening on port ${port}!`))

- To copy the HelloWorld.js file to your container's working directory, enter the buildah copy command specifying:

  - The name of the container ($container)
  - The name of the file you want to copy (HelloWorld.js)

buildah copy $container HelloWorld.js
c26df5d060c589bda460c34d40c3e8f47f1e401cdf41b379247d23eca24b1c1d

☞ You can copy a file to a different directory in the container by passing the name of the destination directory as an argument. The following example command copies the HelloWorld.js file to the /temp directory:

buildah copy $container HelloWorld.js /temp

- To set the entry point for your container, enter the buildah config command with the --entrypoint argument:

buildah config --entrypoint "node HelloWorld.js" $container

- At this point, you're ready to write the new image using the buildah commit command. It takes two parameters:

  - The name of the working container ($container)
  - The name of the new image (buildah-hello-world)

buildah commit $container buildah-hello-world
Getting image source signatures
Copying blob 5216338b40a7 skipped: already exists
Copying blob 821cca548ffe done
Copying config 0d9f23545e done
Writing manifest to image destination
Storing signatures
0d9f23545ed69ace9be47ed081c98b4ae182801b7fe5b7ef00a49168d65cf4e5

☞ If the provided image name doesn't begin with a registry name, Buildah defaults to adding localhost to the name of the image.

- The following command lists your Buildah images:

buildah imagesREPOSITORY TAG IMAGE ID CREATED SIZElocalhost/buildah-hello-world latest 0d9f23545ed6 56 seconds ago 71.3 MBRunning Your Buildah Image with Podman

- To run your image with Podman, you must first make sure your image is visible in Podman:

podman imagesThe following example output shows the container image created in the previous steps:

REPOSITORY TAG IMAGE ID CREATED SIZElocalhost/buildah-hello-world latest 0d9f23545ed6 About a minute ago 71.3 MB- Run the

buildah-hello-worldimage by entering thepodman runcommand with the following arguments:

dtto specify that the container should be run in the background, and that Podman should allocate a pseudo-TTY for it.-pwith the port on host (3000) that’ll be forwarded to the container port (3000), separated by:.- The name of your image (

buildah-hello-world)

podman run -dt -p 3000:3000 buildah-hello-world332d060fc0009a8088349aba672be3601b76553e5df7643d4788c917528cbd8e- Use the

podman pscommand to see the list of running containers:

podman psCONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES332d060fc000 localhost/buildah-hello-world:latest /bin/sh 23 seconds ago Up 21 seconds ago 0.0.0.0:3000->3000/tcp cool_ritchie- To see the running application, point your browser to

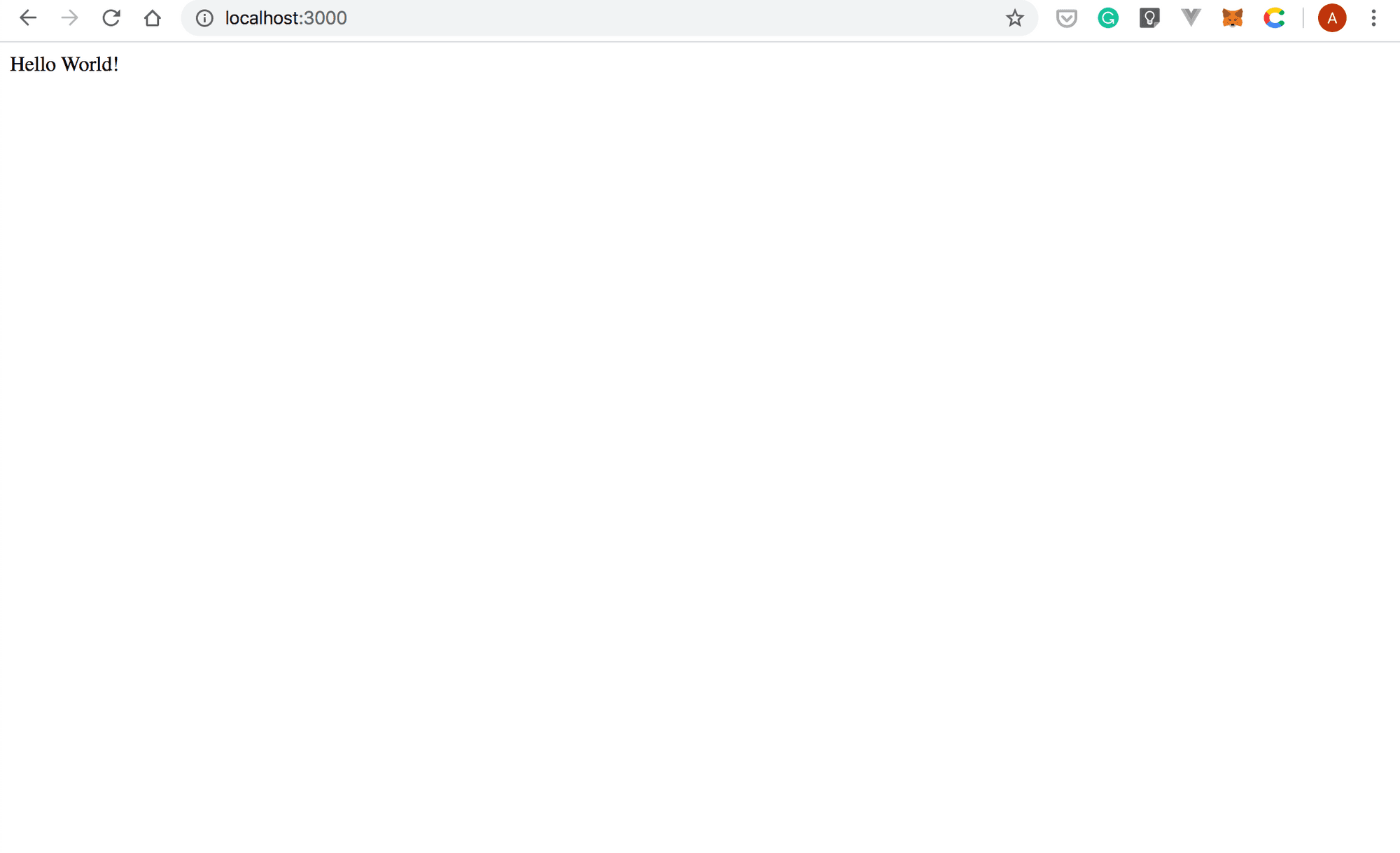

http://localhost:3000

. The application should look as shown in the following screenshot:

- Now that the functionality of the application has been validated, you can stop the running container:

podman kill 332d060fc000
332d060fc000

Running Your Buildah Image with Docker

The container image you’ve built in previous sections is compatible with Docker. In this section, we’ll walk you through the steps required to run the buildah-hello-world image with Docker.

- First, you must push the image to Docker. Enter the buildah push command specifying:

  - The name of the image
  - The destination, which uses the following format: <TRANSPORT>:<DETAILS>.

The following example command uses the docker-daemon transport to push the buildah-hello-world image to Docker:

buildah push buildah-hello-world docker-daemon:buildah-hello-world:latest
Getting image source signatures
Copying blob 5216338b40a7 done
Copying blob 821cca548ffe done
Copying config 0d9f23545e done
Writing manifest to image destination
Storing signatures

- List the Docker images stored on your local machine:

docker images
REPOSITORY            TAG      IMAGE ID       CREATED          SIZE
buildah-hello-world   latest   0d9f23545ed6   16 minutes ago   64.5MB

- Run the buildah-hello-world container image with Docker:

docker run -dt -p 3000:3000 buildah-hello-world
b0f29ff964cd84bf204b3f30f615581c4bb67c4a880aa871ce9c89db48e68720

- After a few seconds, enter the docker ps command to display the list of running containers:

docker ps
CONTAINER ID   IMAGE                 COMMAND                  CREATED          STATUS          PORTS                    NAMES
b0f29ff964cd   buildah-hello-world   "/bin/sh -c 'node He…"   16 seconds ago   Up 13 seconds   0.0.0.0:3000->3000/tcp   goofy_chandrasekhar

- To see the running application, point your browser to http://localhost:3000. The application should look as shown in the following screenshot:

- Stop the running container with:

docker kill b0f29ff964cd
b0f29ff964cd

Package a Web-application as a Container Starting from Scratch

With Buildah, you can start from an image that’s basically an empty shell, except for some container metadata. Once you create such an image, you can then add more packages to it. This is useful when you want to create small containers, with a minimum number of packages installed. In this section, you’ll build the HelloWorld application starting from scratch.

An empty container image doesn’t have bash, yum, or any other tools installed. Thus, to install Node and Express.JS on it, you’ll mount the container’s file-system to a directory on the host, and then use the host’s package management system to install the required packages.

- If you're running Buildah as an unprivileged user, mounting the container's file-system will fail unless you enter the user namespace with the following command:

buildah unshare

- To start building from an empty container image, enter the buildah from command, and specify scratch as an argument:

container=$(buildah from scratch)

☞ Note that the above command stores the name of your working container in the container environment variable:

echo $container
working-container-1

- Issue the following buildah mount command to mount the container filesystem to a directory on the host, and store the path of the directory in the mnt environment variable:

mnt=$(buildah mount $container)

- Use the echo command to see the name of the directory where the container filesystem is mounted:

echo $mnt
/home/vagrant/.local/share/containers/storage/overlay/e1df4ce46bb88907af45e4edb7379fac8781928ac0cafe0c1a6fc799f4f7a48b/merged

- You can check that the container filesystem is empty with:

ls $mnt
[root@localhost ~]#

- Use the host's package manager to install software into the container. Enter the yum install command specifying the following arguments:

  - --installroot to configure the alternative install root directory ($mnt). The packages will be installed relative to this directory.
  - --releasever to indicate the version you want to install the packages for. Our example uses centos-release-8.
  - The name of the packages you want to install (bash and coreutils).
  - The -y flag to automatically answer yes to all questions.

yum install --releasever=centos-release-8 --installroot $mnt bash coreutils -y
shadow-utils-2:4.6-8.el8.x86_64
systemd-239-18.el8_1.2.x86_64
systemd-libs-239-18.el8_1.2.x86_64
systemd-pam-239-18.el8_1.2.x86_64
systemd-udev-239-18.el8_1.2.x86_64
trousers-lib-0.3.14-4.el8.x86_64
tzdata-2019c-1.el8.noarch
util-linux-2.32.1-17.el8.x86_64
which-2.21-10.el8.x86_64
xz-5.2.4-3.el8.x86_64
xz-libs-5.2.4-3.el8.x86_64
zlib-1.2.11-10.el8.x86_64
Complete!

Note that the above output was truncated for brevity.

- Clean up the temporary files that yum created as follows:

yum clean --installroot $mnt all
24 files removed

- Validate the functionality of your container image. Enter the following buildah run command to run bash inside of the container:

buildah run $container bash
bash-4.4#

- You can issue a few commands to make sure everything works as expected. Once you're done, enter the exit command to terminate the bash session:

exit

- Enter the following commands to move into the directory where you mounted the container's filesystem, and then download the Node.JS archive:

cd $mnt && wget https://nodejs.org/dist/v12.16.1/node-v12.16.1-linux-x64.tar.xz
--2020-02-24 13:50:07-- https://nodejs.org/dist/v12.16.1/node-v12.16.1-linux-x64.tar.xz
Resolving nodejs.org (nodejs.org)... 104.20.22.46, 104.20.23.46, 2606:4700:10::6814:162e, ...
Connecting to nodejs.org (nodejs.org)|104.20.22.46|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 14591852 (14M) [application/x-xz]
Saving to: 'node-v12.16.1-linux-x64.tar.xz'
node-v12.16.1-linux-x 100%[=======================>] 13.92M 7.25MB/s in 1.9s
2020-02-24 13:50:09 (7.25 MB/s) - 'node-v12.16.1-linux-x64.tar.xz' saved [14591852/14591852]

- To extract the files from the archive and remove the first component from the file names, run the tar xf command with --strip-components=1:

tar xf node-v12.16.1-linux-x64.tar.xz --strip-components=1

- Delete the archive:

rm -f node-v12.16.1-linux-x64.tar.xz

- To make sure everything works as expected, use the buildah run command to run node inside of the container:

buildah run $container node
Welcome to Node.js v12.16.1.
Type ".help" for more information.
>

- Type .exit to exit the Node.JS interactive shell.

- Now that everything is set up, you can install Express.JS and create the HelloWorld project. Follow steps 4 to 9 from the "Package a Web-based Application as a Container Starting from an Existing Image" section.

- Once you've finished the above steps, unmount the container filesystem:

buildah unmount $container

- Execute the buildah commit command to create a new image called buildah-demo-from-scratch:

buildah commit $container buildah-demo-from-scratch
Getting image source signatures
Copying blob a9a2ac73e013 done
Copying config ec14304d59 done
Writing manifest to image destination
Storing signatures
ec14304d5906c7b8fb9a485ff959e4a6c337115245a827858bf6ba808f5f4e0e

- To see the list of your Buildah images, run the buildah images command:

buildah images
REPOSITORY                            TAG      IMAGE ID       CREATED         SIZE
localhost/buildah-demo-from-scratch   latest   ec14304d5906   3 minutes ago   582 MB

- You can use the buildah inspect command to retrieve more details about the working container used to build the buildah-demo-from-scratch image:

buildah inspect $container
{
  "Type": "buildah 0.0.1",
  "FromImage": "",
  "FromImageID": "",
  "FromImageDigest": "",
  "Config": "",
  "Manifest": "",
  "Container": "working-container",
  "ContainerID": "f974b8b06921a57edddb5735ee7fc0c7176051ff1b76d0523bf2879d7865afba",
  "MountPoint": "",
  "ProcessLabel": "system_u:system_r:container_t:s0:c435,c738",
  "MountLabel": "system_u:object_r:container_file_t:s0:c435,c738",
  "ImageAnnotations": null,
  "ImageCreatedBy": "",
  "OCIv1": {
    "created": "2020-02-27T14:46:38.379626079Z",
    "architecture": "amd64",
    "os": "linux",
    "config": { "Entrypoint": [ "/bin/sh", "-c", "node HelloWorld.js" ], "WorkingDir": "/usr/src/app/" },
    "rootfs": { "type": "", "diff_ids": null }
  },
  "Docker": {
    "created": "2020-02-27T14:46:38.379626079Z",
    "container_config": { "Hostname": "", "Domainname": "", "User": "", "AttachStdin": false, "AttachStdout": false, "AttachStderr": false, "Tty": false, "OpenStdin": false, "StdinOnce": false, "Env": null, "Cmd": null, "Image": "", "Volumes": null, "WorkingDir": "/usr/src/app/", "Entrypoint": [ "/bin/sh", "-c", "node HelloWorld.js" ], "OnBuild": [], "Labels": null },
    "config": { "Hostname": "", "Domainname": "", "User": "", "AttachStdin": false, "AttachStdout": false, "AttachStderr": false, "Tty": false, "OpenStdin": false, "StdinOnce": false, "Env": null, "Cmd": null, "Image": "", "Volumes": null, "WorkingDir": "/usr/src/app/", "Entrypoint": [ "/bin/sh", "-c", "node HelloWorld.js" ], "OnBuild": [], "Labels": null },
    "architecture": "amd64",
    "os": "linux"
  },
  "DefaultMountsFilePath": "",
  "Isolation": "IsolationOCIRootless",
  "NamespaceOptions": [
    { "Name": "cgroup", "Host": true, "Path": "" },
    { "Name": "ipc", "Host": false, "Path": "" },
    { "Name": "mount", "Host": false, "Path": "" },
    { "Name": "network", "Host": true, "Path": "" },
    { "Name": "pid", "Host": false, "Path": "" },
    { "Name": "user", "Host": true, "Path": "" },
    { "Name": "uts", "Host": false, "Path": "" }
  ],
  "ConfigureNetwork": "NetworkDefault",
  "CNIPluginPath": "/usr/libexec/cni:/opt/cni/bin",
  "CNIConfigDir": "/etc/cni/net.d",
  "IDMappingOptions": { "HostUIDMapping": true, "HostGIDMapping": true, "UIDMap": [], "GIDMap": [] },
  "DefaultCapabilities": [ "CAP_AUDIT_WRITE", "CAP_CHOWN", "CAP_DAC_OVERRIDE", "CAP_FOWNER", "CAP_FSETID", "CAP_KILL", "CAP_MKNOD", "CAP_NET_BIND_SERVICE", "CAP_SETFCAP", "CAP_SETGID", "CAP_SETPCAP", "CAP_SETUID", "CAP_SYS_CHROOT" ],
  "AddCapabilities": [],
  "DropCapabilities": [],
  "History": [ { "created": "2020-02-27T14:56:04.319174231Z" } ],
  "Devices": []
}

- The steps for running the image are similar to the ones from the "Running Your Buildah Image with Podman" section. For the sake of brevity, those steps are not repeated here.

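The core of the from-scratch build (create an empty container, mount it, install packages from the host, unmount, commit) can be sketched as one Bash script. This is an illustration under stated assumptions rather than a complete build: the run helper, the DRY_RUN switch, and the placeholder container name and mount path are introduced for this example, and the Node.js download and the Express.JS steps are left out. By default it only prints the commands. With DRY_RUN=0 it needs Buildah and yum, and as an unprivileged user it would have to run inside the user namespace entered with buildah unshare.

```shell
#!/usr/bin/env bash
set -euo pipefail

IMAGE_NAME="buildah-demo-from-scratch"
DRY_RUN="${DRY_RUN:-1}"   # assumption of this sketch: print commands unless set to 0

# Print a command, and execute it only when DRY_RUN is 0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

build_from_scratch() {
  local container mnt
  if [ "$DRY_RUN" = "0" ]; then
    # Capture the generated container name and the mount point.
    container=$(buildah from scratch)
    mnt=$(buildah mount "$container")
  else
    # Placeholder values so the dry run can print complete commands.
    container="working-container"
    mnt="/tmp/example-mount"
    echo "+ buildah from scratch"
    echo "+ buildah mount $container"
  fi

  # Install packages into the mounted filesystem with the host's package manager.
  run yum install -y --releasever=centos-release-8 --installroot "$mnt" bash coreutils
  run yum clean --installroot "$mnt" all

  # Unmount the container filesystem and write the image.
  run buildah unmount "$container"
  run buildah commit "$container" "$IMAGE_NAME"
}

build_from_scratch
```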
Package a Web-application as a Container Starting from a Dockerfile

- Create a directory called from-dockerfile and then move into it:

mkdir from-dockerfile && cd from-dockerfile/

- Use a plain-text editor to create a file called Dockerfile, and copy in the following snippet:

FROM node:10
WORKDIR /usr/src/app
RUN npm init -y
RUN npm install express --save
COPY HelloWorld.js .
CMD [ "node", "HelloWorld.js" ]

- Create a file named HelloWorld.js with the following content:

const express = require('express')
const app = express()
const port = 3000
app.get('/', (req, res) => res.send('Hello World!'))
app.listen(port, () => console.log(`Example app listening on port ${port}!`))

- Build the container image. Enter the buildah bud command specifying the -t flag with the name Buildah should apply to the built image, and the build context directory (.):

buildah bud -t buildah-from-dockerfile .
STEP 1: FROM node:10
STEP 2: WORKDIR /usr/src/app
STEP 3: RUN npm init -y
Wrote to /usr/src/app/package.json:
{
  "name": "app",
  "version": "1.0.0",
  "description": "",
  "main": "index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC"
}
STEP 4: RUN npm install express --save
npm notice created a lockfile as package-lock.json. You should commit this file.
npm WARN app@1.0.0 No description
npm WARN app@1.0.0 No repository field.
+ express@4.17.1
added 50 packages from 37 contributors and audited 126 packages in 4.989s
found 0 vulnerabilities
STEP 5: COPY HelloWorld.js .
STEP 6: CMD [ "node", "HelloWorld.js" ]
STEP 7: COMMIT buildah-from-dockerfile
Getting image source signatures
Copying blob 7948c3e5790c skipped: already exists
Copying blob 4d1ab3827f6b skipped: already exists
Copying blob 69dfa7bd7a92 skipped: already exists
Copying blob 01727b1a72df skipped: already exists
Copying blob 1d7382716a27 skipped: already exists
Copying blob 03dc1830d2d5 skipped: already exists
Copying blob 1e1795dd2c10 skipped: already exists
Copying blob c8a8d3d42bc1 skipped: already exists
Copying blob 072dcfd76a1e skipped: already exists
Copying blob fc67e152fd86 done
Copying config 7619bf0e33 done
Writing manifest to image destination
Storing signatures
7619bf0e33165f5c3dc6da00cb101f2195484bff3e59f4c6f57a41c07647d407
7619bf0e33165f5c3dc6da00cb101f2195484bff3e59f4c6f57a41c07647d407

- The following command lists your Buildah images:

buildah images
REPOSITORY                          TAG      IMAGE ID       CREATED          SIZE
localhost/buildah-from-dockerfile   latest   7619bf0e3316   52 seconds ago   944 MB

- Enter the podman run command to run the buildah-from-dockerfile image, and then list the running containers with podman ps:

podman run -dt -p 3000:3000 buildah-from-dockerfile
dbbae173dca0ca5b602c0b9a70055886381cb7df5ae25fbb4bd81c75a4bcb50d

podman ps
CONTAINER ID   IMAGE                                      COMMAND                CREATED         STATUS             PORTS                    NAMES
dbbae173dca0   localhost/buildah-from-dockerfile:latest   node HelloWorld.j...   4 seconds ago   Up 3 seconds ago   0.0.0.0:3000->3000/tcp   priceless_cartwright

- Point your browser to http://localhost:3000, and you should see something similar to the following screenshot:

- Stop the container by entering the podman kill command followed by the identifier of the buildah-from-dockerfile container (dbbae173dca0):

podman kill dbbae173dca0
dbbae173dca0ca5b602c0b9a70055886381cb7df5ae25fbb4bd81c75a4bcb50d

Use Buildah to Modify a Container Image

With Buildah, you can modify a container in the following ways:

- Mount the container and copy files to it

- Use the buildah config command

- Use the buildah copy command

Mount the Container and Copy Files to It

- Run the following command to create a new container using the buildah-from-dockerfile image as a starting point:

buildah from buildah-from-dockerfile

The above command prints the name of your new container:

buildah-from-dockerfile-working-container

- Use the buildah containers command to see the list of your working containers:

buildah containers
CONTAINER ID   BUILDER   IMAGE ID       IMAGE NAME                         CONTAINER NAME
78c4225c8c37   *         7619bf0e3316   localhost/buildah-from-docker...   buildah-from-dockerfile-working-container

- If you're running Buildah as an unprivileged user, enter the user namespace with:

buildah unshare

- Mount the container filesystem to a directory on the host, and save the name of that directory in an environment variable called mount by entering the following command:

mount=$(buildah mount buildah-from-dockerfile-working-container)

- You can use the echo command to print the path of the folder where the container filesystem is mounted:

echo $mount
/home/vagrant/.local/share/containers/storage/overlay/83b2d731b920653a569795cf75f4902a1e148dab61f4cb41bcc37bae0f5d6655/merged

- Move into the /usr/src/app folder:

cd $mount/usr/src/app/

- Open the HelloWorld.js file in a plain-text editor, and edit the line that prints the Hello World! message to:

app.get('/', (req, res) => res.send('Hello World (modified with Buildah)!'))

Your HelloWorld.js file should look similar to the listing below:

cat HelloWorld.js
const express = require('express')
const app = express()
const port = 3000
app.get('/', (req, res) => res.send('Hello World (modified with Buildah)!'))
app.listen(port, () => console.log(`Example app listening on port ${port}!`))

- Save the changes to a new container image called modified-container:

buildah commit buildah-from-dockerfile-working-container modified-container
Getting image source signatures
Copying blob 7948c3e5790c skipped: already exists
Copying blob 4d1ab3827f6b skipped: already exists
Copying blob 69dfa7bd7a92 skipped: already exists
Copying blob 01727b1a72df skipped: already exists
Copying blob 1d7382716a27 skipped: already exists
Copying blob 03dc1830d2d5 skipped: already exists
Copying blob 1e1795dd2c10 skipped: already exists
Copying blob c8a8d3d42bc1 skipped: already exists
Copying blob 072dcfd76a1e skipped: already exists
Copying blob fc67e152fd86 skipped: already exists
Copying blob a546faf200ff done
Copying config d3ac43ac8d done
Writing manifest to image destination
Storing signatures
d3ac43ac8da20aef987367353e56e22a1a2330176c08e255c72670b3b08c1e14

- If you run the buildah images command, you should see both images:

buildah images
REPOSITORY                          TAG      IMAGE ID       CREATED          SIZE
localhost/modified-container        latest   d3ac43ac8da2   46 seconds ago   944 MB
localhost/buildah-from-dockerfile   latest   7619bf0e3316   14 minutes ago   944 MB

- Unmount the root filesystem of your container by entering the following buildah unmount command:

buildah unmount buildah-from-dockerfile-working-container
78c4225c8c377d8a018583586e2f76932204f20b4f3621fedb1ab3d41f8a3240

- Run the modified-container image with Podman:

podman run -dt -p 3000:3000 modified-container
70105ac094b672c98f56290d25fa5406a7c51bf401cff586c7a356b4f19f1320

- Enter the podman ps command to print the list of running containers:

podman ps
CONTAINER ID   IMAGE                                 COMMAND                CREATED         STATUS             PORTS                    NAMES
70105ac094b6   localhost/modified-container:latest   node HelloWorld.j...   4 seconds ago   Up 4 seconds ago   0.0.0.0:3000->3000/tcp   pedantic_rhodes

- To see the modified application in action, point your browser to http://localhost:3000.
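If you prefer to check the running application from the command line rather than a browser, a small Bash sketch like the following could be used. It assumes curl is installed on the host. The response_contains and check_app helpers are names made up for this example.

```shell
#!/usr/bin/env bash

# Succeeds when the text on stdin contains the expected string.
# (response_contains and check_app are helper names made up for this sketch.)
response_contains() {
  grep -qF -- "$1"
}

# Fetch a URL with curl and report whether the body contains the expected text.
check_app() {
  local url="$1" expected="$2" body
  body=$(curl -fsS "$url") || { echo "FAIL: could not fetch $url" >&2; return 1; }
  if printf '%s\n' "$body" | response_contains "$expected"; then
    echo "OK: $url returned the expected text"
  else
    echo "FAIL: $url did not return '$expected'" >&2
    return 1
  fi
}
```

With the modified-container image running as shown above, you could call check_app http://localhost:3000 'Hello World (modified with Buildah)!' to confirm that the change made it into the image.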

Modify a Container with the buildah config Command

- To see the list of your working containers, use the buildah containers command:

buildah containers
CONTAINER ID   BUILDER   IMAGE ID       IMAGE NAME                         CONTAINER NAME
305591a5116c   *         7619bf0e3316   localhost/buildah-from-docker...   buildah-from-dockerfile-working-container

- In this example, you'll modify the configuration value for the author field. Run the buildah config command specifying the following parameters:

  - --author with the name of the author.
  - The identifier of the container (305591a5116c)

buildah config --author='Andrei Popescu' 305591a5116c

- Enter the buildah inspect command to display detailed information about your container:

buildah inspect 305591a5116c
{
  "Docker": {
    "created": "2020-02-24T14:41:01.41295511Z",
    "container_config": { "Hostname": "", "Domainname": "", "User": "", "AttachStdin": false, "AttachStdout": false, "AttachStderr": false, "Tty": false, "OpenStdin": false, "StdinOnce": false, "Env": [ "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin", "NODE_VERSION=10.19.0", "YARN_VERSION=1.21.1" ], "Cmd": [ "node", "HelloWorld.js" ], "Image": "", "Volumes": null, "WorkingDir": "/usr/src/app", "Entrypoint": [ "docker-entrypoint.sh" ], "OnBuild": [], "Labels": null },
    "author": "Andrei Popescu",
    "config": { "Hostname": "", "Domainname": "", "User": "", "AttachStdin": false, "AttachStdout": false, "AttachStderr": false, "Tty": false, "OpenStdin": false, "StdinOnce": false, "Env": [ "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin", "NODE_VERSION=10.19.0", "YARN_VERSION=1.21.1" ], "Cmd": [ "node", "HelloWorld.js" ], "Image": "", "Volumes": null, "WorkingDir": "/usr/src/app", "Entrypoint": [ "docker-entrypoint.sh" ], "OnBuild": [], "Labels": null },

Note that the above output was truncated for brevity.

As you can see, the author field has been updated:

"author": "Andrei Popescu",

Modify a Container with the buildah copy Command

- List your Buildah images with:

buildah imagesREPOSITORY TAG IMAGE ID CREATED SIZElocalhost/buildah-from-dockerfile latest 4c4c1019785e 19 seconds ago 944 MBdocker.io/library/node 10 aa6432763c11 5 days ago 940 MB- Create a new working container using

buildah-from-dockerfileas the starting image:

container=$(buildah from buildah-from-dockerfile)- The above command saves the name of your new working container into an environment variable called

container. Use theechocommand to see the name of your new container:

echo $containerbuildah-from-dockerfile-working-container- Use a plain-text editor to open the

HelloWorld.js. Next, modify the line of code that prints theHello World!message to the following:

app.get('/', (req, res) => res.send('Hello World (modified with the buildah copy command)!'))Your HelloWorld.js file should look similar to the following listing:

const express = require('express')const app = express()const port = 3000app.get('/', (req, res) => res.send('Hello World (modified with the buildah copy command)!'))app.listen(port, () => console.log(`Example app listening on port ${port}!`))- Enter the following

buildah copycommand to copy the content of theHelloWorld.jsfile into the container’s/usr/src/app/directory:

buildah copy buildah-from-dockerfile-working-container HelloWorld.js /usr/src/app/bf36dd7b6ba5d3f520835f5e850e4303bd830bd0934d1cb8a11c4c45cf3ebcb8- The

buildah runis different from thepodman runcommand. Since Buildah is a tool aimed at building images, you can’t usebuildah runto map ports or mount volumes. You can think of it as similar to theRUNcommand from a Dockerfile. Thus, to test the changes before saving them to a new image, you must run a shell inside of the container:

buildah run $container -- bash- Use the

catcommand to list the contents of theHelloWorld.jsfile:

cat HelloWorld.jsconst express = require('express')const app = express()const port = 3000app.get('/', (req, res) => res.send('Hello World (modified with the buildah copy command)!'))app.listen(port, () => console.log(`Example app listening on port ${port}!`))- Type

exitto return to the host:

exit- Save your changes to a new container image named

modified-with-copy. Enter thebuildah commitcommand passing it the following parameters:

- The name of your working container (

$container) - The name of your new container (

modified-with-copy)

buildah commit $container modified-with-copyGetting image source signaturesCopying blob 2c995a2087c1 skipped: already existsCopying blob 00adafc8e77b skipped: already existsCopying blob d040e6423b7a skipped: already existsCopying blob 162804eaaa1e skipped: already existsCopying blob 91daf9fc6311 skipped: already existsCopying blob 236d3097407d skipped: already existsCopying blob 92086f81cd8d skipped: already existsCopying blob 90aa9e20811b skipped: already existsCopying blob cea8dd7dcda1 skipped: already existsCopying blob 490adad7924f skipped: already existsCopying blob fc29e33720c1 doneCopying config c6df996bc7 doneWriting manifest to image destinationStoring signaturesc6df996bc740c9670c87470f65124f8a8a3b74ecde3dc38038530a98209e5148- Enter the

podman imagescommand to list the images available on your system:

podman imagespodman imagesREPOSITORY TAG IMAGE ID CREATED SIZElocalhost/modified-with-copy latest c6df996bc740 About a minute ago 944 MBlocalhost/buildah-from-dockerfile latest efd9caedf198 24 minutes ago 944 MBdocker.io/library/node 10 aa6432763c11 5 days ago 940 MB- Run the modified image with Podman:

podman run -dt -p 3000:3000 modified-with-copyf2bf06e4d6010adab6acf92db063a4c11f821fb96c2912266ac9900752f53bc4- Make sure that the modified container works as expected by pointing your browser to

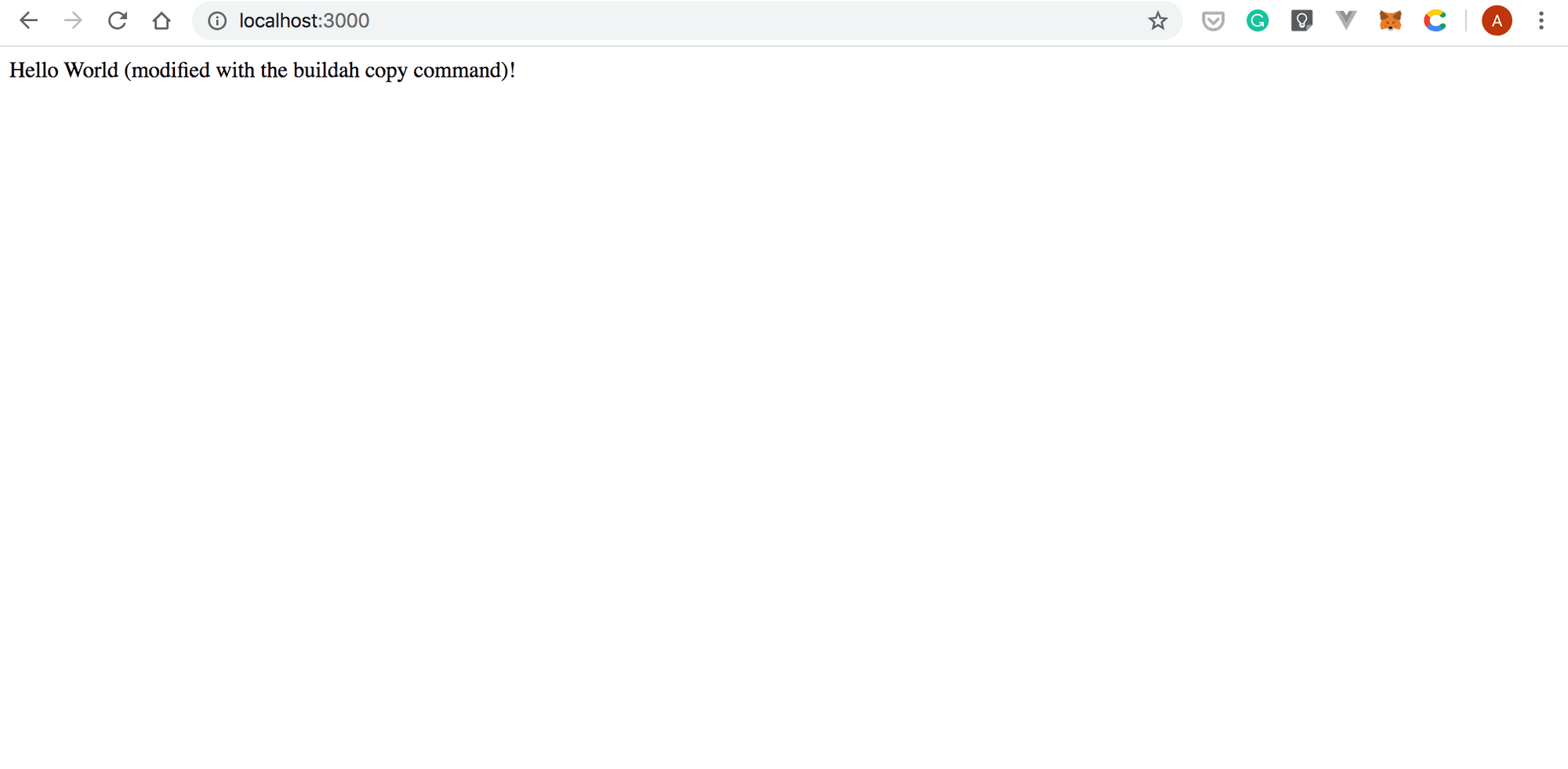

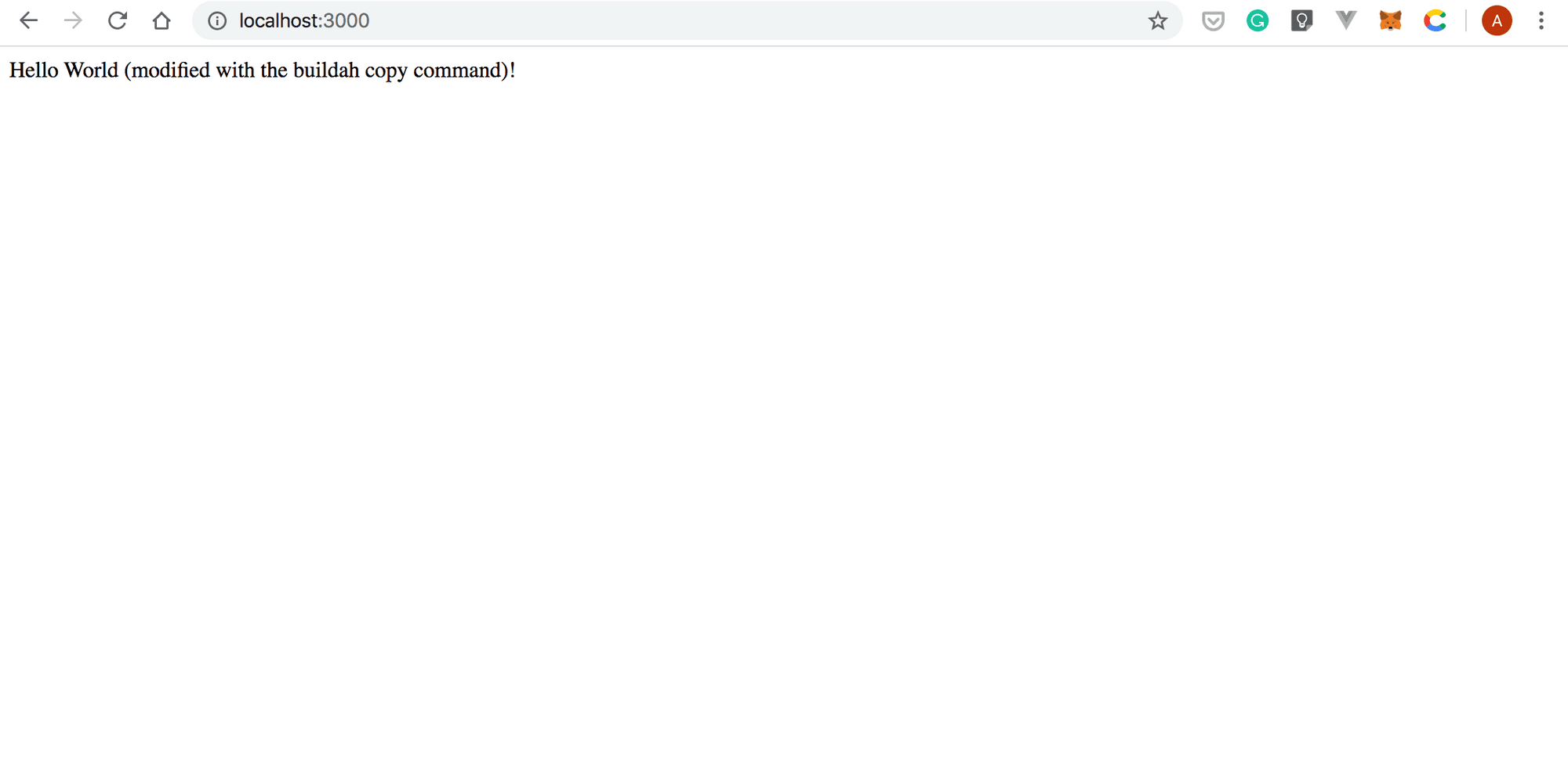

http://localhost:3000

:

Use Buildah to Push an Image to a Public Repository

In this section, we’ll show how you can push a Buildah image to Quay.io. Then, you’ll use Docker to pull and run it on your system.
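Push destinations take the form of a transport followed by transport-specific details, as in the docker-daemon example from the Docker section. As a small illustration, the two destination strings used in this tutorial can be assembled with helper functions like the ones below. The function names and the default tag of latest are assumptions made for this sketch, not part of Buildah.

```shell
#!/usr/bin/env bash

# Hypothetical helpers: build destination strings in <transport>:<details> form.

# docker-daemon transport (local Docker daemon): docker-daemon:<name>:<tag>
docker_daemon_dest() {
  local name="$1" tag="${2:-latest}"
  printf 'docker-daemon:%s:%s\n' "$name" "$tag"
}

# docker transport (remote registry): docker://<registry>/<namespace>/<name>:<tag>
registry_dest() {
  local registry="$1" namespace="$2" name="$3" tag="${4:-latest}"
  printf 'docker://%s/%s/%s:%s\n' "$registry" "$namespace" "$name" "$tag"
}

docker_daemon_dest buildah-hello-world
registry_dest quay.io andreipope modified-with-copy
```

A destination built this way can be passed straight to the push command, for example buildah push modified-with-copy "$(registry_dest quay.io andreipope modified-with-copy)".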

- Log in to Quay.io with the following command:

buildah login quay.io

Buildah will prompt you to enter your username and password:

Username:
Password:
Login Succeeded!

- Use the buildah images command to see the list of Buildah images available on your system:

buildah images
REPOSITORY                          TAG      IMAGE ID       CREATED          SIZE
localhost/modified-with-copy        latest   c6df996bc740   31 minutes ago   944 MB
localhost/buildah-from-dockerfile   latest   efd9caedf198   54 minutes ago   944 MB
docker.io/library/node              10       aa6432763c11   5 days ago       940 MB

- To push an image to Quay.io, enter the buildah push command specifying:

  - The source.
  - The destination. This uses the following format: <transport>:<destination>.

The following example command pushes the modified-with-copy image to the andreipope/modified-with-copy repository:

buildah push modified-with-copy docker://quay.io/andreipope/modified-with-copy:latest
Getting image source signatures
Copying blob d040e6423b7a done
Copying blob 236d3097407d done
Copying blob 2c995a2087c1 done
Copying blob 00adafc8e77b skipped: already exists
Copying blob 91daf9fc6311 done
Copying blob 162804eaaa1e done
Copying blob 92086f81cd8d skipped: already exists
Copying blob 90aa9e20811b skipped: already exists
Copying blob cea8dd7dcda1 skipped: already exists
Copying blob 490adad7924f skipped: already exists
Copying blob fc29e33720c1 skipped: already exists
Copying config c6df996bc7 done
Writing manifest to image destination
Copying config c6df996bc7 done
Writing manifest to image destination
Writing manifest to image destination
Storing signatures

- Pull the image from Quay.io using the docker pull command:

docker pull quay.io/andreipope/modified-with-copy:latest
latest: Pulling from andreipope/modified-with-copy
571444490ac9: Pull complete
a8c44c6007c2: Pull complete
78082700aa2c: Pull complete
c3a1a87b600e: Pull complete
307b97780b43: Pull complete
e6bc907e1abd: Pull complete
f7d60f9c5e35: Pull complete
6d95f9b81e1b: Pull complete
3fc72998ebc8: Pull complete
632905c48be3: Pull complete
29b4e1262307: Pull complete
Digest: sha256:a57849f1f639b5f4e01af33fdf4b86238dead6ddaf8f95b4e658863dfcf22700
Status: Downloaded newer image for quay.io/andreipope/modified-with-copy:latest

- List your Docker images:

docker images
REPOSITORY                              TAG      IMAGE ID       CREATED             SIZE
quay.io/andreipope/modified-with-copy   latest   05b3081ac594   About an hour ago   914MB

- Issue the following docker run command to run the modified-with-copy image:

docker run -dt -p 3000:3000 quay.io/andreipope/modified-with-copy
6394d8a8b60106125a062504d3764fcd0034b06947cfe303f9be0e87b82fee88

- Point your browser to http://localhost:3000 and you should see something similar to the screenshot below:

In this tutorial, you learned how to:

- Use Buildah to build an image from an existing image

- Build an image from Scratch

- Build an image from a Dockerfile

- Use Buildah to modify an existing container

- Run your Buildah images with Podman and Docker

- Push images to a public repository

We hope this blog post has been helpful and that now you know how to build container images with Buildah.

Thanks for reading!
