Features

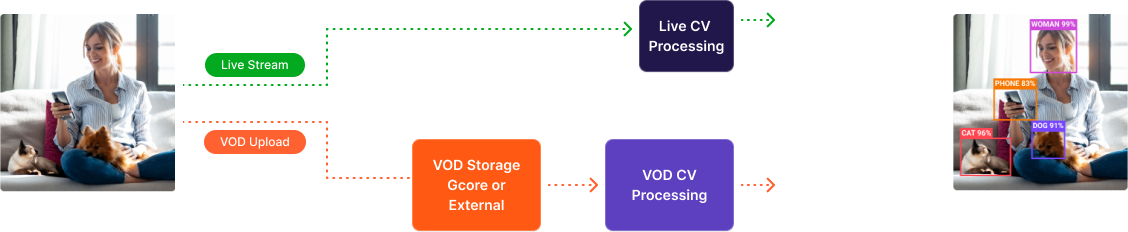

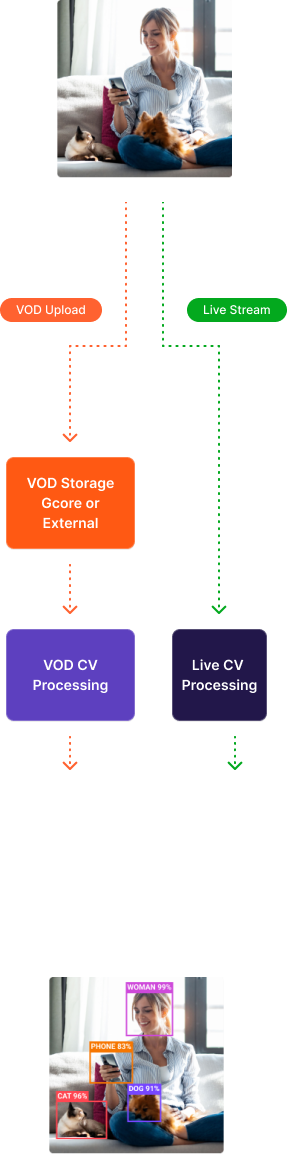

Automatic Moderation of User-Generated VOD Content

The service identifies videos that cannot be published because of ethical, geographic, or other regulations. Most invalid content is identified automatically, quickly, and accurately. A smaller share is sent for manual moderation: the video is tagged with the detection probabilities and passed to human moderators for review.

Automatic Live Analysis (beta)

Livestreams are continuously analyzed for specified objects. When one of them appears in a livestream, restrictive actions can be applied. This makes it possible to automatically track whether users comply with content publishing rules.

Content Annotation and Tagging (beta)

Computer vision (CV) allows you to tag videos based on the identification of scenes, actions, or specified objects. Tags are included in the metadata, and they can serve as the basis for content cataloging or be displayed in video descriptions.

AI ASR

AI Automated Speech Recognition (ASR) lets owners of both VOD and live content convert speech to text. Voice recognition software processes human speech and turns it into text, with support for more than 100 languages.

What Can Be Detected with the Help of AI Video Services

- People

- Faces

- Pets

- Household items

- Logos

- Vehicles and means of transport

- Over 1,000 objects

- Dancing

- Eating

- Fitness

- Many other actions

- Female and male faces

- Covered and exposed body parts

- Other body parts

Usage Guides

for Live & VOD

The result of this function is metadata with a list of found objects and the probabilities of their detection.
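
One way to consume this metadata is to filter detections by score. The following is a minimal sketch, not the service's client code: it assumes the field names from the sample response in this section (`detection-annotations`, `frame-no`, `annotations`, `object-name`, `object-score`), and the helper `find_objects` with its `min_score` parameter is hypothetical.

```python
import json

# Sample payload shaped like the metadata in this section (values are illustrative).
SAMPLE = """
{
  "detection-annotations": [
    {
      "frame-no": 0,
      "annotations": [
        {"top": 390, "left": 201, "width": 32, "height": 32,
         "object-name": "cell phone", "object-score": 0.2604694366455078}
      ]
    }
  ]
}
"""


def find_objects(metadata, min_score=0.0):
    """Return (frame number, object name, score) for detections at or above min_score."""
    hits = []
    for frame in metadata.get("detection-annotations", []):
        for ann in frame.get("annotations", []):
            if ann["object-score"] >= min_score:
                hits.append((frame["frame-no"], ann["object-name"], ann["object-score"]))
    return hits


metadata = json.loads(SAMPLE)
print(find_objects(metadata, min_score=0.25))
```

Raising `min_score` narrows the result to more confident detections; the right cutoff depends on the project.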

    {
      "detection-annotations": [
        {
          "frame-no": 0,
          "annotations": [
            {
              "top": 390,
              "left": 201,
              "width": 32,
              "height": 32,
              "object-name": "cell phone",
              "object-score": 0.2604694366455078
            }
          ]
        }
      ]
    }

Benefits of Our Solution

5x Faster Processing

Only key frames are analyzed, not every frame of the video. Processing time is up to 30x shorter than with traditional analysis. On average, the ratio of processing time to video duration is 1:5.

Automatic Stop Triggers

The analysis stops as soon as a trigger is activated. You receive the answer immediately, without waiting for the whole video to be processed.
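
The early-exit idea can be illustrated with a short sketch. It is not the service's implementation: `first_trigger`, `frame_stream`, and the `min_score` parameter are hypothetical names, and the frame records simply reuse the field names from the metadata sample on this page.

```python
def first_trigger(frames, trigger_names, min_score=0.5):
    """Scan frames lazily and return the first matching detection, or None."""
    for frame in frames:
        for ann in frame["annotations"]:
            if ann["object-name"] in trigger_names and ann["object-score"] >= min_score:
                # Returning here means later frames are never requested.
                return frame["frame-no"], ann["object-name"], ann["object-score"]
    return None


def frame_stream():
    """Hypothetical stand-in for an analyzer that yields one key frame at a time."""
    yield {"frame-no": 0, "annotations": [{"object-name": "cell phone", "object-score": 0.26}]}
    yield {"frame-no": 1, "annotations": [{"object-name": "cell phone", "object-score": 0.91}]}
    yield {"frame-no": 2, "annotations": [{"object-name": "cell phone", "object-score": 0.95}]}


hit = first_trigger(frame_stream(), {"cell phone"}, min_score=0.8)
print(hit)  # (1, 'cell phone', 0.91)
```

Because `frame_stream` is a generator, frame 2 is never produced once frame 1 fires the trigger.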

Interactive Training and Functionality Improvement

Your project may require customized features, so the machine learning training set can be supplemented with your own images. We are open to suggestions for integrating new solutions.

Cost Optimization

The analysis is faster and skips unnecessary work once the required objects are detected, which reduces your costs. We also run on our own cloud infrastructure with up-to-date technologies.

Compare Service Features

| Feature | Gcore | AWS | Azure |
|---|---|---|---|
| Annotation | | | |
| Objects | | | |
| Content Moderation | | | |
| VOD Processing | | | |
| Live Processing | | | |
| Cost Reduction | | | |
| Connection of External Storage | | | |
| Quick Analysis | | | |
| Automatic Stop Triggers | | | |

Frequently Asked Questions

A full description, use cases, and the necessary documentation can be found in the knowledge base.

Our models are built on OpenCV, TensorFlow, and other libraries.

OpenCV is an open-source library for computer vision, image processing, and general-purpose numerical algorithms.

TensorFlow is an open-source machine learning library developed by Google for building and training neural networks, for example to find and classify images automatically with quality approaching human perception.

For each video, CV reports both the detected objects and the probability of each detection. The acceptable probability level differs from project to project: some can tolerate a slight hint of a specified object type, while others cannot allow it to appear at all. For example, a video may have the tag EXPOSED_BREAST_F with a score of 0.51.

To determine suitable values for your project, we recommend first collecting a set of videos (for example, from one day or one week). Then calculate the scores of the specified tags for each video. Finally, set thresholds based on the results, for example: normal (up to 30%), questionable (up to 50%), and censored (51% and higher).
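
The example thresholds can be expressed as a small function. This is a sketch under assumptions: the name `classify` and its parameters are hypothetical, scores are taken as fractions between 0 and 1 (as in the 0.51 example), and any score above the questionable limit is treated as censored.

```python
def classify(score, normal_max=0.30, questionable_max=0.50):
    """Map a tag score (0.0 to 1.0) to one of the three example categories."""
    if score <= normal_max:
        return "normal"
    if score <= questionable_max:
        return "questionable"
    return "censored"


# Hypothetical per-video scores for a single tag.
for score in (0.26, 0.45, 0.51):
    print(score, classify(score))
```

With these defaults, the score of 0.51 from the EXPOSED_BREAST_F example falls into the censored category. The limits are parameters so that each project can substitute the values it derives from its own videos.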

We operate with sets of images and videos that cover a large number of uses. However, the system sometimes needs additional training for particular cases.

We recommend generating a set of missed videos and sending them for analysis separately. In the next iteration, the system will also be trained on these videos.

Send images to the system for processing in the same way as videos. A picture is billed as a 1-second video.

The system takes into account the duration of each processed video in seconds. At the end of the month, the total number is sent to billing. The rate is calculated in minutes.

Let’s say you have uploaded three videos that last 10 seconds, 1 minute and 30 seconds, and 5 minutes and 10 seconds. The total at the end of the month will be 10 s + 90 s + 310 s = 410 seconds = 6 minutes and 50 seconds, so you are billed for 7 minutes. In your personal account, you can see a graph of the minutes consumed each day.
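
The same arithmetic as a short sketch. The function name `billed_minutes` is hypothetical, and rounding the monthly total up to the next whole minute is an assumption inferred from this example (6 minutes and 50 seconds billed as 7 minutes), not a documented rule.

```python
import math


def billed_minutes(durations_seconds):
    """Sum per-video durations in seconds and round the total up to whole minutes."""
    total_seconds = sum(durations_seconds)
    return math.ceil(total_seconds / 60)


# The three videos from the example: 10 s, 1 min 30 s, and 5 min 10 s.
videos = [10, 90, 310]
print(sum(videos))             # 410
print(billed_minutes(videos))  # 7
```

Since a picture is billed as a 1-second video, an image would add `1` to the list of durations.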

No. The streaming platform automatically deletes your videos and images after analysis and does not use your data to train basic models. Your video files do not leave your storage and are not sent to edge servers when the container is launched.

Contact us to get a personalized offer

Use cutting-edge streaming technologies without investing in infrastructure.

Try now for free

14 days for free

Try our solution without worrying about costs

Easy integration

Get up and running in as little as 10 minutes

Competent 24/7 technical support

Our experts will help with integration and support later on