Mili Leitner Cohen

Content Marketing Lead, AI Products

Mili leads content marketing for the Gcore AI product team. She helps define how AI products are positioned, launched, and communicated globally. With more than a decade of experience in content and growth strategy, she makes complex innovation understandable and engaging for real audiences.

Three partnership announcements. One week. Twelve years in the making. Last week, as Gcore turned 12, we launched a new feature in partnership with NVIDIA and announced that Microsoft selected Gcore to join its elite group of global CDN part

We’ve expanded our AI inference Application Catalog with three new state-of-the-art models, covering massively multilingual translation, efficient agentic workflows, and high-end reasoning. All models are live today via Everywhere Inference

We’ve expanded our Application Catalog with a new set of high-performance models across embeddings, text-to-speech, multimodal LLMs, and safety. All models are live today via Everywhere Inference and Everywhere AI, and are ready to deploy i

For enterprises, telcos, and CSPs, AI adoption sounds promising…until you start measuring impact. Most projects stall or even fail before ROI starts to appear. ML engineers lose momentum setting up clusters. Infrastructure teams battle to b

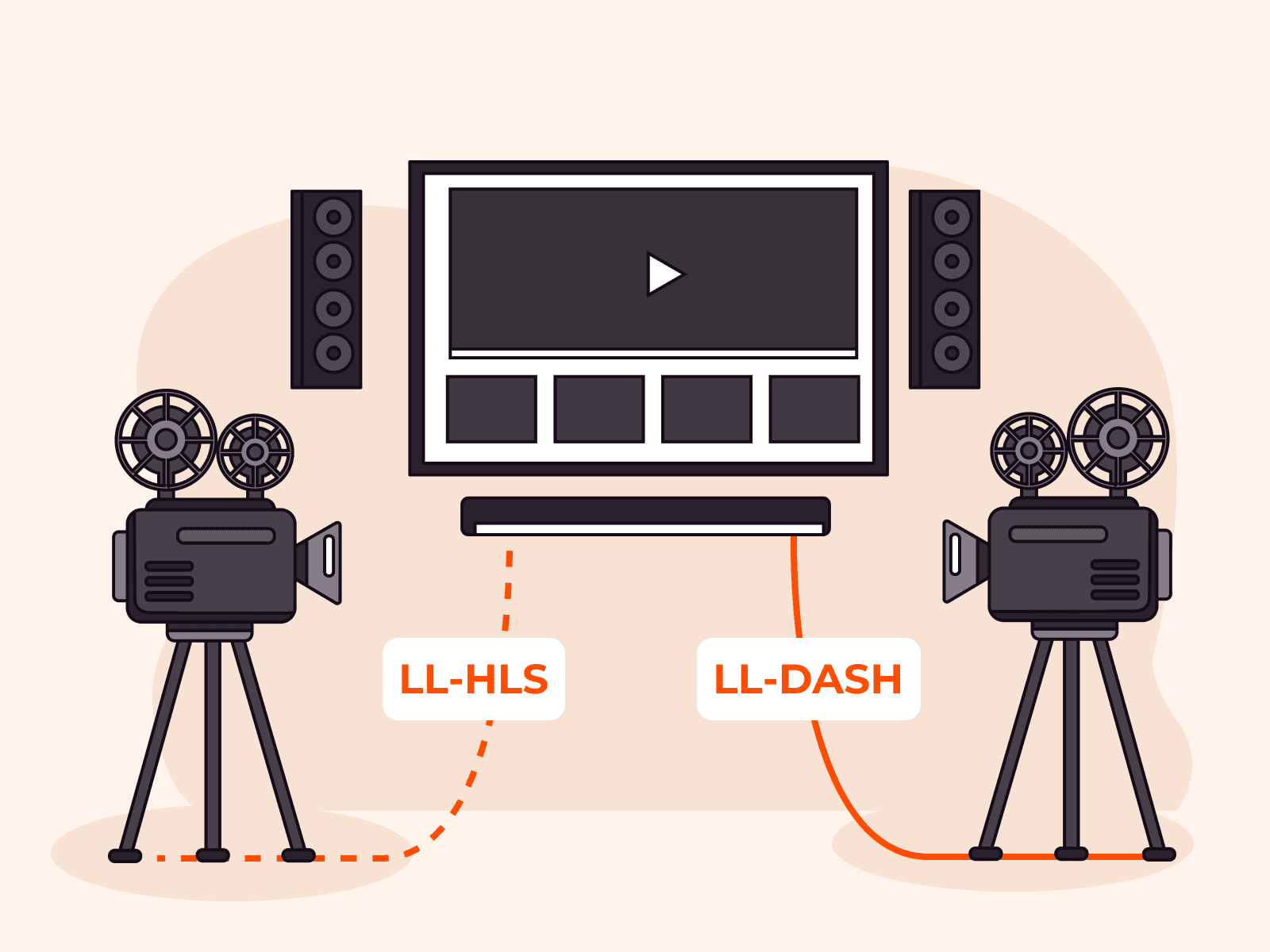

Viewers in sports, gaming, and interactive events expect real-time, low-latency streaming experiences. To deliver this, the industry has rallied around two powerful protocols: Low-Latency HLS (LL-HLS) and Low-Latency DASH (LL-DASH). While th

Related articles

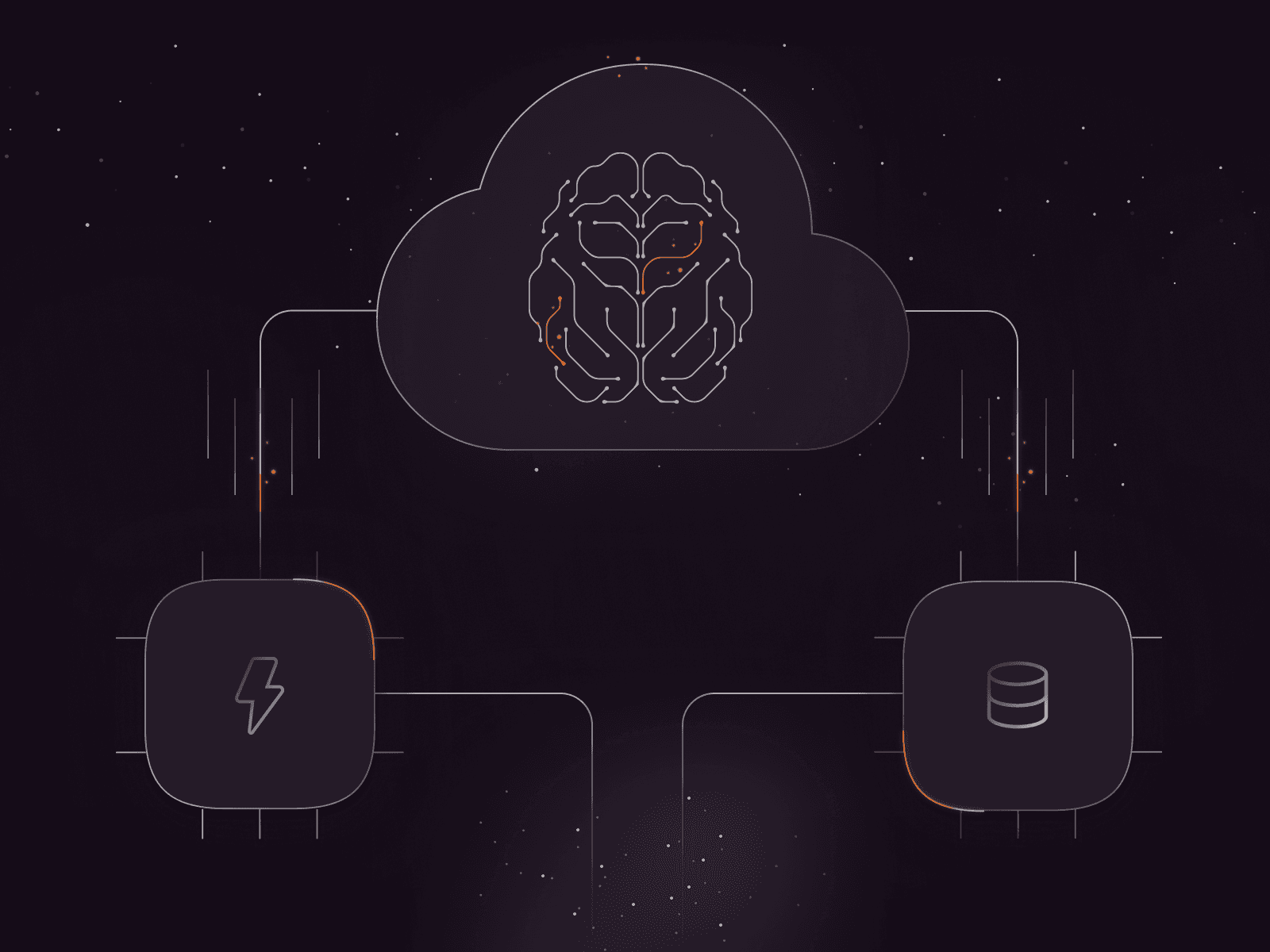

Imagine if you could click a button and suddenly your GPUs increase their throughput by 6x. Or reduce latency by 2x. Or route inference requests seamlessly across different GPU types. That's the experience we're bringing to our inference cus

HLS/DASH streaming via CDN with ~3 seconds latency glass-to-glass. LL-HLS and LL-DASH are well-documented standards, but delivering them reliably at scale is far from trivial. The challenge is not in understanding the protocols; it is in engin
