This article was originally published on The New Stack. It’s written by Dmitrii Bubnov, a DevSecOps engineer at Gcore with 14 years of experience in IT.

Role-based access control (RBAC) is the default access control approach in Kubernetes. This model categorizes permissions using specific verbs that define the allowed interactions with resources. Within this system, three lesser-known permissions (escalate, bind, and impersonate) can override existing role limitations, grant unauthorized access to restricted areas, expose confidential data, or even allow complete control over a cluster. This article explains these potent permissions, what they do, and how to mitigate the risks associated with them.

A Quick Reminder about RBAC Roles and Verbs

In this article, I assume you are already familiar with the key concepts of Kubernetes RBAC. If not, please refer to Kubernetes’ own documentation.

However, we do need to briefly recall one concept directly related to this article: the role. A Role describes access rights to K8s resources within a specific namespace, that is, which resources can be accessed and which operations are allowed on them (a ClusterRole is the cluster-wide equivalent). A role consists of a list of rules, and each rule lists verbs: the operations allowed on the rule's resources.
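The following is a minimal Python sketch of how a request could be matched against a role's rules, to make the rule structure concrete. It is a simplified model, not the API server's code. The PolicyRule class and the rule_allows and role_allows helpers are hypothetical names. Subresources, non-resource URLs, and ClusterRole aggregation are ignored.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PolicyRule:
    """Simplified model of one entry in a Role's rules list."""
    api_groups: tuple
    resources: tuple
    verbs: tuple
    resource_names: tuple = ()  # empty means "every object of these resources"

def _matches(values, wanted):
    # "*" in a rule field matches any value
    return "*" in values or wanted in values

def rule_allows(rule, api_group, resource, verb, name=None):
    """Return True if `rule` permits `verb` on `resource` in `api_group`."""
    if not (_matches(rule.api_groups, api_group)
            and _matches(rule.resources, resource)
            and _matches(rule.verbs, verb)):
        return False
    return not rule.resource_names or name in rule.resource_names

def role_allows(rules, api_group, resource, verb, name=None):
    # RBAC is purely additive: there are no deny rules, so one match is enough.
    return any(rule_allows(r, api_group, resource, verb, name) for r in rules)

# A pod-reader role like the documentation example, expressed in this model
pod_reader = [PolicyRule(api_groups=("",), resources=("pods",), verbs=("get", "watch", "list"))]
print(role_allows(pod_reader, "", "pods", "list"))    # True
print(role_allows(pod_reader, "", "pods", "delete"))  # False
```

Because RBAC has no deny rules, a role's effective permissions are simply the union of its rules.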

Here is an example of a role from the K8s documentation that grants read access to pods:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      namespace: default
      name: pod-reader
    rules:
    - apiGroups: [""] # "" points to the core API group
      resources: ["pods"]
      verbs: ["get", "watch", "list"]

Verbs like get, watch, and list are commonly used. However, more intriguing ones also exist.

Three Lesser-Known Kubernetes RBAC Permissions

For more granular and complex permissions management, the K8s RBAC has the following verbs:

- escalate: Allows users to create and edit roles that contain permissions they don't hold themselves.
- bind: Allows users to create and edit role bindings and cluster role bindings that grant permissions they haven't been assigned.
- impersonate: Allows users to impersonate other users, groups, or service accounts and gain their privileges in the cluster. Critical data can be accessed using this verb.

Below, we'll look at each of them in more detail. But first, let's create a test namespace named rbac:

    kubectl create ns rbac

Then, create a test service account (SA) named privesc:

    kubectl -n rbac create sa privesc

We'll use them throughout the rest of this tutorial.

Escalate

By default, the Kubernetes RBAC API doesn't allow users to escalate privileges by simply editing a role or role binding. This restriction is enforced at the API level, so it applies even if the RBAC authorizer is disabled. The only exception is a user who has been granted the escalate verb on roles.

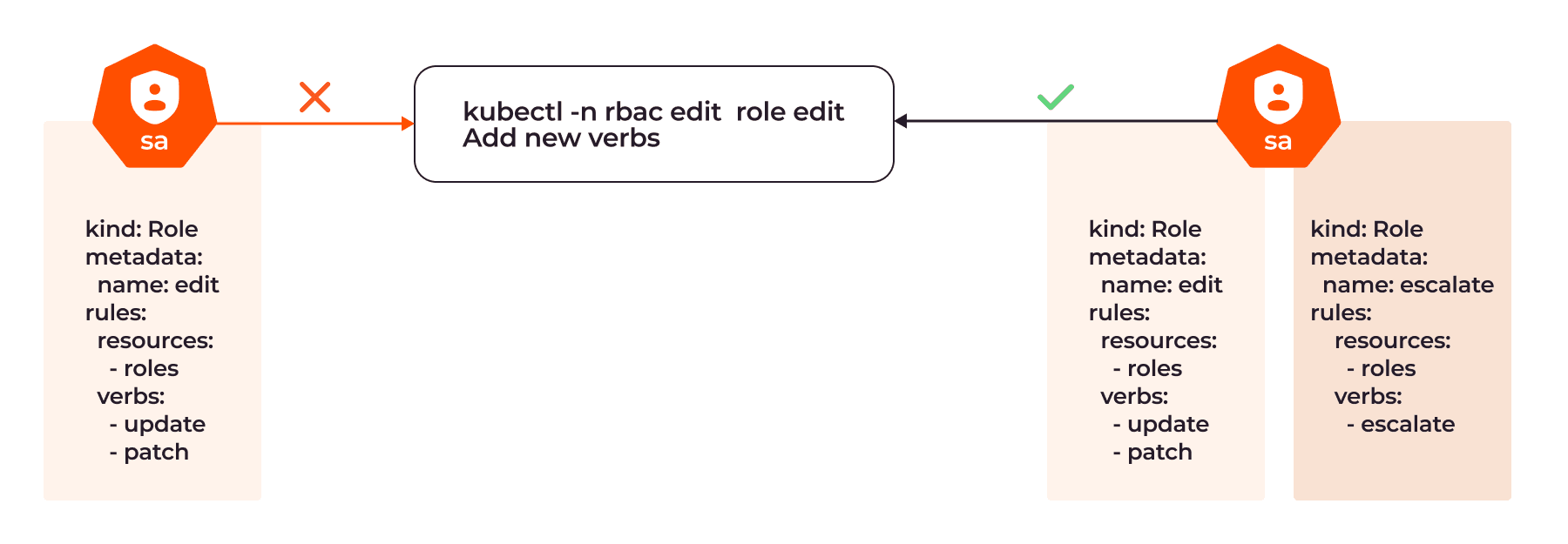

In the image above, the SA with only update and patch permissions can't add a new verb to the role. Once it is also bound to a role with the escalate verb, it can:

Let’s see how it works in more detail.

Create a role that allows read-only access to pods and roles in this namespace:

    kubectl -n rbac create role view --verb=list,watch,get --resource=role,pod

Bind this role to the SA privesc:

    kubectl -n rbac create rolebinding view --role=view --serviceaccount=rbac:privesc

Check if the role can be updated:

    kubectl auth can-i update role -n rbac --as=system:serviceaccount:rbac:privesc
    no

As we can see, the SA can read roles but can't edit them.

Create a new role that allows role editing in the rbac namespace:

    kubectl -n rbac create role edit --verb=update,patch --resource=role

Bind this new role to the SA privesc:

    kubectl -n rbac create rolebinding edit --role=edit --serviceaccount=rbac:privesc

Check if the role can be updated:

    kubectl auth can-i update role -n rbac --as=system:serviceaccount:rbac:privesc
    yes

Check if the role can be deleted:

    kubectl auth can-i delete role -n rbac --as=system:serviceaccount:rbac:privesc
    no

The SA can now edit roles but can't delete them.

For the sake of experimental accuracy, let's check the SA's capabilities by authenticating as the SA itself. To do this, we'll use a JWT (JSON Web Token) issued for it:

    TOKEN=$(kubectl -n rbac create token privesc --duration=8h)

We should remove the old authentication parameters from a copy of the config. Kubernetes checks the user's client certificate first and won't check the token if it already accepts the certificate.

    cp ~/.kube/config ~/.kube/rbac.conf
    export KUBECONFIG=~/.kube/rbac.conf
    kubectl config delete-user kubernetes-admin
    kubectl config set-credentials privesc --token=$TOKEN
    kubectl config set-context --current --user=privesc

The edit role shows that we can edit other roles:

    kubectl -n rbac get role edit -oyaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: edit
      namespace: rbac
    rules:
    - apiGroups:
      - rbac.authorization.k8s.io
      resources:
      - roles
      verbs:
      - update
      - patch

Let's try to add a new verb, list, which we have already used in the view role:

    kubectl -n rbac edit role edit
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: edit
      namespace: rbac
    rules:
    - apiGroups:
      - rbac.authorization.k8s.io
      resources:
      - roles
      verbs:
      - update
      - patch
      - list # the new verb we added

Success.

Now, let's try to add a new verb, delete, which we haven't used in other roles:

    kubectl -n rbac edit role edit
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: edit
      namespace: rbac
    rules:
    - apiGroups:
      - rbac.authorization.k8s.io
      resources:
      - roles
      verbs:
      - update
      - patch
      - delete # trying to add a new verb

    error: roles.rbac.authorization.k8s.io "edit" could not be patched: roles.rbac.authorization.k8s.io "edit" is forbidden: user "system:serviceaccount:rbac:privesc" (groups=["system:serviceaccounts" "system:serviceaccounts:rbac" "system:authenticated"]) is attempting to grant RBAC permissions not currently held:{APIGroups:["rbac.authorization.k8s.io"], Resources:["roles"], Verbs:["delete"]}

This confirms that Kubernetes doesn't let a user or service account add a permission to a role unless it already holds that permission through the roles it is bound to.

Let’s extend the privesc SA permissions. We’ll do this by using the admin config and adding a new role with the escalate verb:

    KUBECONFIG=~/.kube/config kubectl -n rbac create role escalate --verb=escalate --resource=role

Now, let's bind the privesc SA to the new role:

    KUBECONFIG=~/.kube/config kubectl -n rbac create rolebinding escalate --role=escalate --serviceaccount=rbac:privesc

Check again if we can add a new verb to the role:

    kubectl -n rbac edit role edit
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: edit
      namespace: rbac
    rules:
    - apiGroups:
      - rbac.authorization.k8s.io
      resources:
      - roles
      verbs:
      - update
      - patch
      - delete # the new verb we added

    role.rbac.authorization.k8s.io/edit edited

Now it works. By editing a role it is bound to, the SA has escalated its own privileges. The escalate verb can therefore effectively grant admin privileges, up to namespace admin or even cluster admin.
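The check the API server applied in this walkthrough can be modeled in a few lines. This is a simplified Python sketch, not Kubernetes' implementation, and the helper names are hypothetical. Scope (namespace versus cluster) is ignored. The flag has_escalate_on_role stands in for the separate authorization check of the escalate verb on the role being written. The held rules mirror the view and edit roles bound to privesc above.

```python
from dataclasses import dataclass

RBAC_GROUP = "rbac.authorization.k8s.io"

@dataclass(frozen=True)
class PolicyRule:
    api_groups: tuple
    resources: tuple
    verbs: tuple

def allows(rules, group, resource, verb):
    """True if any rule grants `verb` on `resource` in API group `group` ("*" matches anything)."""
    def hit(values, wanted):
        return "*" in values or wanted in values
    return any(
        hit(r.api_groups, group) and hit(r.resources, resource) and hit(r.verbs, verb)
        for r in rules
    )

def not_currently_held(held, requested):
    """Return the (group, resource, verb) triples in `requested` that `held` does not cover."""
    return [
        (g, res, v)
        for rule in requested
        for g in rule.api_groups
        for res in rule.resources
        for v in rule.verbs
        if not allows(held, g, res, v)
    ]

def may_write_role(held, new_rules, has_escalate_on_role):
    """Simplified escalation-prevention check for creating or updating a Role.

    Returns (allowed, missing). Assumes the caller already passed the normal
    authorization check for update/patch on the role itself.
    """
    if has_escalate_on_role:
        return True, []
    missing = not_currently_held(held, new_rules)
    return not missing, missing

held = [
    PolicyRule(("",), ("pods",), ("list", "watch", "get")),           # role "view"
    PolicyRule((RBAC_GROUP,), ("roles",), ("list", "watch", "get")),  # role "view"
    PolicyRule((RBAC_GROUP,), ("roles",), ("update", "patch")),       # role "edit"
]
add_list = [PolicyRule((RBAC_GROUP,), ("roles",), ("update", "patch", "list"))]
add_delete = [PolicyRule((RBAC_GROUP,), ("roles",), ("update", "patch", "delete"))]

print(may_write_role(held, add_list, False))    # (True, [])
print(may_write_role(held, add_delete, False))  # (False, [('rbac.authorization.k8s.io', 'roles', 'delete')])
print(may_write_role(held, add_delete, True))   # (True, [])
```

The missing triple corresponds to the "attempting to grant RBAC permissions not currently held" part of the error shown earlier.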

Bind

The bind verb is the counterpart of escalate for bindings. Escalate lets a user write permissions they don't hold into a Role or ClusterRole. Bind lets a user create or edit a RoleBinding or ClusterRoleBinding that grants a role whose permissions they don't hold.

In the image below, the SA's role allows the update, patch, and create verbs on role bindings. Even so, the SA can't bind a role containing delete until we create a new role with the bind verb.

Now, let’s take a closer look at how this works.

Let's switch back to the admin kubeconfig:

    export KUBECONFIG=~/.kube/config

Remove old roles and bindings:

    kubectl -n rbac delete rolebinding view edit escalate
    kubectl -n rbac delete role view edit escalate

Allow the SA to view and edit role bindings and pods in the namespace, and to view roles:

    kubectl -n rbac create role view --verb=list,watch,get --resource=role,rolebinding,pod
    kubectl -n rbac create rolebinding view --role=view --serviceaccount=rbac:privesc
    kubectl -n rbac create role edit --verb=update,patch,create --resource=rolebinding,pod
    kubectl -n rbac create rolebinding edit --role=edit --serviceaccount=rbac:privesc

Create separate roles for working with pods, but don't bind them yet:

    kubectl -n rbac create role pod-view-edit --verb=get,list,watch,update,patch --resource=pod
    kubectl -n rbac create role delete-pod --verb=delete --resource=pod

Switch the kubeconfig to the SA privesc and try to create a role binding:

    export KUBECONFIG=~/.kube/rbac.conf
    kubectl -n rbac create rolebinding pod-view-edit --role=pod-view-edit --serviceaccount=rbac:privesc
    rolebinding.rbac.authorization.k8s.io/pod-view-edit created

The new role has been successfully bound to the SA. Note that the pod-view-edit role contains only verbs and resources already granted to the SA through the view and edit role bindings.

Now, let's try to bind a role with a new verb, delete, which is missing from the roles bound to the SA:

    kubectl -n rbac create rolebinding delete-pod --role=delete-pod --serviceaccount=rbac:privesc
    error: failed to create rolebinding: rolebindings.rbac.authorization.k8s.io "delete-pod" is forbidden: user "system:serviceaccount:rbac:privesc" (groups=["system:serviceaccounts" "system:serviceaccounts:rbac" "system:authenticated"]) is attempting to grant RBAC permissions not currently held:{APIGroups:[""], Resources:["pods"], Verbs:["delete"]}

Kubernetes doesn't allow this, even though we have permission to edit and create role bindings. But we can fix that with the bind verb. Let's do so using the admin config:

    KUBECONFIG=~/.kube/config kubectl -n rbac create role bind --verb=bind --resource=role
    role.rbac.authorization.k8s.io/bind created
    KUBECONFIG=~/.kube/config kubectl -n rbac create rolebinding bind --role=bind --serviceaccount=rbac:privesc
    rolebinding.rbac.authorization.k8s.io/bind created

Try once more to create a role binding with the new delete verb:

    kubectl -n rbac create rolebinding delete-pod --role=delete-pod --serviceaccount=rbac:privesc
    rolebinding.rbac.authorization.k8s.io/delete-pod created

Now it works. Using the bind verb, the SA can bind any role in the rbac namespace to itself or to any other user.
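The two ways a role binding can succeed, as seen above, can be sketched in Python. This is a simplified model with hypothetical names, not Kubernetes' code. It ignores namespaces and ClusterRole references. It also assumes the requester has already passed the normal create check on rolebindings. The last rule variant shows how resourceNames narrows bind, which is discussed later in this article.

```python
from dataclasses import dataclass

RBAC_GROUP = "rbac.authorization.k8s.io"

@dataclass(frozen=True)
class PolicyRule:
    api_groups: tuple
    resources: tuple
    verbs: tuple
    resource_names: tuple = ()

def allows(rules, group, resource, verb, name=None):
    """True if some rule grants the request; empty resource_names means any name."""
    def hit(values, wanted):
        return "*" in values or wanted in values
    return any(
        hit(r.api_groups, group) and hit(r.resources, resource) and hit(r.verbs, verb)
        and (not r.resource_names or name in r.resource_names)
        for r in rules
    )

def may_bind(held, role_name, role_rules):
    """Simplified check for a RoleBinding that references the Role `role_name`."""
    # Path 1: explicit bind permission on this role (possibly limited by resourceNames).
    if allows(held, RBAC_GROUP, "roles", "bind", role_name):
        return True
    # Path 2: the requester already holds every permission the role grants.
    return all(
        allows(held, g, res, v)
        for rule in role_rules
        for g in rule.api_groups
        for res in rule.resources
        for v in rule.verbs
    )

# privesc's permissions from the view and edit roles in this section
held = [
    PolicyRule((RBAC_GROUP,), ("roles", "rolebindings"), ("list", "watch", "get")),
    PolicyRule(("",), ("pods",), ("list", "watch", "get")),
    PolicyRule((RBAC_GROUP,), ("rolebindings",), ("update", "patch", "create")),
    PolicyRule(("",), ("pods",), ("update", "patch", "create")),
]
pod_view_edit = [PolicyRule(("",), ("pods",), ("get", "list", "watch", "update", "patch"))]
delete_pod = [PolicyRule(("",), ("pods",), ("delete",))]

print(may_bind(held, "pod-view-edit", pod_view_edit))  # True: nothing new is granted
print(may_bind(held, "delete-pod", delete_pod))        # False: delete is not held
bind_any = held + [PolicyRule((RBAC_GROUP,), ("roles",), ("bind",))]
print(may_bind(bind_any, "delete-pod", delete_pod))    # True: bind on any role
bind_scoped = held + [PolicyRule((RBAC_GROUP,), ("roles",), ("bind",), ("pod-view-edit",))]
print(may_bind(bind_scoped, "delete-pod", delete_pod)) # False: bind limited by resourceNames
```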

Impersonate

The impersonate verb in K8s is like sudo in Linux. Users with impersonate access can act as other users and run commands on their behalf. kubectl has the --as, --as-group, and --as-uid options, which run commands as a different user, group, or UID (unique identifier), respectively. A user with impersonation permissions gets the privileges of whoever they are allowed to impersonate. Impersonating a namespace admin makes them a namespace admin. If a service account with cluster-admin rights exists in the namespace, they can even become the cluster admin.
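Under the hood, kubectl sends these options to the API server as HTTP headers:

- --as becomes Impersonate-User.
- Each --as-group becomes an Impersonate-Group header.
- --as-uid becomes Impersonate-Uid.

The Python sketch below only builds that header list, and the function name is hypothetical. It illustrates which identity a request claims; it does not send anything.

```python
def impersonation_headers(user, groups=(), uid=None):
    """Return the (name, value) HTTP header pairs for an impersonated request.

    Mirrors kubectl's --as, --as-group (repeatable), and --as-uid flags.
    A list of pairs is used because Impersonate-Group may appear several times.
    """
    if not user:
        # Group and UID impersonation are only valid together with a user.
        raise ValueError("Impersonate-User is required")
    headers = [("Impersonate-User", user)]
    headers.extend(("Impersonate-Group", group) for group in groups)
    if uid is not None:
        headers.append(("Impersonate-Uid", uid))
    return headers

# Roughly what kubectl sends for: kubectl --as=system:serviceaccount:rbac:privesc get pod
print(impersonation_headers("system:serviceaccount:rbac:privesc"))
```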

Impersonation is also helpful for checking the RBAC permissions delegated to a user. An admin can run a command that follows this template and check whether the authorization works as designed:

    kubectl auth can-i --as=$USERNAME -n $NAMESPACE $VERB $RESOURCE
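To apply that template to several permissions at once, a small script can generate the can-i commands for a list of verb and resource pairs. This Python sketch only builds and prints the command lines. The names can_i_commands and RISKY_CHECKS are hypothetical. Passing each argv list to subprocess.run would execute the commands against the current kubeconfig.

```python
import shlex

# Hypothetical list of (verb, resource) pairs worth checking for a given subject.
RISKY_CHECKS = [
    ("escalate", "roles"),
    ("bind", "roles"),
    ("impersonate", "serviceaccounts"),
    ("delete", "pods"),
]

def can_i_commands(username, namespace, checks):
    """Build one `kubectl auth can-i` argv list per (verb, resource) pair."""
    return [
        ["kubectl", "auth", "can-i", f"--as={username}", "-n", namespace, verb, resource]
        for verb, resource in checks
    ]

for argv in can_i_commands("system:serviceaccount:rbac:privesc", "rbac", RISKY_CHECKS):
    # shlex.join (Python 3.8+) renders the argv as a copy-pasteable shell command.
    print(shlex.join(argv))
```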

In our example, a service account with no permissions of its own can't get pods in the rbac namespace by running kubectl -n rbac get pod. But if it is allowed to impersonate the privesc SA, it can make the same request as privesc, which does have that permission:

    kubectl auth can-i get pod -n rbac --as=system:serviceaccount:rbac:privesc
    yes

Let’s create a new service account, impersonator, in the rbac namespace; this SA will have no permissions:

    KUBECONFIG=~/.kube/config kubectl -n rbac create sa impersonator
    serviceaccount/impersonator created

Now, create a role with the impersonate verb and a role binding:

    KUBECONFIG=~/.kube/config kubectl -n rbac create role impersonate --resource=serviceaccounts --verb=impersonate --resource-name=privesc
    role.rbac.authorization.k8s.io/impersonate created

(Note the --resource-name parameter in the command above: it only allows impersonation as the privesc SA.)

    KUBECONFIG=~/.kube/config kubectl -n rbac create rolebinding impersonator --role=impersonate --serviceaccount=rbac:impersonator
    rolebinding.rbac.authorization.k8s.io/impersonator created

Create a new context:

    TOKEN=$(KUBECONFIG=~/.kube/config kubectl -n rbac create token impersonator --duration=8h)
    kubectl config set-credentials impersonate --token=$TOKEN
    User "impersonate" set.
    kubectl config set-context impersonate@kubernetes --user=impersonate --cluster=kubernetes
    Context "impersonate@kubernetes" created.
    kubectl config use-context impersonate@kubernetes
    Switched to context "impersonate@kubernetes".

Check the permissions:

    kubectl auth can-i --list -n rbac
    Resources                                       Non-Resource URLs   Resource Names   Verbs
    selfsubjectaccessreviews.authorization.k8s.io   []                  []               [create]
    selfsubjectrulesreviews.authorization.k8s.io    []                  []               [create]
    ...
    serviceaccounts                                 []                  [privesc]        [impersonate]

Apart from the default self-review permissions, the only permission is impersonate, as specified in the role. But when the impersonator SA impersonates the privesc SA, it gets the same permissions that the privesc SA has:

    kubectl auth can-i --list -n rbac --as=system:serviceaccount:rbac:privesc
    Resources                                       Non-Resource URLs   Resource Names   Verbs
    roles.rbac.authorization.k8s.io                 []                  [edit]           [bind escalate]
    selfsubjectaccessreviews.authorization.k8s.io   []                  []               [create]
    selfsubjectrulesreviews.authorization.k8s.io    []                  []               [create]
    pods                                            []                  []               [get list watch update patch delete create]
    ...
    rolebindings.rbac.authorization.k8s.io          []                  []               [list watch get update patch create bind escalate]
    roles.rbac.authorization.k8s.io                 []                  []               [list watch get update patch create bind escalate]
    configmaps                                      []                  []               [update patch create delete]
    secrets                                         []                  []               [update patch create delete]

Thus, the impersonator SA keeps all of its own privileges and can also use all the privileges of the SA it impersonates, including those a namespace admin has.
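The decision the API server made for the impersonator SA can be sketched as follows. For a service account username of the form system:serviceaccount:<namespace>:<name>, the impersonate verb is checked on the serviceaccounts resource in that namespace, with the SA name as the resource name. That is why --resource-name=privesc limits the impersonator to privesc. This is a simplified Python model with hypothetical names. It leaves out cluster-wide grants from ClusterRoleBindings and the impersonation of plain users and groups.

```python
from dataclasses import dataclass

SA_PREFIX = "system:serviceaccount:"

@dataclass(frozen=True)
class PolicyRule:
    api_groups: tuple
    resources: tuple
    verbs: tuple
    resource_names: tuple = ()

def _hit(values, wanted):
    # "*" in a rule field matches any value
    return "*" in values or wanted in values

def may_impersonate_sa(rules_by_namespace, username):
    """Simplified check: may the caller impersonate the service account `username`?

    rules_by_namespace maps a namespace to the rules the caller holds there
    (for example, through a Role and RoleBinding).
    """
    if not username.startswith(SA_PREFIX):
        return False  # impersonating plain users or groups is out of scope here
    namespace, _, name = username[len(SA_PREFIX):].partition(":")
    return any(
        _hit(rule.api_groups, "")  # serviceaccounts live in the core API group
        and _hit(rule.resources, "serviceaccounts")
        and _hit(rule.verbs, "impersonate")
        and (not rule.resource_names or name in rule.resource_names)
        for rule in rules_by_namespace.get(namespace, ())
    )

# The impersonate role from the walkthrough: only privesc may be impersonated.
impersonator_rules = {
    "rbac": [PolicyRule(("",), ("serviceaccounts",), ("impersonate",), ("privesc",))],
}
print(may_impersonate_sa(impersonator_rules, "system:serviceaccount:rbac:privesc"))       # True
print(may_impersonate_sa(impersonator_rules, "system:serviceaccount:rbac:impersonator"))  # False
```

Dropping resource_names from the rule would let the impersonator act as any service account in rbac, including a highly privileged one.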

How to Mitigate Potential Threats

The escalate, bind, and impersonate verbs can be used to create flexible permissions, resulting in granular management of access to K8s infrastructure. But they also open the door to malicious use, since, in some cases, they enable a user to access crucial infrastructure components with admin privileges.

Three practices can help you mitigate the potential dangers of misuse or malicious use of these verbs:

- Regularly check RBAC manifests

- Use the resourceNames field in Role and ClusterRole manifests

- Use external tools to monitor roles

Let’s look at each in turn.

Regularly Check RBAC Manifests

To prevent unauthorized access and RBAC misconfiguration, periodically check your cluster RBAC manifests:

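    # ClusterRoles (the "*" wildcard also grants escalate, bind, and impersonate)
    kubectl get clusterrole -o json | jq -r '.items[] | select([.rules[]?.verbs[]?] | any(. == "escalate" or . == "bind" or . == "impersonate" or . == "*")) | .metadata.name'
    # Namespaced Roles, printed as namespace/name
    kubectl get role -A -o json | jq -r '.items[] | select([.rules[]?.verbs[]?] | any(. == "escalate" or . == "bind" or . == "impersonate" or . == "*")) | .metadata.namespace + "/" + .metadata.name'

Use the resourceNames Field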

To restrict the use of escalate, bind, impersonate, or any other verb, configure the resourceNames field in Role and ClusterRole manifests. There, you can (and should) list the names of the specific resources the verb may be used on.

Here is an example of a ClusterRole that allows creating a ClusterRoleBinding only when its roleRef is the edit or view ClusterRole:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: role-grantor
    rules:
    - apiGroups: ["rbac.authorization.k8s.io"]
      resources: ["clusterroles"]
      verbs: ["bind"]
      resourceNames: ["edit","view"]

You can do the same with escalate and impersonate.

Note the difference between the two verbs. With bind, an admin defines the permissions in a role in advance, and users can only bind roles listed in resourceNames, to themselves or to others. With escalate, users can write arbitrary permissions into a role and make themselves admins of a namespace or cluster. So bind limits users to predefined roles, while escalate gives them far more freedom. Keep this in mind if you need to grant these permissions.
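Combining the audit with the resourceNames advice, a small script can list every rule that grants a risky verb and show whether resourceNames scopes it. This Python sketch is an illustration, not a tool from this article. It assumes the parsed JSON output of kubectl get roles,clusterroles -A -o json. The risky_rules and report names are hypothetical.

```python
RISKY_VERBS = {"escalate", "bind", "impersonate", "*"}  # "*" implies all three

def risky_rules(role_list):
    """Yield (kind, namespace/name, risky verbs, resourceNames) for each risky rule.

    role_list is the parsed JSON of `kubectl get roles,clusterroles -A -o json`.
    """
    for item in role_list.get("items", []):
        meta = item.get("metadata", {})
        # ClusterRoles have no namespace, so only their name is used.
        ident = "/".join(part for part in (meta.get("namespace"), meta.get("name")) if part)
        for rule in item.get("rules") or []:  # aggregated ClusterRoles may have no rules
            verbs = RISKY_VERBS.intersection(rule.get("verbs") or [])
            if verbs:
                yield item.get("kind"), ident, sorted(verbs), rule.get("resourceNames") or []

def report(role_list):
    """Format risky rules as tab-separated lines; unscoped rules are flagged."""
    lines = []
    for kind, ident, verbs, names in risky_rules(role_list):
        scope = ",".join(names) if names else "ANY (no resourceNames)"
        lines.append(f"{kind}\t{ident}\t{','.join(verbs)}\t{scope}")
    return lines

# Example input resembling the escalate role created earlier in this article
sample = {"items": [
    {"kind": "Role", "metadata": {"namespace": "rbac", "name": "escalate"},
     "rules": [{"apiGroups": ["rbac.authorization.k8s.io"], "resources": ["roles"],
                "verbs": ["escalate"]}]},
]}
print("\n".join(report(sample)))
```

A thin wrapper script could call report(json.load(sys.stdin)) and receive kubectl's JSON output through a pipe. Rules flagged as ANY are the first candidates for review.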

Use External Tools to Monitor Roles

Consider using automated tools, such as Falco or Tetragon, that detect the creation or modification of roles with suspicious content.

You can also redirect Kubernetes audit logs to a log management system such as Gcore Managed Logging, which is useful for analyzing and parsing K8s logs. To prevent accidental resource deletion, create a separate service account with the delete verb and allow users to impersonate only that service account. This follows the principle of least privilege. To simplify this process, you can use the kubectl plugin kubectl-sudo.

At Gcore, we use these methods to make our Managed Kubernetes service more secure. We recommend that all our customers do the same. Using managed services doesn't guarantee that your services are 100% secure by default, but at Gcore we do everything possible to ensure our customers' protection, including encouraging RBAC best practices.

Conclusion

The escalate, bind, and impersonate verbs allow admins to manage access to K8s infrastructure flexibly and let users escalate their privileges. These are powerful tools that, if abused, can cause significant damage to a K8s cluster. Carefully review any use of these verbs and ensure that the least privilege rule is followed: Users must have the minimum privileges necessary to operate, no more.

Looking for a simple way to manage your K8s clusters? Try Gcore Managed Kubernetes. We offer Virtual Machines and Bare Metal servers with GPU worker nodes to boost your AI/ML workloads. Prices for worker nodes are the same as for our Virtual Machines and Bare Metal servers. We provide free, production-grade cluster management with a 99.9% SLA for your peace of mind.
