Natural Language Processing | How NLP is Transforming Communication

- By Gcore

- September 20, 2023

Natural language processing (NLP) is a type of artificial intelligence that enables computers to understand and respond to human language in a manner that’s natural, intuitive, and useful. Read on to learn how NLP is transforming communication and revolutionizing the way we interact with technology, including applications and benefits of natural language processing and a detailed explanation of how it works.

What Is NLP?

Natural language processing (NLP) is a technology built on artificial intelligence algorithms that teaches computers human language. The goal is to understand, interpret, and respond to human language naturally, allowing humans to experience natural, conversation-like interactions with computers via written and speech-to-text queries. NLP uses complex algorithms to analyze words, sentences, and even the tone of what we say or write. This lets computers grasp the deeper meanings and nuances in our communication. The result is apps and devices that are easier and more intuitive to use, and ultimately more helpful.

Natural Language vs. Programming Languages

Natural language and programming languages are both ways of communicating with computers, so it’s important to understand the difference and their specific roles. Natural languages used for NLP—like English, German, or Mandarin Chinese—are full of nuance and can be interpreted in multiple ways. Programming languages, such as Java, C++, and Python, on the other hand, are designed to be absolutely precise and therefore don’t have nuance.

NLP serves as a bridge by enabling machines to understand human language just as they understand programming languages. This makes it possible for our complex thoughts and expressions to be understood by computers. Our interactions with technology are therefore enhanced, because computers can give nuanced outputs that are individualized for the user.

Applications of NLP and Their Benefits

NLP advancements provide tailored solutions with practical advantages that enhance daily life and assist various industries.

Human-Computer Interaction

NLP enhances communication between humans and computers. Voice recognition algorithms, for instance, allow drivers to control car features safely hands-free. Virtual assistants like Siri and Alexa make everyday life easier by handling tasks such as answering questions and controlling smart home devices.

Document Management

In critical fields like law and medicine, NLP’s speech-to-text capabilities improve the accuracy and efficiency of documentation. Letting users dictate instead of type, and using contextual information to resolve ambiguous words, reduces the margin for error while improving speed.

Information Summarization

NLP algorithms can distill complex texts into summaries by employing keyword extraction and sentence ranking. This is invaluable for students and professionals alike, who need to understand intricate topics or documents quickly.
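As a rough illustration of keyword extraction combined with sentence ranking, the sketch below scores each sentence by the average frequency of its non-stopword terms and keeps the top-scoring ones. The stopword list, the naive sentence splitter, and the function names are assumptions made for this example; production summarizers use far more sophisticated models.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption, not a standard resource).
STOPWORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in", "it", "that", "for", "on", "with"}

def split_sentences(text):
    # Naive splitter: break after ., !, or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def content_words(text):
    # Lowercase words with stopwords removed.
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]

def summarize(text, max_sentences=1):
    sentences = split_sentences(text)
    # Keyword extraction: term frequency over the whole text.
    freq = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        # Average keyword frequency, so long sentences are not favored automatically.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    # Sentence ranking: keep the highest-scoring sentences.
    top = sorted(sentences, key=score, reverse=True)[:max_sentences]
    # Return them in their original order so the summary still reads naturally.
    return [s for s in sentences if s in top]

text = ("Solar power is growing fast. "
        "Solar panels turn sunlight into power. "
        "My cat sleeps all day.")
print(summarize(text, max_sentences=2))
```

With this sample text, the two sentences about solar power outrank the unrelated one because they share the most frequent keywords.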

Business Analytics

From parsing customer reviews to analyzing call transcripts, NLP offers nuanced insights into public sentiment and customer needs. In the business landscape, NLP-based chatbots handle basic queries and gather data, which ultimately improves customer satisfaction through fast and accurate customer service and informs business strategies through the data gathered. Together, these two factors improve a business’s overall ability to respond to customer needs and wants.

Translation Services

Machine translation tools utilizing NLP provide context-aware translations, surpassing traditional word-for-word methods. They capture idioms and context, resulting in a more reliable translation. Traditional methods might render idioms as gibberish, which not only produces a nonsensical translation but also costs the user’s trust. NLP makes this problem far less common.

Content Generation and Classification

Models like ChatGPT can generate meaningful content swiftly, capturing the essence of events or data. Sentiment analysis sorts public opinion into categories, offering a nuanced understanding that goes beyond mere keyword frequency. This allows companies to make sense of social media chatter about an advertising campaign or new product, for example.

Automation in Customer Service

NLP-powered voice assistants in customer service can understand the complexity of user issues and direct them to the most appropriate human agent. This results in better service and greater efficiency compared to basic interactive voice response (IVR) systems. Customers are more likely to be matched successfully to a relevant agent, rather than having to start over when IVR fails to identify a particular keyword. This may have particular relevance for populations with accents or dialects, or non-native speakers who might be less likely to use predetermined keywords.

Deep Research

NLP can sift through extensive documents for relevance and context, saving time for professionals such as lawyers and physicians, while improving information accessibility for the public. For example, it can look for legal cases that offer a particular precedent to support an attorney’s case, allowing even a small legal practice with limited resources to conduct complex research more quickly and easily.

Emotional Understanding

NLP-enabled systems can pick up on the emotional undertones in text, enabling more personalized responses in customer service and marketing. For example, NLP can tell whether a customer service interaction should start with an apology to a frustrated customer.

Market and Talent Analysis

NLP can gauge public sentiment about industries or products, aiding in investment decisions and guiding corporate strategies. It also scans CVs with contextual awareness, providing a better job-employee match than simple keyword-based tools.

Educational Adaptivity

NLP can generate exam questions based on textbooks, making educational processes more responsive and efficient. Beyond simply asking for replications of the textbook content, NLP can create brand new questions that can be answered through synthesized knowledge of a textbook, or various specific sources from a curriculum.

How NLP Works

NLP works according to a four-stage process that builds upon the standard AI flow to enable precise understanding of text and of speech that has been converted to text.

Phase 1: Data Preprocessing

In the first phase, texts must be organized, structured, and simplified for analysis, by segmenting them into sentences and words, categorizing each word’s function in the sentence, and removing extra characters or irrelevant information. Think of it as cleaning and arranging a cluttered room. To do so, certain techniques are employed:

- Tokenization: This step divides the text into smaller units, like words or sentences. “NLP is amazing!” becomes [“NLP,” “is,” “amazing!”].

- Stopword removal: By eliminating common words, the system focuses on relevant information. “I am at the park” becomes [“park”], emphasizing the key message.

- Lemmatization: Reducing words to their dictionary forms (lemmas) ensures consistency. For example, “running” becomes “run,” simplifying various forms of a word into a single representation.

- Part-of-speech tagging: By marking words as nouns, pronouns, verbs, adjectives, etc., the system understands their roles in a sentence. “He runs fast” translates to [(“He,” “pronoun”), (“runs,” “verb”), (“fast,” “adverb”)], helping the computer grasp the grammatical structure.

- Segmentation: This step involves dividing a text into individual sentences. A simple sentence like “Mr. Johnson is here. Please meet him at 3 p.m.” might pose challenges due to periods in “Mr.” and “p.m.” Proper segmentation would result in [“Mr. Johnson is here.”, “Please meet him at 3 p.m.”], preserving abbreviations.

- Change case: This process typically converts all text to lowercase, ensuring uniformity. For example, “NLP is Amazing!” would become “nlp is amazing!”

- Spell correction: This stage corrects any spelling errors in the text. For instance, “I am lerning NLP.” would be corrected to “I am learning NLP.”

- Stemming: This step converts words to their base or stem form. Unlike lemmatization, stemming might not consider the context. For example, “flies” may be stemmed to “fli” instead of “fly.”

- Text normalization: This process cleans text and converts variants to a standard form. Variants like “bare metal server,” “bare-metal server,” and “baremetal server” would all be converted to “bare metal server.”
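Several of the preprocessing steps above can be sketched with nothing but Python’s standard library. This is a minimal illustration, not a production pipeline: the stopword and abbreviation lists are tiny assumptions made for the example, and the tokenizer shown here splits punctuation into its own token, which is one common convention among several. Stemming, lemmatization, and part-of-speech tagging are left out because in practice they usually come from a dedicated library such as NLTK or spaCy.

```python
import re

STOPWORDS = {"i", "am", "at", "the", "is", "a", "an"}    # tiny illustrative list
ABBREVIATIONS = {"mr.", "mrs.", "dr.", "a.m.", "p.m."}   # assumed abbreviation list

def tokenize(text):
    # Words (allowing internal hyphens/apostrophes) or single punctuation marks.
    return re.findall(r"\w+(?:[-']\w+)*|[^\w\s]", text)

def remove_stopwords(tokens):
    return [t for t in tokens if t.lower() not in STOPWORDS]

def change_case(text):
    # Lowercasing changes letters only; punctuation is untouched.
    return text.lower()

def segment_sentences(text):
    # End a sentence at ., !, or ? unless the word is a known abbreviation.
    sentences, current = [], []
    for word in text.split():
        current.append(word)
        if word[-1] in ".!?" and word.lower() not in ABBREVIATIONS:
            sentences.append(" ".join(current))
            current = []
    if current:  # trailing words, e.g. a text that ends in an abbreviation
        sentences.append(" ".join(current))
    return sentences

def normalize(text):
    # Map spelling variants of one term to a single canonical form.
    return re.sub(r"bare[\s-]?metal", "bare metal", text, flags=re.IGNORECASE)

print(tokenize("NLP is amazing!"))                     # punctuation becomes its own token
print(remove_stopwords(tokenize("I am at the park")))
print(segment_sentences("Mr. Johnson is here. Please meet him at 3 p.m."))
print(normalize("baremetal server"))
```

The abbreviation check is what keeps “Mr.” and “p.m.” from ending a sentence early; a real segmenter would need a much larger list or a trained model.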

Phase 2: Algorithm Development

In this stage, two types of algorithms work on the preprocessed text:

- Rules-based systems: These algorithms follow linguistic rules, understanding patterns like adjectives preceding nouns. They’re good for tasks that have clear rules, like spotting passive voice in a sentence.

- Machine learning-based systems: These dynamic algorithms learn by example. For instance, they can classify a review as positive or negative by studying past reviews. These systems are useful when rules are not clear cut, like in spam detection.

The choice between rule-based and machine learning depends on your project’s needs.
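To make the contrast concrete, here is a hedged sketch of both approaches. The rules-based part uses one hand-written pattern to flag possible passive voice, and the machine learning part is a small Naive Bayes classifier trained on four made-up reviews. The pattern, the training data, and the class name are all assumptions for illustration; neither is meant to be accurate on real text.

```python
import math
import re
from collections import Counter

# Rules-based: a form of "to be" followed by a word ending in -ed or -en.
PASSIVE = re.compile(r"\b(?:is|are|was|were|been|being|be)\s+\w+(?:ed|en)\b", re.IGNORECASE)

def looks_passive(sentence):
    return bool(PASSIVE.search(sentence))

# Machine learning-based: multinomial Naive Bayes learned from labeled examples.
class NaiveBayes:
    def fit(self, texts, labels):
        self.label_counts = Counter(labels)
        self.word_counts = {}
        for text, label in zip(texts, labels):
            self.word_counts.setdefault(label, Counter()).update(text.lower().split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            # Log prior plus log likelihood of each word, with add-one smoothing.
            score = math.log(self.label_counts[label] / total_docs)
            total_words = sum(counts.values())
            for word in text.lower().split():
                score += math.log((counts[word] + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

reviews = ["great product works well", "love it excellent quality",
           "terrible broke after a day", "awful waste of money"]
labels = ["positive", "positive", "negative", "negative"]
model = NaiveBayes().fit(reviews, labels)

print(looks_passive("The report was written by Ana."))
print(model.predict("excellent product"))
```

The rule is transparent but brittle: it would also flag “the window is open,” because “open” happens to end in “-en.” The classifier needs no hand-written rules, but it is only as good as the examples it has seen.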

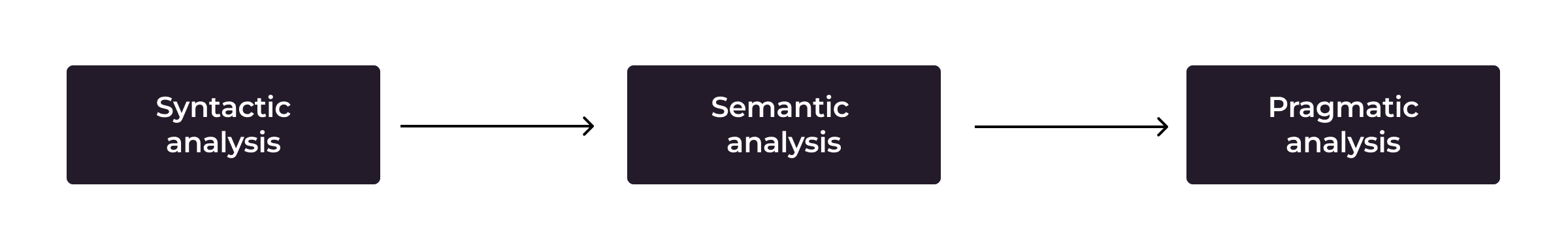

Phase 3: Data Processing

The next part of the NLP flow involves processing the data so that the texts can be understood in terms of their grammatical structure, meaning, and relationships with other texts, known as syntactic analysis, semantic analysis, and pragmatic analysis, respectively. Together, they form an essential framework that ensures correct interpretation, granting NLP a comprehensive understanding of the intricacies of human communication.

Let’s explore the methods and techniques they employ.

Syntactic Analysis: Structuring Language

Syntactic analysis provides a structural view of language, akin to the blueprint of a building. It includes:

- Parsing: Breaking down a sentence into its components to understand the grammatical relationships, like recognizing “dog” as the subject in “the dog barked.”

- Word segmentation: Dividing text into individual words or terms, which is vital for languages without spaces like Chinese. For example, e-commerce sites use word segmentation to search for specific products in customer reviews.

- Sentence breaking: Separating a text into individual sentences, such as a news aggregator dividing articles into sentences, to create concise summaries.

- Morphological segmentation: Analyzing the structure of individual words, such as dividing “unhappiness” into “un-,” “happy,” and “-ness.” An example might be educational software that uses morphological segmentation to teach users about the intricacies of language structure.

You might notice some similarities to the processes in data preprocessing, because both break down, prepare, and structure text data. However, syntactic analysis focuses on understanding grammatical structures, while data preprocessing is a broader step that includes cleaning, normalizing, and organizing text data.
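Two of the techniques above lend themselves to a short sketch. The first function segments text without spaces using forward maximum matching, a simple dictionary-based method that is only one of several ways to do word segmentation. The second splits a word into a prefix, stem, and suffix. The dictionary, the affix lists, and the function names are hypothetical and chosen only to make the examples work.

```python
def max_match(text, dictionary):
    """Forward maximum matching: at each position take the longest dictionary word."""
    max_len = max(len(word) for word in dictionary)
    tokens, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + size]
            # Fall back to a single character when nothing in the dictionary matches.
            if size == 1 or candidate in dictionary:
                tokens.append(candidate)
                i += size
                break
    return tokens

def split_morphemes(word, prefixes=("un", "re"), suffixes=("ness", "ly")):
    """Toy morphological segmentation using small, assumed affix lists."""
    before, after = [], []
    for prefix in prefixes:
        if word.startswith(prefix) and len(word) > len(prefix) + 2:
            before.append(prefix + "-")
            word = word[len(prefix):]
            break
    for suffix in suffixes:
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            after.append("-" + suffix)
            word = word[:-len(suffix)]
            break
    if after and word.endswith("i"):
        word = word[:-1] + "y"  # undo the y -> i spelling change (happi -> happy)
    return before + [word] + after

# Hypothetical mini-dictionary for "I like natural language processing" in Chinese.
dictionary = {"我", "喜欢", "自然", "语言", "处理", "自然语言处理"}
print(max_match("我喜欢自然语言处理", dictionary))
print(split_morphemes("unhappiness"))
```

Because maximum matching is greedy, it prefers the long entry “自然语言处理” over its shorter parts; that is usually helpful but can mis-segment ambiguous strings, which is why real systems tend to use statistical or neural segmenters.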

Semantic Analysis: Unveiling Meaning

Semantic analysis dives into the profound range of meaning within language. It includes:

- Word sense disambiguation: Understanding the specific sense of a word in its context, such as knowing that “bat” refers to an animal in “the bat flew,” but to sports equipment in “he swung the bat.”

- Named-entity recognition (NER): Identifying and classifying entities like names, places, or dates within text. Travel agencies use NER to extract destination names from customer inquiries.

- Natural language generation: Creating coherent and contextually relevant text, such as automated news stories. One example is a weather service that automatically generates localized weather reports from raw data.
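Word sense disambiguation can be illustrated with a simplified, Lesk-style overlap count: pick the sense whose associated words share the most terms with the sentence. The signature word lists below are hand-written assumptions for this example; a real system would draw on a lexical resource or a trained model, and would lemmatize words so that “flew” and “fly” count as the same term.

```python
import re

# Hand-written signature words per sense (illustrative assumptions only).
SENSE_SIGNATURES = {
    "bat": {
        "animal": {"mammal", "wings", "fly", "flew", "flying", "night", "cave"},
        "sports equipment": {"club", "swing", "swung", "hit", "ball", "baseball", "cricket"},
    }
}
STOPWORDS = {"a", "an", "the", "he", "she", "it", "to", "of", "in", "at", "by", "and"}

def content_words(text):
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}

def disambiguate(word, sentence):
    # Context = the other meaningful words in the sentence.
    context = content_words(sentence) - {word}
    overlaps = {
        sense: len(context & signature)
        for sense, signature in SENSE_SIGNATURES[word].items()
    }
    # On a tie, max() returns the first sense listed.
    return max(overlaps, key=overlaps.get)

print(disambiguate("bat", "The bat flew"))
print(disambiguate("bat", "He swung the bat"))
```

The approach depends entirely on the context containing a telltale word, which is why short or vague sentences remain hard to disambiguate.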

Pragmatic Analysis: One Step Deeper

Pragmatic analysis takes the exploration of language a step further by focusing on understanding the context around the words used. It looks beyond what’s literally said to consider how and why it’s said. This involves accounting for the speaker’s intent, tone, and even cultural norms.

To achieve this goal, NLP uses algorithms that analyze additional data such as previous dialogue turns or the setting in which a phrase is used. These algorithms can also identify keywords and sentiment to gauge the speaker’s emotional state, thereby fine-tuning the model’s understanding of what’s being communicated.
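As a loose sketch of how previous dialogue turns and sentiment keywords might be combined, the example below scores each turn with a small word list and weights recent turns more heavily, then decides whether a reply should open with an apology. The word lists, the weighting scheme, and the threshold are all assumptions made for illustration rather than an established method.

```python
import re

# Tiny illustrative sentiment lexicons (assumptions, not a real resource).
NEGATIVE = {"frustrated", "angry", "broken", "terrible", "unacceptable"}
POSITIVE = {"thanks", "great", "love", "perfect", "happy"}

def sentiment_score(text):
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

def should_apologize(dialogue_turns, threshold=-2.0):
    """Weight recent turns more: the latest counts fully, the one before half, and so on."""
    total = 0.0
    for age, turn in enumerate(reversed(dialogue_turns)):
        total += sentiment_score(turn) / (age + 1)
    return total <= threshold

frustrated_customer = [
    "My order arrived broken.",
    "I asked for a refund and it is not here yet.",
    "This is unacceptable, I am frustrated.",
]
print(should_apologize(frustrated_customer))
print(should_apologize(["Thanks, the new router works great."]))
```

Keyword counting misses sarcasm and negation (“not great”), so a sketch like this only hints at what a trained model would have to capture.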

Phase 4: Response

In the response phase of NLP, two crucial elements come into play: token generation and contextual understanding.

- Token generation is the methodology for picking the most relevant words or “tokens” based on what best aligns with the user query and the context surrounding it. For instance, for a weather inquiry, the model may produce tokens like “weather,” “sunny,” or “temperature.”

- Contextual understanding deals with the semantic and grammatical aspects of the query. It’s not just about what words are in the query, but what the user is likely intending to ask. It analyzes sentence structure and the relationship between words to generate a well-framed response. So, when someone asks, “What’s the weather like?” the model knows that the user wants to know the current meteorological conditions for their location.

These generated tokens and contextual insights are then synthesized into a coherent, natural-language sentence, which is relayed back through the software system to the user. Continual monitoring assesses the quality of these responses against metrics like fluency, accuracy, and relevance. If quality deviates from established benchmarks, human intervention becomes necessary to recalibrate the system so that it keeps generating natural, conversational responses.
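Token generation can be pictured as repeatedly choosing the next token from a set of scored candidates. The sketch below converts hypothetical scores into probabilities with a softmax and always picks the most likely token (greedy decoding). The score table is invented for this example, and conditioning on only the previous token is a heavy simplification of how real models use context.

```python
import math

def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scores.values())
    exps = {token: math.exp(score - peak) for token, score in scores.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}

# Hypothetical scores for candidate next tokens, keyed by the previous token.
NEXT_TOKEN_SCORES = {
    "<start>": {"It": 2.0, "The": 1.0},
    "It": {"is": 3.0, "was": 1.0},
    "The": {"weather": 2.0},
    "weather": {"is": 2.0},
    "is": {"sunny": 2.5, "rainy": 1.5},
    "was": {"sunny": 1.0},
    "sunny": {"<end>": 1.0},
    "rainy": {"<end>": 1.0},
}

def generate(max_tokens=10):
    token, output = "<start>", []
    for _ in range(max_tokens):
        probabilities = softmax(NEXT_TOKEN_SCORES[token])
        # Greedy decoding: always take the single most probable token.
        token = max(probabilities, key=probabilities.get)
        if token == "<end>":
            break
        output.append(token)
    return " ".join(output)

print(generate())
```

Greedy decoding is the simplest strategy; sampling from the probabilities instead would produce more varied responses at the cost of predictability.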

Challenges of NLP

Natural language processing faces several challenges. Together, these issues illustrate the complexity of human communication and highlight the need for ongoing efforts to refine and advance natural language processing technologies.

- Ambiguity is a complex NLP challenge, much like in human-to-human written communications. Homonyms like “bank” might refer to a place to keep money or the side of a river, making interpretation tricky when context is limited. Similarly, without context or physical cues, tone, inflection, and sarcasm are challenging to detect in text. Since these challenges exist in human-to-human written communication, they are replicated in even the best NLP models.

- Different accents and dialects add another layer of complexity. Pronunciation varies widely between regions, which makes word recognition more difficult and complicates the integration of speech recognition into search engines.

- Deep learning-based NLP models need massive amounts of data, and collecting and preparing that data is labor-intensive. The challenge even extends to certain logic and math-related tasks, where replicating human thought and comprehension isn’t straightforward.

Conclusion

Imagine a world where your computer not only understands what you say but how you feel, where searching for information feels like a conversation, and where technology adapts to you, not the other way around. The future of NLP is shaping this reality across industries for diverse use cases, including translation, virtual companions, and understanding nuanced information. We can expect a future where NLP becomes an extension of our human capabilities, making our daily interaction with technology not only more effective but more empathetic.

Explore the future of NLP with Gcore’s AI IPU Cloud and AI GPU Cloud Platforms, two advanced architectures designed to support every stage of your AI journey. From building to training to deployment, Gcore’s AI IPU and GPU cloud infrastructures are tailored to enhance human-machine communication, interpret unstructured text, accelerate machine learning, and impact businesses through analytics and chatbots. The AI IPU Cloud platform is optimized for deep learning, customizable to support most setups for inference, and is the industry standard for ML. On the other hand, the AI GPU Cloud platform is better suited for LLMs, with vast parallel processing capabilities specifically for graph computing to maximize the potential of common ML frameworks like TensorFlow.

Find out which solution works best for your AI requirements.
