Linux keeps many files hidden, most of them configuration files, to keep directory listings uncluttered and to reduce the chance of accidental changes. Knowing how to reveal them is useful whether you’re troubleshooting, organizing, or just curious. This guide shows how to display hidden files from the terminal and in the most common graphical file managers.

Show Hidden Files Using the Terminal

In Linux, hidden files usually start with a dot (.) and are therefore often called “dot files.” Here’s a step-by-step guide to showing these hidden files from the terminal:

#1 Open Terminal

On many desktops, such as Ubuntu, you can open a terminal by pressing Ctrl + Alt + T. If that shortcut doesn’t work on your system, search for “Terminal” in your application menu.

#2 Navigate to the Desired Directory

Use the cd (change directory) command to navigate to the directory where you want to view hidden files. For example:

cd /path/to/directory

#3 List All Files, Including Hidden Ones

Enter the following command to list all the files, including the hidden ones:

ls -la

Here’s a breakdown of the command options:

- ls: The list command.

- -l: List in long format to show details such as file permissions, number of links, owner, group, size, and last modification time.

- -a: Show all entries, including hidden files that start with a dot (.).
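To see what -a changes, the sketch below compares plain ls with ls -a in a throwaway directory. It also shows -A, an option that GNU ls and POSIX ls both support: it works like -a but leaves out the . and .. entries. The directory and file names (visible.txt, .hidden.txt) are made up for the demo.

```shell
#!/bin/sh
# Demo only: create a throwaway directory containing one regular file and
# one hidden file. The names are illustrative, not special in any way.
demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT   # delete the demo directory when the script exits
touch "$demo_dir/visible.txt" "$demo_dir/.hidden.txt"

cd "$demo_dir" || exit 1

echo "== ls =="
ls        # shows only visible.txt

echo "== ls -a =="
ls -a     # also shows ., .. and .hidden.txt

echo "== ls -A =="
ls -A     # shows .hidden.txt and visible.txt, but omits . and ..

echo "== ls -la =="
ls -la    # long format (permissions, links, owner, group, size, date) for every entry
```

If the . and .. lines just add noise to your listings, ls -lA gives the same long-format output without them.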

#4 View Output

After you run ls -la, the terminal lists every entry in the directory, hidden ones included. Hidden entries are the ones whose names begin with a dot, such as .bashrc or .config. You will also see . and .., which refer to the current directory and its parent.
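When a directory holds many regular files, it can help to list only the hidden entries. The sketch below shows two ways to do that, assuming GNU find (the default on most Linux distributions), since -mindepth and -maxdepth are not part of POSIX find. The file and directory names are placeholders created just for the demo.

```shell
#!/bin/sh
# Demo only: a throwaway directory with a hidden file, a hidden directory
# and a regular file. All names here are illustrative.
demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT
touch "$demo_dir/.example_rc" "$demo_dir/notes.txt"
mkdir "$demo_dir/.example_config"

# Option 1: ls -A prints every entry except . and .. (one name per line
# when its output is piped), and grep keeps only names that start with a dot.
ls -A "$demo_dir" | grep '^\.'

# Option 2: find lists entries directly inside the directory whose names
# match '.*'. -mindepth 1 skips the starting directory itself, and
# -maxdepth 1 stops find from descending into subdirectories.
find "$demo_dir" -mindepth 1 -maxdepth 1 -name '.*'
```

Both commands report .example_rc and .example_config and skip notes.txt; find prints full paths, while ls prints bare names.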

Show Hidden Files Using File Managers

The steps can vary slightly depending on your specific file manager, but here’s a general approach for the most common ones:

#1 Nautilus (Default for GNOME and Ubuntu)

- Open the Nautilus file manager (often simply called “Files”).

- Once it’s open, press Ctrl + H on your keyboard. This will toggle the visibility of hidden files.

#2 Dolphin (Default for KDE Plasma)

- Open the Dolphin file manager.

- Press Alt + . (period) on your keyboard. Alternatively, open the Control menu and select ‘Show Hidden Files’ (the exact menu and label may differ between Dolphin versions).

Remember that on Linux a file or directory is normally hidden simply because its name starts with a dot (.), as with .bashrc or .config. File managers follow this convention, and the shortcuts above only toggle whether such entries are displayed.
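Because hiding depends only on the leading dot, renaming a file is enough to hide it. The sketch below demonstrates this in a throwaway directory; the file name todo.txt is a hypothetical example.

```shell
#!/bin/sh
# Demo only: the directory and the file name are illustrative.
demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT
touch "$demo_dir/todo.txt"

echo "Before renaming:"
ls "$demo_dir"       # lists todo.txt

# Prefixing the name with a dot is all it takes to hide the file.
mv "$demo_dir/todo.txt" "$demo_dir/.todo.txt"

echo "After renaming:"
ls "$demo_dir"       # prints nothing, because the only entry is now hidden
ls -A "$demo_dir"    # .todo.txt is still there
```

To unhide the file, rename it back, for example with mv .todo.txt todo.txt. The file’s contents and permissions are unaffected either way.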

By following these steps in the terminal or in a file manager, you can reveal hidden files in any directory you have permission to read. Dot files such as .bashrc or .config mostly hold per-user settings for your shell and applications, so being able to see them is a first step toward customizing your system and troubleshooting configuration problems.

Conclusion

Looking to deploy Linux in the cloud? With Gcore Cloud, you can choose from Basic VM, Virtual Instances, or VPS/VDS suitable for Linux:

- Gcore Basic VM offers shared virtual machines from €3.2 per month

- Virtual Instances are virtual machines with a variety of configurations and an application marketplace

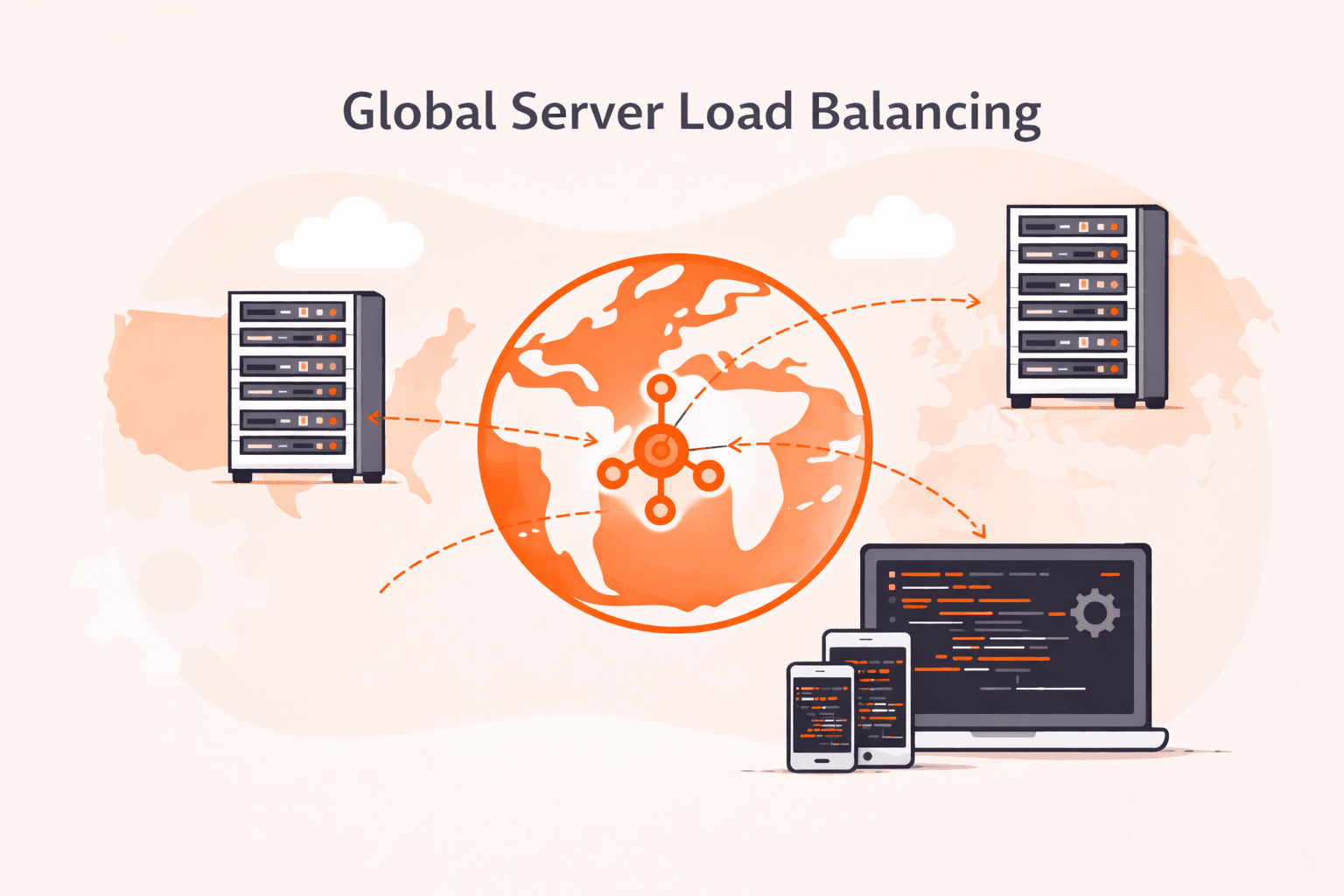

- Virtual Dedicated Servers provide outstanding speed of 200+ Mbps in 20+ global locations
