Podman is the command-line tool that lets you interact with Libpod, a library for running and managing OCI-based containers. Unlike Docker, Podman doesn’t depend on a daemon, and it doesn’t require root privileges.

The first part of this tutorial focuses on similarities between Podman and Docker, and we’ll show how you can do the following:

- Move a Docker image to Podman.

- Create a bare-bones Nuxt.js project and build a container image for it.

- Push your container image to Quay.io.

- Pull the image from Quay.io and run it with Docker.

In the second part of this tutorial, we’ll walk you through two of the most important features that differentiate Podman from Docker. In this section, you will do the following:

- Create a Pod with Podman.

- Generate a Kubernetes Pod spec with Podman, and deploy it to a Kubernetes cluster.

Prerequisites

- This tutorial is intended for readers who have prior exposure to Docker. In the next sections, you will use commands such as `run`, `build`, `push`, `commit`, and `tag`. It is beyond the scope of this tutorial to explain how these commands work.

- A running Linux system with Podman and Docker installed.

You can enter the following command to check that Podman is installed on your system:

    podman version

    Version:            1.6.4
    RemoteAPI Version:  1
    Go Version:         go1.12.12
    OS/Arch:            linux/amd64

Refer to the Podman Installation Instructions for details on how to install Podman.

Use the following command to verify that Docker is installed:

    docker --version

    Docker version 18.06.3-ce, build d7080c1

See the Get Docker page for details on how to install Docker.

- Git. To check if Git is installed on your system, type the following command:

    git version

    git version 2.18.2

Refer to Getting Started – Installing Git for details on installing Git.

- Node.js 10 or higher. To check if Node.js is installed on your computer, type the following command:

    node --version

    v10.16.3

If Node.js is not installed, you can download the installer from the Downloads page.

- A Kubernetes cluster. If you don’t have a running Kubernetes cluster, refer to the “Create a Kubernetes Cluster with Kind” section.

- Additionally, you will need a Quay.io account.
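The checks above can also be run in one pass. The following is a minimal sketch, not part of the original tutorial: `missing_tools` and `node_major` are hypothetical helper names, and the script only tests whether each command is on your `PATH` (it does not verify that the Docker daemon or a Kubernetes cluster is actually running).

```shell
# Print the name of every command from the argument list that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Extract the major version from a string such as "v10.16.3".
node_major() {
  printf '%s\n' "$1" | sed -e 's/^v//' -e 's/\..*$//'
}

# Report anything this tutorial needs that is not installed.
# kubectl is only required for the Kubernetes part.
missing=$(missing_tools podman docker git node kubectl)
if [ -n "$missing" ]; then
  printf 'Missing tools:\n%s\n' "$missing"
fi
```

To check the Node.js requirement, you could compare the result of `node_major "$(node --version)"` against 10.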

Moving Images from Docker to Podman

If you’ve just installed Podman on a system on which you’ve already used Docker to pull one or more images, you’ll notice that running the `podman images` command doesn’t show your Docker images:

    docker images

    REPOSITORY   TAG      IMAGE ID       CREATED       SIZE
    cassandra    latest   b571e0906e1b   10 days ago   324MB

    podman images

    REPOSITORY   TAG   IMAGE ID   CREATED   SIZE

You don’t see your Docker images because Podman keeps its own image store, separate from the one managed by the Docker daemon. When Podman runs without root privileges, that store is located in the user’s home directory, under ~/.local/share/containers. However, Podman can import an image directly from the Docker daemon running on your machine, through the `docker-daemon` transport.

In this section, you’ll use Docker to pull the hello-world image. Then, you’ll import it into Podman. Lastly, you’ll run the hello-world image with Podman.
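If you have more than one Docker image to move, the same `docker-daemon` transport could be applied in a loop. This is a sketch under assumptions rather than part of the original walkthrough: the function names are hypothetical, and it assumes your user can talk to the Docker daemon without `sudo`.

```shell
# Prefix a "repository:tag" reference with the docker-daemon transport.
to_daemon_ref() {
  printf 'docker-daemon:%s\n' "$1"
}

# Read "repository:tag" lines on stdin and keep the ones that can be imported.
# Docker lists untagged images as "<none>", which cannot be pulled by name.
importable_refs() {
  grep -v '<none>' || true
}

# Import every tagged Docker image into Podman's local storage.
import_all_docker_images() {
  docker images --format '{{.Repository}}:{{.Tag}}' | importable_refs |
    while IFS= read -r ref; do
      podman pull "$(to_daemon_ref "$ref")"
    done
}
```

Calling `import_all_docker_images` runs one `podman pull docker-daemon:<repository>:<tag>` per image, which is the same command the steps below use for `hello-world`.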

- Download and run the `hello-world` image by executing the following command:

    sudo docker run hello-world

    Unable to find image 'hello-world:latest' locally
    latest: Pulling from library/hello-world
    1b930d010525: Pull complete
    Digest: sha256:9572f7cdcee8591948c2963463447a53466950b3fc15a247fcad1917ca215a2f
    Status: Downloaded newer image for hello-world:latest

    Hello from Docker!
    This message shows that your installation appears to be working correctly.

    To generate this message, Docker took the following steps:
     1. The Docker client contacted the Docker daemon.
     2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
        (amd64)
     3. The Docker daemon created a new container from that image which runs the
        executable that produces the output you are currently reading.
     4. The Docker daemon streamed that output to the Docker client, which sent it
        to your terminal.

    To try something more ambitious, you can run an Ubuntu container with:
     $ docker run -it ubuntu bash

    Share images, automate workflows, and more with a free Docker ID:
     https://hub.docker.com/

    For more examples and ideas, visit:
     https://docs.docker.com/get-started/

- The following `docker images` command lists the Docker images on your system, using the `--format` flag to print each one as `repository:tag`:

    sudo docker images --format '{{.Repository}}:{{.Tag}}'

    hello-world:latest

- Enter the `podman pull` command specifying the transport (`docker-daemon`) and the name of the image, separated by a colon (`:`):

    podman pull docker-daemon:hello-world:latest

    Getting image source signatures
    Copying blob af0b15c8625b done
    Copying config fce289e99e done
    Writing manifest to image destination
    Storing signatures
    fce289e99eb9bca977dae136fbe2a82b6b7d4c372474c9235adc1741675f587e

- Once you’ve imported the image, running the `podman images` command will display the `hello-world` image:

    podman images

    REPOSITORY                      TAG      IMAGE ID       CREATED         SIZE
    docker.io/library/hello-world   latest   fce289e99eb9   13 months ago   5.94 kB

- To run the image, enter the following `podman run` command:

    podman run hello-world

    Hello from Docker!
    This message shows that your installation appears to be working correctly.

    To generate this message, Docker took the following steps:
     1. The Docker client contacted the Docker daemon.
     2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
        (amd64)
     3. The Docker daemon created a new container from that image which runs the
        executable that produces the output you are currently reading.
     4. The Docker daemon streamed that output to the Docker client, which sent it
        to your terminal.

    To try something more ambitious, you can run an Ubuntu container with:
     $ docker run -it ubuntu bash

    Share images, automate workflows, and more with a free Docker ID:
     https://hub.docker.com/

    For more examples and ideas, visit:
     https://docs.docker.com/get-started/

Creating a Basic Nuxt.js Project

For the scope of this tutorial, we’ll create a simple web application using Nuxt.js, a progressive Vue-based framework that aims to provide a great experience for developers. Then, in the next sections, you’ll use Podman to create a container image for your project and push it to Quay.io. Lastly, you’ll use Docker to run the container image.

- Nuxt.js is distributed as an npm package. To install it, open a terminal window and execute the following command:

    npm install nuxt

    + nuxt@2.11.0
    added 1067 packages from 490 contributors and audited 9750 packages in 75.666s
    found 0 vulnerabilities

Note that the above output was truncated for brevity.

- With Nuxt.js installed on your computer, you can create a new bare-bones project:

    npx create-nuxt-app podman-nuxtjs-demo

You will be prompted to answer a few questions:

    create-nuxt-app v2.14.0
    ✨  Generating Nuxt.js project in podman-nuxtjs-demo
    ? Project name podman-nuxtjs-demo
    ? Project description Podman Nuxt.JS demo
    ? Author name Gcore
    ? Choose the package manager Npm
    ? Choose UI framework Bootstrap Vue
    ? Choose custom server framework None (Recommended)
    ? Choose Nuxt.js modules (Press <space> to select, <a> to toggle all, <i> to invert selection)
    ? Choose linting tools ESLint
    ? Choose test framework None
    ? Choose rendering mode Universal (SSR)
    ? Choose development tools jsconfig.json (Recommended for VS Code)

Once you answer these questions, npm will install the required dependencies:

    🎉  Successfully created project podman-nuxtjs-demo

      To get started:

        cd podman-nuxtjs-demo
        npm run dev

      To build & start for production:

        cd podman-nuxtjs-demo
        npm run build
        npm run start

Note that the above output was truncated for brevity.

- Enter the following commands to start your new application:

    cd podman-nuxtjs-demo/ && npm run dev

    > podman-nuxtjs-demo@1.0.0 dev /home/vagrant/podman-nuxtjs-demo
    > nuxt

       ╭─────────────────────────────────────────────╮
       │                                             │
       │   Nuxt.js v2.11.0                           │
       │   Running in development mode (universal)   │
       │                                             │
       │   Listening on: http://localhost:3000/      │
       │                                             │
       ╰─────────────────────────────────────────────╯

    ℹ Preparing project for development    14:39:30
    ℹ Initial build may take a while       14:39:30
    ✔ Builder initialized                  14:39:30
    ✔ Nuxt files generated                 14:39:30
    ✔ Client
      Compiled successfully in 23.53s
    ✔ Server
      Compiled successfully in 17.82s
    ℹ Waiting for file changes             14:39:56
    ℹ Memory usage: 209 MB (RSS: 346 MB)   14:39:56

- Point your browser to http://localhost:3000, and you should see something similar to the screenshot below:

Building a Container Image for Your Nuxt.JS Project

In this section, we’ll look at how you can use Podman to build a container image for the podman-nuxtjs-demo project.

- Create a file called `Dockerfile` and place the following content into it:

    FROM node:10
    RUN mkdir -p /usr/src/nuxt-app
    WORKDIR /usr/src/nuxt-app
    COPY . /usr/src/nuxt-app/
    RUN npm install
    RUN npm run build
    EXPOSE 3000
    # Listen on all interfaces so the published port is reachable from the host
    ENV NUXT_HOST=0.0.0.0
    ENV NUXT_PORT=3000
    CMD ["npm", "run", "dev"]

☞ For a quick refresher on the above Dockerfile commands, refer to the Create a Docker Image section from the Debug a Node.js Application Running in a Docker Container tutorial.

- To avoid sending large files to the build context and to speed up the process, create a file called `.dockerignore` with the following content:

    node_modules
    npm-debug.log
    .nuxt

As you can see, this is just a plain-text file containing the names of the files and directories that Podman should exclude from the build.

- Build the image. Execute the following `podman build` command, specifying the `-t` flag with the name and tag that Podman will apply to the built image:

    podman build -t podman-nuxtjs-demo:podman .

    STEP 1: FROM node:10
    STEP 2: RUN mkdir -p /usr/src/nuxt-app
    --> Using cache c7198c4f08b90ecb5575bbce23fc095e5c65fe5dc4b4f77b23192e2eae094d6f
    STEP 3: WORKDIR /usr/src/nuxt-app
    --> Using cache f1cc5aba3f36e122513c5ff0410f862d6099bcee886453f7fb30859f66e0ac78
    STEP 4: COPY . /usr/src/nuxt-app/
    --> Using cache fb4c322c98b41d446f5cceb88b3f9c451751d0cfe8ed9d0e6eb153919b498da3
    STEP 5: RUN npm install
    --> Using cache bb5324e79782b4522048dcc5f0f02c41b56e12198438aa59a7588a6824a435e1
    STEP 6: RUN npm run build

    > podman-nuxtjs-demo@1.0.0 build /usr/src/nuxt-app
    > nuxt build

    ℹ Production build
    ✔ Builder initialized
    ✔ Nuxt files generated
    ✔ Client
      Compiled successfully in 2.95m
    ✔ Server
      Compiled successfully in 10.91s

    Hash: 7c4493c4d1c7b235dd8e
    Version: webpack 4.41.6
    Time: 177257ms
    Built at: 02/11/2020 4:48:17 PM
                             Asset       Size  Chunks                                Chunk Names
    ../server/client.manifest.json   16.1 KiB          [emitted]
           7d497fe85470995d6e29.js   2.99 KiB       2  [emitted] [immutable]         pages/index
           848739217655a36af267.js    671 KiB       4  [emitted] [immutable]  [big]  vendors.app
           90036491716edfc3e86d.js    159 KiB       1  [emitted] [immutable]         commons.app
                          LICENSES   1.95 KiB          [emitted]
           b625f5fc00e8ff962762.js   2.31 KiB       3  [emitted] [immutable]         runtime
           eac7116f7d28455b0958.js     36 KiB       0  [emitted] [immutable]         app
     + 2 hidden assets
    Entrypoint app = b625f5fc00e8ff962762.js 90036491716edfc3e86d.js 848739217655a36af267.js eac7116f7d28455b0958.js

    WARNING in asset size limit: The following asset(s) exceed the recommended size limit (244 KiB).
    This can impact web performance.
    Assets:
      848739217655a36af267.js (671 KiB)

    Hash: e3d9cfd644a086dc9c5b
    Version: webpack 4.41.6
    Time: 10916ms
    Built at: 02/11/2020 4:48:29 PM
                      Asset       Size  Chunks                         Chunk Names
    d1d703b09adf296a453d.js   3.07 KiB       1  [emitted] [immutable]  pages/index
                  server.js    222 KiB       0  [emitted]              app
       server.manifest.json  145 bytes          [emitted]
    Entrypoint app = server.js
    0d239b0083a60482b4b5fa60a99b96dd22045822e50fbd83b8a369d8179bf307
    STEP 7: EXPOSE 3000
    1d037e041dd4a8d6c94a9f6fb8fe6578f5e00d27aab9168bad83e7ab260bbeae
    STEP 8: ENV NUXT_HOST=0.0.0.0
    40d684a5441a8da38ed5198be722719f393be13a855a9e85cbc49e5c7155f7cc
    STEP 9: ENV NUXT_PORT=3000
    7d07961e058d66e172f4b9e01d50fb355c16060a990252c5bc7cd35d960f5f72
    STEP 10: CMD ["npm", "run", "dev"]
    STEP 11: COMMIT podman-nuxtjs-demo:podman
    54c55a8a44f30105371652bc2c25e0fbba200ad6c945654077151194aa0a66fe

- At this point, you can check that everything went well with:

    podman images

    REPOSITORY                     TAG      IMAGE ID       CREATED              SIZE
    localhost/podman-nuxtjs-demo   podman   54c55a8a44f3   About a minute ago   1.09 GB
    docker.io/library/node         10       bb78c02ca3bf   4 days ago           937 MB

- To run the `podman-nuxtjs-demo:podman` container, enter the `podman run` command and pass it the following arguments:

  - `-dt` to specify that the container should run in the background and that Podman should allocate a pseudo-TTY
  - `-p` with the port on the host (3000) that will be forwarded to the container port (3000), separated by a colon (`:`)
  - The name of your image (`podman-nuxtjs-demo:podman`)

    podman run -dt -p 3000:3000/tcp podman-nuxtjs-demo:podman

This will print the container ID to the console:

    4de08084dd1d33fcdae96cd493b3eb20406ea89ce2a3e8dbc833b38c2243ce43

- You can list your running containers with:

    podman ps

    CONTAINER ID  IMAGE                                COMMAND      CREATED        STATUS            PORTS                   NAMES
    4de08084dd1d  localhost/podman-nuxtjs-demo:podman  npm run dev  4 seconds ago  Up 4 seconds ago  0.0.0.0:3000->3000/tcp  objective_neumann

- To retrieve detailed information about your running container, enter the `podman inspect` command specifying the container ID:

    podman inspect 4de08084dd1d33fcdae96cd493b3eb20406ea89ce2a3e8dbc833b38c2243ce43

    [
        {
            "Id": "4de08084dd1d33fcdae96cd493b3eb20406ea89ce2a3e8dbc833b38c2243ce43",
            "Created": "2020-02-11T17:00:06.819669549Z",
            "Path": "docker-entrypoint.sh",
            "Args": [
                "npm",
                "run",
                "dev"
            ],
            "State": {
                "OciVersion": "1.0.1-dev",
                "Status": "running",
                "Running": true,
                "Paused": false,
                "Restarting": false,
                "OOMKilled": false,
                "Dead": false,
                "Pid": 10637,
                "ConmonPid": 10628,
                "ExitCode": 0,
                "Error": "",
                "StartedAt": "2020-02-11T17:00:07.317812139Z",
                "FinishedAt": "0001-01-01T00:00:00Z",
                "Healthcheck": {
                    "Status": "",
                    "FailingStreak": 0,
                    "Log": null
                }
            },
            "Image": "54c55a8a44f30105371652bc2c25e0fbba200ad6c945654077151194aa0a66fe",
            "ImageName": "localhost/podman-nuxtjs-demo:podman",
            "Rootfs": "",
            "Pod": "",

Note that the above output was truncated for brevity.

- To retrieve the logs from your container, run the `podman logs` command specifying the container ID or the `--latest` flag:

    podman logs --latest

    > podman-nuxtjs-demo@1.0.0 dev /usr/src/nuxt-app
    > nuxt

       ╭─────────────────────────────────────────────╮
       │                                             │
       │   Nuxt.js v2.11.0                           │
       │   Running in development mode (universal)   │
       │                                             │
       │   Listening on: http://10.0.2.100:3000/     │
       │                                             │
       ╰─────────────────────────────────────────────╯

    ℹ Preparing project for development
    ℹ Initial build may take a while
    ✔ Builder initialized
    ✔ Nuxt files generated
    ✔ Client
      Compiled successfully in 25.36s
    ✔ Server
      Compiled successfully in 19.21s
    ℹ Waiting for file changes
    ℹ Memory usage: 254 MB (RSS: 342 MB)

- Display the list of running processes inside your container:

    podman top 4de08084dd1d

    USER   PID   PPID   %CPU     ELAPSED           TTY     TIME   COMMAND
    root   1     0      0.000    3m52.098907307s   pts/0   0s     npm
    root   17    1      0.000    3m51.099829437s   pts/0   0s     sh -c nuxt
    root   18    17     11.683   3m51.099997015s   pts/0   27s    node /usr/src/nuxt-app/node_modules/.bin/nuxt

Push Your Podman Image to Quay.io

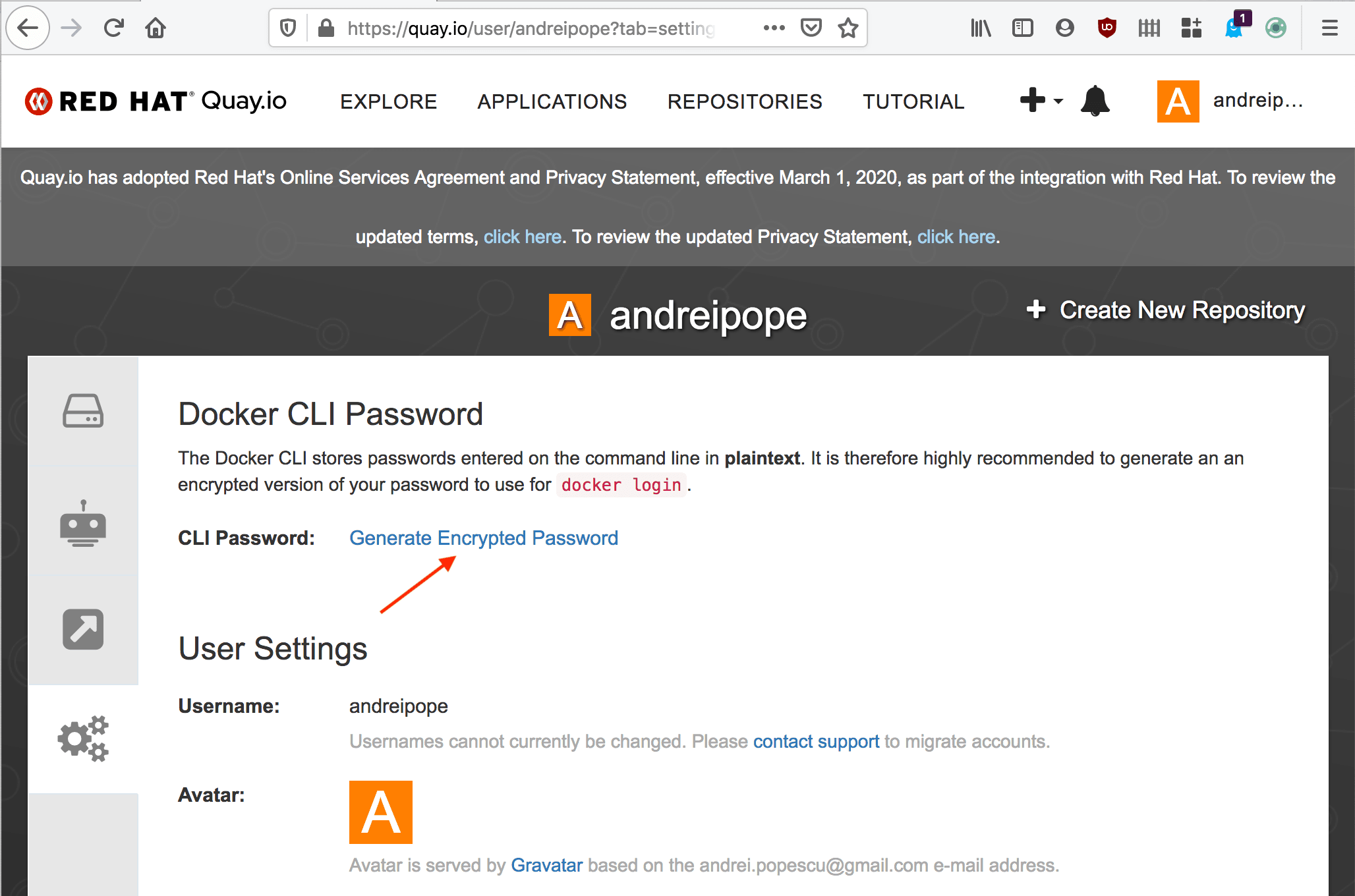

- First, you must generate an encrypted password. Point your browser to http://quay.io, and then navigate to the Account Settings page:

- On the Account Settings page, select Generate Encrypted Password:

- When prompted, enter your Quay.io password:

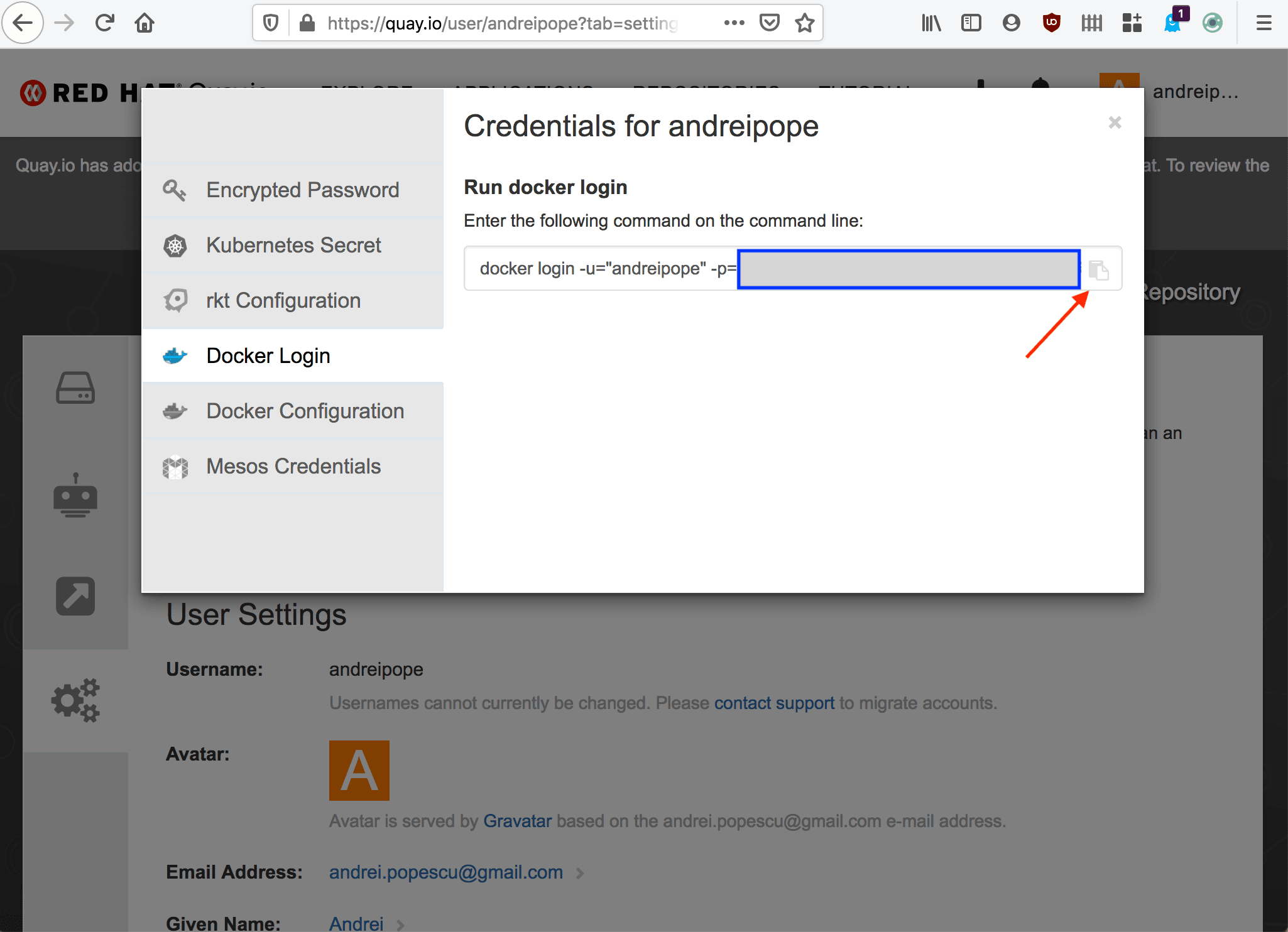

- From the sidebar on the left, select Docker Login. Then, copy your encrypted password:

- You can now log in to Quay.io. Enter the `podman login` command specifying:

  - The registry server (`quay.io`)
  - The `-u` flag with your username
  - The `-p` flag with the encrypted password you retrieved earlier

    podman login quay.io -u <YOUR_USER_NAME> -p="<YOUR_ENCRYPTED_PASSWORD>"

    Login Succeeded!

- To push the `podman-nuxtjs-demo` image to Quay.io, enter the following `podman push` command:

    podman push podman-nuxtjs-demo:podman quay.io/andreipope/podman-nuxtjs-demo:podman

    Getting image source signatures
    Copying blob 69dfa7bd7a92 done
    Copying blob 4d1ab3827f6b done
    Copying blob 7948c3e5790c done
    Copying blob 01727b1a72df done
    Copying blob 03dc1830d2d5 done
    Copying blob 1d7382716a27 done
    Copying blob 062fc3317d1a done
    Copying blob 3d36b8a4efb1 done
    Copying blob 1708ebc408a9 done
    Copying blob 0aacf878561f done
    Copying blob c49b91e9cfd0 done
    Copying blob 4294ef3571b7 done
    Copying blob 1da55789948c done
    Copying config 54c55a8a44 done
    Writing manifest to image destination
    Copying config 54c55a8a44 done
    Writing manifest to image destination
    Storing signatures

In the above command, do not forget to replace our username (andreipope) with yours.

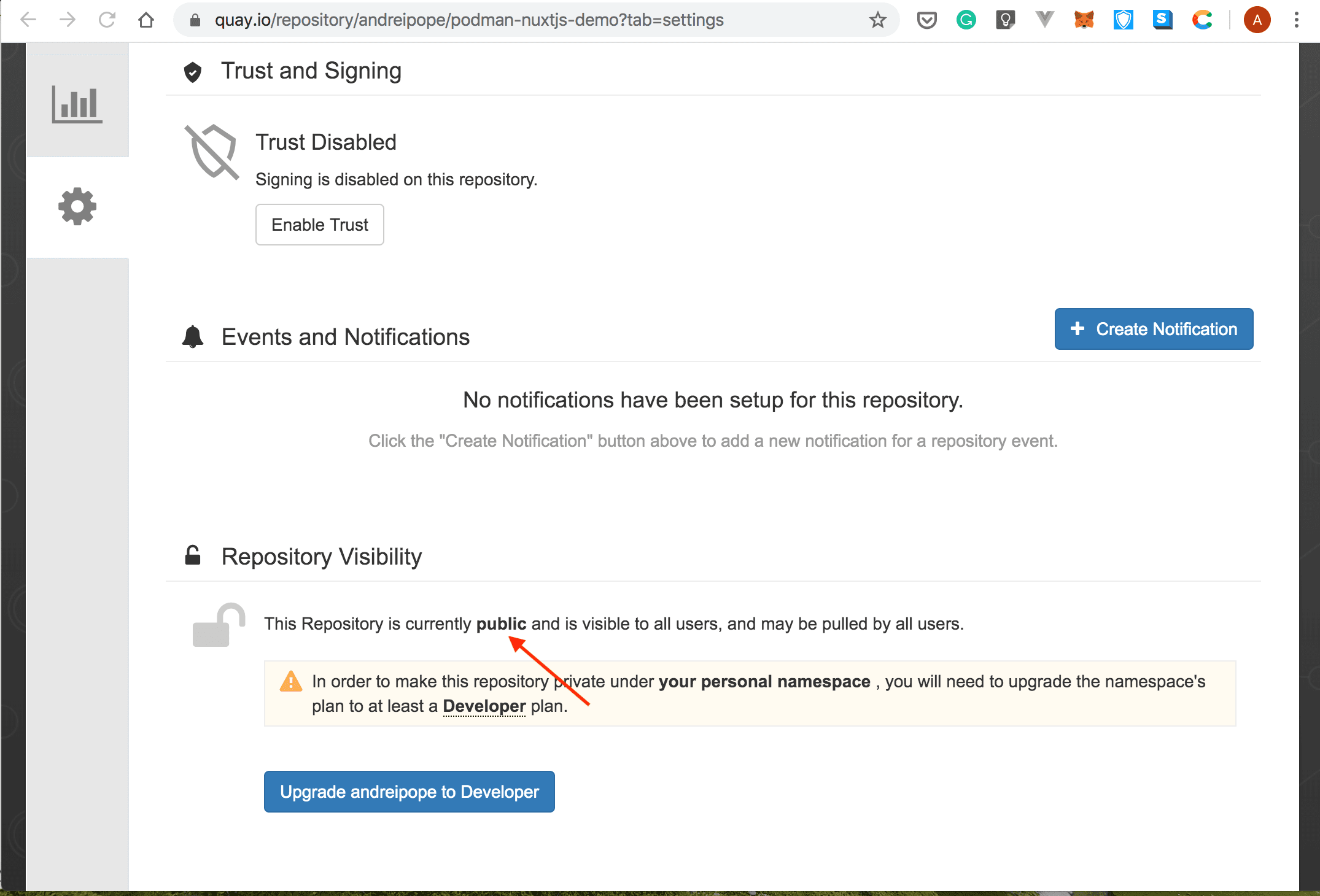

- Point your browser to https://quay.io/, navigate to the

podman-nuxtjs-demorepository, and make sure the repository is public:
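The login and push steps from this section could also be wrapped in a small script so that the username appears in only one place and the password is not passed on the command line. This is a minimal sketch: `quay_ref`, `push_to_quay`, `QUAY_USER`, and `QUAY_PASSWORD` are hypothetical names introduced here, and the sketch assumes your Podman version supports the `--password-stdin` option of `podman login`.

```shell
# Compose a fully qualified Quay.io reference: quay.io/<user>/<image>:<tag>.
quay_ref() {
  printf 'quay.io/%s/%s:%s\n' "$1" "$2" "$3"
}

# Log in and push <image>:<tag> to Quay.io.
# QUAY_USER and QUAY_PASSWORD are hypothetical environment variables that
# hold your username and the encrypted password generated earlier.
push_to_quay() {
  printf '%s' "$QUAY_PASSWORD" |
    podman login quay.io -u "$QUAY_USER" --password-stdin &&
    podman push "$1:$2" "$(quay_ref "$QUAY_USER" "$1" "$2")"
}
```

With both variables set, `push_to_quay podman-nuxtjs-demo podman` would push the local `podman-nuxtjs-demo:podman` image to your own Quay.io namespace.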

Run Your Podman Image with Docker

Container images are compatible between Podman and Docker. In this section, you’ll use Docker to pull the podman-nuxtjs-demo image from Quay.io and run it. Ideally, you would want to run this on a different machine.

- You can log in to Quay.io by entering the

docker logincommand and passing it the following parameters:

- The

-uflag with your username - The

-pflag with your encrypted password (you retrieved it from Quay.io in the previous section) - The name of the registry (

quay.io)

docker login -u="<YOUR_USER_NAME>" -p="YOUR_ENCRYPTED_PASSWORD" quay.ioWARNING! Using --password via the CLI is insecure. Use --password-stdin.Login Succeeded- To run the

podman-nuxtjs-demoimage, you can use the following command:

docker run -dt -p 3000:3000/tcp quay.io/andreipope/podman-nuxtjs-demo:podmanUnable to find image 'quay.io/andreipope/podman-nuxtjs-demo:podman' locallypodman: Pulling from andreipope/podman-nuxtjs-demo03644a8453bd: Pull completee2c9fbbb35b2: Pull complete0c33fe27c91c: Pull complete957ac2567af6: Pull complete934d2e09d84d: Pull complete50c60e376f59: Pull complete3c43a52a3ecc: Pull completee74942a3267a: Pull completeaf1466e8bc5b: Pull complete3f24948a552e: Pull completedf2fea35a007: Pull complete7045f2526057: Pull complete5090c2f6d806: Pull completeDigest: sha256:fcf90cfc3fe1d0f7e975db8a271003cdd51d6f177e490eb39ec1e44d3659b815Status: Downloaded newer image for quay.io/andreipope/podman-nuxtjs-demo:podman1c0981690d66f2cd8cb77e9573f1dd4e9d7700869e08797b42fc33590d8baabf- Wait a bit until Docker pulls the image and creates the container. Then, issue the the

docker pscommand to display the list of running containers:

docker psCONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES1c0981690d66 quay.io/andreipope/podman-nuxtjs-demo:podman "docker-entrypoint.s…" 25 seconds ago Up 20 seconds 0.0.0.0:3000->3000/tcp practical_bose- To make sure everything works as expected, point your browser to

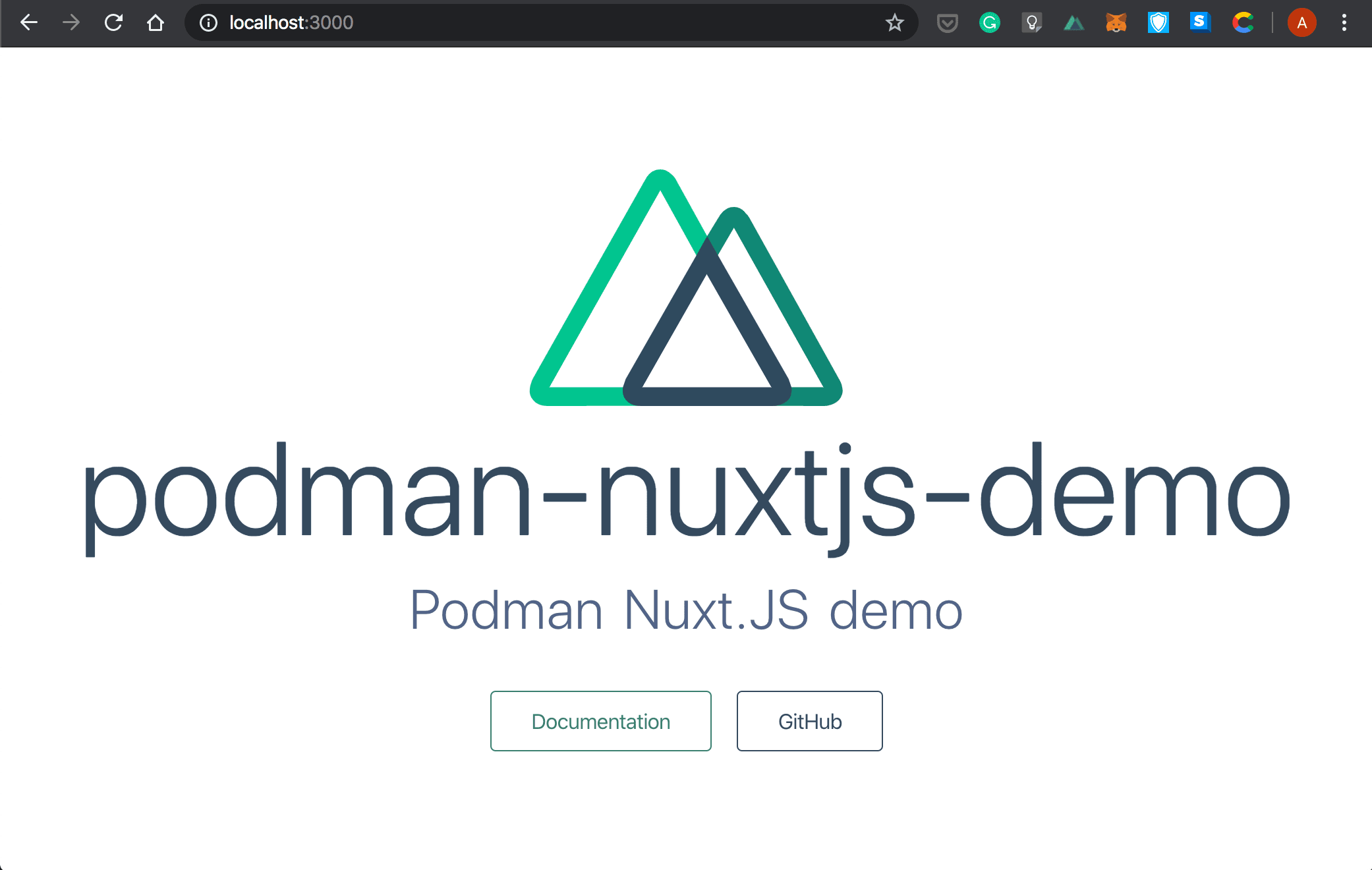

http://localhost:3000

. You should see something similar to the screenshot below:
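Because the development server needs some time to compile the project after the container starts, a scripted check may have to poll the URL instead of requesting it once. The helper below is a generic sketch, not something the tutorial prescribes: `retry` is a hypothetical function name, and the commented example assumes `curl` is installed.

```shell
# Run a command until it succeeds, at most <attempts> times,
# sleeping <delay> seconds between tries.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  until "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Example (assumes curl is installed): give the app about a minute to come up.
# retry 30 2 curl -fsS -o /dev/null http://localhost:3000/
```

The function returns a non-zero status when every attempt fails, so it can be chained with `&&` in a larger script.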

Creating Pods

Until now, you’ve used Podman similarly to how Docker is used. However, Podman brings a couple of new features such as the ability to create pods. A Pod is a group of tightly-coupled containers that share their storage and network resources. In a nutshell, you can use a Pod to model a logical host. In this section, we’ll walk you through the process of creating a Pod comprised of the podman-nuxtjs-demo container and a PostgreSQL database. Note that it is beyond the scope of this tutorial to show how you can configure the storage and network for your Pod.
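Since the containers in a Pod share one network namespace, they can reach each other over `localhost`, and published ports belong to the Pod rather than to a single container. As a rough sketch of that idea, the Pod could also be created explicitly with `podman pod create` before any container is added; the steps below achieve the same result with the `new:` shorthand. The `run` wrapper, the `DRY_RUN` switch, and `create_demo_pod` are hypothetical names introduced for this example.

```shell
# Print each command; execute it only when DRY_RUN is unset or 0.
# DRY_RUN is a hypothetical switch used by this sketch.
run() {
  printf '+ %s\n' "$*"
  if [ "${DRY_RUN:-0}" = "0" ]; then
    "$@"
  fi
}

# Create an empty Pod that publishes port 3000, then add two containers to it.
create_demo_pod() {
  pod=$1
  image=$2
  run podman pod create --name "$pod" -p 3000:3000 &&
    run podman run -dt --pod "$pod" "$image" &&
    run podman run -dt --pod "$pod" postgres:11-alpine
}
```

Setting `DRY_RUN=1` before calling `create_demo_pod podman_demo <image>` prints the three commands without running them, which is a cheap way to review what would happen.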

- Create a pod with the `podman-nuxtjs-demo` container. Enter the `podman run` command with the following arguments:

  - `-dt` to specify that the container should run in the background and that Podman should allocate a pseudo-TTY
  - `--pod` with the name of your new Pod. The `new:` prefix indicates that you want to create a new Pod. Without it, Podman tries to attach the container to an existing Pod.
  - `-p` with the port on the host (3000) that will be forwarded to the container port (3000), separated by a colon (`:`)
  - The name of your image (`quay.io/andreipope/podman-nuxtjs-demo:podman`)

    podman run -dt --pod new:podman_demo -p 3000:3000/tcp quay.io/andreipope/podman-nuxtjs-demo:podman

This will print the identifier of the new container:

    972c7c1db0c31a42ba4b41025078dfc6abb046f503aa413d6cca313068042041

- You can display the list of running Pods with the `podman pod list` command:

    podman pod list

    POD ID         NAME          STATUS    CREATED          # OF CONTAINERS   INFRA ID
    d15a2abd9d5b   podman_demo   Running   32 seconds ago   2                 6a5bc0360ae2

In the output above, the number of containers is 2. This is because every Podman Pod includes an Infra container, which does nothing but sleep. Its purpose is to hold the namespaces associated with the Pod so that Podman can attach other containers to the Pod.

- Print the list of running containers by entering the `podman ps` command followed by the `-a` and `-p` flags. This lists all containers and prints the identifiers and the names of the Pods your containers are associated with:

    podman ps -ap

    CONTAINER ID  IMAGE                                         COMMAND      CREATED         STATUS             PORTS                   NAMES               POD
    972c7c1db0c3  quay.io/andreipope/podman-nuxtjs-demo:podman  npm run dev  56 seconds ago  Up 55 seconds ago  0.0.0.0:3000->3000/tcp  festive_yonath      d15a2abd9d5b
    6a5bc0360ae2  k8s.gcr.io/pause:3.1                                       56 seconds ago  Up 55 seconds ago  0.0.0.0:3000->3000/tcp  d15a2abd9d5b-infra  d15a2abd9d5b

As you can see, the Infra container uses the k8s.gcr.io/pause image.

- Run the `postgres:11-alpine` image and associate it with the `podman_demo` Pod:

    podman run -dt --pod podman_demo postgres:11-alpine

    d395bed40988a953257b9501497c66b886b2fb6e81f48aa0ac89d7cfe2639b75

- This takes a bit of time to complete. Once everything is ready, you should see that the number of containers has increased to 3:

    podman pod list

    POD ID         NAME          STATUS    CREATED         # OF CONTAINERS   INFRA ID
    d15a2abd9d5b   podman_demo   Running   8 minutes ago   3                 6a5bc0360ae2

- You can display the list of your running containers with the following `podman ps` command:

    podman ps -ap

    CONTAINER ID  IMAGE                                         COMMAND      CREATED        STATUS            PORTS                   NAMES           POD
    ab5bd4810494  docker.io/library/postgres:11-alpine          postgres     5 minutes ago  Up 3 minutes ago  0.0.0.0:3000->3000/tcp  dreamy_jackson  d15a2abd9d5b
    972c7c1db0c3  quay.io/andreipope/podman-nuxtjs-demo:podman  npm run dev  9 minutes ago  Up 9 minutes ago  0.0.0.0:3000->3000/tcp  festive_yonath  d15a2abd9d5b
    6a5bc0360ae2  k8s.gcr.io/pause:3.1                                       9 minutes ago  Up 9 minutes ago

- As an example, you can stop the `podman-nuxtjs-demo` container. The other containers in the Pod won’t be affected, and the status of the Pod will still show as `Running`:

    podman stop 972c7c1db0c3

    972c7c1db0c31a42ba4b41025078dfc6abb046f503aa413d6cca313068042041

    podman pod ps

    POD ID         NAME          STATUS    CREATED          # OF CONTAINERS   INFRA ID
    d15a2abd9d5b   podman_demo   Running   12 minutes ago   3                 6a5bc0360ae2

- To start the container again, enter the `podman start` command followed by the identifier of the container you want to start:

    podman start 972c7c1db0c3

    972c7c1db0c31a42ba4b41025078dfc6abb046f503aa413d6cca313068042041

- At this point, if you run the `podman ps -ap` command, you should see that the status of the `podman-nuxtjs-demo` container is now `Up`:

    podman ps -ap

    CONTAINER ID  IMAGE                                         COMMAND      CREATED         STATUS             PORTS                   NAMES               POD
    ab5bd4810494  docker.io/library/postgres:11-alpine          postgres     7 minutes ago   Up 5 minutes ago   0.0.0.0:3000->3000/tcp  dreamy_jackson      d15a2abd9d5b
    972c7c1db0c3  quay.io/andreipope/podman-nuxtjs-demo:podman  npm run dev  14 minutes ago  Up 54 seconds ago  0.0.0.0:3000->3000/tcp  festive_yonath      d15a2abd9d5b
    6a5bc0360ae2  k8s.gcr.io/pause:3.1                                       14 minutes ago  Up 14 minutes ago  0.0.0.0:3000->3000/tcp  d15a2abd9d5b-infra  d15a2abd9d5b

- Lastly, let’s stop the `podman_demo` pod:

    podman pod stop podman_demo

    d15a2abd9d5bcb6f403515c0ed4dd4cb7df252a87591a88975b5573eb7f20900

- Enter the following command to make sure your Pod is stopped:

    podman pod ps

    POD ID         NAME          STATUS    CREATED          # OF CONTAINERS   INFRA ID
    d15a2abd9d5b   podman_demo   Stopped   17 minutes ago   3                 6a5bc0360ae2

Generate a Kubernetes Pod Spec with Podman

Podman can perform a snapshot of your container/Pod and generate a Kubernetes spec. This way, it makes it easier for you to orchestrate your containers with Kubernetes. For the scope of this section, we’ll illustrate how to use Podman to generate a Kubernetes spec and deploy your Pod to Kubernetes.
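A generated spec usually needs a few manual edits before it is deployed, namely a meaningful Pod name and label, and an image address that points to a registry. Those edits could be scripted with `sed`, as in the sketch below. It is illustrative only: `retarget_spec` is a hypothetical helper, and it assumes the two-space YAML layout that `podman generate kube` produced in this tutorial, where `metadata.name` is indented by exactly two spaces.

```shell
# Rewrite a generated spec read from stdin: set the Pod name and the app label
# to <name> and point the container image at <image>. Prints to stdout.
# Assumes metadata.name is the only "name:" key indented by two spaces.
retarget_spec() {
  name=$1
  image=$2
  sed -e "s|^\(  name:\) .*|\1 $name|" \
      -e "s|^\( *app:\) .*|\1 $name|" \
      -e "s|^\( *image:\) .*|\1 $image|"
}
```

For example, piping the output of `podman generate kube <CONTAINER_ID>` through `retarget_spec podman-nuxtjs-demo quay.io/<YOUR_USER_NAME>/podman-nuxtjs-demo:podman` and redirecting it to `podman-nuxtjs-demo.yaml` would apply both changes in one step. Review the resulting file before deploying it, because a text substitution like this knows nothing about YAML structure.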

- To create a Kubernetes spec for a container and save it into a file called `podman-nuxtjs-demo.yaml`, run the following `podman generate kube` command:

    podman generate kube <CONTAINER_ID> > podman-nuxtjs-demo.yaml

- Let’s take a look at what’s inside the `podman-nuxtjs-demo.yaml` file:

    cat podman-nuxtjs-demo.yaml

    # Generation of Kubernetes YAML is still under development!
    #
    # Save the output of this file and use kubectl create -f to import
    # it into Kubernetes.
    #
    # Created with podman-1.6.4
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: "2020-02-12T05:21:44Z"
      labels:
        app: objectiveneumann
      name: objectiveneumann
    spec:
      containers:
      - command:
        - npm
        - run
        - dev
        env:
        - name: PATH
          value: /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
        - name: TERM
          value: xterm
        - name: HOSTNAME
        - name: container
          value: podman
        - name: NODE_VERSION
          value: 10.19.0
        - name: YARN_VERSION
          value: 1.21.1
        - name: NUXT_HOST
          value: 0.0.0.0
        - name: NUXT_PORT
          value: "3000"
        image: localhost/podman-nuxtjs-demo:podman
        name: objectiveneumann
        ports:
        - containerPort: 3000
          hostPort: 3000
          protocol: TCP
        resources: {}
        securityContext:
          allowPrivilegeEscalation: true
          capabilities: {}
          privileged: false
          readOnlyRootFilesystem: false
        tty: true
        workingDir: /usr/src/nuxt-app
    status: {}

There is a lot of output here, but the parts we’re interested in are:

- `metadata.labels.app` and `metadata.name`. You’ll have to give them more meaningful names.
- `spec.containers.image`. Since in real life you’ll have to pull the images from a registry, you must replace `localhost/podman-nuxtjs-demo:podman` with the address of your Quay.io container image.

- Edit the content of the `podman-nuxtjs-demo.yaml` file to the following:

    # Generation of Kubernetes YAML is still under development!
    #
    # Save the output of this file and use kubectl create -f to import
    # it into Kubernetes.
    #
    # Created with podman-1.6.4
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: "2020-02-12T05:24:44Z"
      labels:
        app: podman-nuxtjs-demo
      name: podman-nuxtjs-demo
    spec:
      containers:
      - command:
        - npm
        - run
        - dev
        env:
        - name: PATH
          value: /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
        - name: TERM
          value: xterm
        - name: HOSTNAME
        - name: container
          value: podman
        - name: NODE_VERSION
          value: 10.19.0
        - name: YARN_VERSION
          value: 1.21.1
        - name: NUXT_HOST
          value: 0.0.0.0
        - name: NUXT_PORT
          value: "3000"
        image: quay.io/andreipope/podman-nuxtjs-demo:podman
        name: objectiveneumann
        ports:
        - containerPort: 3000
          hostPort: 3000
          protocol: TCP
        resources: {}
        securityContext:
          allowPrivilegeEscalation: true
          capabilities: {}
          privileged: false
          readOnlyRootFilesystem: false
        tty: true
        workingDir: /usr/src/nuxt-app
    status: {}

The above spec uses the address of our container image, quay.io/andreipope/podman-nuxtjs-demo:podman. Make sure you replace this with your address.

- Now, if your Quay.io repository is private, Kubernetes must authenticate with the registry to pull the image. Point your browser to http://quay.io, and then navigate to the Settings section of your repository. Select Generate Encrypted Password, and you’ll be asked to type your password. From the sidebar on the left, select Kubernetes Secret to download your Kubernetes secrets file:

- Next, you must refer to this Kubernetes secret from the `podman-nuxtjs-demo.yaml` file. You can do this by adding a field similar to the one below to the `spec` section, at the same level as `containers`:

      imagePullSecrets:
      - name: andreipope-pull-secret

Note that the name of our Kubernetes secret is andreipope-pull-secret, but yours will be different.

At this point, your podman-nuxtjs-demo.yaml file should look something like the following:

    # Generation of Kubernetes YAML is still under development!
    #
    # Save the output of this file and use kubectl create -f to import
    # it into Kubernetes.
    #
    # Created with podman-1.6.4
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: "2020-02-12T05:24:44Z"
      labels:
        app: podman-nuxtjs-demo
      name: podman-nuxtjs-demo
    spec:
      containers:
      - command:
        - npm
        - run
        - dev
        env:
        - name: PATH
          value: /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
        - name: TERM
          value: xterm
        - name: HOSTNAME
        - name: container
          value: podman
        - name: NODE_VERSION
          value: 10.19.0
        - name: YARN_VERSION
          value: 1.21.1
        - name: NUXT_HOST
          value: 0.0.0.0
        - name: NUXT_PORT
          value: "3000"
        image: quay.io/andreipope/podman-nuxtjs-demo:podman
        name: objectiveneumann
        ports:
        - containerPort: 3000
          hostPort: 3000
          protocol: TCP
        resources: {}
        securityContext:
          allowPrivilegeEscalation: true
          capabilities: {}
          privileged: false
          readOnlyRootFilesystem: false
        tty: true
        workingDir: /usr/src/nuxt-app
      imagePullSecrets:
      - name: andreipope-pull-secret
    status: {}

Create a Kubernetes Cluster with Kind (Optional)

Kind is a tool for running local Kubernetes clusters using Docker container “nodes”. Follow the steps in this section if you don’t have a running Kubernetes cluster:

- Create a file called `cluster.yaml` with the following content:

    # three node (two workers) cluster config
    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    nodes:
    - role: control-plane
    - role: worker
    - role: worker

- Create the cluster from this configuration:

    kind create cluster --config cluster.yaml

    Creating cluster "kind" ...
     ✓ Ensuring node image (kindest/node:v1.16.3) 🖼
     ✓ Preparing nodes 📦
     ✓ Writing configuration 📜
     ✓ Starting control-plane 🕹️
     ✓ Installing CNI 🔌
     ✓ Installing StorageClass 💾
     ✓ Joining worker nodes 🚜
    Set kubectl context to "kind-kind"
    You can now use your cluster with:

This creates a Kubernetes cluster with a control plane and two worker nodes.
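If you want to experiment with a different number of workers, the configuration file can be generated instead of written by hand. The following sketch prints a configuration with the same fields as the one used above; `kind_config` is a hypothetical helper name, not part of Kind.

```shell
# Print a Kind cluster config with one control-plane node and <n> workers.
kind_config() {
  workers=$1
  printf '%s\n' 'kind: Cluster' 'apiVersion: kind.x-k8s.io/v1alpha4' 'nodes:' '- role: control-plane'
  i=0
  while [ "$i" -lt "$workers" ]; do
    printf '%s\n' '- role: worker'
    i=$((i + 1))
  done
}
```

Running `kind_config 2 > cluster.yaml` reproduces the three-node layout from this section, and the file can then be passed to `kind create cluster --config cluster.yaml` as before.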

Deploying to Kubernetes

- Apply your Kubernetes pull secrets spec. Enter the `kubectl create` command specifying:

  - The `-f` flag with the name of the file (our example uses a file named `andreipope-secret.yml`)
  - The `--namespace` flag with the name of your namespace (we’re using the `default` namespace)

    kubectl create -f andreipope-secret.yml --namespace=default

    secret/andreipope-pull-secret created

- Now you’re ready to apply the `podman-nuxtjs-demo` spec:

    kubectl apply -f podman-nuxtjs-demo.yaml

    pod/podman-nuxtjs-demo created

- Monitor the status of your installation with:

    kubectl get pods

    NAME                 READY   STATUS              RESTARTS   AGE
    podman-nuxtjs-demo   0/1     ContainerCreating   0          85s

- You can retrieve more details about the status of your installation by entering the `kubectl describe pod` command followed by the name of your Pod:

    kubectl describe pod podman-nuxtjs-demo

    Name:         podman-nuxtjs-demo
    Namespace:    default
    Priority:     0
    Node:         kind-worker2/172.17.0.3
    Start Time:   Wed, 12 Feb 2020 19:36:37 +0200
    Labels:       app=podman-nuxtjs-demo
    Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                    {"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"creationTimestamp":"2020-02-12T05:24:44Z","labels":{"app":"podman-nuxtjs-dem...
    Status:       Pending
    IP:
    IPs:          <none>
    Containers:
      objectiveneumann:
        Container ID:
        Image:          quay.io/andreipope/podman-nuxtjs-demo:podman
        Image ID:
        Port:           3000/TCP
        Host Port:      3000/TCP
        Command:
          npm
          run
          dev
        State:          Waiting
          Reason:       ContainerCreating
        Ready:          False
        Restart Count:  0
        Requests:
          memory:  1Gi
        Environment:
          PATH:          /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
          TERM:          xterm
          HOSTNAME:
          container:     podman
          NODE_VERSION:  10.19.0
          YARN_VERSION:  1.21.1
          NUXT_HOST:     0.0.0.0
          NUXT_PORT:     3000
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-rp6n5 (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      default-token-rp6n5:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-rp6n5
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type    Reason     Age   From                   Message
      ----    ------     ----  ----                   -------
      Normal  Scheduled  57s   default-scheduler      Successfully assigned default/podman-nuxtjs-demo to kind-worker2
      Normal  Pulling    55s   kubelet, kind-worker2  Pulling image "quay.io/andreipope/podman-nuxtjs-demo:podman"

As an alternative, you can list events with the following command:

    kubectl get events

    LAST SEEN   TYPE     REASON                    OBJECT                    MESSAGE
    4m55s       Normal   RegisteredNode            node/kind-control-plane   Node kind-control-plane event: Registered Node kind-control-plane in Controller
    4m37s       Normal   Starting                  node/kind-control-plane   Starting kube-proxy.
    4m36s       Normal   NodeHasSufficientMemory   node/kind-worker          Node kind-worker status is now: NodeHasSufficientMemory
    4m36s       Normal   NodeHasNoDiskPressure     node/kind-worker          Node kind-worker status is now: NodeHasNoDiskPressure
    4m36s       Normal   NodeHasSufficientPID      node/kind-worker          Node kind-worker status is now: NodeHasSufficientPID
    4m35s       Normal   RegisteredNode            node/kind-worker          Node kind-worker event: Registered Node kind-worker in Controller
    4m15s       Normal   Starting                  node/kind-worker          Starting kube-proxy.
    3m36s       Normal   NodeReady                 node/kind-worker          Node kind-worker status is now: NodeReady
    4m34s       Normal   NodeHasSufficientMemory   node/kind-worker2         Node kind-worker2 status is now: NodeHasSufficientMemory
    4m34s       Normal   NodeHasNoDiskPressure     node/kind-worker2         Node kind-worker2 status is now: NodeHasNoDiskPressure
    4m34s       Normal   NodeHasSufficientPID      node/kind-worker2         Node kind-worker2 status is now: NodeHasSufficientPID
    4m30s       Normal   RegisteredNode            node/kind-worker2         Node kind-worker2 event: Registered Node kind-worker2 in Controller
    4m15s       Normal   Starting                  node/kind-worker2         Starting kube-proxy.
    3m34s       Normal   NodeReady                 node/kind-worker2         Node kind-worker2 status is now: NodeReady
    3m29s       Normal   Scheduled                 pod/podman-nuxtjs-demo    Successfully assigned default/podman-nuxtjs-demo to kind-worker2
    3m27s       Normal   Pulling                   pod/podman-nuxtjs-demo    Pulling image "quay.io/andreipope/podman-nuxtjs-demo:podman"

- Wait a bit until the pod is created. Then, you can list all pods with:

    kubectl get pods

    NAME                 READY   STATUS    RESTARTS   AGE
    podman-nuxtjs-demo   1/1     Running   0          7m34s

- Now let’s forward all requests made to http://localhost:3000 to port 3000 on the `podman-nuxtjs-demo` Pod:

    kubectl port-forward pod/podman-nuxtjs-demo 3000:3000

    Forwarding from 127.0.0.1:3000 -> 3000
    Forwarding from [::1]:3000 -> 3000
    Handling connection for 3000
    Handling connection for 3000
    Handling connection for 3000
    Handling connection for 3000
    Handling connection for 3000
    Handling connection for 3000
    Handling connection for 3000

- Point your browser to http://localhost:3000. If everything works well, you should see something like the following:

Congratulations on completing this tutorial! You now know enough to use Podman as a replacement for Docker. Stay tuned for our next tutorials where, amongst many other things, you’ll learn how to use Buildah.

Thanks for reading!
