$0

Adaptive bitrate encoding at no cost

210+ points of presence

Local and global infrastructure for Streaming, CDN and WebRTC

∞

Removing the limitations and problems of your current solution

Get optimised streaming performance with ease

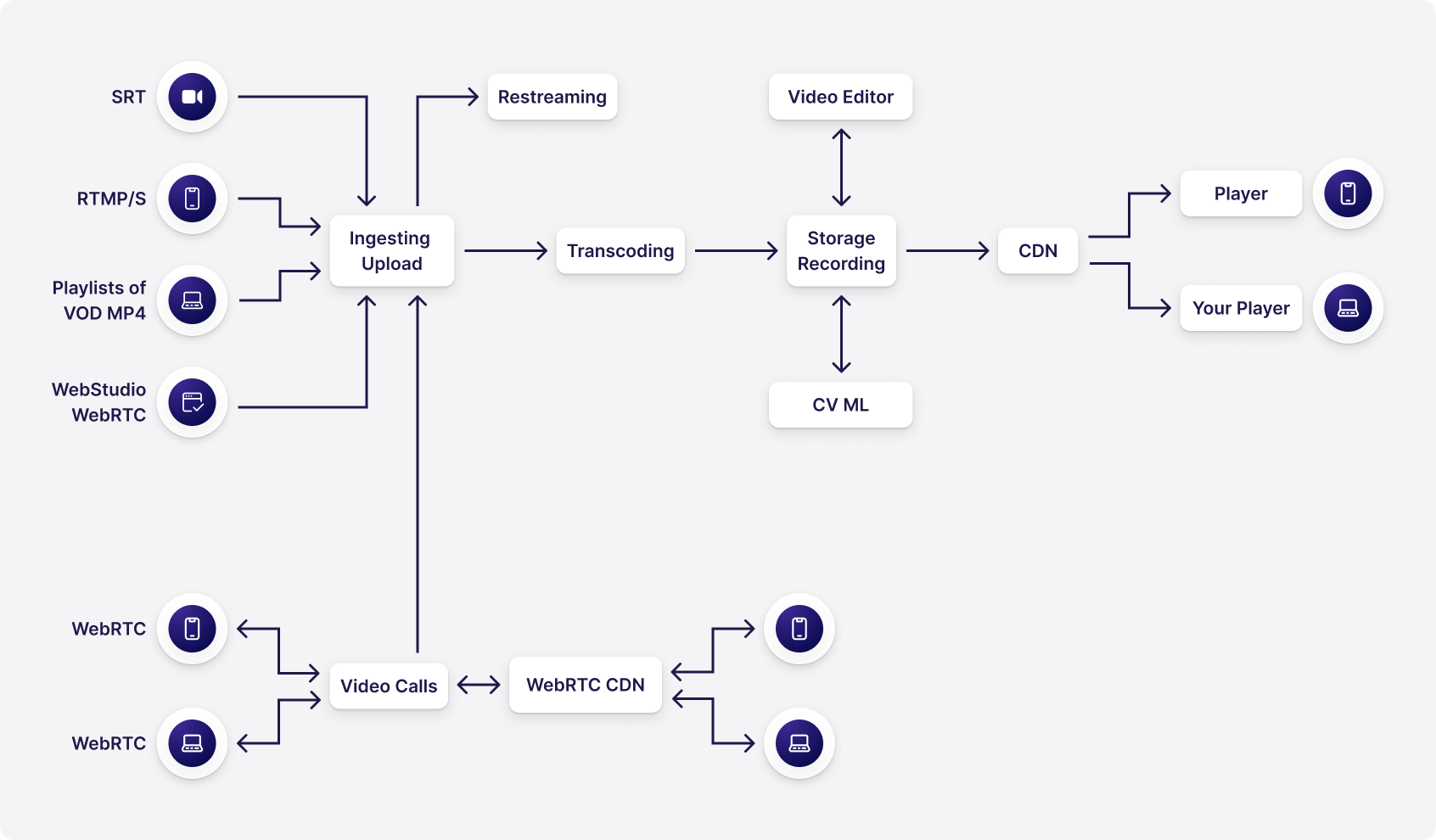

We support all aspects of streaming in one platform: upload, live ingest, transcoding, recording, storage, machine-learning video processing, CDN delivery, multistreaming, an embedded player, and playback.


Happy Clients

From industries

Features

Ready to get started?

14 day free trial

Geography of streaming services

Points of video hosting and receiving live streams

CDN points of broadcasting video to end-viewers (total capacity >100 million viewers)

Soon opening points of video hosting and receiving live streams

Soon opening CDN points of broadcasting video to end-viewers

Pricing

Workflow ready

Video infrastructure as developers see it.

Just use a simple dashboard or integrate your mobile app, website, corporate CMS, or other software with an SDK and API.

Industry content you may like

Gcore partners with AVEQ to elevate streaming performance monitoring

At Gcore, delivering exceptional streaming experiences to users across our global network is at the heart of what we do. We're excited to share how we're taking our CDN performance monitoring to new heights through our partnership with AVEQ and their innovative Surfmeter solution.

Operating a massive global network spanning 210 points of presence across six continents comes with unique challenges. While our globally distributed caching infrastructure already ensures optimal performance for end-users, we recognized the need for deeper insights into the complex interactions between applications and our underlying network. We needed to move beyond traditional server-side monitoring to truly understand what our customers' users experience in the real world.

Real-world performance visibility

That's where AVEQ's Surfmeter comes in. We're now using Surfmeter to gain unprecedented visibility into our network performance through automated, active measurements that simulate actual streaming video quality, exactly as end-users experience it.

This isn't about checking boxes or reviewing server logs. It's about measuring what users see on their screens at home. With Surfmeter, our engineering teams can identify and resolve potential bottlenecks or suboptimal configurations, and collaborate more effectively with our customers to continuously improve Quality of Experience (QoE).

How we use Surfmeter

AVEQ helps us simulate and analyze real-world scenarios where users access different video streams. Their software runs both on network nodes close to the CDN caches in our data centers and at selected end-user locations with genuine ISP connections.

What sets Surfmeter apart is its authentic approach: it opens video streams from the same platforms and players that end-users rely on, ensuring measurements truly represent real-world conditions.
Unlike monitoring solutions that simply check stream availability, Surfmeter doesn't make assumptions or use third-party playback engines. Instead, it precisely replicates how video players request and decode data served from our CDN.

Rapid issue resolution

When performance issues arise, Surfmeter provides our engineers with the deep insights needed to quickly identify root causes. Whether the problem lies within our CDN, with peering partners, or on the server side, we can pinpoint it with precision.

By monitoring individual video requests, including headers and timings, and combining this data with our internal logging, we gain complete visibility into our entire streaming pipeline. Surfmeter can also run ping and traceroute tests from the same device that measures video QoE. All collected data is available through a single API, so our engineers don't have to connect to devices manually for troubleshooting.

Competitive benchmarking and future capabilities

Surfmeter also enables us to benchmark our performance against other services and network providers. By deploying Surfmeter probes at customer-like locations, we can measure streaming from any source via different ISPs.

This partnership reflects our commitment to transparency and data-driven service excellence. By leveraging AVEQ's Surfmeter solution, we ensure that our customers receive the best possible streaming performance, backed by objective, end-user-centric insights.

Learn more about Gcore CDN

30 Oct 2025 How we engineered a single pipeline for LL-HLS and LL-DASH

Viewers in sports, gaming, and interactive events expect real-time, low-latency streaming experiences. To deliver this, the industry has rallied around two powerful protocols: Low-Latency HLS (LL-HLS) and Low-Latency DASH (LL-DASH).

While they share a goal, their methods are fundamentally different. LL-HLS delivers video as a sequence of tiny, discrete files. LL-DASH delivers it as a continuous, chunked download of a larger file. This isn't just a minor difference in syntax; it implies completely different behaviors for the packager, the CDN, and the player.

This duality presents a major architectural challenge: how do you build a single, efficient, and cost-effective pipeline that can serve both protocols simultaneously from one source?

At Gcore, we took on this unification problem. The result is a robust, single-source pipeline that delivers streams with a glass-to-glass latency of approximately 2.0 seconds for LL-DASH and 3.0 seconds for LL-HLS. This is the story of how we designed it.

Understanding the duality

To build a unified system, we first had to deeply understand the differences in how each protocol operates at the delivery level.

LL-DASH: the continuous feed

MPEG-DASH has always been flexible, using a single manifest file to define media segments by their timing. Low-Latency DASH builds on this by using Chunked CMAF segments.

Imagine a file that is still being written to on the server. Instead of waiting for the whole file to be finished, the player can request it, and the server can send it piece by piece using Chunked Transfer Encoding. The player receives a continuous stream of bytes and can start playback as soon as it has enough data.

- Single, long-lived files: A segment might be 2–6 seconds long, but it's delivered as it's being generated.
- Timing-based requests: The player knows when a segment should be available and requests it.
  The server uses chunked transfer to send what it has so far.
- Player-driven latency: The manifest contains a targetLatency attribute, giving the player a strong hint about how close to the live edge it should play.

LL-HLS: the rapid-fire delivery

LL-HLS takes a different approach. It extends traditional playlist-based HLS by breaking segments into even smaller chunks called Parts.

Think of it like getting breaking news updates. The server pre-announces upcoming Parts in the manifest before they are fully available. When the player requests a Part, the server holds that request open until the Part is ready and then delivers it at full speed. Manifest requests work the same way: the player asks for the next playlist update, and the server holds the request until that update exists. This mechanism is called Blocking Playlist Reload.

- Many tiny files (Parts): A 2-second segment might be broken into four 0.5-second Parts, each requested individually.
- Manifest-driven updates: The server constantly updates the manifest with new Parts and uses special tags such as #EXT-X-PART-INF and #EXT-X-SERVER-CONTROL to manage delivery.
- Server-enforced timing: The server controls when the player receives data by holding onto requests, which helps synchronize all viewers.

A simplified diagram visually comparing the LL-HLS delivery of many small parts versus the LL-DASH chunked transfer of a single, larger segment over the same time period.

These two philosophies demand different things from a CDN. LL-DASH requires the CDN to intelligently cache and serve partially complete files. LL-HLS requires the CDN to handle a massive volume of short, bursty requests and hold connections open for manifest updates. A traditional CDN is optimized for neither.

Forging a unified strategy

With two different delivery models, where do you start? You find the one thing they both depend on: the keyframe.

Playback can only start from a keyframe (or I-frame). Therefore, the placement of keyframes, which defines the Group of Pictures (GOP), is the foundational layer that both protocols must respect.
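The LL-HLS tags and the keyframe constraint can be made concrete with a minimal Python sketch that builds a media playlist for a single segment split into Parts. It assumes the 2-second segments, 0.5-second Parts, and 1-second GOP that this post settles on below. The file names (init.mp4, seg100.part0.m4s, and so on) are hypothetical. PART-HOLD-BACK is set to three Part targets, as the LL-HLS specification recommends. Parts that begin on a keyframe are flagged INDEPENDENT=YES, and EXT-X-PRELOAD-HINT pre-announces the next Part. A real packager keeps many more entries and lists Parts only for the most recent segments, so treat this as an illustration rather than Gcore's packager output.

```python
import math


def ll_hls_playlist(msn: int, segment_ms: int = 2000, part_ms: int = 500,
                    gop_ms: int = 1000) -> list[str]:
    """Build playlist lines for one segment (media sequence msn) and its Parts.

    File names are hypothetical placeholders.
    """
    # Parts, GOPs, and segments must nest evenly on one shared timeline.
    if segment_ms % part_ms or segment_ms % gop_ms or gop_ms % part_ms:
        raise ValueError("segment, GOP and part durations must nest evenly")
    part_s = part_ms / 1000
    lines = [
        "#EXTM3U",
        f"#EXT-X-TARGETDURATION:{math.ceil(segment_ms / 1000)}",
        # Clients may block on reloads; hold back three Part targets.
        f"#EXT-X-SERVER-CONTROL:CAN-BLOCK-RELOAD=YES,"
        f"PART-HOLD-BACK={3 * part_s:.3f}",
        f"#EXT-X-PART-INF:PART-TARGET={part_s:.3f}",
        f"#EXT-X-MEDIA-SEQUENCE:{msn}",
        '#EXT-X-MAP:URI="init.mp4"',
    ]
    for i in range(segment_ms // part_ms):
        attrs = f'DURATION={part_s:.3f},URI="seg{msn}.part{i}.m4s"'
        # A Part is independently decodable only if it starts on a keyframe.
        if (i * part_ms) % gop_ms == 0:
            attrs += ",INDEPENDENT=YES"
        lines.append(f"#EXT-X-PART:{attrs}")
    lines += [
        f"#EXTINF:{segment_ms / 1000:.3f},",
        f"seg{msn}.m4s",
        # Pre-announce the first Part of the next segment.
        f'#EXT-X-PRELOAD-HINT:TYPE=PART,URI="seg{msn + 1}.part0.m4s"',
    ]
    return lines


if __name__ == "__main__":
    print("\n".join(ll_hls_playlist(msn=100)))
```

With a 1-second GOP and 0.5-second Parts, every other Part carries INDEPENDENT=YES. This is why the GOP length bounds how quickly a player can join the stream.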
By enforcing a consistent keyframe interval on the source stream, we could create a predictable media timeline. This timeline can then be described in two different “languages” in the manifests for LL-HLS and LL-DASH.

A single timeline with consistent GOPs being packaged for both protocols.

This realization led us to a baseline configuration, but each parameter involved a critical engineering trade-off:

- GOP: 1 second. We chose a frequent, 1-second GOP. The primary benefit is extremely fast stream acquisition: a player never has to wait more than a second for a keyframe to begin playback. The trade-off is a higher bitrate. A 1-second GOP can increase bitrate by 10–15% compared to a more standard 2-second GOP because you're storing more full-frame data. For real-time, interactive use cases, we prioritized startup speed over bitrate savings.
- Segment Size: 2 seconds. A 2-second segment duration is a sweet spot. For LL-DASH and modern HLS players, it's long enough to keep manifest sizes manageable. For older, standard HLS clients, it's short enough to keep them from falling too far behind the live edge, so latency stays reduced even on legacy players.
- Part Size: 0.5 seconds. For LL-HLS, this means we deliver four updates per segment. This frequency is aggressive enough to achieve sub-3-second latency while being coarse enough to avoid overwhelming networks with excessive request overhead, which can happen with part durations in the 100–200 ms range.

Cascading challenges through the pipeline

1. Ingest: predictability is paramount

To produce a clean, synchronized output, you need a clean, predictable input. We found that the encoder settings of the source stream are critical.
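One quick way to check whether a source meets this bar is to look at its keyframe timestamps, which can be exported with a tool such as ffprobe. The hypothetical helpers below are a sketch, not Gcore tooling. They derive the encoder keyframe interval from the frame rate and the 1-second GOP. They also flag any keyframe spacing that drifts from that GOP, such as an extra keyframe inserted by scene-cut detection. For fractional frame rates such as 29.97 FPS, tolerance_s would need to be loosened.

```python
def keyint_frames(fps: float, gop_s: float) -> int:
    """Encoder keyframe interval in frames, e.g. 30 for 30 FPS and a 1 s GOP."""
    return round(fps * gop_s)


def irregular_gops(keyframe_times: list[float], gop_s: float,
                   tolerance_s: float = 0.001) -> list[tuple[float, float]]:
    """Return (previous, current) keyframe time pairs whose spacing is not gop_s."""
    irregular = []
    for prev, cur in zip(keyframe_times, keyframe_times[1:]):
        if abs((cur - prev) - gop_s) > tolerance_s:
            irregular.append((prev, cur))
    return irregular


if __name__ == "__main__":
    print(keyint_frames(30, 1.0))  # 30
    # A scene-cut keyframe at 2.4 s splits the 2-3 s GOP in two.
    print(irregular_gops([0.0, 1.0, 2.0, 2.4, 3.0], gop_s=1.0))
    # [(2.0, 2.4), (2.4, 3.0)]
```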
An unstable source with a variable bitrate or erratic keyframe placement will wreck any attempt at low-latency delivery.

For our users, we recommend settings that prioritize speed and predictability over compression efficiency:

- Rate control: Constant Bitrate (CBR)
- Keyframe interval: A fixed interval (e.g., every 30 frames for 30 FPS, to match our 1-second GOP)
- Encoder tune: zerolatency
- Advanced options: Disable B-frames (bframes=0) and scene-cut detection (scenecut=0) to ensure keyframes are placed exactly where you command them to be

Here is an example ffmpeg command in Bash that encapsulates these principles:

```bash
# Assumes a 30 FPS source, so keyint=30 yields a 1-second GOP.
# The 3000k bitrate is only an example value; setting -b:v, -maxrate and
# -bufsize to the same value approximates CBR with libx264.
ffmpeg -re -i "source.mp4" -c:a aac -c:v libx264 \
  -profile:v baseline -tune zerolatency -preset veryfast \
  -b:v 3000k -maxrate 3000k -bufsize 3000k \
  -x264-params "bframes=0:scenecut=0:keyint=30" \
  -f flv "rtmp://your-ingest-url"
```

2. Transcoding and packaging

Our transcoding and Just-In-Time Packaging (JITP) layer is where the unification truly happens. This component does more than just convert codecs; it has to operate on a stream that is fundamentally incomplete.

The primary challenge is that the packager must generate manifests and parts from media files that are still being written by the transcoder. This requires a tightly coupled architecture in which the packager can safely read from the transcoder's buffer.

To handle the unpredictable nature of live sources, especially user-generated content via WebRTC, we use a hybrid workflow:

- GPU Workers (NVIDIA/Intel): These handle the heavy lifting of decoding and encoding. Offloading to GPU hardware is crucial for minimizing processing latency and preserving advanced color formats like HDR+.
- Software Workers and Filters: These provide flexibility. When a live stream from a mobile device suddenly changes resolution or its framerate drops due to a poor connection, a rigid hardware pipeline would crash.
  Our software layer can handle these context changes gracefully, for instance by scaling the erratic source and overlaying it on a stable, black-background canvas, meaning the output stream never stops.

This makes our JITP a universal packager, creating three synchronized content types from a single, resilient source:

- LL-DASH (CMAF)
- LL-HLS (CMAF)
- Standard HLS (MPEG-TS) for backward compatibility

3. CDN delivery: solving two problems at once

This was the most intensive part of the engineering effort. Our CDN had to be taught how to excel at two completely different, high-performance tasks simultaneously.

For LL-DASH, we developed a custom caching module we call chunked-proxy. When the first request for a new .m4s segment arrives, our edge server requests it from the origin. As bytes flow in from the origin, the chunked-proxy immediately forwards them to the client. When a second client requests the same file, our edge server serves all the bytes it has already cached and then appends the new bytes to both clients' streams simultaneously. It's a multi-client cache for in-flight data.

For LL-HLS, the challenges were different:

- Handling Blocked Requests: Our edge servers needed to be optimized to hold thousands of manifest requests open for hundreds of milliseconds without consuming excessive resources.
- Intelligent Caching: We needed to correctly handle cache statuses (MISS, EXPIRED) for manifests to ensure only one request goes to the origin per update, preventing a "thundering herd" problem.
- High Request Volume: LL-HLS generates a storm of requests for tiny part files. Our infrastructure was scaled and optimized to serve these small files with minimal overhead.

The payoff: ultimate flexibility for developers

This engineering effort wasn't just an academic exercise. It provides tangible benefits to developers building with our platform.
The primary benefit is simplicity through unification, but the most powerful benefit is the ability to optimize for every platform.

Consider the complex landscape of Apple devices. With our unified pipeline, you can create player logic that does this:

- On iOS 17.1+: Use LL-DASH with the new Managed Media Source (MMS) API for ~2.0-second latency.
- On iOS 14.0–17.0: Use native LL-HLS for ~3.0-second latency.
- On older iOS versions: Automatically fall back to standard HLS with a reduced latency of ~9 seconds.

This lets you provide the best possible experience on every device, all from a single backend and a single live source, without any extra configuration.

Don't fly blind: observability in a low-latency world

A complex system is useless without visibility, and traditional metrics can be misleading for low-latency streaming. Simply looking at response_time from a CDN log is not enough.

We had to rethink what to measure. For example:

- For an LL-HLS manifest, a high response_time (e.g., 500 ms) is expected behavior, as it reflects the server correctly holding the request while waiting for the next part. A low response_time could actually indicate a problem. We monitor “Manifest Hold Time” to ensure this blocking mechanism is working as intended.
- For LL-DASH, a player requesting a chunk that isn't ready yet might receive a 404 Not Found error. While occasional 404s are normal, a spike can indicate origin-to-edge latency issues. This metric, combined with monitoring player liveCatchup behavior, gives a true picture of stream health.

Gcore: one pipeline to serve them all

The paths of LL-HLS and LL-DASH may be different, but their destination is the same: real-time interaction with a global audience.
By starting with a common foundation (the keyframe) and custom-engineering every component of our pipeline to handle this duality, we successfully solved the unification problem.

The result is a single, robust system that gives developers the power of both protocols without the complexity of running two separate infrastructures. It's how we deliver ~2.0 s latency with LL-DASH and ~3.0 s with LL-HLS, and it's the foundation upon which we'll build to push the boundaries of real-time streaming even further.
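The chunked-proxy behavior described in the CDN delivery section can be illustrated with a deliberately simplified, single-threaded Python model of a multi-client cache for in-flight data. The class and method names are hypothetical. The model has no networking and no Chunked Transfer Encoding, and each client is represented by a callback that receives bytes. It shows only the core idea. Bytes from the origin are cached and forwarded as they arrive. A client that joins mid-download first receives everything cached so far and then shares the live tail. Once the segment is complete, later requests become plain cache hits.

```python
from typing import Callable

# A client is modeled as a callback that receives bytes (hypothetical).
Send = Callable[[bytes], None]


class InFlightSegment:
    """Toy model of one .m4s segment still being fetched from the origin."""

    def __init__(self) -> None:
        self._cached = bytearray()      # bytes received from the origin so far
        self._clients: list[Send] = []  # clients still waiting for new bytes
        self.complete = False

    def attach(self, send: Send) -> None:
        """Handle a client request for this segment.

        The caller is assumed to start the origin fetch on the first request.
        """
        if self._cached:
            send(bytes(self._cached))  # replay everything cached so far
        if not self.complete:
            self._clients.append(send)  # then stream new bytes as they arrive

    def on_origin_chunk(self, chunk: bytes) -> None:
        """Cache a chunk from the origin and fan it out to all clients."""
        self._cached.extend(chunk)
        for send in self._clients:
            send(chunk)

    def on_origin_done(self) -> None:
        """Segment finished; later requests are served from cache only."""
        self.complete = True
        self._clients.clear()


if __name__ == "__main__":
    segment = InFlightSegment()
    first, second = bytearray(), bytearray()
    segment.attach(first.extend)   # first request
    segment.on_origin_chunk(b"moof1")
    segment.attach(second.extend)  # second client joins mid-download
    segment.on_origin_chunk(b"mdat1")
    segment.on_origin_done()
    print(first == second == bytearray(b"moof1mdat1"))  # True
```

A production edge module would also need locking or an event loop, backpressure for slow clients, and eviction. These details are omitted here.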

27 Oct 2025 Gcore CDN updates: Dedicated IP and BYOIP now available

We're pleased to announce two new premium features for Gcore CDN: Dedicated IP and Bring Your Own IP (BYOIP). These capabilities give customers more control over their CDN configuration, helping you meet strict security, compliance, and branding requirements.

Many organizations, especially in finance and other regulated sectors, require full control over their network identity. With these new features, Gcore enables customers to:

- use unique, dedicated IP addresses to meet compliance or security standards;
- retain ownership of and visibility over IP reputation and routing;
- deliver content globally while maintaining trusted, verifiable IP associations.

Read on for more information about the benefits of both updates.

Dedicated IP: exclusive addresses for your CDN resources

The Dedicated IP feature enables customers to assign an exclusive IP address to their CDN configuration instead of using shared ones. This is ideal for:

- Businesses that are subject to strict security or legal frameworks.
- Customers who want isolated IP resources to ensure consistent access and reputation.
- Teams using WAAP or other advanced security solutions, where dedicated IPs simplify policy management.

BYOIP: bring your own IP range to Gcore CDN

With Bring Your Own IP (BYOIP), customers can use their own public IP address range while leveraging the performance and global reach of Gcore CDN. This option is especially useful for:

- Resellers who prefer to keep Gcore infrastructure invisible to end clients.
- Enterprises maintaining brand consistency and control over IP reputation.

How to get started

Both features are currently available as paid add-ons and are configured manually by the Gcore team. To request activation or learn more, please contact Gcore Support or your account manager.

We're working on making these features easier to manage and automate in future releases.
As always, we welcome your feedback on both the feature functionality and the request process. Your insights help us improve the Gcore CDN experience for everyone.

Get in touch for more information

13 Oct 2025