For those looking to streamline their administrative tasks on a Unix or Linux-based system, the frequent password prompts when using the sudo command can become tedious. This article provides a clear guide on how to disable the password requirement for the sudo command, while also highlighting the potential security implications. Before making any changes to your system, it’s essential to be aware of the balance between convenience and security.

About Sudo command

The sudo command in Unix-like systems allows permitted users to run commands with elevated privileges, typically as root. It enhances security by delegating specific administrative tasks without granting full root access, and it maintains a log of these commands. By default, sudo asks for the invoking user’s own password to verify the action and caches that authentication for a few minutes, but this behavior can be configured or disabled.

Some users choose to disable the password prompt for convenience, especially if they run sudo commands frequently during their workflow. Continually entering a password can interrupt and slow down tasks. However, it’s crucial to understand that while this can streamline operations, it can also introduce security vulnerabilities, as it removes a layer of protection against unauthorized or inadvertent changes to the system.

Sudo command on Linux without password

Using the sudo command without a password can streamline tasks on Linux, but it’s essential to recognize the security implications of this action. If you decide to proceed, here’s a step-by-step guide to configure sudo to work without prompting for a password:

1. Open Terminal. On Ubuntu and many other desktop distributions, press Ctrl + Alt + T together to open a terminal window.

2. Backup sudoers file. Before making any changes, it’s good practice to back up the sudoers file:

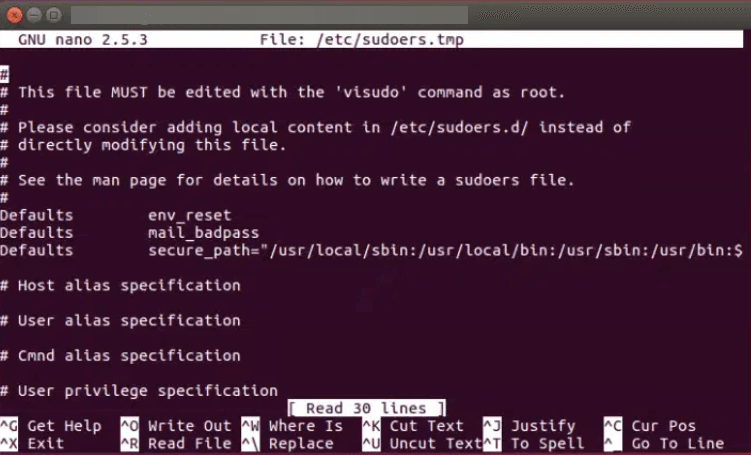

sudo cp /etc/sudoers /etc/sudoers.bak3. Edit the sudoers file. You should never edit the sudoers file directly with a regular text editor. Instead, use visudo:

sudo visudoSample Output:

4. Modify the file. Navigate to the section that looks like:

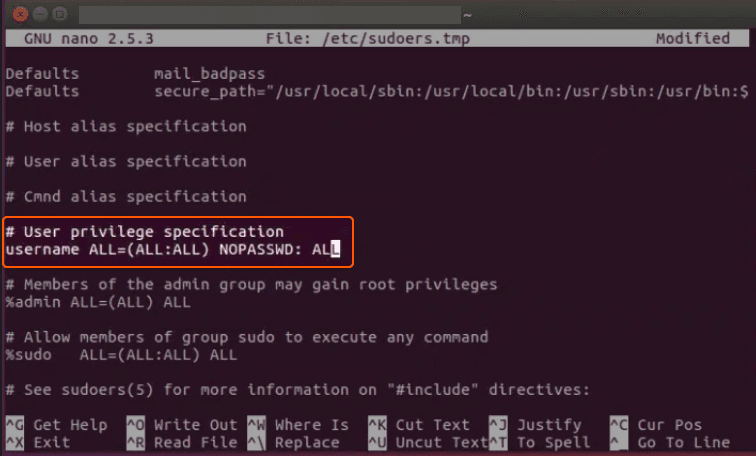

# User privilege specificationroot ALL=(ALL:ALL) ALLBelow this line, add the following, replacing username with your actual username:

username ALL=(ALL:ALL) NOPASSWD: ALLExample:

If you only want to disable the password for a particular command, replace ALL at the end with the full path to the command. For example:

    username ALL=(ALL:ALL) NOPASSWD: /path/to/command

5. Save and exit. If visudo uses the Vim editor by default (common on many systems), press Esc, then type :wq and press Enter. If it’s using nano, press Ctrl + O, then Enter, and then Ctrl + X.

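6. Test the configuration. Run sudo -k first to discard any cached credentials (otherwise an earlier successful login can hide a misconfiguration), then try executing a command with sudo. It shouldn’t ask for a password.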
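The check in step 6 can also be scripted. The following is a minimal sketch, assuming a POSIX shell. The function name sudo_is_passwordless and the SUDO_BIN variable are hypothetical names introduced here for illustration; SUDO_BIN exists only so the function can be pointed at a stand-in command when experimenting. The sketch relies on two standard sudo options: -k, which discards cached credentials, and -n, which makes sudo fail instead of prompting when a password would be required.

```shell
#!/bin/sh
# Succeeds (exit status 0) only if sudo runs a command without asking
# for a password. SUDO_BIN is a hypothetical override, defaulting to sudo.
sudo_is_passwordless() {
    sudo_bin="${SUDO_BIN:-sudo}"

    # Forget any cached credentials so the result reflects the sudoers
    # configuration rather than an earlier successful login.
    "$sudo_bin" -k

    # -n (non-interactive): fail instead of prompting for a password.
    "$sudo_bin" -n true 2>/dev/null
}

# Example usage (uncomment to run against the real sudo):
# if sudo_is_passwordless; then
#     echo "sudo works without a password"
# else
#     echo "sudo still requires a password"
# fi
```

If the rule was limited to a single command rather than ALL, this check reports that a password is still required, because true is not the command that was whitelisted; substitute the permitted command in that case.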

That’s it! Now you’ve disabled the password for the sudo command. Please be aware that making sudo password-less can introduce a security risk, particularly if unauthorized individuals access your machine. Always weigh the security implications and make informed decisions.
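One way to reduce that risk is to grant NOPASSWD for a single command instead of ALL, as described in step 4. The helper below is a small sketch, assuming a POSIX shell, that prints a rule in the same form used above so it can be pasted into visudo. The function name nopasswd_rule and the sample user and path in the usage comment are hypothetical. It rejects anything that is not ALL or an absolute path, since sudoers expects the full path to a command.

```shell
#!/bin/sh
# Print a sudoers rule of the form used in this article.
#   $1  username (required)
#   $2  ALL (default) or the absolute path of one command
nopasswd_rule() {
    user="$1"
    cmd="${2:-ALL}"

    if [ -z "$user" ]; then
        echo "usage: nopasswd_rule USER [ALL|/absolute/path]" >&2
        return 1
    fi

    case "$cmd" in
        ALL|/*) ;;   # accept ALL or an absolute path
        *)
            echo "command must be ALL or an absolute path" >&2
            return 1
            ;;
    esac

    printf '%s ALL=(ALL:ALL) NOPASSWD: %s\n' "$user" "$cmd"
}

# Example usage (hypothetical user and command path):
# nopasswd_rule alice /usr/bin/systemctl
```

The function only prints text; the line still has to be added through visudo so that its syntax is checked before it takes effect.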

Conclusion

Looking to deploy Linux in the cloud? With Gcore Cloud, you can choose from Basic VM, Virtual Instances, or VPS/VDS suitable for Linux:

- Gcore Basic VM offers shared virtual machines from €3.2 per month

- Virtual Instances are virtual machines with a variety of configurations and an application marketplace

- Virtual Dedicated Servers provide outstanding speed of 200+ Mbps in 20+ global locations
