What the HESP protocol is and how it changes streaming for the better

- By Gcore

- November 9, 2021

HESP is a new video streaming protocol developed by THEO Technologies for streaming video content with ultra-low latency. To standardize and promote the protocol, THEO Technologies, in collaboration with Synamedia, created the HESP Alliance, an association of streaming providers and media companies.

In September 2021, Gcore joined the HESP Alliance. Our CDN now supports the HESP protocol, so our clients can broadcast video content to millions of viewers around the world with minimal delay.

This article explains what the protocol is and which advantages it provides for video delivery.

What is HESP?

HESP (High Efficiency Streaming Protocol) is an adaptive, HTTP-based video streaming protocol developed by THEO Technologies to stream video content with ultra-low latency while keeping costs down.

The protocol delivers video very fast, with delays of 0.4–2 seconds. It also requires less bandwidth than comparable protocols.

Since HESP is an HTTP-based protocol, it can be delivered via a CDN. This enables Gcore CDN to deliver video content to all kinds of devices and to millions of viewers anywhere in the world, at up to 8K quality. Broadcast costs are also lower than with the alternative WebRTC protocol.

4 main differences between HESP and other protocols

The main protocols currently used for streaming are HLS, MPEG-DASH, RTMP, and WebRTC. Each of them has certain drawbacks.

HESP advantages over other protocols

The main disadvantage of the HLS and MPEG-DASH protocols is that they can’t stream with sufficiently low latency. Their delay is about 4 seconds at best, which is too much for interactive events and real-time communication.

WebRTC is expensive and doesn’t let clients easily scale their streams to a larger audience.

HESP combines the advantages of these protocols while avoiding their weak points. Let’s take a look at its main features.

1. High speed and scalability

Since HESP is an HTTP-based protocol, it lets clients broadcast videos through a CDN, just like HLS and MPEG-DASH. This means that you can broadcast your video content to thousands and even millions of viewers around the world.

With HESP, video is delivered with delays of no more than 2 seconds. This is lower than with other HTTP-based protocols.

Comparison of HESP with other protocols in terms of speed

RTMP, RTSP/RTP, and WebRTC are not HTTP-based, so they can’t be delivered via a CDN. They provide ultra-low latency just like HESP, yet they don’t let you stream your video content to a larger audience.

Gcore CDN supports not only HESP but also other HTTP-based protocols. Our Streaming Platform supports video streaming over RTMP, RTSP/RTP, and WebRTC as well. If you use our streaming services, you can choose any technology mentioned in this article.

2. Cost-effectiveness

Since video content broadcast over RTMP, RTSP/RTP, and WebRTC can’t be scaled via a CDN, streaming to a larger audience takes more effort and money. On average, streaming videos via a CDN costs only one-fifth to one-half as much as streaming without one.
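As a rough worked example of that ratio, the sketch below applies the 1/5 to 1/2 range to a monthly bill for streaming without a CDN. The 10,000 figure and the function name are illustrative assumptions, not Gcore pricing.

```python
from fractions import Fraction

# Ratio range quoted in the text: CDN delivery costs 1/5 to 1/2
# as much as delivery without a CDN.
CDN_COST_RATIO_LOW = Fraction(1, 5)
CDN_COST_RATIO_HIGH = Fraction(1, 2)


def estimated_cdn_cost_range(non_cdn_cost):
    """Return (low, high) estimated CDN cost for a given non-CDN cost."""
    low = non_cdn_cost * CDN_COST_RATIO_LOW
    high = non_cdn_cost * CDN_COST_RATIO_HIGH
    return float(low), float(high)


# Hypothetical monthly bill of 10,000 (any currency) without a CDN.
low, high = estimated_cdn_cost_range(10_000)
print(f"Estimated cost via CDN: {low:.0f} to {high:.0f}")
```

The real saving depends on traffic volume, regions, and the pricing plan, so treat the output as an order-of-magnitude estimate only.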

3. Reduced bandwidth requirements

Another difference, already mentioned above, is that HESP requires 10–20% less bandwidth than other low-latency protocols and technologies, including LL-HLS, chunked CMAF, and WebRTC.

4. Adaptive bitrate (ABR) support

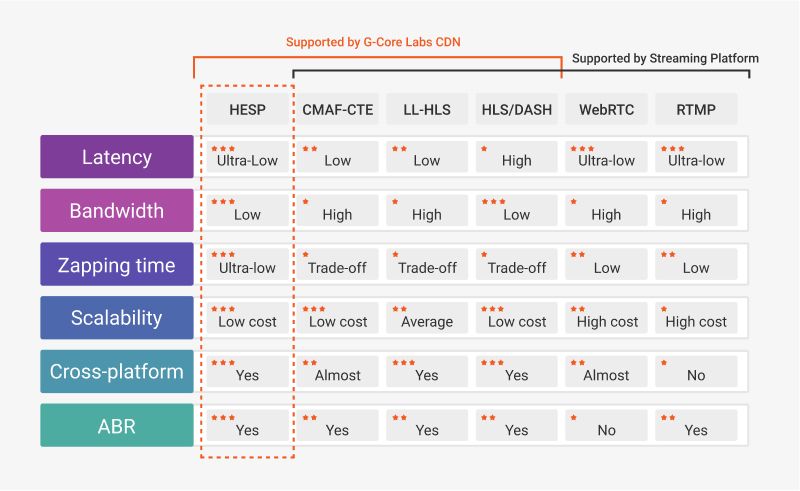

HESP is compatible with adaptive bitrate technology, which switches between quality levels to match each viewer’s connection. This means that your video content will be streamed to users on all kinds of devices without buffering, even over a poor Internet connection. The main differences between HESP and other streaming protocols and technologies are listed in the table below:

Comparison of HESP with other protocols
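To make the adaptive bitrate idea mentioned above more concrete, here is a minimal, generic sketch of rendition selection: the player measures throughput and picks the highest-bitrate rendition that fits within a safety margin. This is not the actual algorithm used by HESP or by any particular player; the bitrate ladder, the 0.8 safety factor, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int


# Hypothetical bitrate ladder, for illustration only.
LADDER = [
    Rendition("360p", 800),
    Rendition("720p", 2500),
    Rendition("1080p", 5000),
]


def pick_rendition(throughput_kbps, ladder=LADDER, safety=0.8):
    """Pick the highest-bitrate rendition that fits the usable throughput.

    Falls back to the lowest rendition when nothing fits, so playback
    continues at reduced quality instead of stalling.
    """
    usable = throughput_kbps * safety
    ordered = sorted(ladder, key=lambda r: r.bitrate_kbps)
    chosen = ordered[0]
    for rendition in ordered:
        if rendition.bitrate_kbps <= usable:
            chosen = rendition
    return chosen


for measured in (600, 3500, 10_000):
    print(measured, "kbps ->", pick_rendition(measured).name)
```

A real player would also smooth throughput measurements over time and account for buffer level before switching.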

When you will benefit from using HESP

Almost any business wants to minimize latency during video broadcasts while keeping costs down. The new technology is especially useful for the following industries:

- Esports and gaming. HESP delivers video content to end users faster than the standard HLS or MPEG-DASH protocols.

- Online learning. You can hold interactive events for 1,000+ and even 1,000,000+ people without restrictions and without relying on expensive external applications.

- Sports. Sports events are streamed almost in real time. They can be broadcast on the Internet even faster than on TV.

- Telemedicine. HESP lets doctors communicate with a large audience without resorting to third-party applications, while significantly reducing costs.

- Auctions and online casinos. In these fields, it is important not only to minimize delivery delays but also to maintain high video quality, so that viewers can take a closer look at what is happening on the screen. HESP lets you achieve this at a lower cost.

- OTT and TV broadcasting. These technologies will allow you to combine IPTV and OTT solutions to create full-featured broadcasts of the highest quality.

We’ve listed only the main industries, and the list is far from exhaustive. HESP is a worthwhile solution for any project where reducing delays to a minimum is vital.

How to start using HESP for video content delivery

If you want to start streaming videos using HESP, you will need to add some new components to your standard content production, processing, and delivery workflow:

- A special HESP Packager that encodes videos before they are transmitted. You can get it from our partners.

- A CDN that supports the HESP protocol, e.g., Gcore CDN. We have excellent coverage and connectivity, including 90+ points of presence on 5 continents and 6,000+ peering partners.

- A player that supports the HESP protocol. You can also get it from our partners. For example, THEO Technologies has its own player called THEOplayer. It offers a wide range of functions and can be easily integrated into your website.

Video creation and delivery process using HESP

If you already use our CDN and want to stream your video content over HESP, contact our technical support, and they will activate this feature for you.

Summary

- Our CDN now supports the new HESP protocol and can deliver video content to users even faster.

- HESP is an HTTP-based streaming protocol that provides ultra-low latency, with delays of 0.4–2 seconds.

- HESP will help you enhance user experience and optimize costs in any project that requires fast video delivery: sports, esports and gaming, online learning, telemedicine, auctions, online casinos, etc.

- What distinguishes HESP from other protocols is that it both provides ultra-low latency and can be easily scaled via a CDN. HESP requires 10–20% less bandwidth than other low-latency protocols. Streaming via a CDN costs only one-fifth to one-half as much as streaming without one.

- To start using HESP, you will need to integrate a special HESP Packager and a player that supports HESP into your standard streaming process.

The HESP protocol combined with Gcore CDN will allow you to provide your users with the best streaming experience, no matter where in the world they are located.
