How to create a mobile streaming app on iOS

- June 9, 2022

- 6 min read

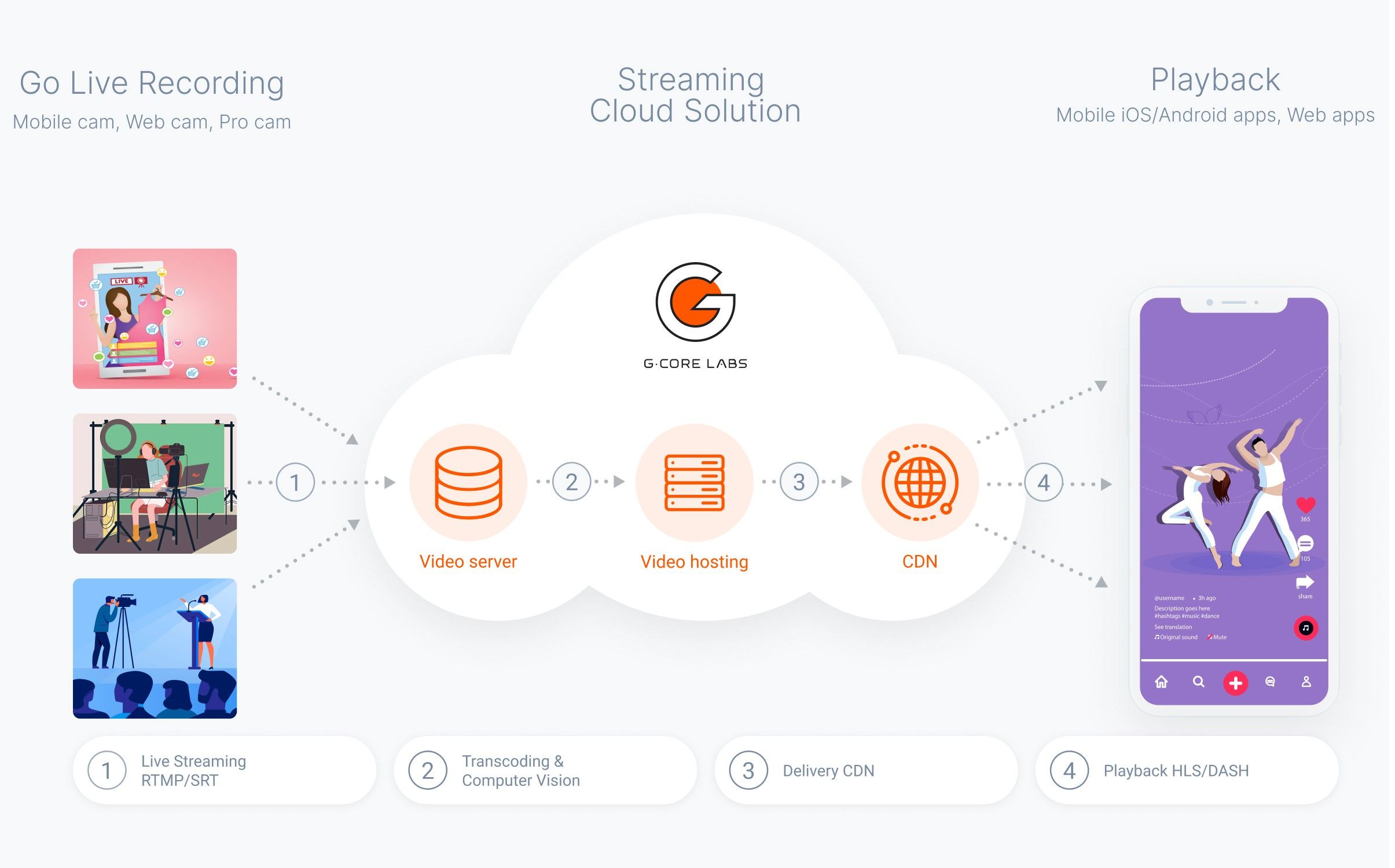

Livestreaming from mobile devices allows you to connect with your audience, wherever you are. Services that provide such an opportunity are very popular and used in various fields.

In our previous article, “How to create a mobile streaming app on Android”, we talked about how to set up streaming on Android devices. This time, we will take a closer look at how to create your own application for mobile streaming and watching livestreams on iOS, as well as how to integrate it with Gcore Streaming Platform.

Streaming protocols

Streaming protocols are used to send video and audio over public networks. One of the most popular streaming protocols is RTMP, which is supported by most streaming platforms. RTMP runs over TCP, so lost packets are retransmitted, and its low latency makes it well suited to livestreaming.

To distribute and play content on users’ devices, streaming platforms offer the popular and scalable HLS and DASH formats. iOS devices also come with a native player, AVPlayer, that supports HLS playback, so we will focus on HLS for playback.

Selecting a library to create an RTMP stream

There are few open-source solutions for RTMP streaming from iOS devices and even fewer truly functional options. Let’s take a look at the primary solutions:

- LFLiveKit is outdated—the last commit was on December 21, 2016.

- HaishinKit is currently supported and free of charge.

- Larix SDK is a working but paid solution ($300 for providing the source files).

- KSYLive is outdated—the last commit was on March 22, 2020, and the description is in Chinese.

Given all this, HaishinKit is the most suitable library. It is an up-to-date, functional, and at the same time, free solution with good documentation.

In addition, HaishinKit has many advantages:

- Constantly updated

- Supports RTMP playback

- Easy to install in the application

- Handles AVCaptureSession internally, which is convenient when the application does not need additional work with the session

- Supports switching and turning off the camera, as well as disabling the microphone during streaming

- Can change the resolution and bitrate, and can enable/disable video and audio during streaming

- Provides the ability to flexibly configure the broadcast parameters

- Has an option to pause the stream

However, there are several drawbacks:

- Adaptive bitrate option is not available

- Handles AVCaptureSession internally, which can cause problems when the application needs to process the incoming audio and video itself

Streaming implementation via RTMP protocol from an iOS device

The library provides two types of objects for streaming: RTMPStream and RTMPConnection.

Let’s take a step-by-step look at how to set up mobile streaming.

1. Init

To use the HaishinKit library in your project, you need to add it via SPM by entering:

https://github.com/shogo4405/HaishinKit.swift

2. Permissions

Specify the required permissions in the project’s Info.plist:

- NSMicrophoneUsageDescription (Privacy - Microphone Usage Description)

- NSCameraUsageDescription (Privacy - Camera Usage Description)

3. Displaying the camera stream

When streaming from a smartphone camera, you need to see what is being broadcast. For this purpose, a corresponding View will display the camera stream on the screen. In iOS, an object of the MTHKView class is used for these purposes, with the RTMPStream object attached to it.

    let hkView = MTHKView(frame: .zero)
    hkView.videoGravity = AVLayerVideoGravity.resizeAspectFill
    hkView.attachStream(rtmpStream)

    // The view must be in the hierarchy and opted in to Auto Layout
    // before its constraints are activated
    hkView.translatesAutoresizingMaskIntoConstraints = false
    addSubview(hkView)

    NSLayoutConstraint.activate([
        hkView.topAnchor.constraint(equalTo: topAnchor),
        hkView.leftAnchor.constraint(equalTo: leftAnchor),
        hkView.rightAnchor.constraint(equalTo: rightAnchor),
        hkView.bottomAnchor.constraint(equalTo: bottomAnchor)
    ])

4. Preparing for streaming

First of all, you need to configure and activate AVAudioSession. This can be done in the AppDelegate application class:

    private func setupSession() {
        do {
            try AVAudioSession.sharedInstance().setCategory(.playAndRecord, mode: .default, options: [.defaultToSpeaker, .allowBluetooth])
            try AVAudioSession.sharedInstance().setActive(true)
        } catch {
            print(error)
        }
    }

Create the RTMPConnection and RTMPStream objects:

    let rtmpConnection = RTMPConnection()
    let rtmpStream = RTMPStream(connection: rtmpConnection)

    rtmpStream.attachAudio(AVCaptureDevice.default(for: AVMediaType.audio)) { error in
        // print(error)
    }
    rtmpStream.attachCamera(DeviceUtil.device(withPosition: .back)) { error in
        // print(error)
    }

Set the rtmpStream parameters:

    rtmpStream.captureSettings = [
        .fps: 30, // FPS
        .sessionPreset: AVCaptureSession.Preset.medium, // input video width/height
        // .isVideoMirrored: false,
        // .continuousAutofocus: false, // use camera autofocus mode
        // .continuousExposure: false, // use camera exposure mode
        // .preferredVideoStabilizationMode: AVCaptureVideoStabilizationMode.auto
    ]
    rtmpStream.audioSettings = [
        .muted: false, // mute audio
        .bitrate: 32 * 1000,
    ]
    rtmpStream.videoSettings = [
        .width: 640, // video output width
        .height: 360, // video output height
        .bitrate: 160 * 1000, // video output bitrate
        .profileLevel: kVTProfileLevel_H264_Baseline_3_1, // H.264 profile; requires "import VideoToolbox"
        .maxKeyFrameIntervalDuration: 2, // key frame interval, in seconds
    ]
    // "0" means the same as the input
    rtmpStream.recorderSettings = [
        AVMediaType.audio: [
            AVFormatIDKey: Int(kAudioFormatMPEG4AAC),
            AVSampleRateKey: 0,
            AVNumberOfChannelsKey: 0,
            // AVEncoderBitRateKey: 128000,
        ],
        AVMediaType.video: [
            AVVideoCodecKey: AVVideoCodecH264,
            AVVideoHeightKey: 0,
            AVVideoWidthKey: 0,
            /*
            AVVideoCompressionPropertiesKey: [
                AVVideoMaxKeyFrameIntervalDurationKey: 2,
                AVVideoProfileLevelKey: AVVideoProfileLevelH264Baseline30,
                AVVideoAverageBitRateKey: 512000
            ]
            */
        ],
    ]

5. Adaptive video bitrate and resolution

Two RTMPStreamDelegate methods are used to implement adaptive video bitrate and resolution:

- func rtmpStream(_ stream: RTMPStream, didPublishSufficientBW connection: RTMPConnection): called every second while there is enough bandwidth (used for increasing bitrate and resolution)

- func rtmpStream(_ stream: RTMPStream, didPublishInsufficientBW connection: RTMPConnection): called when bandwidth is insufficient (used for decreasing bitrate and resolution)
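The example implementation in this section relies on a VideoSettings helper that is not listed in the article. As a rough sketch of what such a helper might look like, the block below assumes a three-step resolution ladder. The case names, resolutions, and target bitrates are illustrative assumptions rather than the project's actual values, and shouldStepDown is a hypothetical free function that isolates the midpoint rule used when reducing the resolution.

```swift
/// Hypothetical stand-in for the `VideoSettings` helper used in the article's example.
struct VideoSettings {
    /// Steps of the resolution ladder, ordered from lowest to highest quality.
    enum VideoType: Int {
        case low = 0, medium, high
    }

    struct Resolution {
        let width: Int
        let height: Int
        let bitrate: Int // target video bitrate, in bits per second
    }

    /// Returns the (assumed) output size and target bitrate for a ladder step.
    static func getVideoResolution(type: VideoType) -> Resolution {
        switch type {
        case .low:    return Resolution(width: 426, height: 240, bitrate: 400_000)
        case .medium: return Resolution(width: 640, height: 360, bitrate: 800_000)
        case .high:   return Resolution(width: 1280, height: 720, bitrate: 2_000_000)
        }
    }
}

/// Midpoint rule: step down once the bitrate falls below the halfway point
/// between the current target and the next lower target.
func shouldStepDown(bitrate: Int, from current: VideoSettings.VideoType) -> Bool {
    // No lower step exists for the lowest ladder entry.
    guard let lower = VideoSettings.VideoType(rawValue: current.rawValue - 1) else {
        return false
    }
    let currentTarget = VideoSettings.getVideoResolution(type: current).bitrate
    let lowerTarget = VideoSettings.getVideoResolution(type: lower).bitrate
    return bitrate < currentTarget - (currentTarget - lowerTarget) / 2
}
```

With these assumed values, the midpoint between the medium and low steps is 600 kbps, so a stream at the medium step would switch to the low resolution only after its bitrate drops below that point.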

Examples of implementation:

    func rtmpStream(_ stream: RTMPStream, didPublishSufficientBW connection: RTMPConnection) {
        guard isLive, appState != .background else { return }

        sufficientBWCount += 1
        let currentBitrate = stream.videoSettings[.bitrate] as! UInt32
        let setting = VideoSettings.getVideoResolution(type: videoType)

        // Raise the bitrate in 100 kbps steps until the target for the selected quality is reached
        if currentBitrate < setting.bitrate && isLive && sufficientBWCount >= 3 {
            sufficientBWCount = 0
            let updatedBitrate = currentBitrate + 100_000
            stream.videoSettings[.bitrate] = updatedBitrate
            increasingResolution(bitrate: Int(updatedBitrate))
            print("Increasing the bitrate to \(stream.videoSettings[.bitrate] ?? 0)")
        }

        if sufficientBWCount >= 60 {
            sufficientBWCount = 0
        }
    }

    func rtmpStream(_ stream: RTMPStream, didPublishInsufficientBW connection: RTMPConnection) {
        guard isLive, appState != .background else { return }

        sufficientBWCount = 0
        insufficientBWCount += 1
        let currentBitrate = stream.videoSettings[.bitrate] as! UInt32

        // Cut the bitrate by 30% after three "insufficient" callbacks
        if insufficientBWCount >= 3 {
            insufficientBWCount = 0
            // Store the value as UInt32 so the forced casts above keep working
            let updatedBitrate = UInt32(Double(currentBitrate) * 0.7)
            stream.videoSettings[.bitrate] = updatedBitrate
            reducingResolution(bitrate: Int(updatedBitrate))
            print("Bitrate reduction to \(stream.videoSettings[.bitrate] ?? 0)")
        }
    }

    private func reducingResolution(bitrate: Int) {
        guard let lowerType = VideoSettings.VideoType(rawValue: currentVideoType.rawValue - 1) else { return }

        let lowerSettings = VideoSettings.getVideoResolution(type: lowerType)
        let currentSettings = VideoSettings.getVideoResolution(type: currentVideoType)

        // Switch down once the bitrate falls below the midpoint between the two targets
        if bitrate < currentSettings.bitrate - ((currentSettings.bitrate - lowerSettings.bitrate) / 2) {
            setupVideoSettings(type: lowerType)
            currentVideoType = lowerType
            print("Reducing the resolution to \(lowerSettings.width) x \(lowerSettings.height)")
        }
    }

    private func increasingResolution(bitrate: Int) {
        guard let upperType = VideoSettings.VideoType(rawValue: currentVideoType.rawValue + 1),
              upperType.rawValue <= videoType.rawValue else { return }

        let upperSettings = VideoSettings.getVideoResolution(type: upperType)
        let currentSettings = VideoSettings.getVideoResolution(type: currentVideoType)

        // Switch up once the bitrate rises above the midpoint between the two targets
        if bitrate > currentSettings.bitrate - ((currentSettings.bitrate - upperSettings.bitrate) / 2) {
            setupVideoSettings(type: upperType)
            currentVideoType = upperType
            print("Increasing the resolution to \(upperSettings.width) x \(upperSettings.height)")
        }
    }

6. Background streaming

iOS does not allow video capture while the app is in the background, which means that the library cannot send video frames to the server. On the server side, this causes crashes and stream interruptions.

To fix this, we decided to add more functionality to the library for sending a static image in the background. Our version of the library can be taken from our project on GitHub.

7. Launching and stopping a livestream

Establish server connection and start streaming:

    rtmpConnection.connect(connectString)
    rtmpStream.publish(publishString)

To pause a stream, set the Boolean paused property to true:

    rtmpStream.paused = true

Integration with Gcore Streaming Platform

Creating a Gcore account

To integrate the streaming platform into the project, you need to create a free Gcore account with your email and password.

Activate the service by selecting Free Live or any other suitable plan.

To interact with Gcore Streaming Platform, we will use the Gcore API. Requests will be executed by native code using the methods of the NetworkManager structure which transmits data to the HTTPCommunication class. Data parsing is performed via the CodingKey protocol using the DataParser structure. You can use any other HTTP library if you want.

An example of a method for creating a request:

    private enum HTTPMethod: String {
        case GET, PATCH, POST, DELETE
    }

    private func createRequest(url: URL, token: String? = nil, json: [String: Any]? = nil, httpMethod: HTTPMethod) -> URLRequest {
        var request = URLRequest(url: url)

        // Authorized requests carry the access token as a Bearer header
        if let token = token {
            request.allHTTPHeaderFields = ["Authorization": "Bearer \(token)"]
        }

        // Optional JSON body
        if let json = json {
            let jsonData = try? JSONSerialization.data(withJSONObject: json)
            request.setValue("application/json", forHTTPHeaderField: "Content-Type")
            request.httpBody = jsonData
        }

        request.httpMethod = httpMethod.rawValue
        return request
    }

Authorization

Log in to start working with the API. Use the email and password specified during registration to receive the Access Token, which you will need for further requests.
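Once the login response arrives, the token has to be extracted from its JSON body. The sketch below shows one way to do that with Decodable. It assumes the response is a JSON object with an access field (and optionally a refresh field); TokenResponse and parseAccessToken are hypothetical names, and the field names should be checked against the API reference.

```swift
import Foundation

/// Assumed shape of the login response: {"access": "...", "refresh": "..."}.
struct TokenResponse: Decodable {
    let access: String
    let refresh: String?
}

/// Returns the access token, or nil if the body is not in the assumed format.
func parseAccessToken(from data: Data) -> String? {
    return try? JSONDecoder().decode(TokenResponse.self, from: data).access
}
```

The returned string is what gets passed as the token argument to the other requests in this article.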

    func authorizationRequest(login: String, password: String, completionHandler: @escaping ((Data?, HTTPError?) -> Void)) {
        guard let url = URL(string: "https://api.gcdn.co/auth/jwt/login") else { return }

        self.completionHandler = completionHandler

        // JSON for the HTTP request body
        let json: [String: Any] = ["username": login, "password": password]

        // Set up the request
        let request = createRequest(url: url, json: json, httpMethod: .POST)
        let task = session.downloadTask(with: request)
        task.resume()
    }

Getting PUSH URL

There are two ways to get the URL for sending the RTMP stream:

Method 1. Send a Get all live streams request to get all livestreams. As a response, we will receive data on all streams created in your account.

An example of sending a request:

    func allStreamsRequest(token: String, completionHandler: @escaping ((Data?, HTTPError?) -> Void)) {
        var components = URLComponents(string: "https://api.gcdn.co/vp/api/streams")

        // with_broadcasts=0, because broadcasts are fetched by a separate method
        components?.queryItems = [
            URLQueryItem(name: "with_broadcasts", value: "0")
        ]
        guard let url = components?.url else { return }

        self.completionHandler = completionHandler

        let request = createRequest(url: url, token: token, httpMethod: .GET)
        let task = session.downloadTask(with: request)
        task.resume()
    }

Method 2. Send a Get live stream request to get a particular livestream. As a response, we will receive data only on the requested stream, if such stream exists.

An example of sending a request:

    // Creates a new stream with the given name (POST to the streams endpoint)
    func createStreamRequest(name: String, token: String, completionHandler: @escaping ((Data?, HTTPError?) -> Void)) {
        guard let url = URL(string: "https://api.gcdn.co/vp/api/streams") else { return }

        self.completionHandler = completionHandler

        // JSON for the HTTP request body
        let json: [String: Any] = ["name": name]

        // Set up the request
        let request = createRequest(url: url, token: token, json: json, httpMethod: .POST)
        let task = session.downloadTask(with: request)
        task.resume()
    }

From the responses to these requests, we get a push_url and use it as the URL for sending the RTMP stream. Once the stream begins, the broadcast will start automatically. Select the required stream in your personal account. You can use the preview before publishing the stream to your website or player.
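The connect and publish calls shown earlier take two separate strings, while the API returns a single push_url. One possible way to bridge the two is sketched below: decode push_url from the stream JSON, then split it at the last slash. StreamInfo and splitPushURL are hypothetical names, the sample URL in the usage note is made up, and the assumption that everything after the last slash is the stream name and key should be verified against a real push_url.

```swift
import Foundation

/// Only the field needed for publishing; other fields in the response are ignored.
struct StreamInfo: Decodable {
    let pushURL: String

    enum CodingKeys: String, CodingKey {
        case pushURL = "push_url"
    }
}

/// Splits a push URL into the part passed to `connect` and the part passed to `publish`.
/// Assumption: the publish string is everything after the last "/".
func splitPushURL(_ pushURL: String) -> (connect: String, publish: String)? {
    guard let slash = pushURL.lastIndex(of: "/") else { return nil }

    let connect = String(pushURL[..<slash])
    let publish = String(pushURL[pushURL.index(after: slash)...])

    // Reject URLs without a path component, such as "rtmp://host".
    guard connect.contains("://"), !publish.isEmpty else { return nil }
    return (connect, publish)
}
```

For a made-up URL such as rtmp://push.example.com/in/123?key, this yields rtmp://push.example.com/in as the connection string and 123?key as the publish string.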

Active stream playback

With Gcore Streaming Platform, you can broadcast streams on third-party resources in various formats, including HLS.

In our example, we will not consider simultaneous streaming and playback on one device. Instead, streaming should be launched from any other device.

To play the active stream, use the standard AVPlayer.

Starting playback

Before playback, pass the hls_playlist_url of the active stream to the player when initializing it. The hls_playlist_url is returned in the response to the Get live stream request mentioned above.
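The code for this step calls getStreamRequest and parseStreamHLS, neither of which is listed in the article. A hedged sketch of both follows. It assumes that a single stream is fetched by appending its ID to the streams URL used in the other requests, and that the response contains a top-level hls_playlist_url string. makeGetStreamRequest and StreamPlayback are hypothetical names, and the endpoint path is an assumption to confirm in the API reference.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking // URLRequest lives here on Linux
#endif

/// Builds a GET request for one stream (assumed endpoint: streams URL + "/" + ID).
func makeGetStreamRequest(token: String, streamID: Int) -> URLRequest? {
    guard let url = URL(string: "https://api.gcdn.co/vp/api/streams/\(streamID)") else {
        return nil
    }
    var request = URLRequest(url: url)
    request.httpMethod = "GET"
    request.setValue("Bearer \(token)", forHTTPHeaderField: "Authorization")
    return request
}

/// Only the playback URL is decoded; other fields in the response are ignored.
struct StreamPlayback: Decodable {
    let hlsPlaylistURL: String

    enum CodingKeys: String, CodingKey {
        case hlsPlaylistURL = "hls_playlist_url"
    }
}

/// Returns the HLS playlist URL as a string, or an empty string if parsing fails.
/// An empty string makes `URL(string:)` return nil, so the caller's `if let` is skipped.
func parseStreamHLS(data: Data) -> String {
    let stream = try? JSONDecoder().decode(StreamPlayback.self, from: data)
    return stream?.hlsPlaylistURL ?? ""
}
```

Returning an empty string instead of an optional keeps the call site the same as in the article's example, at the cost of hiding the reason for a parsing failure.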

    func downloadHLS(for streamsID: [Int]) {
        guard let token = token else { return }

        for id in streamsID {
            let http = HTTPCommunication()
            http.getStreamRequest(token: token, streamID: id) { data, error in
                // Notify the delegate only if the response contains a valid HLS URL
                if let data = data, let url = URL(string: dataParser.parseStreamHLS(data: data)) {
                    delegate?.streamHLSDidDownload(url, streamID: id)
                }
            }
        }
    }

Player initialization:

    let broadcast = model.broadcasts[indexPath.row]

    guard let streamID = broadcast.streamIDs.first,
          let hls = model.getStreamHLS(streamID: streamID)
    else { return }

    let playerItem = AVPlayerItem(asset: AVURLAsset(url: hls))
    playerItem.preferredPeakBitRate = 800 // upper limit on network bandwidth, in bits per second
    playerItem.preferredForwardBufferDuration = 1 // preferred buffer ahead of the playhead, in seconds

    let player = AVPlayer(playerItem: playerItem)
    let playerVC = GCPlayerViewController(player: player)
    playerVC.modalPresentationStyle = .fullScreen
    present(playerVC, animated: true, completion: nil)

Summary

Using our examples, organizing a livestream in an iOS application is quite simple and does not take much time. All you need is the open-source HaishinKit library and Gcore Streaming Platform.

Gcore streaming API

All the code mentioned in the article can be viewed on GitHub.
