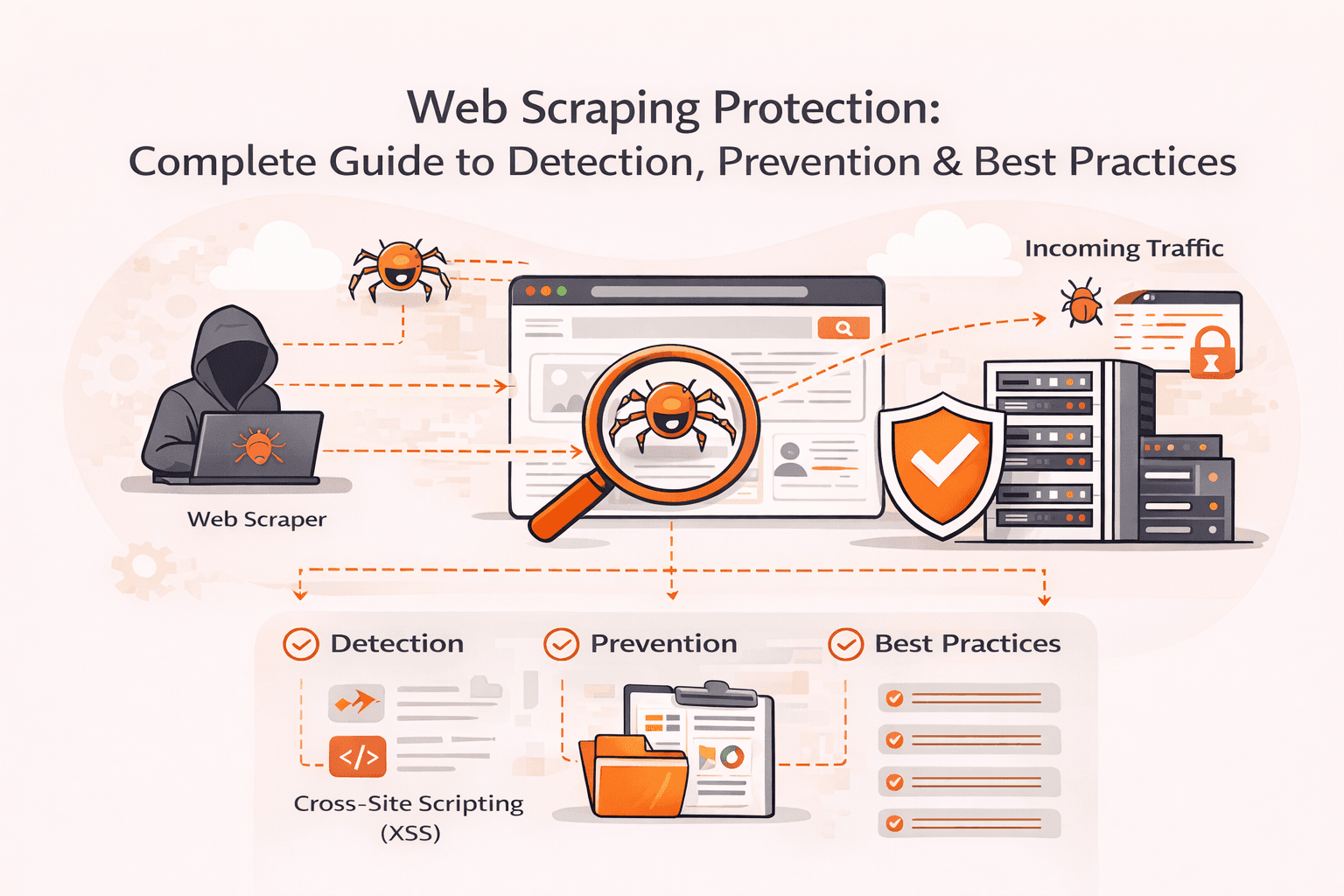

Web Scraping Protection: Complete Guide to Detection, Prevention & Best Practices

- By Gcore

- February 3, 2026

- 9 min read

Your server load suddenly spikes by 400%. Legitimate customers can't access your site. Competitors are methodically copying your product descriptions, pricing data, and proprietary content. Modern scraping bots are draining your resources and stealing your competitive advantage, and traditional defenses can't stop them because these attacks mimic human behavior perfectly.

The scale of this threat is staggering. Organizations using full protection strategies report reducing server load by up to 90%, while advanced solutions now prevent 99% of scraping attempts. Yet hyper-distributed bot attacks using multiple user-agents, IPs, and rotating proxies easily bypass conventional Web Application Firewalls. The sophistication has forced the entire industry to shift from signature-based detection to behavioral analysis and machine learning approaches.

You'll discover how modern web scraping protection actually works, which technologies detect even the most advanced bots, and how to build a layered defense plan that stops unauthorized data extraction without blocking legitimate users or search engines.

What is web scraping protection?

Web scraping protection is a security layer that detects and blocks automated bots trying to extract content and data from your website without permission. These bots can steal product listings, pricing information, user data, and proprietary content, often selling it to competitors or using it to undercut your business. Modern protection systems analyze behavior patterns and apply machine learning to identify scraping attempts, since today's bots mimic human behavior and easily bypass traditional defenses like basic firewalls. Organizations that deploy full protection can reduce server load by up to 90% while still allowing legitimate search engines and real users to access their sites normally.

How does web scraping protection work?

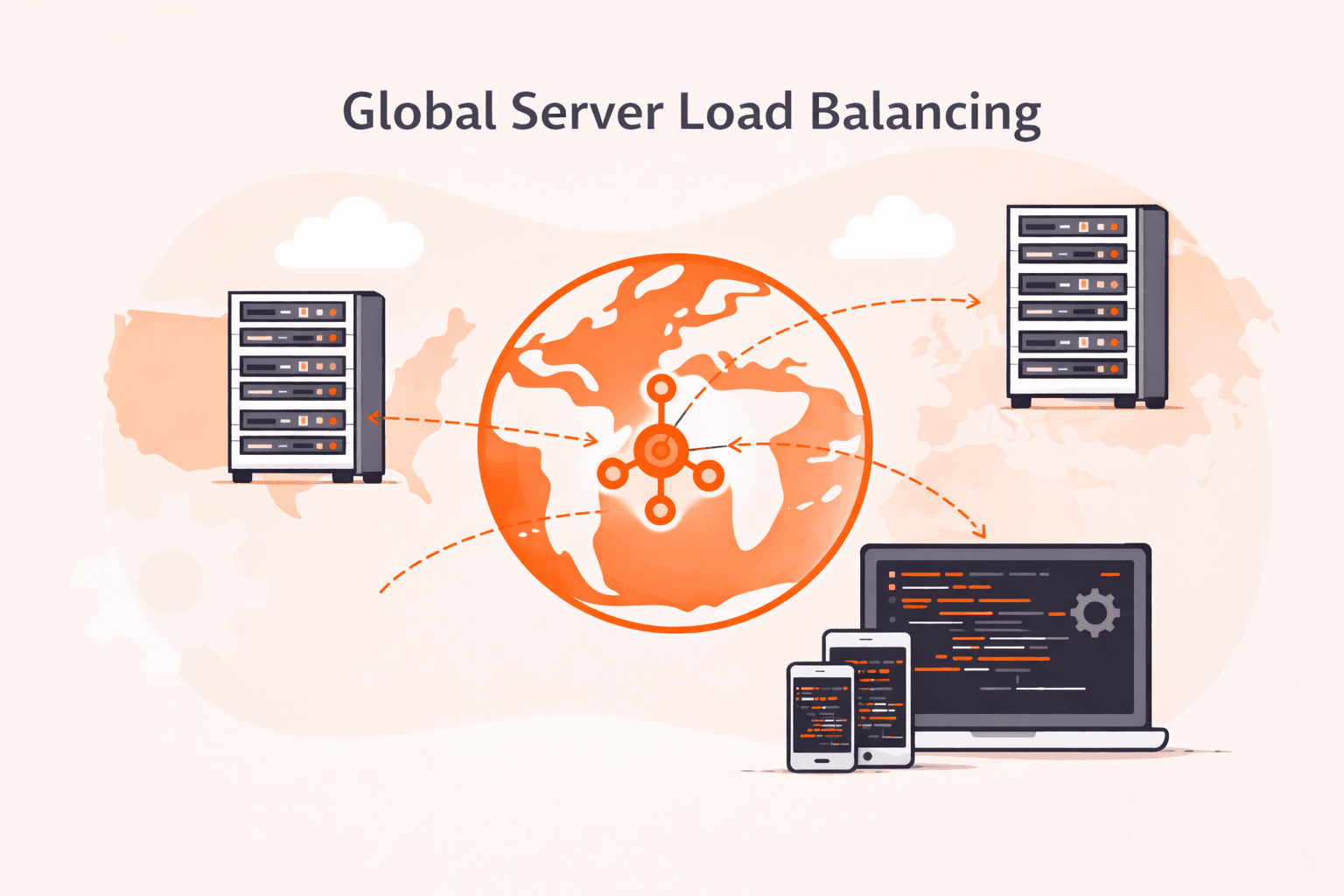

Web scraping protection combines multiple detection layers that identify automated bots and distinguish them from legitimate users. The system monitors incoming traffic patterns, analyzes behavioral signals, and applies mitigation rules, all happening in milliseconds before content is served.

Here's the basic flow. When a request hits your site, protection tools first check device fingerprints by collecting attributes like screen resolution, operating system, and browser plugins. These create unique identifiers that persist even when scrapers rotate IP addresses or use VPNs. Next, behavioral analysis kicks in. The system tracks mouse movements, keystroke patterns, and navigation sequences. Bots typically move too fast, follow predictable paths, or skip certain actions that humans naturally perform.

Rate limiting adds another defense layer. If requests from a single source exceed set thresholds (say, 100 requests per minute), the system automatically throttles or blocks that traffic. Advanced solutions apply machine learning models trained on millions of traffic samples to spot attacks that mimic human behavior. These models can detect hyper-distributed attacks using multiple user-agents and IP addresses, which easily bypass traditional WAFs.

The key thing: modern protection happens at the edge, analyzing traffic before it reaches your origin servers. This approach can reduce server load by up to 90% while maintaining access for legitimate visitors and search engine crawlers.

What are the main business impacts of unprotected web scraping?

Unprotected web scraping creates measurable financial and operational damage across multiple business areas. Organizations without proper defenses face server overload, competitive disadvantages, and security vulnerabilities that directly affect their bottom line.

- Revenue loss: Competitors scraping pricing data can undercut your offers in real time, while scraped product listings appear on unauthorized reseller sites that divert potential customers. E-commerce sites lose sales when scrapers extract inventory details and customer reviews to replicate successful products.

- Server infrastructure costs: Scraping bots generate massive traffic volumes that consume bandwidth and processing power meant for legitimate users. Companies often need to provision 90% more server capacity than necessary just to handle automated extraction requests, dramatically increasing hosting expenses.

- Degraded user experience: When bots overwhelm your servers, page load times increase for real visitors, leading to higher bounce rates and abandoned transactions. Sites experiencing heavy scraping see performance drops that frustrate customers and damage brand reputation.

- Competitive intelligence theft: Scrapers extract proprietary data like pricing strategies, product catalogs, and market positioning that took years to develop. Competitors gain instant access to your business intelligence without the research investment, eliminating your planned advantages.

- SEO penalties: Scraped content appearing on other sites creates duplicate content issues that confuse search engines and dilute your rankings. Your original content loses visibility when copied versions flood search results, reducing organic traffic to your site.

- Legal and compliance risks: Unauthorized data extraction may expose customer information or violate data protection regulations, creating liability issues. Companies face potential lawsuits when scrapers access and redistribute content that includes personal data or copyrighted material.

What are the most effective web scraping protection methods?

Effective web scraping protection methods combine multiple defense layers to detect and block automated data extraction while allowing legitimate traffic through. The most reliable approaches analyze behavior patterns, fingerprint devices, and apply intelligent rate limiting rather than simple IP blocks that scrapers easily bypass.

The most effective protection methods are listed below.

- Bot detection systems: Advanced solutions analyze user behavior patterns, mouse movements, and interaction timing to distinguish bots from humans. Machine learning models identify suspicious activity even when scrapers rotate IPs or mimic browser signatures.

- Device fingerprinting: This technology creates unique identifiers by collecting device attributes like screen resolution, operating system, active plugins, and network characteristics. Basic fingerprinting can track users across VPNs and proxy servers. However, sophisticated scrapers use antidetect browsers and fingerprint randomization tools to generate unique, realistic fingerprints per session, so fingerprinting works best as part of a layered defense combined with behavioral analysis.

- Rate limiting: Setting thresholds for requests from single IPs or user agents within specific timeframes slows down scraping operations. When combined with behavioral analysis, rate limiting can reduce server load by up to 90% without affecting legitimate users.

- CAPTCHA challenges: Interactive verification tests confirm human users at suspicious access points. Modern CAPTCHA systems trigger only when behavioral signals indicate potential bot activity, reducing friction for real visitors.

- Content obfuscation: Converting text to SVG images or rendering through JavaScript adds friction for basic HTTP-based scrapers that don't execute JavaScript. However, modern scraping tools using headless browsers (Playwright, Puppeteer) render this content the same way search engines do, so this technique should be considered only one layer in a defense-in-depth strategy rather than a standalone solution.

- Honeypot traps: Invisible links and fields that only bots can detect help identify scraping attempts. When a bot interacts with these hidden elements, the system flags and blocks the source immediately.

- IP reputation filtering: Blocking known datacenter IPs, proxy services, and previously flagged addresses stops many scraping attempts before they reach your site. Geographic restrictions add another layer when content theft patterns emerge from specific regions.

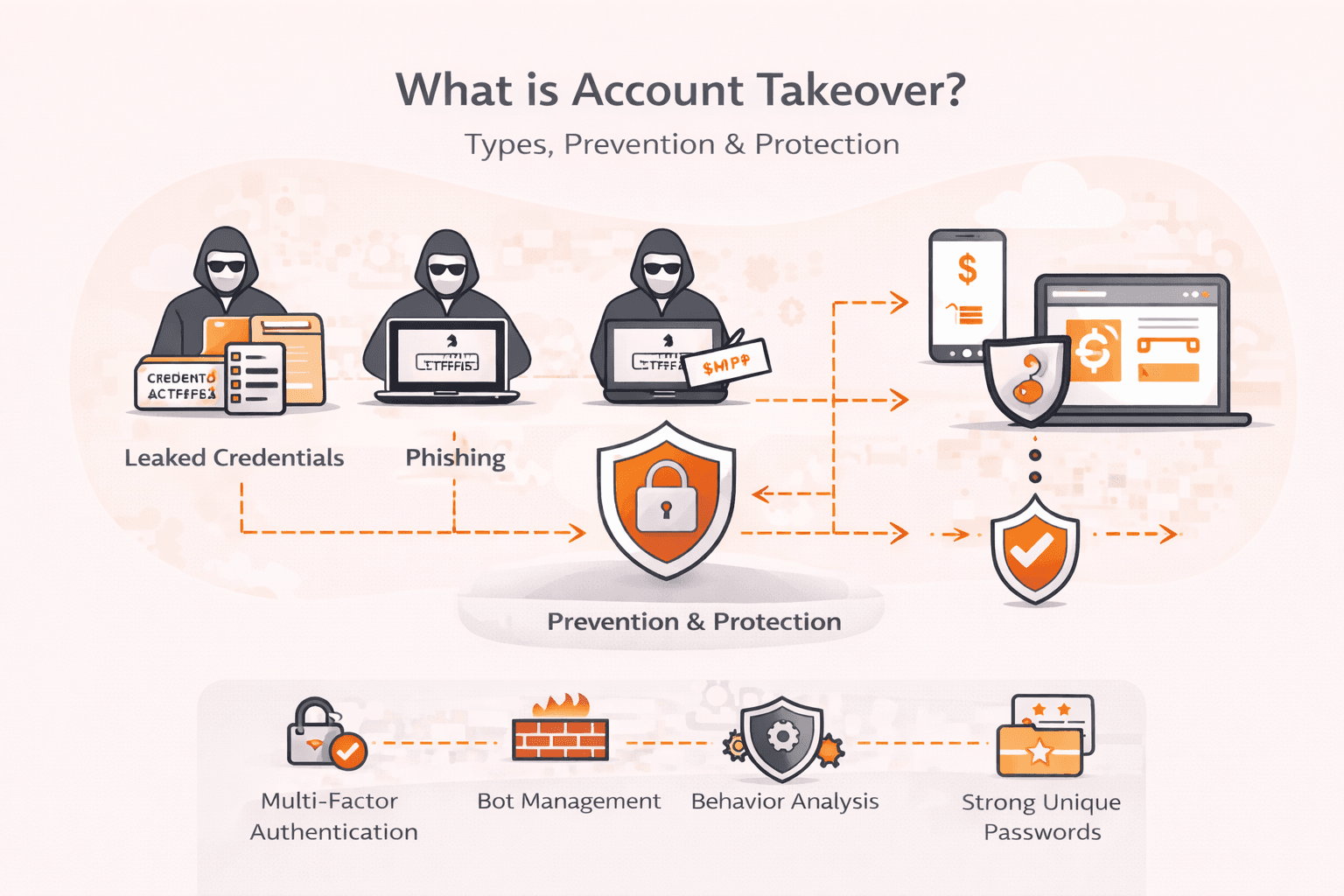

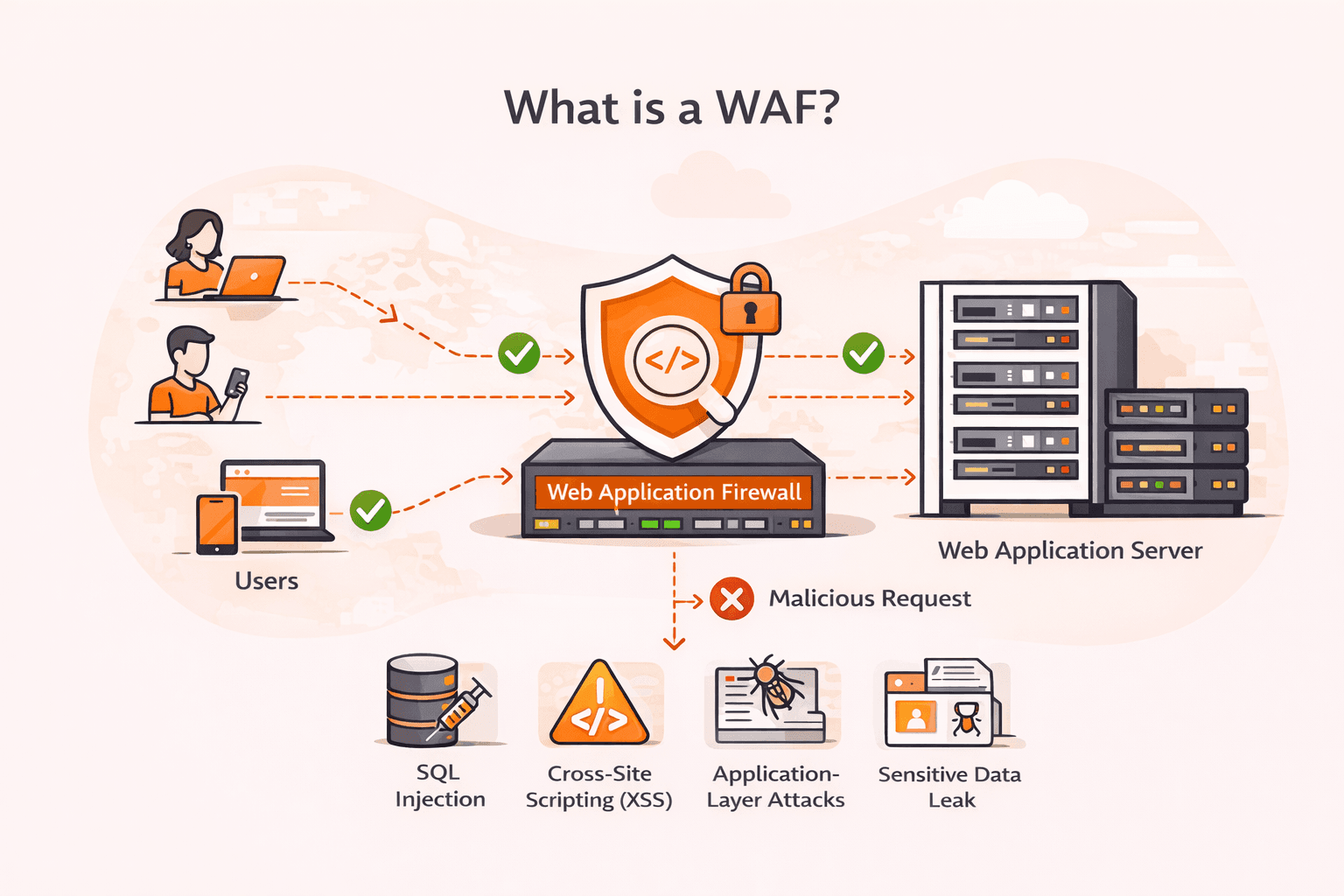

- Web application firewalls: Web application firewalls with bot management: Traditional WAFs protect against web vulnerabilities (SQL injection, XSS), while advanced WAFs or WAAP (Web Application and API Protection) platforms add specialized bot detection capabilities. These can verify legitimate search engine crawlers through reverse DNS lookup and IP verification, not just user-agent checking. Standard WAFs without dedicated bot management modules have limited effectiveness against sophisticated scrapers.

How to use web scraping protection best practices?

Every bot detection system faces a fundamental trade-off between catching malicious bots and accidentally blocking legitimate users. Aggressive detection settings can block real customers, users on corporate networks, visitors from regions with unstable internet, and assistive technologies like screen readers. When deploying protection, monitor for false positives by reviewing blocked requests, providing easy appeals for blocked users, and gradually tuning sensitivity rather than starting with maximum blocking.

You apply web scraping protection best practices by deploying multiple defense layers that work together to detect and block malicious bots while allowing legitimate traffic through.

- Start with behavioral analysis and machine learning detection. Deploy systems that monitor visitor patterns rather than relying on simple signature matching. Modern scraping bots mimic human behavior, so you need tools that analyze mouse movements, click patterns, and browsing sequences to spot automated activity. This approach catches advanced attacks that bypass traditional defenses.

- Configure rate limiting and request throttling across your infrastructure. Set maximum request thresholds per IP address within specific timeframes (for example, 100 requests per minute). When visitors exceed these limits, automatically slow their connection speed or temporarily block access. This prevents scrapers from rapidly extracting large volumes of data without completely blocking legitimate users who might occasionally refresh pages quickly.

- Apply device fingerprinting to track visitors across sessions. Collect browser attributes including screen resolution, operating system, active plugins, and network characteristics to create unique identifiers for each device. This lets you block malicious actors even when they rotate IP addresses or use VPNs, since the device fingerprint remains consistent.

- Deploy edge-based mitigation to protect your origin servers. Process bot detection at the network edge before requests reach your infrastructure. This approach reduces server load by up to 90% while maintaining fast response times for legitimate visitors, since you're filtering malicious traffic before it consumes backend resources.

- Set up geographic restrictions combined with IP blocking. Block access from regions where you don't conduct business or where scraping attacks commonly originate. Maintain allowlists for known legitimate crawlers like search engines, and regularly update your blocklists based on attack patterns you observe in your logs.

- Monitor your content usage across the web continuously. Apply automated tools that scan the internet daily for copies of your content appearing on other sites. When theft is detected, you can file complaints with search engines to remove stolen content from search results and take enforcement action against violators.

- Add CAPTCHA challenges at planned points in the user journey. Place these challenges on high-value pages like login forms, checkout processes, or content download pages rather than your entire site. This balances security with user experience. You're protecting critical areas without frustrating every visitor with constant verification requests.

- Review your protection logs weekly to refine detection rules. Analyze blocked requests to identify false positives where legitimate users got flagged, and adjust your thresholds accordingly. Look for new attack patterns in your data and update your behavioral profiles to catch emerging threats before they succeed.

- Recognize that bot protection is an ongoing arms race, not a one-time deployment. Attackers continuously evolve their techniques — sophisticated scrapers adapt to new defenses within days or weeks. AI-powered bots now account for over 50% of web traffic, and they constantly develop new evasion methods. Plan for continuous monitoring, regular rule updates, and periodic reassessment of your protection strategy. What works today may be bypassed tomorrow.

The key thing is that no single method stops all scraping attempts. You need layered defenses that adapt as attackers change tactics.

What are the warning signs of web scraping attacks?

Warning signs of web scraping attacks refer to observable patterns and anomalies that indicate automated bots are extracting data from your website. The warning signs are listed below.

- Unusual traffic spikes: Your analytics show sudden increases in page views or bandwidth consumption that don't correlate with marketing campaigns or normal growth patterns. These spikes often occur during off-peak hours when legitimate users are less active.

- Repetitive access patterns: Server logs reveal the same IP addresses or user agents requesting identical pages in rapid succession with mechanical timing intervals. Real users browse unpredictably, while scrapers follow systematic patterns.

- High bounce rates: Analytics display abnormally high bounce rates where visitors leave immediately after accessing a single page. Scraping bots typically extract content from one page and move on without engaging with your site's navigation or other elements.

- Degraded server performance: Your website experiences slower response times, increased server load, or occasional crashes despite normal visitor counts. Scraping attacks can reduce server performance by up to 90% as bots make thousands of requests per minute.

- Suspicious user agents: Log files show requests from outdated browsers, non-standard user agent strings, or multiple requests claiming to be from legitimate search engines like Googlebot. Malicious scrapers often fake their identity or apply uncommon browser configurations.

- Failed login attempts: You notice multiple failed authentication attempts across different accounts or systematic testing of common username-password combinations. Scrapers targeting protected content often probe login systems before attempting to extract data.

- Geographic anomalies: Traffic originates from unexpected countries or regions that don't match your typical audience, especially when combined with other suspicious patterns. Scrapers frequently route through proxy servers and VPNs from various global locations.

- Honeypot triggers: Hidden links or content traps that aren't visible to normal users get accessed repeatedly. Only automated bots following every link on a page will trigger these detection mechanisms.

How can Gcore help with web scraping protection?

Gcore protects against web scraping through the Web Application Firewall and bot management capabilities deployed across 210+ global edge locations. The system analyzes behavior patterns and applies machine learning to distinguish malicious scrapers from legitimate traffic, including search engine crawlers that your site needs.

You'll get real-time detection at the edge that stops advanced bots before they reach your origin servers. The Gcore platform identifies scraping patterns even when attackers rotate IPs, spoof user agents, or distribute requests across multiple ASNs. This approach can reduce server load by up to 90% while keeping your site accessible to real users.

The system combines rate limiting, device fingerprinting, and threat intelligence to block automated extraction attempts without impacting site performance. You can set custom rules based on geography, request patterns, or specific endpoints that need extra protection.

Learn more about Gcore's application security solutions at gcore.com/waap.

Frequently asked questions

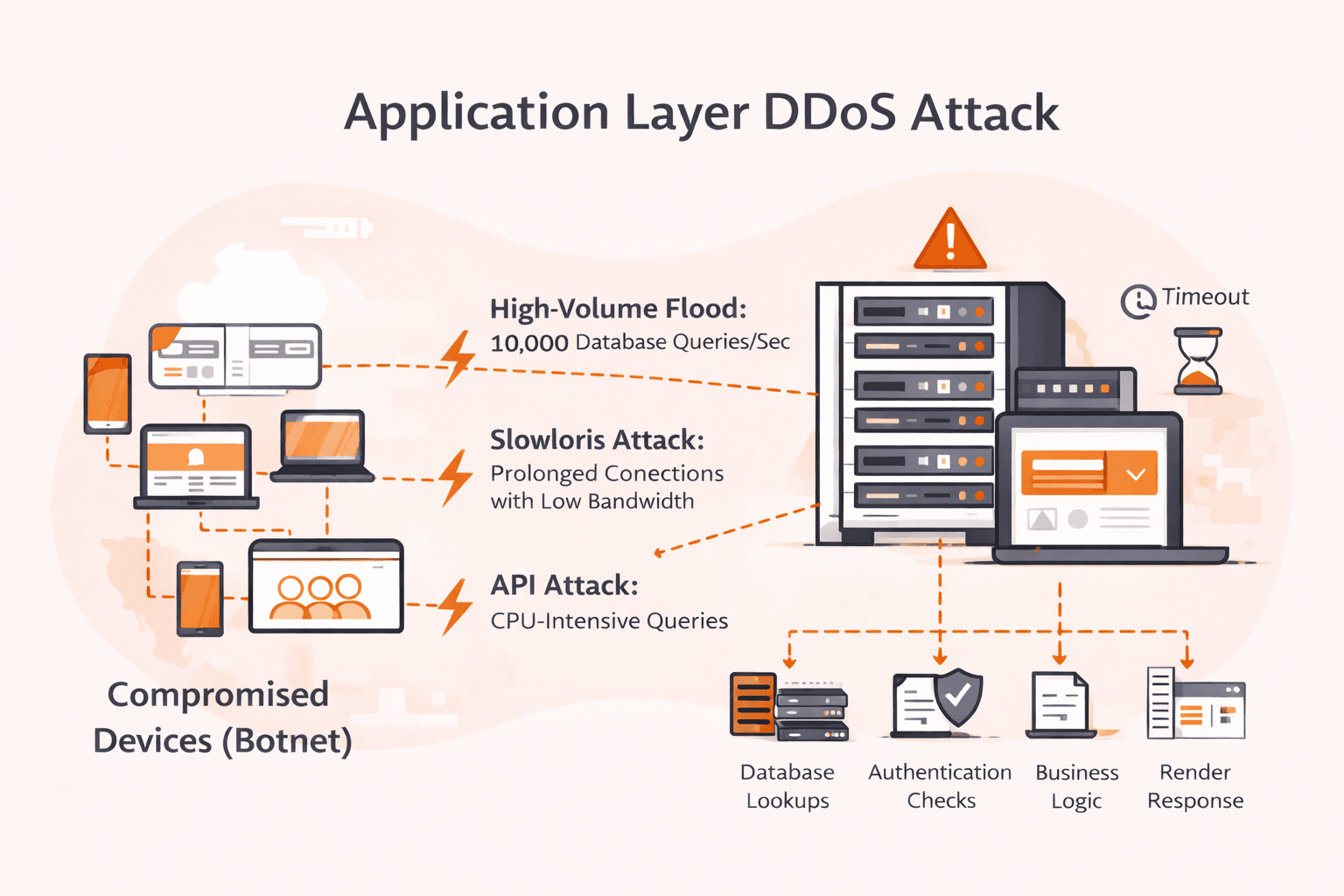

What's the difference between web scraping protection and DDoS protection?

Web scraping protection defends against automated data extraction bots that steal content, pricing, or inventory information, while DDoS protection blocks traffic floods designed to overwhelm servers and crash websites. Scraping attacks target your data, DDoS attacks target your availability.

How much does web scraping protection cost?

Web scraping protection costs range from free basic tools to enterprise solutions priced at $200 to $50,000+ monthly, depending on traffic volume and feature complexity. Organizations with moderate traffic typically spend $500 to $5,000 per month for full protection that includes behavioral analysis, machine learning detection, and real-time mitigation.

Is web scraping protection secure for legitimate users?

Yes, properly configured web scraping protection is secure for legitimate users because modern solutions analyze behavior patterns and apply machine learning to distinguish between human visitors and automated bots. Advanced systems maintain full functionality for real users and search engines while blocking malicious scrapers at the edge without impacting site performance.

What are the technical requirements for implementing scraping protection?

Most scraping protection solutions require only DNS changes or JavaScript tag deployment, with no server-side modifications needed. Cloud-based services handle detection and mitigation at the edge, though on-premises WAF solutions need dedicated hardware and ongoing maintenance resources.

How does machine learning improve web scraping detection?

Machine learning analyzes patterns in user behavior, request timing, navigation flows, and device attributes to distinguish legitimate visitors from advanced bots that mimic human activity. This behavioral analysis approach detects threats that evade traditional signature-based defenses, achieving up to 99% prevention rates against modern scraping attacks.

Can web scraping protection block all automated traffic?

No, web scraping protection can't block all automated traffic, nor should it. Legitimate bots like search engine crawlers (Googlebot, Bingbot) need access to index your site, while automated monitoring tools and API clients serve valid business purposes.

What industries need web scraping protection the most?

E-commerce, travel, and financial services face the highest scraping risks due to valuable pricing data, competitive intelligence, and real-time inventory information that competitors actively target. Media publishers and job boards also require robust protection to prevent content theft and unauthorized data aggregation that undermines their business models.

Related articles

Subscribe to our newsletter

Get the latest industry trends, exclusive insights, and Gcore updates delivered straight to your inbox.