Ubuntu is a popular open-source operating system that is both user-friendly and dependable, and it serves everyday users and professionals alike. You can explore Ubuntu online without installing anything or making any commitment: a virtual tour in your browser shows its layout, tools, and features before you install. In this article, we walk you through how to try Ubuntu online to determine whether it’s the right fit for your needs.

Trying Ubuntu Online Before You Install It

If you’re interested in trying out Ubuntu without actually installing it, accessing it online through a web browser is a great option. It does not provide the full experience of an installed system, but it gives you an idea of what the user interface and key features are like. Below is a simple step-by-step guide to trying Ubuntu online.

#1 Visit the Official Ubuntu Website

Open your preferred web browser. Navigate to the official Ubuntu website at https://www.ubuntu.com/.

#2 Search for the Online Tour

The Ubuntu website’s design may change over time, so look for a section or link labeled something like “Ubuntu online tour”.

#3 Start the Online Tour

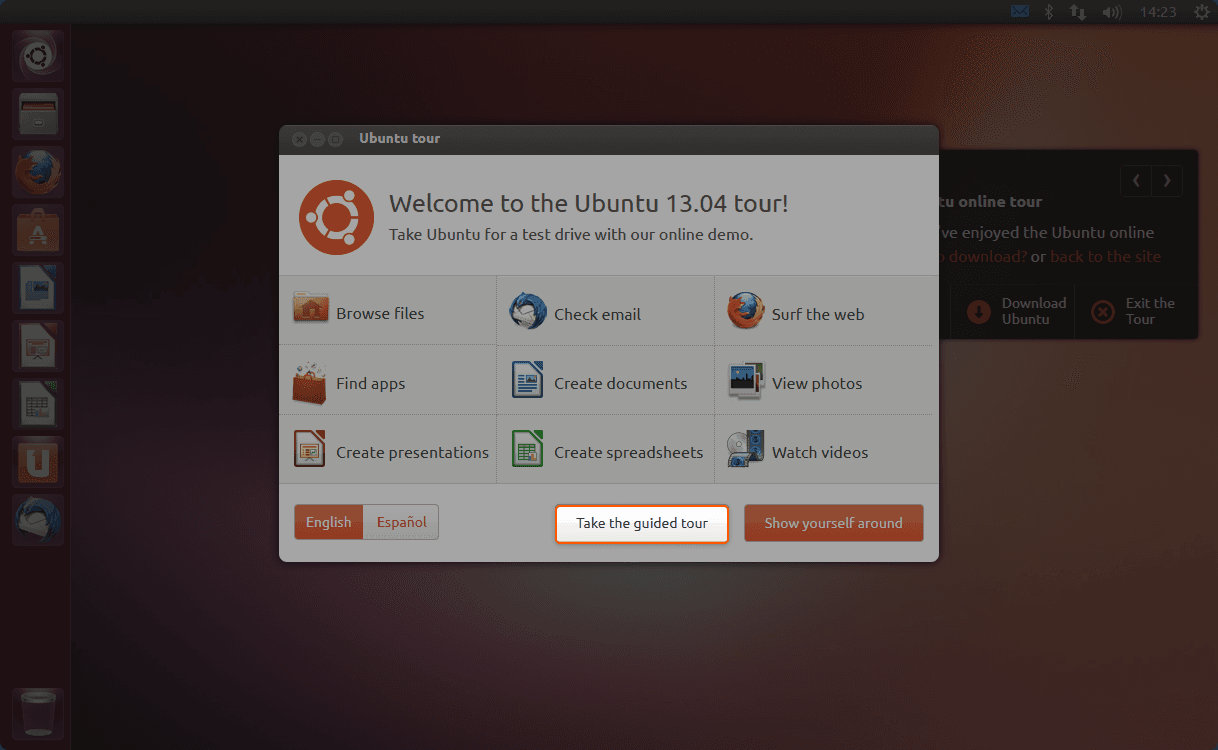

To access the online tour, click the link or section you found. This loads a simulated version of Ubuntu in your browser. The simulation gives you a sense of the operating system, but some features may not be fully functional. To begin, select “Take the guided tour”.

#4 Explore the Interface

Use the guided tour to familiarize yourself with the desktop environment. Click on icons, open applications, and navigate the system as you would on an actual desktop.

#5 Test Basic Applications

Try opening some of the default applications like Movie Player, LibreOffice, the file manager, or settings. Remember, since this is an online demo, not everything will work as it does on an actual installation.

#6 Consider Downloading for a Full Experience

If you enjoyed the online tour and want to dive deeper, consider downloading the Ubuntu ISO file and creating a Live USB. This allows you to boot Ubuntu on your computer without installing it, giving you a more genuine experience than the online demo.
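Before writing a downloaded ISO to a USB drive, it is worth confirming that the file arrived intact. The sketch below is one possible way to do that in Python, not an official Ubuntu tool. It assumes you have saved a checksum list next to the ISO, with lines in the form `<sha256 hex> *<file name>`. The function names and any file names you pass in are hypothetical and only for illustration.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read fixed-size chunks so a multi-gigabyte ISO never sits in memory at once.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def expected_digest(sums_text, file_name):
    """Find file_name in checksum-list text; assumed line format: '<hex> *<name>'."""
    for line in sums_text.splitlines():
        parts = line.split(maxsplit=1)
        if len(parts) == 2 and parts[1].strip().lstrip("*") == file_name:
            return parts[0].lower()
    return None  # no entry for this file


def iso_matches(iso_path, sums_path):
    """True only when the ISO's digest equals the entry listed in the checksum file."""
    wanted = expected_digest(Path(sums_path).read_text(), Path(iso_path).name)
    return wanted is not None and sha256_of_file(iso_path) == wanted


if __name__ == "__main__":
    import sys

    # Hypothetical usage: python verify_iso.py <path-to-iso> <path-to-checksum-list>
    if len(sys.argv) == 3:
        ok = iso_matches(sys.argv[1], sys.argv[2])
        print("checksum OK" if ok else "checksum mismatch or entry missing")
```

If the check reports a mismatch, download the ISO again before creating the Live USB, because a corrupted image may fail to boot.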

That’s it! You have now experienced some of Ubuntu’s basic features. Keep in mind that the online tour offers only a glimpse of Ubuntu: it doesn’t capture the speed, responsiveness, and complete feature set of an actual installation or live session. If you’re considering Ubuntu, we recommend testing it via a Live USB or virtual machine for a more in-depth understanding of what the OS provides.

Conclusion

Want to run Ubuntu in a virtual environment? With Gcore Cloud, you can choose from Basic VM, Virtual Instances, or VPS/VDS suitable for Ubuntu:

- Gcore Basic VM offers shared virtual machines from €3.2 per month

- Virtual Instances are virtual machines with a variety of configurations and an application marketplace

- Virtual Dedicated Servers provide outstanding speed of 200+ Mbps in 20+ global locations
