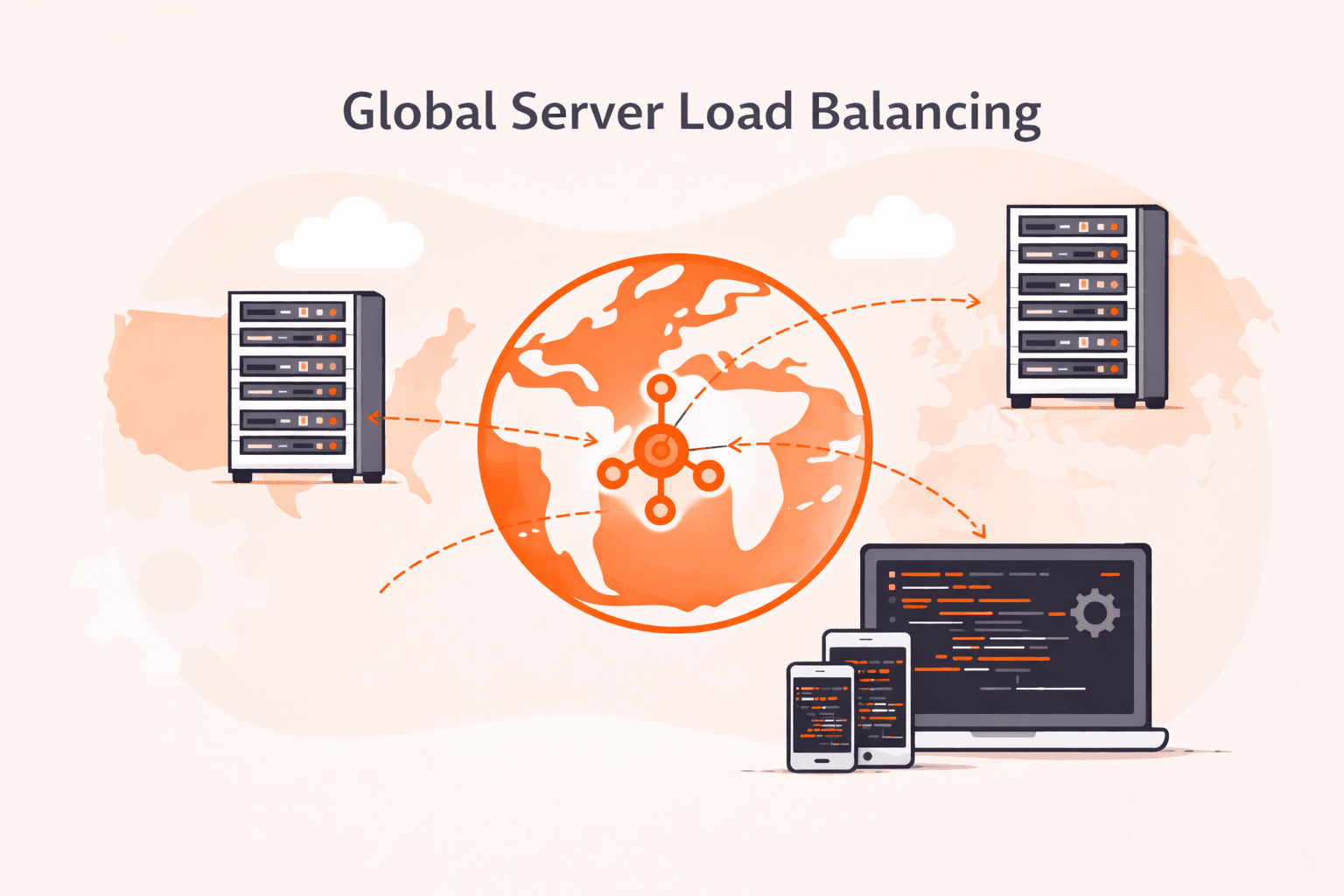

Load balancing automatically distributes incoming network traffic across multiple compute resources according to specified rules. Modern applications can generate millions of requests per second, which makes efficient distribution critical: sharing the load boosts performance and keeps any one resource from becoming overwhelmed.

Load balancing algorithms fall into two primary categories: static and dynamic. Static algorithms follow predetermined rules that don't change based on current server conditions. Dynamic algorithms adapt their distribution decisions based on real-time server metrics like CPU usage, memory consumption, and active connections.

Each category contains multiple specific methods suited to different infrastructure needs.

Static load balancing algorithms include techniques like round-robin, weighted round-robin, and IP hash. Round-robin distributes requests sequentially across servers in a fixed order, sending each new request to the next resource in turn regardless of its current load. Weighted variations assign different capacities to servers based on their hardware specifications, sending proportionally more traffic to more powerful machines.

IP hash methods consistently route traffic from the same client IP address to the same resource.
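Here's a minimal Python sketch of IP hash, assuming a simple hash-and-modulo scheme over a fixed list of servers. The function name `ip_hash_pick` and the server names are hypothetical, and real load balancers may use different hash functions.

```python
import hashlib


def ip_hash_pick(client_ip: str, servers: list[str]) -> str:
    """Map a client IP address to one server using a stable hash."""
    if not servers:
        raise ValueError("server pool is empty")
    # Use a deterministic digest: Python's built-in hash() for strings is
    # randomized per process, so it would not give stable routing.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
# The same client IP lands on the same server as long as the pool is unchanged.
server = ip_hash_pick("203.0.113.7", servers)
```

One design caveat: with plain modulo hashing, adding or removing a server changes `len(servers)` and remaps most clients to different servers. Consistent hashing is the usual technique for limiting that reshuffling.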

Dynamic load balancing algorithms adjust traffic distribution based on current server performance data. These methods monitor metrics like response time, active connection count, and resource availability in real time. Least connections routes new requests to the server handling the fewest active sessions. Least response time directs traffic to the fastest-responding server.
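The least connections idea fits in a few lines of Python. This is a sketch that assumes the caller reports when each request finishes; the class and method names are hypothetical.

```python
class LeastConnectionsBalancer:
    """Route each request to the server with the fewest active connections."""

    def __init__(self, servers):
        # Active connection count per server, updated as requests start and finish.
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # min() returns the first server in insertion order among ties.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a request completes so the count reflects real load.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
a = lb.acquire()   # "app-1" (all servers tied at 0)
b = lb.acquire()   # "app-2"
lb.release(a)      # app-1 finishes its request
c = lb.acquire()   # "app-1": tied with app-3 at 0 active, wins on order
```

Unlike round-robin, the choice depends on how many requests are still in flight, so a server stuck on slow requests stops receiving new ones until it catches up.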

Major cloud providers report that dynamic algorithms can improve response times by 40 to 60% compared to static methods during traffic spikes.

Choosing the right load balancing algorithm directly affects application performance, user experience, and infrastructure costs. A well-matched algorithm keeps response times low under load and helps prevent costly downtime during traffic surges.

What are load balancing algorithms?

Load balancing algorithms are methods that determine how incoming network traffic or computational workloads get distributed across multiple servers or resources. These algorithms make real-time decisions about which server should handle each request based on specific criteria like current server load, response time, or geographic location.

The choice of algorithm directly impacts application performance. Different methods suit different scenarios. Round-robin works well for servers with similar capacity, while least connections excels when request processing times vary widely.

Modern implementations can process routing decisions in microseconds. This allows systems to handle millions of requests per hour while maintaining optimal resource usage across all available servers.

What are the different types of load balancing algorithms?

Here are the main types of load balancing algorithms you'll encounter.

- Round-robin: This algorithm distributes requests sequentially across all available servers in rotation. Each server receives an equal number of requests, making it simple and effective for servers with similar capacity.

- Least connections: The load balancer directs traffic to the server currently handling the fewest active connections. This works well when requests vary in processing time and complexity.

- Weighted round-robin: You assign weight values to each server based on capacity or performance, and servers with higher weights receive proportionally more requests than those with lower weights. This works well when you're mixing bare metal servers with different hardware specs.

- IP hash: The load balancer uses the client's IP address to determine which server receives the request. This creates consistent routing, sending the same client to the same server each time.

- Least response time: Traffic goes to the server with the fastest response time and fewest active connections. This balances both server load and performance to improve user experience.

- Random: The algorithm selects servers randomly for each incoming request. This distributes load roughly evenly over time and works best with homogeneous server groups.

- Weighted least connections: This combines connection count with server capacity weights. Servers with higher capacity receive more connections, while the algorithm still considers current load levels.
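To make weighted least connections concrete, here's a Python sketch that assumes each server's weight is a positive integer reflecting its relative capacity. It picks the server with the lowest ratio of active connections to weight; the `Backend` type and the function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    weight: int       # relative capacity, e.g. 3 for a server with 3x the resources
    active: int = 0   # current active connections


def pick_weighted_least_connections(backends: list[Backend]) -> Backend:
    """Choose the backend with the lowest active-connections-to-weight ratio."""
    candidates = [b for b in backends if b.weight > 0]
    if not candidates:
        raise ValueError("no backend with a positive weight")
    return min(candidates, key=lambda b: b.active / b.weight)


pool = [Backend("big", weight=3, active=5), Backend("small", weight=1, active=2)]
chosen = pick_weighted_least_connections(pool)  # big: 5/3 ≈ 1.67, small: 2/1 = 2.0
chosen.active += 1                              # the new connection goes to "big"
```

Plain least connections would have chosen `small` here (2 connections versus 5); dividing by weight accounts for `big` having three times the capacity.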

How do static load balancing algorithms work?

Static load balancing algorithms distribute incoming network traffic across multiple servers using predetermined rules that don't change during operation. The algorithm assigns requests based on fixed criteria you establish when configuring the system, such as server capacity, geographic location, or a simple rotation pattern.

These algorithms follow consistent distribution patterns. Round-robin cycles through each server in sequence. It sends request one to server A, request two to server B, and so on.
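The rotation described above maps directly onto Python's `itertools.cycle`. This is a minimal, single-threaded sketch (a real balancer would need locking if several threads share it), and the class name is hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out servers in a fixed rotation, ignoring their current load."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("server pool is empty")
        self._rotation = cycle(servers)

    def next_server(self):
        return next(self._rotation)


lb = RoundRobinBalancer(["A", "B", "C"])
picks = [lb.next_server() for _ in range(5)]  # ['A', 'B', 'C', 'A', 'B']
```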

Weighted distribution assigns more traffic to servers with higher capacity. If server A has twice the resources of server B, it receives twice the requests. IP hash algorithms map client IP addresses to specific servers, ensuring users consistently connect to the same backend server.
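One common way to implement weighted distribution is sometimes called smooth weighted round-robin: on each pick every server adds its weight to a running score, the highest score wins, and the winner is charged the total weight. The Python sketch below assumes static integer weights and hypothetical server names. With server A weighted 2 and server B weighted 1, A receives two of every three requests, interleaved with B's rather than in one block.

```python
class SmoothWeightedRoundRobin:
    """Send requests to servers in proportion to fixed weights."""

    def __init__(self, weights: dict[str, int]):
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self.weights = dict(weights)
        self.current = {name: 0 for name in weights}
        self.total = sum(weights.values())

    def next_server(self) -> str:
        # Raise every server's running score by its weight...
        for name, weight in self.weights.items():
            self.current[name] += weight
        # ...pick the highest score, then charge the winner the total weight.
        best = max(self.current, key=self.current.get)
        self.current[best] -= self.total
        return best


lb = SmoothWeightedRoundRobin({"A": 2, "B": 1})
picks = [lb.next_server() for _ in range(6)]  # ['A', 'B', 'A', 'A', 'B', 'A']
```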

The main advantage is simplicity. Static algorithms require minimal processing overhead because they don't monitor real-time server performance or adjust to changing conditions.

You set the rules once, and the system applies them automatically.

However, this approach has limitations. If one server becomes overloaded or fails, the algorithm won't adapt automatically. You'll need to manually update the configuration to respond to changes in traffic patterns or server availability.

This makes static load balancing best suited for environments with predictable traffic and stable server infrastructure.

How do dynamic load balancing algorithms work?

Dynamic load balancing algorithms work by continuously monitoring server performance and automatically distributing incoming traffic based on real-time conditions. These algorithms collect metrics like CPU usage, memory consumption, active connections, and response times from each server in your pool. When a new request arrives, the algorithm evaluates current server states and routes the request to the server best positioned to handle it without degrading performance.

The process happens in milliseconds.

The load balancer queries health endpoints on each server, typically every 2 to 5 seconds, to build an updated picture of available capacity. Popular algorithms include least connections (routes to servers with the fewest active sessions), weighted least connections (combines active sessions with server capacity ratings), and least response time (sends traffic to the fastest-responding server). More advanced algorithms combine multiple metrics. For example, they'll check both CPU load and active connections before making routing decisions.
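A multi-metric decision can be sketched as a scoring function. The example below is an illustration, not how any particular product works: it assumes each health check reports CPU utilization (0.0 to 1.0) and active connections, and it blends them with equal, hypothetical weights.

```python
from dataclasses import dataclass


@dataclass
class ServerMetrics:
    name: str
    cpu: float       # CPU utilization from the latest health check, 0.0 to 1.0
    active: int      # active connections
    max_conns: int   # configured connection capacity (hypothetical parameter)


def score(m: ServerMetrics, cpu_weight: float = 0.5, conn_weight: float = 0.5) -> float:
    """Lower is better: blend CPU load with the share of connection slots in use."""
    return cpu_weight * m.cpu + conn_weight * (m.active / m.max_conns)


def pick(servers: list[ServerMetrics]) -> ServerMetrics:
    return min(servers, key=score)


servers = [
    ServerMetrics("a", cpu=0.9, active=10, max_conns=100),  # score 0.45 + 0.05 = 0.5
    ServerMetrics("b", cpu=0.4, active=40, max_conns=100),  # score 0.20 + 0.20 = 0.4
]
chosen = pick(servers)
```

Least connections alone would pick `a` (10 versus 40 connections), but `a` is running at 90% CPU, so the blended score sends the request to `b`.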

Modern implementations adapt to changing conditions automatically.

If a server's response time jumps from 50ms to 200ms, the algorithm starts sending less traffic to that node. When traffic spikes occur, the system can trigger auto-scaling to add servers, then redistribute load across the expanded pool. This real-time adjustment prevents any single server from becoming overwhelmed while others sit underused.
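The reaction to a latency jump can be illustrated with an exponentially weighted moving average (EWMA) of response times. This Python sketch assumes a smoothing factor of 0.3 and ranks servers by smoothed latency multiplied by (active connections + 1); both choices are illustrative assumptions rather than a standard formula, and the names are hypothetical.

```python
class LeastResponseTime:
    """Prefer servers with low smoothed latency and few active connections."""

    def __init__(self, servers, alpha: float = 0.3):
        self.alpha = alpha                           # weight of the newest sample (assumed)
        self.latency_ms = {s: 0.0 for s in servers}  # EWMA of observed latency
        self.active = {s: 0 for s in servers}        # maintained by the caller

    def record(self, server, observed_ms: float):
        prev = self.latency_ms[server]
        if prev == 0.0:
            # First sample: start the average at the observed value.
            self.latency_ms[server] = observed_ms
        else:
            # Newer samples count more, so a slowdown shows up within a few requests.
            self.latency_ms[server] = self.alpha * observed_ms + (1 - self.alpha) * prev

    def pick(self):
        # Combine speed and load: smoothed latency scaled by (active + 1).
        return min(self.latency_ms,
                   key=lambda s: self.latency_ms[s] * (self.active[s] + 1))


lb = LeastResponseTime(["node-a", "node-b"])
lb.record("node-a", 50)
lb.record("node-b", 60)
first = lb.pick()          # "node-a": 50 ms beats 60 ms
lb.record("node-a", 200)   # latency jumps; the EWMA rises to about 95 ms
second = lb.pick()         # "node-b": 60 ms now beats about 95 ms
```

Servers with no samples yet score 0 and are picked first, which doubles as a simple way to probe newly added nodes.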

What are the benefits of using load balancing algorithms?

Load balancing algorithms deliver real advantages by distributing network traffic and workloads across multiple servers or resources. Here's what you gain.

- Improved performance: Load balancing spreads requests across multiple servers so no single server gets overwhelmed. Response times stay low, and your applications run smoothly even during traffic spikes.

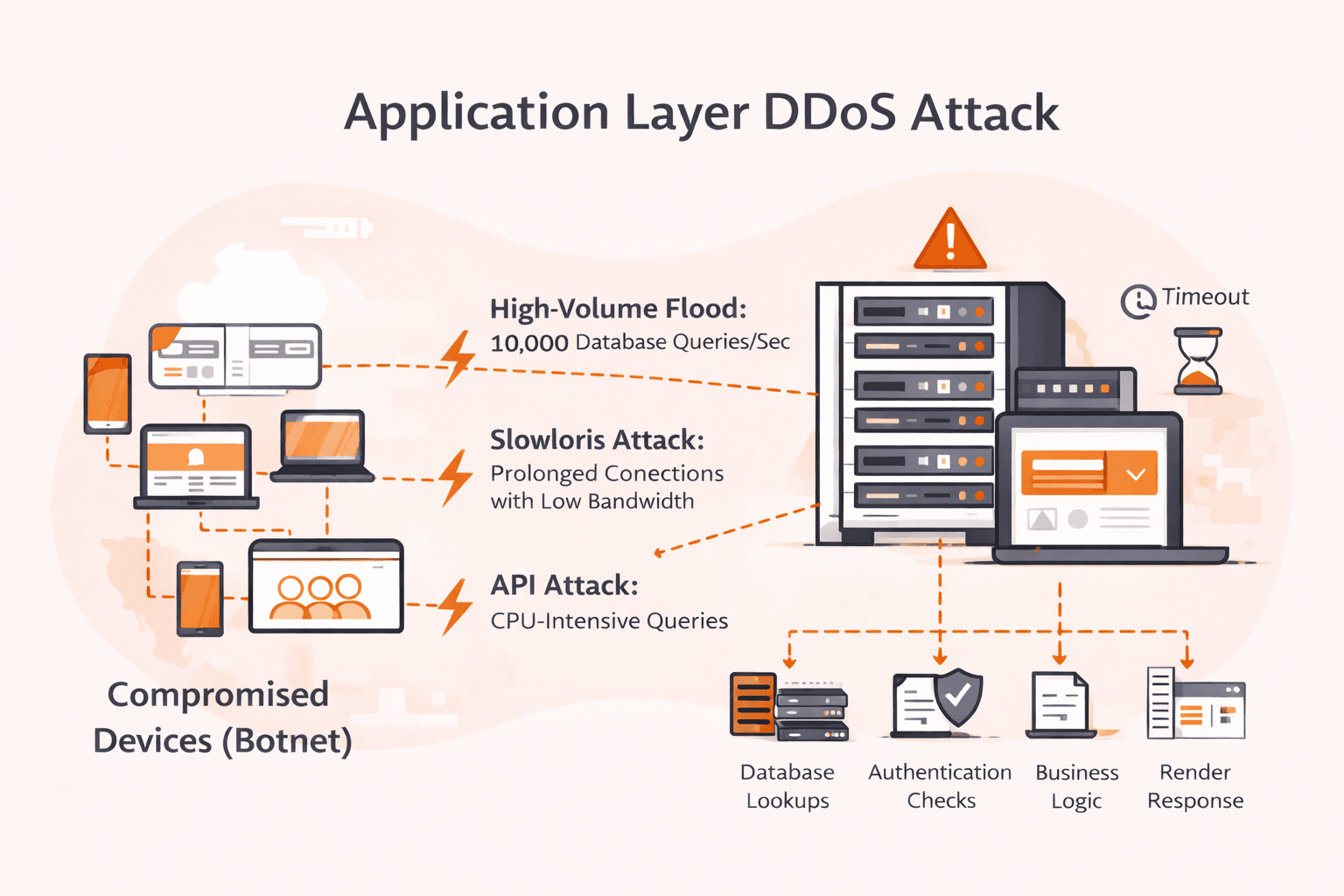

- Higher availability: When a server fails, the algorithm redirects traffic to healthy servers automatically. Combined with a DDoS protection service, your application stays online without interruption, protecting against both infrastructure failures and malicious traffic that could cause downtime and revenue loss.

- Better scalability: Add or remove servers as demand changes without disrupting service. The algorithm detects new resources and adjusts traffic distribution on its own.

- Resource efficiency: Load balancing algorithms ensure each server handles work appropriate to its capacity. No more idle servers sitting alongside overloaded ones.

- Geographic optimization: Algorithms route users to the nearest server location, reducing latency by 50 to 70%. This proximity improves user experience, especially for global applications.

- Cost savings: Even workload distribution means you don't need to over-provision servers for peak loads. Organizations typically cut infrastructure costs by 30 to 40% through better resource use.

- Flexible maintenance: Take servers offline for updates or repairs while the algorithm redirects traffic to remaining servers. You'll get zero-downtime maintenance windows for critical updates.
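Proximity routing can be approximated by great-circle distance. The Python sketch below assumes a hypothetical set of regions with approximate coordinates and a client location that has already been resolved (for example, from a GeoIP lookup). Production global load balancers often rely on measured latency rather than distance alone.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in decimal degrees."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


# Hypothetical regions with approximate coordinates (latitude, longitude).
REGIONS = {
    "frankfurt": (50.11, 8.68),
    "ashburn": (39.04, -77.49),
    "singapore": (1.35, 103.82),
}


def nearest_region(lat, lon):
    return min(REGIONS, key=lambda name: haversine_km(lat, lon, *REGIONS[name]))
```

For a client in Paris (48.86, 2.35), `nearest_region` returns `"frankfurt"`; a client in Tokyo (35.68, 139.69) goes to `"singapore"`.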

How to choose the right load balancing algorithm?

You choose the right load balancing algorithm by matching your application's specific needs (traffic patterns, session requirements, and server capabilities) to the algorithm's strengths.

- First, determine if your application requires session persistence (sticky sessions). If users need to stay connected to the same server throughout their session, choose IP hash or least connections with session affinity rather than round-robin.

- Next, evaluate your server capacity and performance differences. Round-robin works well when all servers have identical hardware and processing power. If servers have different CPU, memory, or processing speeds, choose weighted round-robin or weighted least connections to distribute load in proportion to capacity.

- Then, analyze your traffic patterns and request complexity. Round-robin handles distribution effectively for applications with quick, uniform requests like static content delivery, though CDN providers often handle this more efficiently at the edge. For compute-intensive tasks with varying processing times, least connections prevents server overload by directing new requests to the least busy server.

- Test response times under your typical load conditions. If response time varies by more than 30%, least response time algorithms direct traffic to the fastest-responding servers and improve overall performance.

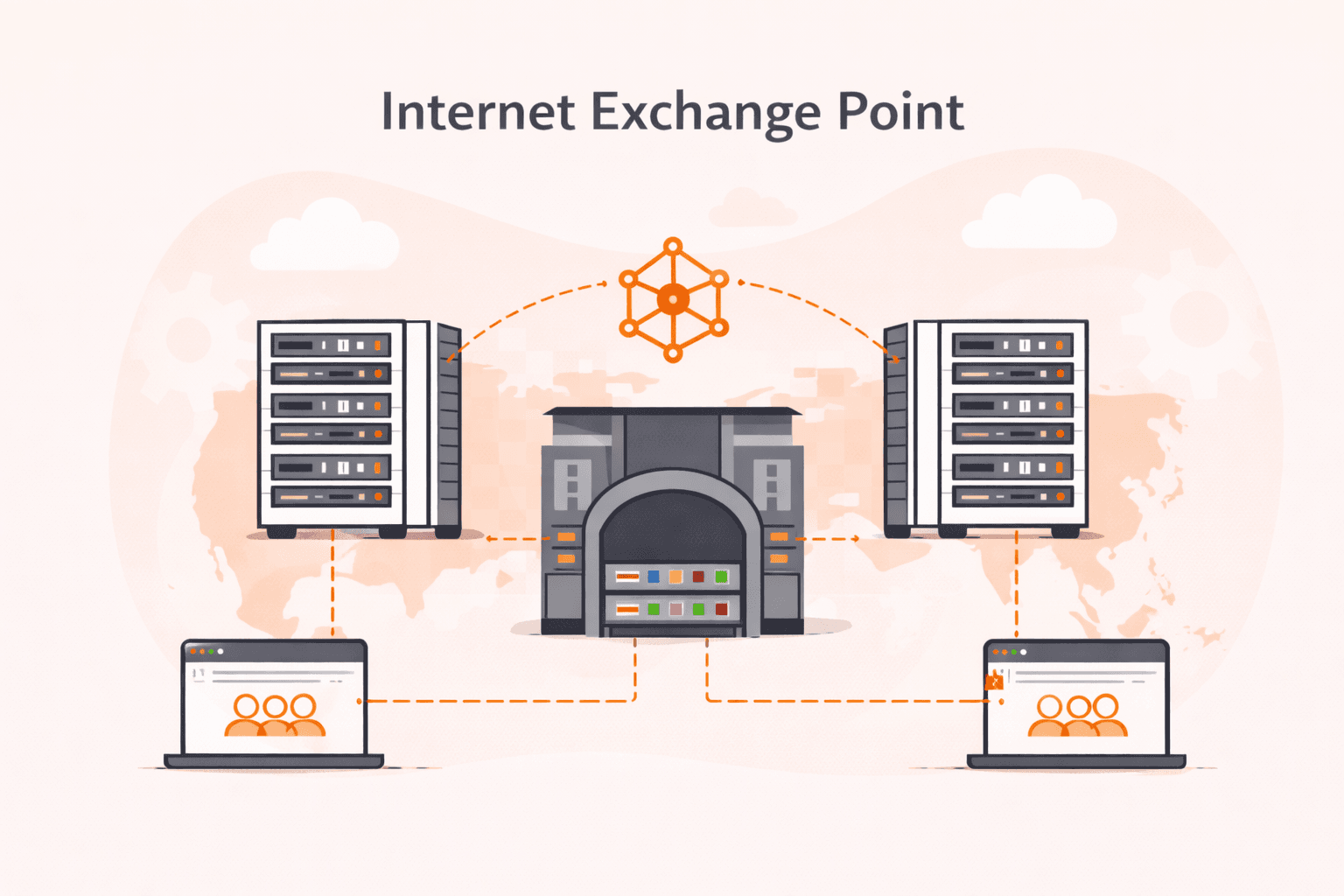

- Consider geographic distribution if you operate multiple data centers. Global server load balancing uses geographic or proximity-based algorithms to route users to the nearest server, reducing latency by 40-60% compared to random distribution.

- Finally, run load tests with your chosen algorithm for at least 48 hours under production-like conditions. Monitor server CPU usage, response times, and connection distribution to verify the algorithm handles your actual traffic patterns effectively.

Start with simpler algorithms like round-robin. Only move to complex options when monitoring data shows clear performance gaps.
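If it helps to see the checklist as logic, here's a rough Python encoding of its first three steps. The function and its return labels are hypothetical, and it deliberately ignores response-time testing and geography; treat its answer as a starting point for the load tests described above, not a verdict.

```python
def suggest_algorithm(sticky_sessions: bool, mixed_hardware: bool,
                      variable_request_times: bool) -> str:
    """Map answers to a few checklist questions onto a starting algorithm."""
    if sticky_sessions:
        # Users must stay on one server (least connections with session
        # affinity is the other option the checklist mentions).
        return "ip_hash"
    if variable_request_times:
        # Long-running requests make connection counts a better load signal.
        return "weighted_least_connections" if mixed_hardware else "least_connections"
    if mixed_hardware:
        # Uneven hardware but uniform requests: fixed capacity weights suffice.
        return "weighted_round_robin"
    # Identical servers and quick, uniform requests.
    return "round_robin"
```

With every answer `False`, the function returns `"round_robin"`, in line with the advice to start simple.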

What are the best practices for implementing load balancing algorithms?

Best practices for implementing load balancing algorithms are proven methods that keep traffic distribution across multiple servers efficient and reliable. The key practices are listed below.

- Health monitoring: Set up continuous health checks to track server status and performance in real time. This automatically removes failed servers from the pool, preventing traffic from routing to unavailable resources.

- Session persistence: Configure sticky sessions when your applications require users to connect to the same server throughout their interaction. This maintains user state and prevents data loss during active sessions.

- Traffic distribution rules: Define clear criteria for how traffic splits across servers based on your specific workload patterns. Match your algorithm choice to application needs: round-robin works well for simple distribution, while least connections suits varied request durations.

- Failover configuration: Build redundant load balancers to avoid single points of failure in your infrastructure. Set up automatic failover that switches to backup systems within seconds when primary systems fail.

- Performance metrics: Track response times, connection counts, and throughput rates across all servers in your pool. Use this data to adjust weight distributions and identify capacity bottlenecks before they impact your users.

- Capacity planning: Monitor resource use patterns and plan for traffic spikes by maintaining 20–30% spare capacity. Scale your server pools before reaching 70% capacity to maintain consistent performance.

- Security integration: Apply rate limiting and DDoS protection at the load balancer level to filter malicious traffic before it reaches your application servers. Configure SSL termination to reduce encryption overhead on backend systems.
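Health monitoring is often implemented with consecutive-result thresholds so that a single slow check doesn't eject a server. This Python sketch assumes a server is marked down after three failed checks in a row and back up after two passes; the class name and thresholds are illustrative.

```python
class HealthTracker:
    """Mark a server down after `fall` consecutive failures, up after `rise` passes."""

    def __init__(self, servers, fall: int = 3, rise: int = 2):
        self.fall, self.rise = fall, rise
        self.healthy = {s: True for s in servers}
        self._streak = {s: 0 for s in servers}  # consecutive results contradicting current state

    def report(self, server, check_passed: bool):
        if check_passed == self.healthy[server]:
            self._streak[server] = 0            # result agrees with current state
            return
        self._streak[server] += 1
        limit = self.rise if check_passed else self.fall
        if self._streak[server] >= limit:
            self.healthy[server] = check_passed
            self._streak[server] = 0

    def pool(self):
        # Only healthy servers are eligible for routing.
        return [s for s, ok in self.healthy.items() if ok]


tracker = HealthTracker(["app-1", "app-2"])
for _ in range(3):
    tracker.report("app-2", check_passed=False)
print(tracker.pool())  # ['app-1']
```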

How do load balancing algorithms compare to each other?

Load balancing algorithms differ in how they distribute traffic, what information they use to make decisions, and which use cases they handle best. Each algorithm follows different logic to route requests across servers.

Round-robin rotates through servers in order. Each new request goes to the next server in line. It's simple and works well when all servers have equal capacity and requests need similar resources.

Least Connections tracks active sessions and routes new requests to servers with the fewest current connections. This performs better than round-robin when request processing times vary, since it prevents overloading servers that are handling complex tasks.

IP Hash creates a hash from the client's IP address to determine which server receives the request. This keeps clients connected to the same server, which helps when you need session persistence.

Weighted algorithms assign different capacities to servers based on their hardware specifications. A server with twice the CPU power might receive twice the traffic. These work well in mixed environments where server capabilities differ.

Least Response Time combines connection count with server response speed to pick the fastest available server. This delivers better performance than simpler methods but requires more monitoring overhead.

Random selection distributes requests without tracking state. It scales well but can create uneven distribution in small deployments.
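Random selection is a one-liner in Python. This sketch uses a seeded generator so the demonstration is repeatable, and it compares a small batch of requests with a large one to show why randomness only evens out over volume. The names are hypothetical.

```python
import random
from collections import Counter


def pick_random(servers, rng=random):
    """Stateless selection: choose any server uniformly at random."""
    return rng.choice(servers)


rng = random.Random(42)  # seeded so the demo is repeatable
servers = ["A", "B", "C"]
small = Counter(pick_random(servers, rng) for _ in range(12))
large = Counter(pick_random(servers, rng) for _ in range(30_000))
```

With 12 requests the counts frequently stray from an even 4/4/4 split, while with 30,000 requests each server's share lands close to one third.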

The right algorithm depends on your server setup, traffic patterns, and whether you need session persistence. Most production environments benefit from least connections or least response time for dynamic workloads.

Frequently asked questions

What's the difference between static and dynamic load balancing algorithms?

Static load balancing uses fixed rules to distribute traffic. Common methods include round-robin or weighted distribution. Dynamic load balancing adjusts in real time based on current server metrics (CPU usage, response time, or active connections). Dynamic algorithms respond to changing conditions, but they require more processing overhead. Static methods are simpler and faster to execute.

Which load balancing algorithm is fastest?

Round-robin is the fastest at making routing decisions. It needs no per-server load tracking or complex calculations and simply rotates through servers sequentially. However, least connections often delivers better real-world performance by directing traffic to the least-busy server, which prevents bottlenecks when request processing times vary.

Can you use multiple load balancing algorithms together?

Yes, you can run multiple load balancing algorithms at the same time. Apply different algorithms to separate services, ports, or traffic types within the same infrastructure. Here's how it works in practice: You might use round-robin for HTTP traffic on port 80 while running least connections for database queries on port 3306. This flexibility lets you optimize each service based on its specific requirements.
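Here's a Python sketch of that setup, assuming hypothetical server pools and a simple lookup from listener port to algorithm object. Real load balancers express this in their own configuration syntax, which this code doesn't model.

```python
from itertools import cycle


class RoundRobin:
    def __init__(self, servers):
        self._it = cycle(servers)

    def pick(self):
        return next(self._it)


class LeastConnections:
    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self):
        s = min(self.active, key=self.active.get)
        self.active[s] += 1  # the caller should decrement when the connection closes
        return s


# Hypothetical pools: each listener port gets its own algorithm.
listeners = {
    80: RoundRobin(["web-1", "web-2"]),
    3306: LeastConnections(["db-1", "db-2"]),
}


def route(port: int) -> str:
    return listeners[port].pick()
```

Calling `route(80)` twice returns `web-1` and then `web-2`, while `route(3306)` sends its first connection to `db-1` and tracks database connection counts independently of the web pool.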

What is the most commonly used load balancing algorithm?

Round-robin is the most commonly used load balancing algorithm. It distributes requests sequentially across available servers in rotation. It's simple to implement and works well when servers have similar capacity and requests require similar processing time.

How does round-robin differ from least connections?

Round-robin distributes requests equally across all servers in rotation. It's straightforward and predictable. Least connections takes a different approach by routing traffic to the server currently handling the fewest active connections. Here's the key difference: least connections adapts to real-time server load. This makes it more efficient when backend response times vary significantly, since it automatically directs new requests away from busy servers.

Do load balancing algorithms affect application security?

Yes, load balancing algorithms directly affect application security. They control traffic distribution patterns, session persistence, and your exposure to attacks like DDoS. Different algorithms impact security in different ways. IP hash maintains session consistency, which strengthens authentication security. Round-robin, on the other hand, can inadvertently expose vulnerabilities if you don't configure it with proper health check monitoring and SSL termination.

What happens when a server fails during load balancing?

When a server fails during load balancing, the load balancer automatically detects the failure through health checks and redirects traffic to healthy servers within seconds. Most load balancers detect failures in 2–10 seconds, depending on your health check intervals.
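The detection time follows from simple arithmetic. Assume a server is marked down after `fall` consecutive failed checks, each check can wait up to `timeout_s`, and the timeout is shorter than the interval. The worst case is then a server that fails right after a passing check. The function name and parameters are illustrative.

```python
def worst_case_detection_seconds(interval_s: float, fall: int, timeout_s: float) -> float:
    """Upper bound on the time needed to mark a failed server down.

    Assumes the server fails just after a passing check, so the first failing
    check runs one interval later and the last of `fall` failing checks runs
    at fall * interval_s, then waits up to timeout_s before counting as failed.
    """
    return fall * interval_s + timeout_s


# 1 s interval, 2 failures, connection refused immediately (no timeout wait)
fast = worst_case_detection_seconds(1, 2, 0)   # 2
# 3 s interval, 3 failures, 1 s timeout on the final check
slow = worst_case_detection_seconds(3, 3, 1)   # 10
```

These two settings bracket the 2 to 10 second range mentioned above. Shortening the interval speeds up detection at the cost of more health-check traffic.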
