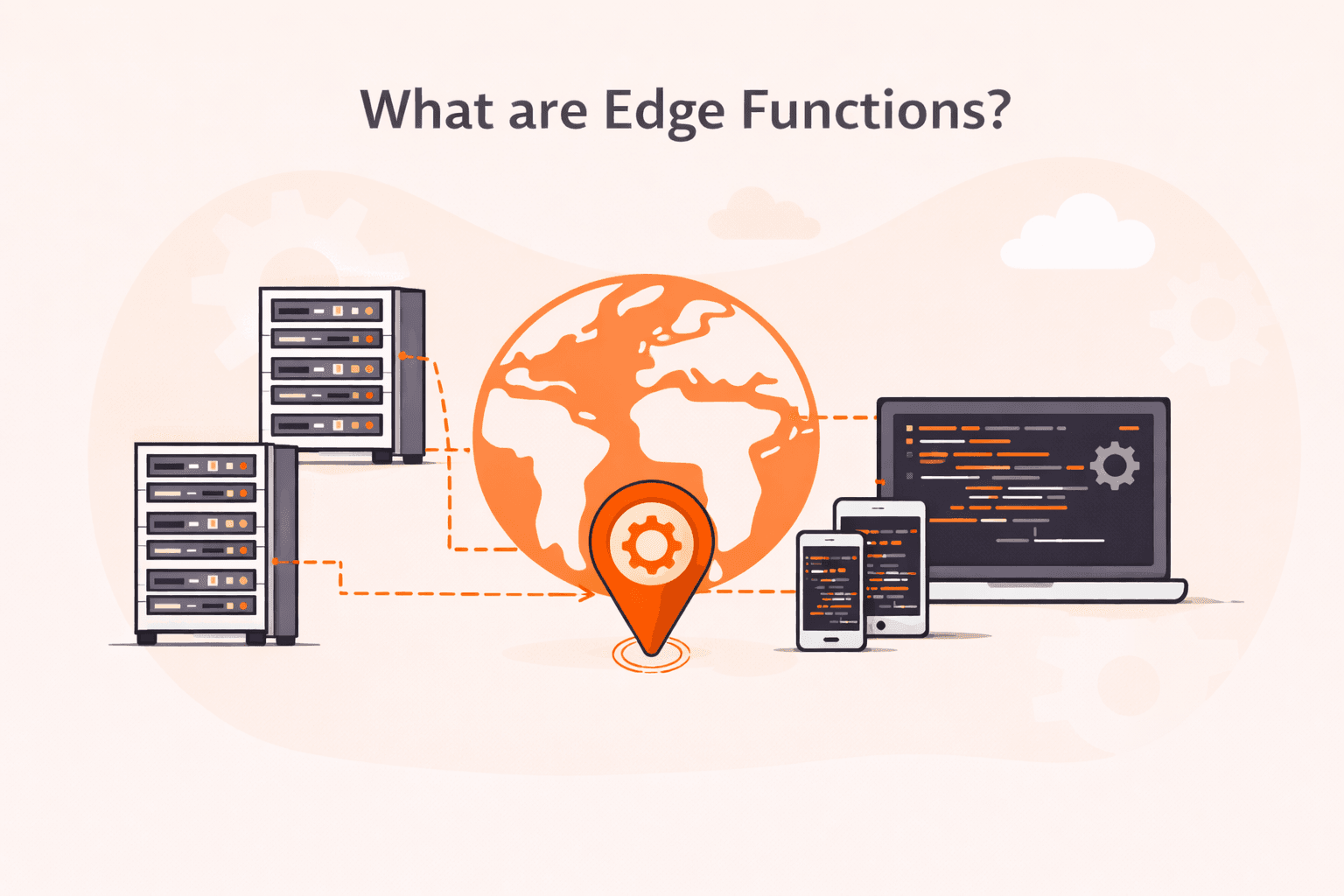

When you're building a web application, there's a good chance you've faced this problem: your server sits in one location, but your users are scattered across the globe. That means someone in Tokyo waits longer for a response than someone in Virginia. Edge functions solve this by running your code at network locations closest to each user, cutting out most of that travel time.

Think of edge functions as tiny, purpose-built programs that run on servers distributed worldwide, right at the "edge" of the network.

Instead of sending every request back to a central server, these functions execute right where your users are. They're server-side code snippets that handle custom logic like user authentication, content personalization, or A/B testing without the round trip to your origin server.

Here's what makes them different from traditional serverless functions: deployment happens globally and automatically. You write a JavaScript function, deploy it through your provider's platform (via CLI, API, or CI/CD), and it gets distributed to hundreds of edge locations.

When a user makes a request, the nearest edge location runs your code and returns a response, often in under 50 milliseconds.

The practical benefits are hard to ignore. You get faster response times because the code runs closer to users. You reduce load on your origin servers because edge functions handle requests before they reach your backend. And you can personalize content based on location, device type, or user preferences without adding latency.

Real-world use cases range from simple to complex. You might redirect users to localized content, add security headers to responses, or serve different page variants for A/B tests. Some teams handle authentication at the edge, while others process form submissions or transform images on the fly.

The key is that all of this runs close to your users, with minimal added latency, wherever they happen to be.

What are edge functions?

Edge functions are server-side code that runs on distributed servers at the network edge, closer to your end users. Compared with code running in a centralized data center, this placement reduces latency by processing requests near where they originate. You don't need to send data back to a distant origin server for every operation. Edge functions execute custom logic for tasks like content personalization, authentication, and request routing. Response times are often under 50 milliseconds.

How do edge functions work?

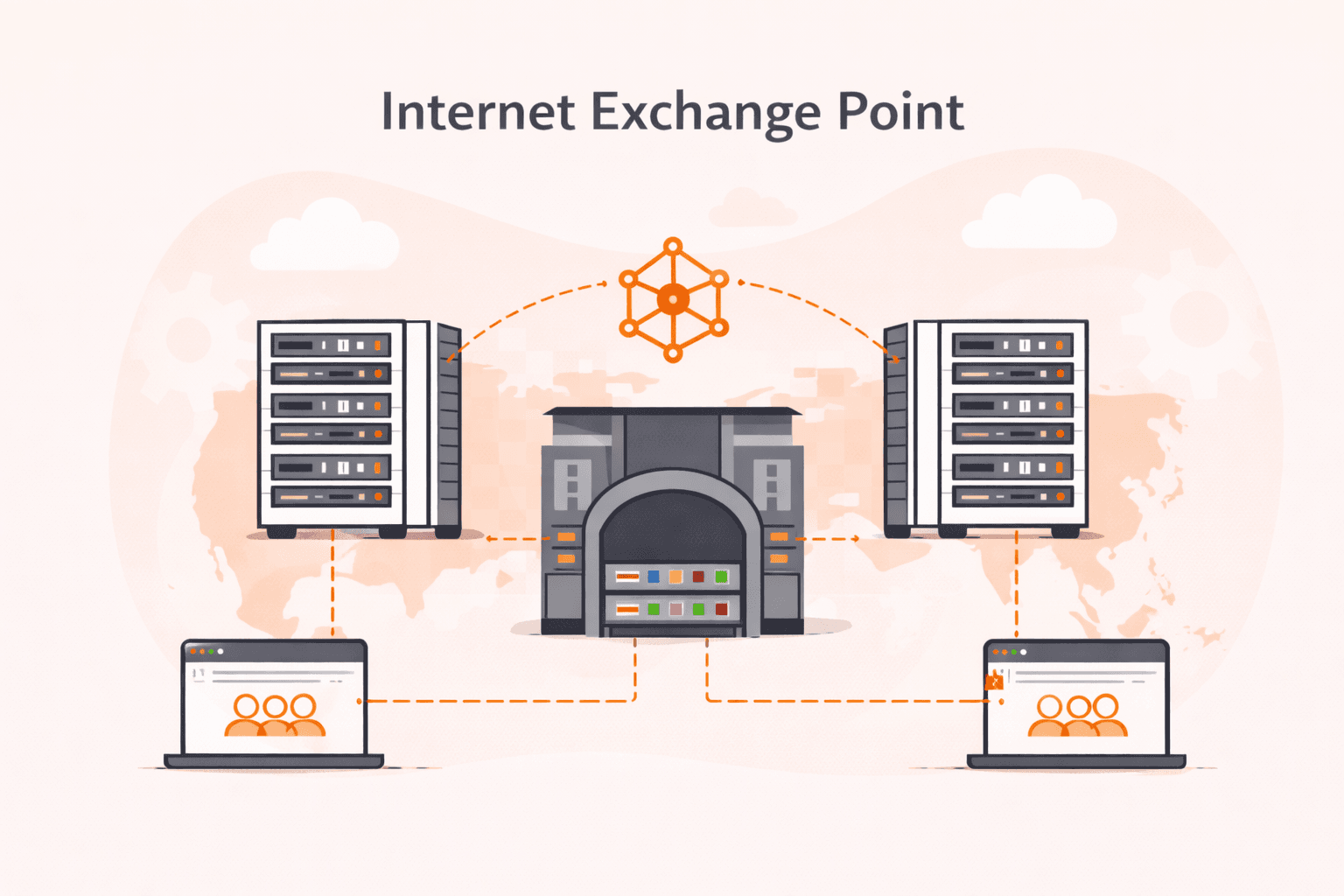

Edge functions deploy server-side code to a globally distributed network of edge servers, where it executes in response to user requests from the nearest geographic location. When a user makes a request, the edge function runs at the closest Point of Presence (PoP)—similar to how a CDN provider distributes content—rather than routing to a centralized origin server. This cuts the distance data travels and reduces latency to sub-50ms in many cases.

The process is straightforward. You write a JavaScript function and deploy it to your edge platform (via CLI, API, or a CI/CD pipeline). The platform automatically replicates this code across its global network of edge locations.

When a request comes in, the edge server intercepts it, executes your custom logic, and returns a response, often without touching your origin infrastructure at all. This happens in milliseconds because the code runs physically close to the user.
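To make that request flow concrete, here is a minimal sketch in TypeScript using the standard Fetch API `Request` and `Response` types, which many edge runtimes adopt. The function name `handleRequest` and the `/health` path are illustrative assumptions; each platform has its own way of registering a handler (for example, an exported `fetch` method).

```typescript
// Minimal edge function sketch using standard Fetch API types.
// Assumption: the platform calls handleRequest once per incoming request.

function handleRequest(request: Request): Response {
  const url = new URL(request.url);

  // Health checks are answered entirely at the edge; the origin is never contacted.
  if (url.pathname === "/health") {
    return new Response("ok", {
      status: 200,
      headers: { "content-type": "text/plain" },
    });
  }

  // Everything else gets a small JSON echo, also built at the edge.
  const body = JSON.stringify({
    method: request.method,
    path: url.pathname,
    language: request.headers.get("accept-language") ?? "unknown",
  });
  return new Response(body, {
    status: 200,
    headers: { "content-type": "application/json" },
  });
}
```

Because both branches build the response locally, neither one adds an origin round trip.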

Edge functions can modify HTTP responses, personalize content based on user location, handle authentication, or process data in real time. They're event-driven, triggering only when specific conditions are met, such as a particular URL path or request header.
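Conditional triggering can be sketched as a small route table. This is a hypothetical, hand-rolled matcher (`Route`, `dispatch`, and the `x-beta` header are made-up names); most platforms let you declare path or header triggers in configuration instead, so treat this as an illustration of the idea rather than any provider's API.

```typescript
// Sketch: run edge logic only when a path or header condition matches.
// Assumption: returning null means "forward the request to the origin unchanged".

type Handler = (request: Request) => Response;

interface Route {
  pathPrefix: string;      // trigger when the path starts with this prefix
  requiredHeader?: string; // optionally also require this header to be present
  handler: Handler;
}

const routes: Route[] = [
  {
    pathPrefix: "/api/",
    handler: () => new Response("handled at edge: api", { status: 200 }),
  },
  {
    pathPrefix: "/",
    requiredHeader: "x-beta",
    handler: () => new Response("handled at edge: beta", { status: 200 }),
  },
];

function matchRoute(request: Request): Route | undefined {
  const { pathname } = new URL(request.url);
  // First matching route wins, so order routes from most to least specific.
  return routes.find(
    (r) =>
      pathname.startsWith(r.pathPrefix) &&
      (r.requiredHeader === undefined || request.headers.has(r.requiredHeader)),
  );
}

function dispatch(request: Request): Response | null {
  const route = matchRoute(request);
  return route ? route.handler(request) : null;
}
```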

The execution environment is lightweight and isolated. This lets thousands of functions run concurrently across the network without resource conflicts.

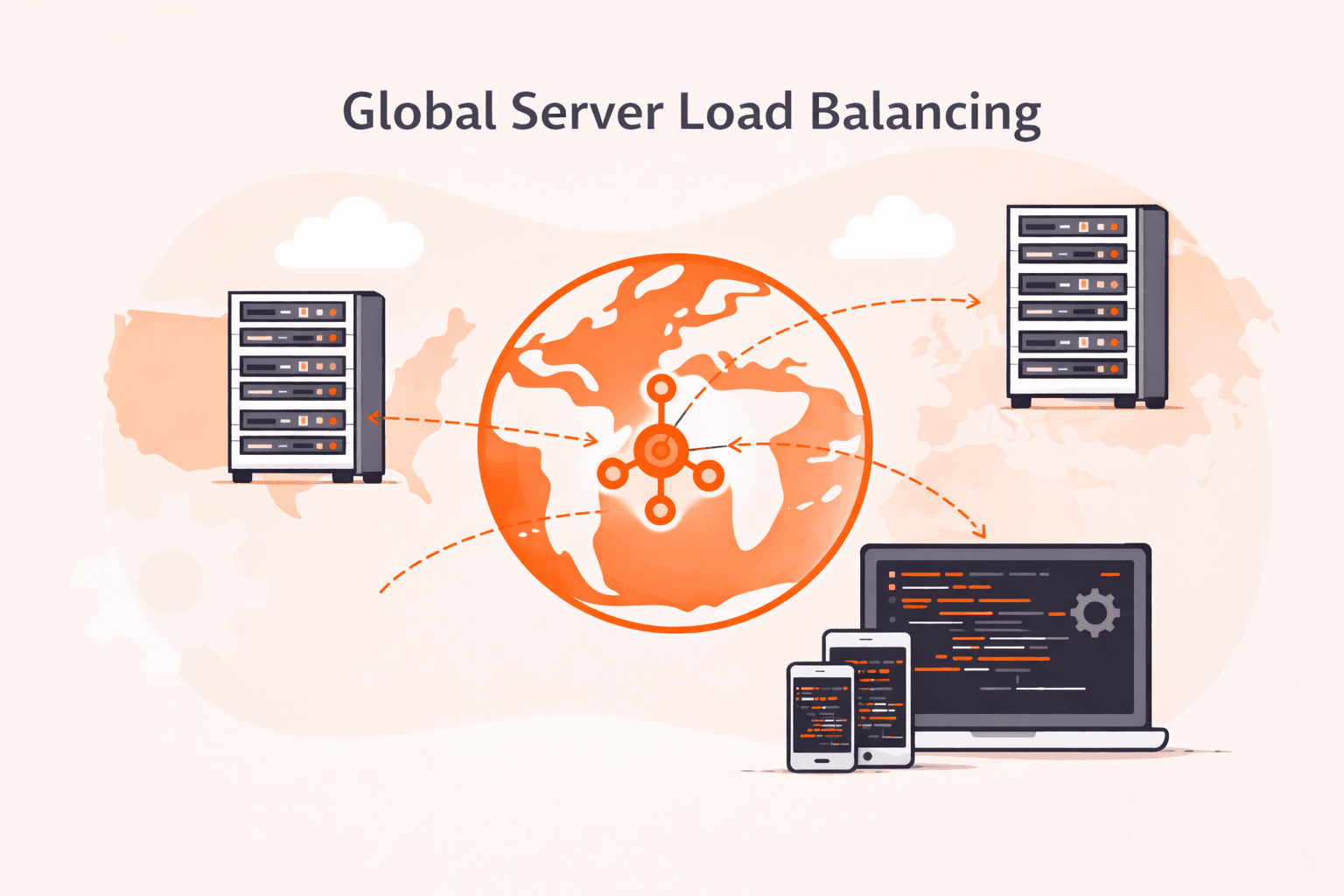

The key advantage is geographical proximity. Traditional serverless functions run in specific cloud regions, forcing requests from distant users to travel long distances. Edge functions remove this bottleneck because the same code is deployed to every edge location, so each request runs at whichever location is nearest. You get more consistent performance regardless of where users connect from.
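A back-of-the-envelope calculation shows why proximity matters. Assume light in optical fiber travels at roughly 200,000 km/s (about two-thirds of its speed in a vacuum) and that a Tokyo-to-Virginia path is roughly 11,000 km (a great-circle approximation; real fiber routes are longer). The propagation delay for a single round trip is then:

$$
t_{\text{RTT}} \approx \frac{2d}{v} = \frac{2 \times 11{,}000\ \text{km}}{200{,}000\ \text{km/s}} = 0.11\ \text{s} = 110\ \text{ms}
$$

That is a floor before any processing happens, and connection setup (TCP and TLS handshakes) adds further round trips. For an edge location 500 km away, the same formula gives 5 ms.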

What are the main benefits of edge functions?

Edge functions deliver code execution at network edge locations, giving you faster performance and lower costs. Here's what you gain by running server-side code closer to your users.

- Reduced latency: Edge functions run code nearest to users, cutting the distance data travels. For global audiences, response times can drop from around 200 ms to under 50 ms.

- Lower bandwidth costs: Processing requests at the edge reduces data transfer between origin servers and users. You save on bandwidth by handling tasks like image optimization and content transformation at edge locations.

- Simple global deployment: Edge functions distribute automatically across a provider's network without manual server configuration. Write code once and it runs worldwide, eliminating the need to manage infrastructure across multiple regions.

- Better performance: Code runs on servers near users, delivering faster page loads and improved user experience. Real-time applications like live chat and collaborative tools respond more quickly when processing happens at the edge.

- Easy personalization: Edge functions modify content based on user location, device type, or preferences before delivery. You can serve localized content, A/B test variations, or adjust layouts without round trips to origin servers.

- Cost effectiveness: Pay only for actual execution time rather than maintaining always-on servers. This serverless model reduces costs for applications with variable traffic patterns.

- Scalability: Edge functions scale automatically to handle traffic spikes without capacity planning. The distributed architecture spreads load across many locations, preventing bottlenecks at a single origin point.
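As a sketch of the personalization benefit, the function below picks a localized, device-appropriate URL before the request goes any further. It assumes the platform hands you the visitor's country code (how that data is exposed differs by provider) and uses hypothetical site conventions: `/de` and `/fr` path prefixes and a `layout=mobile` query parameter. The mobile check is a coarse user-agent heuristic, not reliable device detection.

```typescript
// Sketch: choose a localized, device-appropriate URL at the edge.
// Assumptions: `country` comes from the platform's geolocation data; the /de and
// /fr prefixes and the layout=mobile parameter are hypothetical site conventions.

const LOCALIZED_PREFIXES: Record<string, string> = { DE: "/de", FR: "/fr" };

function isMobile(userAgent: string): boolean {
  // Coarse heuristic: many mobile browsers include "Mobi" in the user-agent string.
  return userAgent.includes("Mobi");
}

function personalizedUrl(rawUrl: string, country: string | null, userAgent: string | null): string {
  const url = new URL(rawUrl);
  const prefix = LOCALIZED_PREFIXES[(country ?? "").toUpperCase()];

  // Add the locale prefix unless the path is already localized.
  if (prefix && url.pathname !== prefix && !url.pathname.startsWith(prefix + "/")) {
    url.pathname = prefix + url.pathname;
  }
  if (userAgent !== null && isMobile(userAgent)) {
    url.searchParams.set("layout", "mobile");
  }
  return url.toString();
}
```

A real handler would read the user agent from the `user-agent` header and then serve this URL from cache or origin; because the decision is made at the edge, choosing the variant costs no extra round trip.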

What are the common use cases for edge functions?

Edge functions execute server-side code at network edge locations to deliver faster, more personalized experiences. Here are the most common use cases.

- Content personalization: Edge functions modify web content based on user location, device type, or behavior without round trips to origin servers.

- A/B testing: Run experiments at the edge to test different page variants instantly for each user request. Edge functions route traffic between versions and collect performance data in real time.

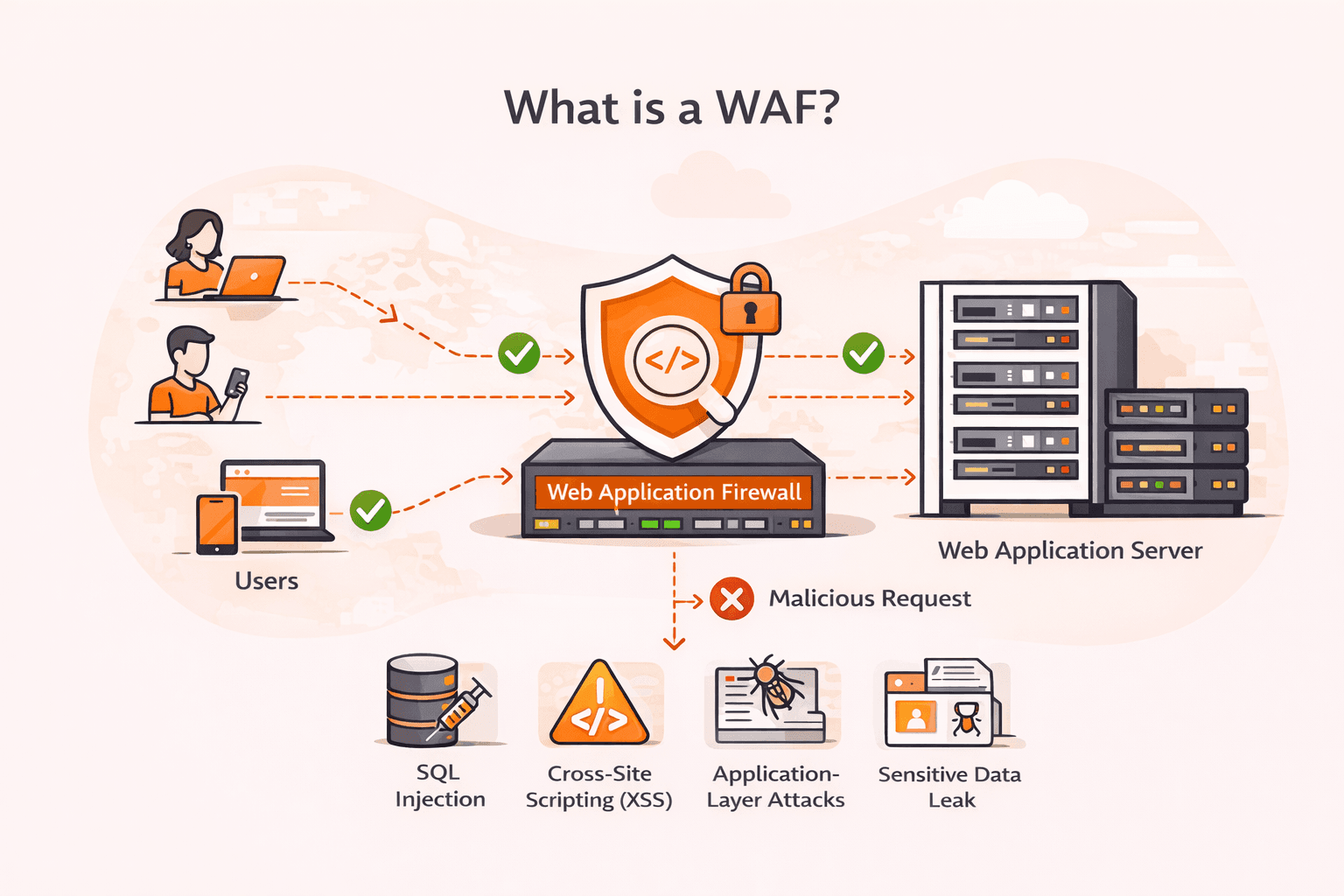

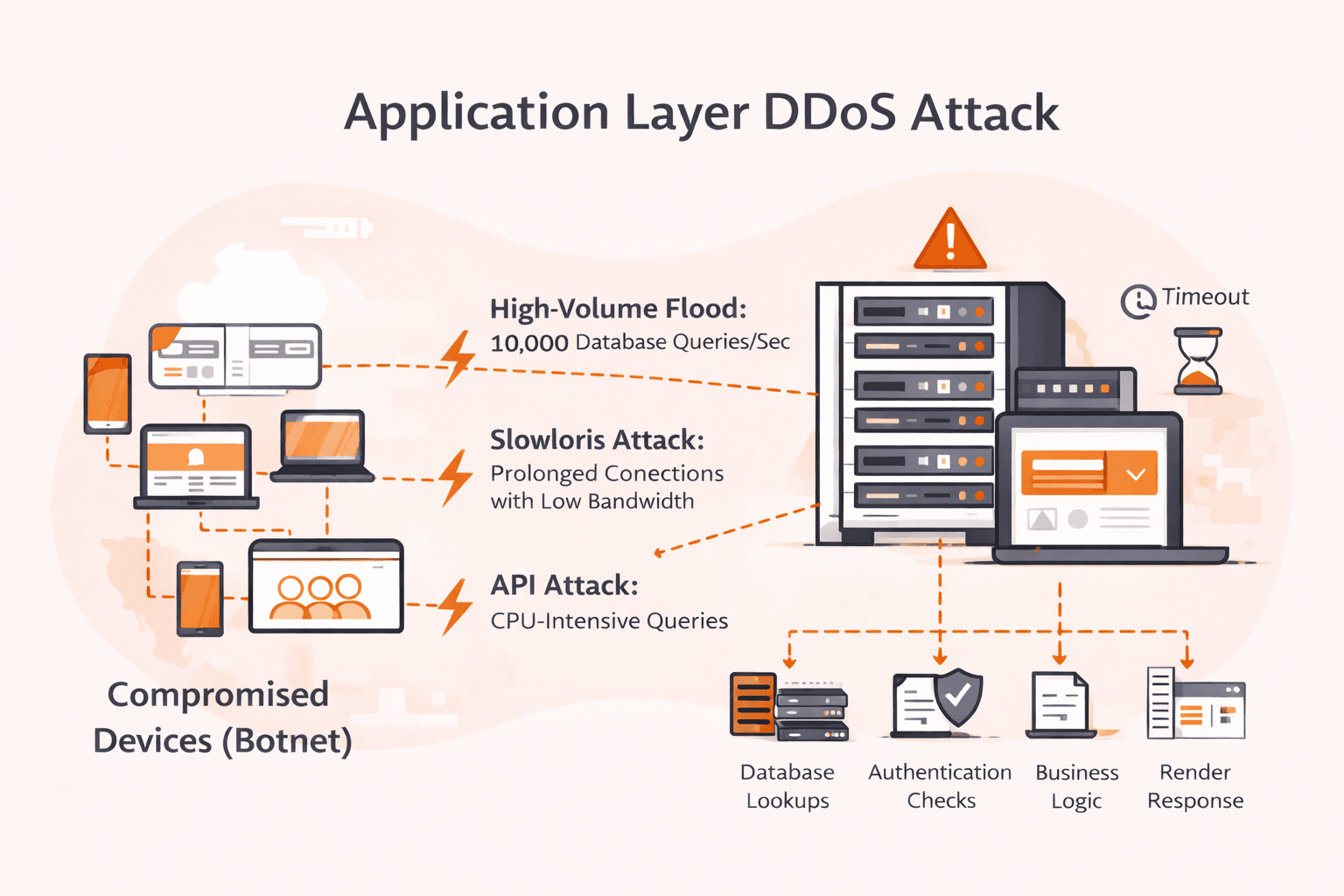

- Authentication and authorization: Security checks happen at edge locations before requests reach origin servers. This blocks unauthorized access faster and reduces load on backend systems, similar to how a DDoS protection service filters malicious traffic at the network edge.

- Image optimization: Edge functions automatically resize, compress, and convert images to the most suitable format for each device's capabilities. This cuts bandwidth usage and improves page load speeds.

- API response caching: Frequently requested API data gets cached at edge locations for faster delivery. Edge functions handle cache invalidation and refresh logic without backend involvement.

- Geolocation routing: Edge functions detect user location and serve region-specific content or redirect to local domains. This can help you meet data residency requirements.

- Bot detection: Edge functions analyze request patterns to identify and block malicious bots before they reach origin servers. This reduces infrastructure costs and improves security.

- Server-side rendering: Edge functions generate HTML from JavaScript frameworks closer to users for faster first-page loads. For full SSR benefits, consider edge-compatible databases or caching strategies, as data fetching from distant origin servers can negate latency gains.
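The A/B testing use case usually hinges on sticky assignment: a visitor should see the same variant on every request. A minimal sketch, assuming a hypothetical `ab_variant` cookie, a 50/50 split, and a 30-day lifetime, might look like this. In a handler you would pass in `request.headers.get("cookie")` and add `setCookie` to the response's `set-cookie` header.

```typescript
// Sketch: sticky A/B assignment at the edge.
// Assumptions: cookie name, split ratio, and lifetime are hypothetical choices.

type Variant = "A" | "B";

function readCookie(cookieHeader: string | null, name: string): string | undefined {
  for (const part of (cookieHeader ?? "").split(";")) {
    const [key, ...rest] = part.trim().split("=");
    if (key === name) return rest.join("=");
  }
  return undefined;
}

function assignVariant(
  cookieHeader: string | null,
  random: () => number = Math.random,
): { variant: Variant; setCookie?: string } {
  const existing = readCookie(cookieHeader, "ab_variant");
  if (existing === "A" || existing === "B") {
    // Returning visitor: keep the same variant so results stay consistent.
    return { variant: existing };
  }
  // New (or invalid) assignment: split traffic 50/50 and remember the choice.
  const variant: Variant = random() < 0.5 ? "A" : "B";
  const maxAgeSeconds = 60 * 60 * 24 * 30; // 30 days
  return {
    variant,
    setCookie: `ab_variant=${variant}; Path=/; Max-Age=${maxAgeSeconds}; SameSite=Lax`,
  };
}
```

Injecting `random` keeps the assignment testable. Hashing a stable visitor ID instead of calling `Math.random` is a common alternative when cookies aren't available.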

What are the limitations of edge functions?

Edge functions come with several constraints that can complicate development and deployment. The main challenges include difficult debugging in distributed environments, third-party API integration limits, and complex distributed database synchronization. Developers often struggle with limited visibility into code execution across hundreds of global nodes, making it harder to trace errors or monitor performance compared to centralized servers.

Third-party API integration presents a significant hurdle. Edge functions run in isolated execution contexts with strict resource limits, such as caps on CPU time and memory. These restrictions can limit the types of external services you can call reliably.

Cold start latency affects initial request performance when code hasn't run recently at a specific edge location. Platforms using V8 isolates typically have near-zero cold starts (under 5 ms), while container-based implementations may have higher initialization times. Check your provider's architecture to understand expected cold start behavior.

Database operations become more complex because edge functions need to query data sources that may be geographically distant. This can negate latency benefits unless you use specialized edge-compatible databases.

Development complexity increases because edge function environments support limited runtime features compared to full server platforms. You'll typically work with JavaScript or WebAssembly. Some Node.js APIs may not be available due to security or performance constraints.

Testing locally can't fully replicate the distributed nature of production edge deployments. This leads to surprises when code runs across different geographic regions with varying network conditions.

Cost predictability can be challenging at scale. Edge functions reduce latency and infrastructure management overhead, but high-traffic applications may see unpredictable billing. Execution spreads across many global locations, so you pay for compute time at multiple edge nodes rather than a single centralized server. This can add up quickly for applications with millions of requests per day.

How to implement edge functions effectively

You can use edge functions effectively by selecting the right provider platform, writing optimized code for distributed execution, testing thoroughly across regions, and monitoring performance continuously.

First, evaluate platforms based on your technical requirements and geographic coverage needs. Look at supported runtimes (JavaScript, WebAssembly), deployment workflow integration with your existing CI/CD pipeline, and the number of global edge locations available. More edge locations generally mean lower latency, provided they sit near your users.

Write lean code for fast cold starts and minimal execution time. Keep function sizes small (limits vary by provider, typically 1-4 MB), limit external dependencies, and be aware of CPU time restrictions that vary by provider and plan. Edge functions work best for quick operations like request routing, header manipulation, or simple data transformations. Save complex database queries for your origin servers.
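Header manipulation is a good example of the small, fast kind of function described here. This sketch adds a few common security headers to whatever the origin returns. `fetchOrigin` is a hypothetical stand-in for your platform's way of forwarding a request, and the header values are widespread defaults rather than a complete security policy.

```typescript
// Sketch: a lean edge function that adds security headers to origin responses.
// Assumptions: fetchOrigin is a hypothetical forwarding function; header values
// are common defaults, not a full policy.

const SECURITY_HEADERS: Record<string, string> = {
  "strict-transport-security": "max-age=31536000; includeSubDomains",
  "x-content-type-options": "nosniff",
  "x-frame-options": "DENY",
  "referrer-policy": "strict-origin-when-cross-origin",
};

function withSecurityHeaders(response: Response): Response {
  // Re-wrap the response: headers on fetched responses can be immutable.
  const copy = new Response(response.body, response);
  for (const [name, value] of Object.entries(SECURITY_HEADERS)) {
    copy.headers.set(name, value);
  }
  return copy;
}

async function handle(
  request: Request,
  fetchOrigin: (req: Request) => Promise<Response>,
): Promise<Response> {
  const originResponse = await fetchOrigin(request);
  return withSecurityHeaders(originResponse);
}
```

The function does no parsing or buffering of the body; it streams the origin's body through untouched, which keeps CPU time minimal.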

Test your edge functions in a staging environment that mirrors production conditions across multiple geographic regions. Verify behavior with different request types, check error handling for network failures, and confirm that authentication logic works correctly. Regional testing helps you catch latency issues or region-specific problems before they reach production.

Set up proper error handling to maintain reliability when edge nodes fail or experience issues. Build retry logic for transient failures, use circuit breakers for persistent problems, and configure fallback routes to origin servers when edge processing fails. This keeps your application running even when individual edge locations have problems.
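The retry, circuit breaker, and fallback pattern can be sketched in a few dozen lines. Everything here is an illustrative assumption: `primary` stands for the edge-side work (for example, a call to a third-party API), `fallbackToOrigin` for forwarding to your origin, and the retry count, backoff, failure threshold, and cooldown are placeholder values. Module-level state like a breaker lives only as long as a single edge instance, so each location effectively keeps its own breaker.

```typescript
// Sketch: retries with exponential backoff, a circuit breaker, and an origin fallback.
// Assumptions: primary and fallbackToOrigin are hypothetical stand-ins; all
// thresholds and delays are placeholders to tune for your platform.

class CircuitBreaker {
  private failures = 0;
  private openedAt: number | null = null;
  private readonly threshold: number;
  private readonly cooldownMs: number;
  private readonly now: () => number;

  constructor(threshold = 5, cooldownMs = 30000, now: () => number = Date.now) {
    this.threshold = threshold;   // consecutive failures before the breaker opens
    this.cooldownMs = cooldownMs; // how long to skip the primary path once open
    this.now = now;
  }

  canAttempt(): boolean {
    if (this.openedAt === null) return true;
    // After the cooldown, let a trial request through ("half-open").
    return this.now() - this.openedAt >= this.cooldownMs;
  }

  recordSuccess(): void {
    this.failures = 0;
    this.openedAt = null;
  }

  recordFailure(): void {
    this.failures += 1;
    // Open (or re-open after a failed trial) once the threshold is reached.
    if (this.failures >= this.threshold) this.openedAt = this.now();
  }
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(() => resolve(), ms));

async function callWithResilience<T>(
  primary: () => Promise<T>,
  fallbackToOrigin: () => Promise<T>,
  breaker: CircuitBreaker,
  retries = 2,
  baseDelayMs = 50,
): Promise<T> {
  // Persistent problem: skip the edge path entirely while the breaker is open.
  if (!breaker.canAttempt()) return fallbackToOrigin();

  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      const result = await primary();
      breaker.recordSuccess();
      return result;
    } catch {
      breaker.recordFailure();
      if (!breaker.canAttempt()) break; // breaker just opened: stop retrying
      if (attempt < retries) await sleep(baseDelayMs * 2 ** attempt); // transient: back off
    }
  }
  // Retries exhausted or breaker open: serve via the origin instead.
  return fallbackToOrigin();
}
```

Injecting the clock (`now`) makes the breaker deterministic in tests.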

After deployment, track execution time, error rates, cache hit ratios, and geographic distribution of requests. Set alerts at thresholds that fit your application, for example latency spikes above 100 milliseconds or error rates exceeding 1%. Use this data to identify optimization opportunities or regions that need additional edge capacity.
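Here is a sketch of how those alert rules could be evaluated over exported request records. The `RequestSample` shape is hypothetical (providers expose logs and metrics in different formats), and the thresholds default to the 100 ms and 1% figures above, applied per region with p95 latency computed by the nearest-rank method.

```typescript
// Sketch: per-region p95 latency and error-rate alerts.
// Assumption: RequestSample is a hypothetical record shape exported from logs.

interface RequestSample {
  region: string;
  durationMs: number;
  ok: boolean;
}

function percentile(values: number[], p: number): number {
  // Nearest-rank percentile on a sorted copy (values must be non-empty).
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

function checkAlerts(samples: RequestSample[], latencyMs = 100, errorRate = 0.01): string[] {
  // Group samples by region so one slow location isn't hidden by the global average.
  const byRegion = new Map<string, RequestSample[]>();
  for (const s of samples) {
    const list = byRegion.get(s.region) ?? [];
    list.push(s);
    byRegion.set(s.region, list);
  }

  const alerts: string[] = [];
  for (const [region, list] of byRegion) {
    const p95 = percentile(list.map((s) => s.durationMs), 95);
    const errors = list.filter((s) => !s.ok).length / list.length;
    if (p95 > latencyMs) alerts.push(`${region}: p95 latency ${p95} ms`);
    if (errors > errorRate) alerts.push(`${region}: error rate ${(errors * 100).toFixed(1)}%`);
  }
  return alerts;
}
```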

Cache frequently accessed data at the edge to reduce redundant processing and improve response times. Set cache durations from 60 to 300 seconds depending on how often your content updates. Use cache invalidation mechanisms to keep data fresh when source content changes.
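A minimal sketch of both ideas: a time-to-live cache with explicit invalidation, plus a helper that emits a `Cache-Control` header clamped to the 60 to 300 second range. The class and function names are hypothetical. An in-memory map only lives as long as one edge instance and isn't shared across locations, so for shared, durable caching you would use your provider's cache or KV APIs instead.

```typescript
// Sketch: per-instance TTL cache plus a Cache-Control helper.
// Assumption: memory is per edge instance and short-lived; names are hypothetical.

class TtlCache<V> {
  private entries = new Map<string, { value: V; expiresAt: number }>();
  private ttlMs: number;
  private now: () => number;

  constructor(ttlSeconds: number, now: () => number = Date.now) {
    this.ttlMs = ttlSeconds * 1000;
    this.now = now;
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // expired: drop it so the next read refetches
      return undefined;
    }
    return entry.value;
  }

  set(key: string, value: V): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  // Call when the source content changes, so stale data isn't served until expiry.
  invalidate(key: string): void {
    this.entries.delete(key);
  }
}

// Clamp the TTL to 60-300 seconds and build a header value for downstream caches.
function cacheControl(ttlSeconds: number): string {
  const ttl = Math.min(300, Math.max(60, Math.round(ttlSeconds)));
  return `public, max-age=${ttl}`;
}
```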

Start with simple use cases like request routing or header modification before tackling complex personalization logic. This lets you build familiarity with edge function limitations and debugging tools.

What should you consider when choosing an edge functions provider?

You should consider several technical and operational factors when choosing an edge functions provider. Your choice directly impacts application performance, development experience, and long-term costs. The right provider depends on your specific use case, technical requirements, and team capabilities.

Network coverage and latency matter most for performance-critical applications. Evaluate the provider's global Points of Presence (PoPs) and ensure they have edge locations near your users.

Test actual latency from key geographic regions. Advertised network size doesn't always correlate with real-world performance. For applications serving specific regions, regional coverage density matters more than total global PoP count.

Runtime support and language compatibility determine what you can build. Most providers support JavaScript and TypeScript, but support for other languages varies.

Check if the runtime supports the npm packages, APIs, and libraries your application needs.

Developer experience and debugging tools especially affect productivity. Edge functions run in distributed environments, making debugging more challenging than traditional server code.

Look for providers offering local development environments, detailed logs, error tracking, and monitoring dashboards. Integration with your existing development workflow, version control, and CI/CD pipeline reduces friction.

Pricing structure and cost predictability vary widely between providers. Some charge per request, others by compute time, and many combine both.

Calculate costs based on your expected request volume and function complexity. Watch for hidden costs like bandwidth charges, log storage, or premium support requirements. Free tiers can help you test before committing.
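A quick estimate helps here. The sketch below models a common pricing shape (a per-request charge, a compute charge, and a free request allowance). Every rate in it is a hypothetical input, not a quote from any provider, and "compute units" stands in for whatever your provider bills by, such as CPU milliseconds or GB-seconds.

```typescript
// Sketch: monthly cost estimate under a hypothetical pricing model.

interface PricingModel {
  perMillionRequests: number;     // USD per million requests
  perMillionComputeUnits: number; // USD per million compute units (CPU ms, GB-s, ...)
  freeRequestsPerMonth: number;   // requests included at no charge each month
}

function estimateMonthlyCost(
  requestsPerDay: number,
  computeUnitsPerRequest: number,
  pricing: PricingModel,
  daysPerMonth = 30,
): number {
  const requests = requestsPerDay * daysPerMonth;
  // Only requests beyond the free tier are billed.
  const billableRequests = Math.max(0, requests - pricing.freeRequestsPerMonth);
  const requestCost = (billableRequests / 1_000_000) * pricing.perMillionRequests;
  // Simplification: compute is billed for every request; free compute is ignored.
  const computeCost =
    ((requests * computeUnitsPerRequest) / 1_000_000) * pricing.perMillionComputeUnits;
  return requestCost + computeCost;
}

// Hypothetical rates for illustration only.
const hypotheticalPricing: PricingModel = {
  perMillionRequests: 0.5,
  perMillionComputeUnits: 0.02,
  freeRequestsPerMonth: 3_000_000,
};

// 2 million requests per day, 5 compute units each.
const estimate = estimateMonthlyCost(2_000_000, 5, hypotheticalPricing);
console.log(`Estimated monthly cost: $${estimate.toFixed(2)}`);
```

With these made-up rates the estimate comes to $34.50 a month: $28.50 for the 57 million requests beyond the free tier and $6.00 for compute. Plugging in real rates from each provider on your shortlist makes the comparison concrete.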

Integration capabilities with your existing infrastructure affect deployment complexity.

Check how the provider connects with your CDN, databases, authentication systems, and third-party APIs. Built-in integrations with your current stack reduce development time and potential issues. Some providers offer tight integration with their CDN, while others work as standalone services.

Cold start performance impacts user experience for infrequently accessed functions.

Edge functions can experience initialization delays when they haven't run recently. Providers handle cold starts differently: some keep functions warm, while others reduce startup time itself, for example by running code in lightweight isolates. For high-traffic applications, cold starts matter less, but they're critical for sporadic workloads.
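Whatever your provider does about cold starts, you can keep your own initialization cost off the hot path. A common pattern is lazy, module-level initialization: expensive setup runs once when an instance handles its first request and is reused while that instance stays warm. `buildLookupTable` and the redirect paths below are hypothetical placeholders for real setup work such as parsing configuration or compiling patterns.

```typescript
// Sketch: lazy one-time initialization per edge instance.
// Assumption: buildLookupTable stands in for expensive, hypothetical setup work.

let lookup: Map<string, string> | undefined; // persists across requests while the instance is warm
let initCount = 0;

function buildLookupTable(): Map<string, string> {
  initCount += 1;
  return new Map([
    ["/old-pricing", "/pricing"],
    ["/docs/v1", "/docs"],
  ]);
}

function getLookup(): Map<string, string> {
  // Only the first request on a fresh instance pays the setup cost.
  if (lookup === undefined) lookup = buildLookupTable();
  return lookup;
}

function redirectFor(pathname: string): string | undefined {
  return getLookup().get(pathname);
}
```

This doesn't remove the cold start; it only ensures that the first request on a new instance is the one that pays for setup, rather than every request.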

Security features and compliance requirements depend on your application's sensitivity.

Evaluate built-in security controls, encryption options, secrets management, and compliance certifications (SOC 2, GDPR, HIPAA). Some providers offer additional security layers like DDoS protection, rate limiting, and Web Application Firewall (WAF) integration at the edge.

Vendor lock-in and portability affect long-term flexibility. Edge functions often use provider-specific APIs and deployment patterns, making migration difficult.

If portability matters, look for providers supporting standard APIs or frameworks that work across multiple platforms. Consider the effort required to switch providers if your needs change.

How can Gcore help with edge functions?

Running edge functions at scale demands infrastructure that delivers consistent global performance. Gcore FastEdge is a serverless edge compute platform that executes WebAssembly applications directly on 210+ CDN edge servers worldwide.

FastEdge supports JavaScript and Rust runtimes with microsecond cold starts thanks to the Wasm runtime. Deploy code globally in minutes, manipulate requests and responses without origin round-trips, and use built-in KV Storage for edge state management.

Frequently asked questions

Are edge functions difficult to implement?

No, edge functions are straightforward to get started with. You add a JavaScript file to your repository and deploy through your provider's platform, which handles global distribution automatically. The main challenges arise in debugging distributed environments and integrating with third-party APIs, but the initial setup and deployment process itself is simple.

How much do edge functions cost?

Edge functions pricing varies by provider. Most charge per request (starting around $0.50 per million) and compute time (around $0.02 per million GB-seconds). The good news? Providers typically offer generous free tiers, often one to three million requests monthly. This makes edge functions cost-effective for small to medium workloads compared to traditional serverless functions.

Can edge functions integrate with existing systems?

Yes, edge functions integrate seamlessly with existing systems. They connect through standard APIs, webhooks, and middleware patterns to databases, authentication services, and backend infrastructure.

Edge functions work as a lightweight layer between users and origin servers. They execute custom logic at edge locations while communicating with your current tech stack through HTTP requests, message queues, or direct database connections. This means you can add edge computing capabilities without rebuilding your architecture.

What are common mistakes with edge functions?

Edge functions can trip you up in several ways. Debugging in distributed environments is tough. Integration with third-party APIs often creates unexpected challenges. Database synchronization across multiple edge locations can cause headaches.

You'll also face performance constraints. Cold start times affect response speed. Memory constraints can cause functions to fail without warning, making it hard to predict behavior under load.

How long does it take to set up edge functions?

Getting edge functions up and running is quick. Write your JavaScript function, push it to the platform, and it typically deploys globally to hundreds of edge locations within two to five minutes.
