Real-Time Messaging Protocol, otherwise known as RTMP, plays a crucial role in delivering live video over the internet to audiences around the world. Whether you’re new to live streaming or an experienced broadcaster, this detailed guide will equip you with the knowledge you need to effectively harness the power of RTMP.

What Is RTMP?

Real-Time Messaging Protocol, known as RTMP, is a technology designed for transmitting audio, video, and data over the internet. It’s primarily applied in live video streaming and live television. RTMP runs over TCP and breaks the data in large, high-definition video streams into smaller, more manageable chunks (128 bytes each by default). This makes the data easier to send and lets audio, video, and control messages share a single connection. In this way, RTMP delivers encoded video content to live streaming platforms, social media networks, and media servers.
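To make the packet-splitting idea concrete, here is a minimal Python sketch of how one RTMP message is cut into chunks. It makes three assumptions: the default 128-byte chunk size, chunk stream IDs in the 2 to 63 range (one-byte basic header), and timestamps small enough that the extended-timestamp field is not needed. The first chunk carries a full "type 0" message header, and later chunks are "type 3" continuations. The handshake, chunk-size negotiation, and command messages are left out, and the function name is hypothetical.

```python
# Simplified sketch of RTMP chunking: one message -> several chunks.
# Assumptions: chunk stream IDs 2-63 (one-byte basic header) and
# timestamps below 0xFFFFFF (no extended timestamp field).
DEFAULT_CHUNK_SIZE = 128  # RTMP default until a Set Chunk Size message changes it


def chunk_message(payload: bytes, csid: int, msg_type_id: int,
                  msg_stream_id: int, timestamp: int,
                  chunk_size: int = DEFAULT_CHUNK_SIZE) -> list[bytes]:
    if not 2 <= csid <= 63:
        raise ValueError("this sketch only handles one-byte basic headers (csid 2-63)")
    if timestamp >= 0xFFFFFF:
        raise ValueError("extended timestamps are not handled in this sketch")

    chunks = []
    # max(..., 1) makes an empty payload still produce one header-only chunk
    for offset in range(0, max(len(payload), 1), chunk_size):
        piece = payload[offset:offset + chunk_size]
        if offset == 0:
            # fmt 0: basic header + full 11-byte message header
            basic_header = bytes([(0 << 6) | csid])
            message_header = (timestamp.to_bytes(3, "big")
                              + len(payload).to_bytes(3, "big")
                              + bytes([msg_type_id])
                              + msg_stream_id.to_bytes(4, "little"))  # stream ID is little-endian
            chunks.append(basic_header + message_header + piece)
        else:
            # fmt 3: continuation chunk that reuses the previous header
            chunks.append(bytes([(3 << 6) | csid]) + piece)
    return chunks


video_frame = bytes(300)  # a dummy 300-byte video message (message type 9)
chunks = chunk_message(video_frame, csid=6, msg_type_id=9, msg_stream_id=1, timestamp=0)
print([len(c) for c in chunks])  # [140, 129, 45]
```

The 300-byte message becomes three chunks carrying 128, 128, and 44 bytes of payload. Only the first chunk pays for the full header.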

Types of RTMP

Different variants of RTMP have emerged to cater to various technological needs and scenarios. These specialized forms of RTMP include encrypted, tunneled, and UDP-based versions. Each is designed to fulfill specific industry requirements, such as enhanced security or more flexible transmission methods.

- RTMPS (Real-Time Messaging Protocol Secure): RTMPS wraps standard RTMP in Transport Layer Security (TLS, the successor to Secure Sockets Layer, or SSL). This encrypts the connection so data stays confidential and intact in transit. It is vital in fields like financial services or private communications, where data integrity cannot be compromised.

- RTMPT (Real-Time Messaging Protocol Tunneled): RTMPT tunnels RTMP data through HTTP (HyperText Transfer Protocol), the protocol web browsers use to retrieve and display content from web servers. Because the tunneled traffic looks like ordinary web requests, it can traverse firewalls and other network barriers that block RTMP’s usual port (1935), enhancing compatibility and reach.

- RTMPE (Real-Time Messaging Protocol Encrypted): RTMPE encrypts RTMP data using Adobe’s own encryption scheme rather than the SSL/TLS used by RTMPS. This can be favorable in scenarios where data privacy is essential but the extra processing required by SSL/TLS might hinder performance.

- RTMPTE (Real-Time Messaging Protocol Tunneled and Encrypted): RTMPTE combines the HTTP tunneling of RTMPT with the encryption of RTMPE. It therefore delivers both enhanced security and firewall-friendly compatibility. This balanced approach makes RTMPTE suitable for a wide variety of applications where both security and accessibility matter.

- RTMFP (Real-Time Media Flow Protocol): RTMFP departs from traditional RTMP by using UDP (User Datagram Protocol) instead of TCP (Transmission Control Protocol). Unlike TCP, UDP does not guarantee delivery or ordering and does not retransmit lost packets. Data is therefore never held up waiting for retransmissions, which allows faster, more timely transfer.
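The TCP-based variants above differ mainly in their URL scheme, and the scheme determines how a client connects. The Python sketch below maps each scheme to the properties described in this list. The default ports are commonly used values rather than guarantees, since servers can be configured differently, and the helper name is hypothetical. RTMFP is left out because it runs over UDP with its own session setup.

```python
from urllib.parse import urlsplit

# Commonly used default ports (assumption: individual servers may differ).
# RTMFP is omitted because it runs over UDP rather than TCP.
RTMP_VARIANTS = {
    "rtmp":   {"encrypted": False, "tunneled": False, "default_port": 1935},
    "rtmps":  {"encrypted": True,  "tunneled": False, "default_port": 443},
    "rtmpe":  {"encrypted": True,  "tunneled": False, "default_port": 1935},
    "rtmpt":  {"encrypted": False, "tunneled": True,  "default_port": 80},
    "rtmpte": {"encrypted": True,  "tunneled": True,  "default_port": 80},
}


def describe_rtmp_url(url: str) -> dict:
    """Report which RTMP variant a URL uses and where it will connect (hypothetical helper)."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    if scheme not in RTMP_VARIANTS:
        raise ValueError(f"not an RTMP-family URL: {url}")
    info = dict(RTMP_VARIANTS[scheme])
    info["scheme"] = scheme
    info["host"] = parts.hostname
    # An explicit port in the URL overrides the scheme's usual default
    info["port"] = parts.port or info["default_port"]
    return info


print(describe_rtmp_url("rtmps://live.example.com/app/stream-key"))
```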

When Is RTMP Used?

RTMP plays a crucial role in enabling live video streaming to social media networks, media servers, and live streaming platforms over the internet. It is designed to transfer video data in near real time with minimal delay or buffering, so viewers experience the content as it happens. Live events, webinars, and social media broadcasts can thus be shared with audiences around the world with little time lag.

Today, RTMPS is widely used to secure video data transmission. It encrypts the data, adding an extra layer of protection against unauthorized access and potential breaches. For this reason, industries that handle sensitive information, such as healthcare, finance, and government agencies, commonly use RTMPS.

RTMP is compatible with specific audio and video codecs. For audio, AAC (Advanced Audio Coding), AAC-LC (Low Complexity), and HE-AAC+ (High-Efficiency Advanced Audio Coding) are commonly used, each serving a different purpose. AAC is known for its quality. AAC-LC, its most widely supported profile, has lower decoding complexity, which makes it suitable for less powerful systems. HE-AAC+ is used when high efficiency at low bitrates is required. For video, H.264 is commonly used for high-quality streaming. These encoding options provide flexibility, letting broadcasters tailor the stream to the specific needs of the content and audience.

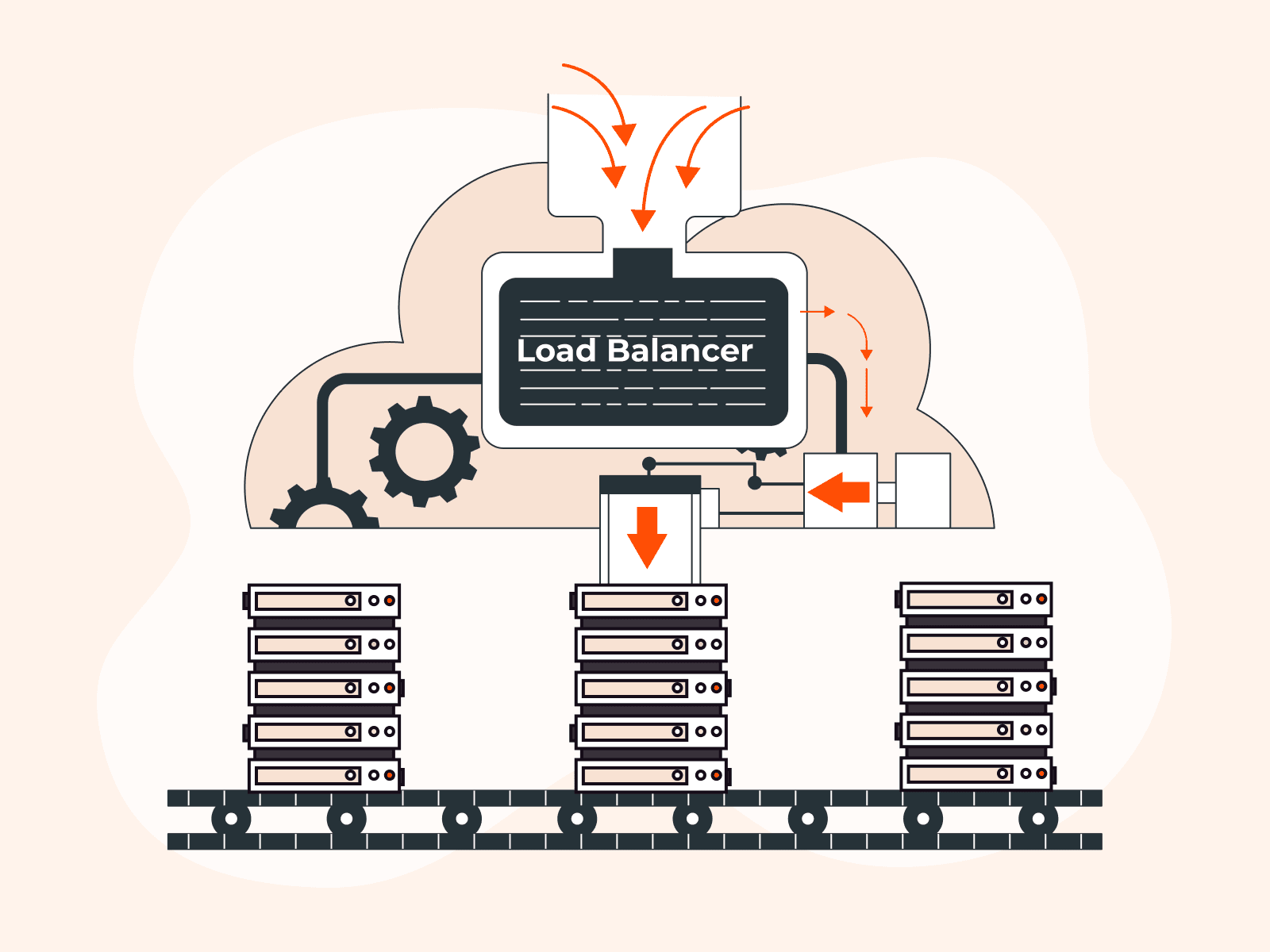

How Does RTMP Streaming Work?

RTMP streaming connects three main components: the encoder, the streaming server, and the media player. An RTMP encoder breaks video and audio data into smaller packets and sends them to a streaming server, where they are stored and prepared for distribution. When a viewer requests the stream, the server establishes a connection with the media player using RTMP to deliver the encoded data, which is then decoded and played back in real time.

To illustrate how RTMP streaming works in 2023, we will use the example of a live concert at a popular venue.

The first process in RTMP streaming is transferring the video stream to a server. In our live concert example, the venue’s local recorder captures the event, and RTMP sends the stream to an on-premises transcoder or a cloud-based platform like Gcore. This step centralizes the live feed so that it is ready for distribution. Some companies use only this process for their streaming needs.

The second process, restreaming, duplicates the stream and distributes it to platforms like Facebook, Twitch, or YouTube. Once the video reaches the transcoder or cloud platform, RTMP carries it to each destination, so the live concert reaches viewers on their preferred channels and the content is accessible to a wide audience. Some companies use only restreaming, while others combine both processes for a comprehensive streaming strategy.
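In a self-managed setup, the restreaming fan-out can be approximated with ffmpeg’s tee muxer, which writes one input to several outputs. The Python sketch below only assembles the command line and does not run it. The ingest and destination URLs are placeholders and the helper name is hypothetical; a managed platform would handle this fan-out for you.

```python
# Hypothetical helper: builds (but does not run) an ffmpeg command that reads one
# RTMP feed and copies it, without re-encoding, to several RTMP destinations.
def build_restream_command(ingest_url: str, destinations: list[str]) -> list[str]:
    if not destinations:
        raise ValueError("at least one destination is required")
    # tee muxer: one output per destination, separated by "|".
    # onfail=ignore keeps the other outputs running if one destination drops.
    # URLs containing "|", "[" or "]" would need escaping; not handled here.
    tee_outputs = "|".join(f"[f=flv:onfail=ignore]{url}" for url in destinations)
    return [
        "ffmpeg",
        "-i", ingest_url,   # the centralized feed from the first process
        "-map", "0",        # send every input stream to the tee outputs
        "-c", "copy",       # remux only, no transcoding
        "-f", "tee",
        tee_outputs,
    ]


cmd = build_restream_command(
    "rtmp://ingest.example.com/live/concert",
    ["rtmp://a.example.com/live/KEY_A", "rtmp://b.example.com/app/KEY_B"],
)
print(" ".join(cmd))
# To execute it (ffmpeg must be installed): subprocess.run(cmd, check=True)
```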

Viewers are then able to watch the concert on their chosen platform, with RTMP ensuring smooth delivery. The RTMP stream concludes when the concert ends.

How streams are transferred from the client to the server

Data transfer in RTMP streaming can be done in two distinct ways: Push or Pull.

- Push Method: The client’s recorder initiates the connection and pushes the video to a platform like YouTube. If broken, it’s restarted by the client.

- Pull Method: The platform initiates the connection to the venue’s recorder and pulls the data. If broken, the platform restores it.
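The practical difference between the two methods is which side owns the connection, and therefore which side must reconnect when it breaks. The Python sketch below shows a generic reconnect loop with exponential backoff that either side could run: the encoder for push, or the platform for pull. The function name, retry limit, and delays are illustrative assumptions, and the dropped connection is simulated.

```python
import time
from typing import Callable


def run_with_reconnect(
    connect_and_stream: Callable[[], None],
    max_attempts: int = 5,
    base_delay_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Run by whichever side opened the connection: the encoder for push,
    the platform for pull. Returns the attempt on which the stream ended cleanly."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            connect_and_stream()  # returns normally when the broadcast ends
            return attempt
        except ConnectionError:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            # exponential backoff: 1 s, 2 s, 4 s, ... between attempts
            sleep(base_delay_s * 2 ** (attempt - 1))


# Simulated link that drops twice before the broadcast completes.
drops_left = 2


def flaky_stream() -> None:
    global drops_left
    if drops_left > 0:
        drops_left -= 1
        raise ConnectionError("connection reset")


waits: list[float] = []
attempts = run_with_reconnect(flaky_stream, sleep=waits.append)
print(attempts, waits)  # 3 [1.0, 2.0]
```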

Platforms That Accept RTMP Streams

Currently, there are many platforms accepting RTMP streams, providing organizations and content creators with a plethora of opportunities to broadcast live content across various online channels. Leading social media networks like Facebook, X, LinkedIn, Twitch, and YouTube have embraced RTMP, enabling real-time video sharing and audience engagement.

Moreover, with the rising popularity of virtual events, platforms like Eventfinity, Livestream, and Teams Live Event have also implemented RTMP stream capabilities. Likewise, Gcore’s Live Streaming solution integrates RTMP support, offering versatile options for showcasing videos to global audiences.

Benefits of Using RTMP Streaming

RTMP streaming offers a range of valuable advantages that contribute to its effectiveness in delivering high-quality live video content.

- Low latency: RTMP minimizes the delay between content capture and delivery, ensuring fast interaction and engagement during live events.

- Secure, cloud-based streaming through RTMPS: RTMPS encrypts data in transit, ensuring privacy and protection during cloud-based streaming.

- Compatibility with most live-streaming video services: RTMP is supported by a large number of platforms, allowing users to reach wider audiences and leverage multiple distribution channels.

- Ease of integration: RTMP combines audio, video, and data into a single stream over one connection, enabling content creators to deliver dynamic and versatile live streams. Virtually all modern streaming encoders and live streaming apps support RTMP.

RTMP Disadvantages

Despite the benefits RTMP brings to live video streaming, it faces several challenges. Its codec support is limited: without support for modern codecs, it cannot efficiently compress high-resolution video such as 4K and 8K. TCP retransmission further hampers RTMP. Because RTMP runs over TCP, a lost packet must be retransmitted before any later data can reach the application, and TCP’s congestion window shrinks after a loss. On unstable networks, this leads to stuttering.
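The stutter comes from TCP’s strict in-order delivery, often called head-of-line blocking. The toy Python model below ignores congestion control. It only shows how one late retransmission holds back segments that had already arrived on time. The arrival times are made up for illustration, and the function name is hypothetical.

```python
def in_order_delivery_times(arrival_ms: dict[int, float]) -> dict[int, float]:
    """arrival_ms maps a TCP segment's sequence number to when it reaches the
    receiver (for a lost segment: when its retransmission arrives). TCP hands
    data to the application strictly in order, so a segment is only delivered
    once every earlier segment has arrived. Assumes contiguous sequence numbers."""
    delivered: dict[int, float] = {}
    ready_at = 0.0
    for seq in sorted(arrival_ms):
        ready_at = max(ready_at, arrival_ms[seq])
        delivered[seq] = ready_at
    return delivered


# Segments arrive every 10 ms, but segment 2 is lost and its retransmission
# only arrives at 230 ms (illustrative numbers, not measurements).
arrivals = {0: 10.0, 1: 20.0, 2: 230.0, 3: 40.0, 4: 50.0}
print(in_order_delivery_times(arrivals))
# {0: 10.0, 1: 20.0, 2: 230.0, 3: 230.0, 4: 230.0}
```

Segments 3 and 4 reached the receiver at 40 and 50 ms but cannot be played until 230 ms. A player experiences that gap as a stall.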

Additional challenges include a lack of advanced error correction. RTMP has no Forward Error Correction (FEC) or application-level Automatic Repeat Request (ARQ) of its own and relies entirely on TCP’s retransmission, which makes recovery from heavy packet loss difficult. RTMP is also vulnerable to bandwidth fluctuations. It lacks robust mechanisms to adapt to sudden changes in network conditions, which risks inconsistent live broadcast quality.

Is RTMP Becoming Obsolete?

No, RTMP is not becoming obsolete. As technology advances, RTMP continues to be relevant and widely used due to its beneficial features, such as low-latency streaming capabilities, essential for real-time interactive experiences. Furthermore, RTMP remains compatible with many live-streaming video services, making it a dependable choice for content creators seeking ease of setup and integration.

Additionally, the introduction of RTMPS (RTMP Secure), a more secure version of RTMP, enables secure, cloud-based streaming.

RTMP Alternatives for Ingest

Despite its historical significance, RTMP has some limitations to consider. Firstly, RTMP requires stable and sufficient internet bandwidth, which can be a challenge for broadcasters with limited upload capacity or viewers with slower connections.

SRT

SRT, or Secure Reliable Transport protocol, is an open-source video transport protocol that optimizes streaming over unpredictable networks, ensuring resilient, secure, and low-latency delivery. The design emphasizes quality and reliability by utilizing 128/256-bit AES encryption and handling packet loss and jitter.

SRT runs over UDP but compensates for UDP’s lack of delivery guarantees by retransmitting lost packets within a configurable latency window. This keeps latency lower than TCP-based transport. Additionally, SRT’s compatibility with firewalls simplifies traversal and complies with corporate LAN security policies. Its flexibility also allows it to transport any video format, codec, resolution, or frame rate. As a member of the SRT Alliance, Gcore supports this cost-effective solution, which operates at the network transport level, and encourages its widespread adoption and collaborative development.
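SRT avoids UDP’s main downside largely by requesting retransmission of lost packets (ARQ), but only while a packet can still arrive within the latency budget. The Python function below is a simplified conceptual illustration of that "retransmit only if it can still be on time" rule. It is not SRT’s actual algorithm or packet format. The function name, the constant-rate send assumption, and the numbers are hypothetical.

```python
def nak_list(received: set[int], now_ms: float, latency_ms: float,
             packet_interval_ms: float) -> list[int]:
    """Conceptual ARQ step: which missing packets are still worth re-requesting?

    Simplifying assumptions (hypothetical, not SRT's real algorithm):
    packet n was sent at n * packet_interval_ms, sequence numbers never wrap,
    and a packet is useless once now_ms passes its send time + latency_ms.
    """
    if not received:
        return []
    # Gaps between the lowest and highest sequence numbers seen so far
    missing = [seq for seq in range(min(received), max(received))
               if seq not in received]
    # Only request packets whose playback deadline has not passed yet
    return [seq for seq in missing
            if seq * packet_interval_ms + latency_ms > now_ms]


# Packets 3 and 6 never arrived. Packet 3's deadline (30 + 40 = 70 ms) has
# passed, so only packet 6 (deadline 100 ms) is re-requested.
print(nak_list({0, 1, 2, 4, 5, 7, 8}, now_ms=75, latency_ms=40, packet_interval_ms=10))
# [6]
```

Dropping packets that can no longer arrive in time is what keeps latency bounded. With TCP, by contrast, every lost packet is retransmitted regardless of how late it will be.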

Enhanced RTMP

Enhanced RTMP is a modern adaptation of traditional RTMP. Recognizing the need to keep pace with evolving streaming technologies, Enhanced RTMP brings the protocol up to date by adding support for contemporary video codecs that were previously unsupported, such as HEVC (H.265), VP9, and AV1. These codecs are vital in today’s streaming landscape. HEVC is popular in streaming hardware and software, and AV1 is gaining adoption as a royalty-free codec.

The advantages of Enhanced RTMP extend beyond compatibility with modern codecs. Improved viewing experience is achieved through support for High Dynamic Range (HDR), which enriches color depth and contrast ratio, and planned updates that include a seamless reconnect command, minimizing interruptions. Increased flexibility is provided by the addition of PacketTypeMetadata, allowing for various types of video metadata support. The audio capabilities are also expanded with the integration of popular audio codecs like Opus, FLAC, AC-3, and E-AC-3, all while maintaining backward compatibility with existing systems and preserving the legacy of RTMP.
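Backward compatibility works because Enhanced RTMP signals the new codecs inside the existing video tag header. When the top bit of the first byte (IsExHeader) is set, the remaining bits carry a frame type and a packet type. These are followed by a four-character code (FourCC) such as hvc1, vp09, or av01, in place of the legacy 4-bit codec ID. The Python sketch below follows our reading of the Enhanced RTMP specification and should be checked against it before use. The function name is hypothetical.

```python
def parse_video_tag_header(body: bytes) -> dict:
    """Distinguish a legacy FLV/RTMP video tag header from an Enhanced RTMP one."""
    if not body:
        raise ValueError("empty video tag body")
    first = body[0]
    if first & 0x80:  # IsExHeader bit set: Enhanced RTMP
        if len(body) < 5:
            raise ValueError("truncated Enhanced RTMP header")
        return {
            "enhanced": True,
            "frame_type": (first >> 4) & 0x07,    # e.g. 1 = keyframe
            "packet_type": first & 0x0F,          # e.g. 0 = sequence start, 1 = coded frames
            "fourcc": body[1:5].decode("ascii"),  # e.g. "hvc1", "vp09", "av01"
        }
    # Legacy header: 4-bit frame type, 4-bit codec ID (7 = AVC/H.264)
    return {"enhanced": False, "frame_type": first >> 4, "codec_id": first & 0x0F}


print(parse_video_tag_header(bytes([0x90]) + b"hvc1"))  # HEVC keyframe, sequence start
print(parse_video_tag_header(bytes([0x17, 0x00])))      # legacy H.264 keyframe
```

A legacy player that does not understand the extended header can still identify the frame type from the same byte layout. This is part of how the extension preserves compatibility.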

NDI

NDI, or Network Device Interface, is a video-over-IP transmission protocol developed to meet professional production needs. A royalty-free solution, NDI lets compatible devices share video, audio, and metadata across IP networks. It has transformed how content is managed and delivered, both in large broadcast environments and in smaller, specialized integrations.

NDI’s comprehensive feature set addresses both current and emerging needs in video and audio transmission, and the protocol is used in diverse applications around the world. Its efficient design provides visually lossless video up to 4K60 through advanced formats like HX3, along with plug-and-play functionality and interoperability, which gives NDI a competitive advantage. By using popular codecs like H.264 and H.265, NDI achieves reduced bitrates, low latency, and a visually lossless image. It can also be implemented on CPUs, GPUs, and FPGAs.

Ingest Video Sent as Multicast over UDP MPEG-TS

Multicast over UDP MPEG-TS is a sophisticated method used by OTT platforms and IPTV services to deliver a group of TV channels. OTT (Over-the-Top) refers to streaming media services delivered directly over the internet, bypassing traditional cable or satellite TV platforms. IPTV (Internet Protocol Television) is a service that delivers television content over IP networks, enabling a more personalized and interactive TV experience.

By sending MPEG-TS streams via multicast UDP, many channels can be collected in one place. The method distributes UDP (User Datagram Protocol) packets from a single source to many subscribers at once, typically packing seven 188-byte MPEG-TS packets into each UDP datagram. These datagrams are sent to IP addresses in the range reserved for multicast, 224.0.0.0 to 239.255.255.255. Receivers use the IGMP protocol to announce which multicast groups they want to join, and routers forward a group’s traffic only toward network segments with interested receivers. As a result, this method offers efficient bandwidth utilization, minimal data loss, scalability, real-time delivery, network flexibility, and integration with existing systems.
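The Python sketch below shows the sender side of this layout. It checks that the input consists of 188-byte MPEG-TS packets starting with the 0x47 sync byte, groups seven packets per UDP datagram, and sends them to an IPv4 multicast group. The group address, port, TTL, and function names are placeholders. On the receiving side, joining a group is typically done by setting IP_ADD_MEMBERSHIP on a socket, which triggers the IGMP membership report.

```python
import ipaddress
import socket

TS_PACKET_SIZE = 188
TS_SYNC_BYTE = 0x47
PACKETS_PER_DATAGRAM = 7  # 7 * 188 = 1316 bytes, fits a 1500-byte Ethernet MTU


def pack_ts_datagrams(ts_stream: bytes) -> list[bytes]:
    """Group 188-byte MPEG-TS packets into UDP payloads of up to seven packets."""
    if len(ts_stream) % TS_PACKET_SIZE:
        raise ValueError("stream length is not a multiple of 188 bytes")
    for offset in range(0, len(ts_stream), TS_PACKET_SIZE):
        if ts_stream[offset] != TS_SYNC_BYTE:
            raise ValueError(f"missing 0x47 sync byte at offset {offset}")
    step = TS_PACKET_SIZE * PACKETS_PER_DATAGRAM
    return [ts_stream[i:i + step] for i in range(0, len(ts_stream), step)]


def send_to_group(datagrams: list[bytes], group: str, port: int, ttl: int = 1) -> None:
    """Send the datagrams to an IPv4 multicast group (group and port are placeholders)."""
    if not ipaddress.IPv4Address(group).is_multicast:
        raise ValueError(f"{group} is outside 224.0.0.0/4")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP) as sock:
        # TTL limits how many router hops the multicast traffic may cross
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
        for datagram in datagrams:
            sock.sendto(datagram, (group, port))


# Ten dummy TS packets (sync byte + 187 zero bytes) -> datagrams of 7 and 3 packets.
dummy_stream = (bytes([TS_SYNC_BYTE]) + bytes(187)) * 10
print([len(d) for d in pack_ts_datagrams(dummy_stream)])  # [1316, 564]
```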

RTMP Alternatives for Playback

Scalability is another consideration, as RTMP may encounter difficulties when streaming to large audiences or distributing content across multiple servers.

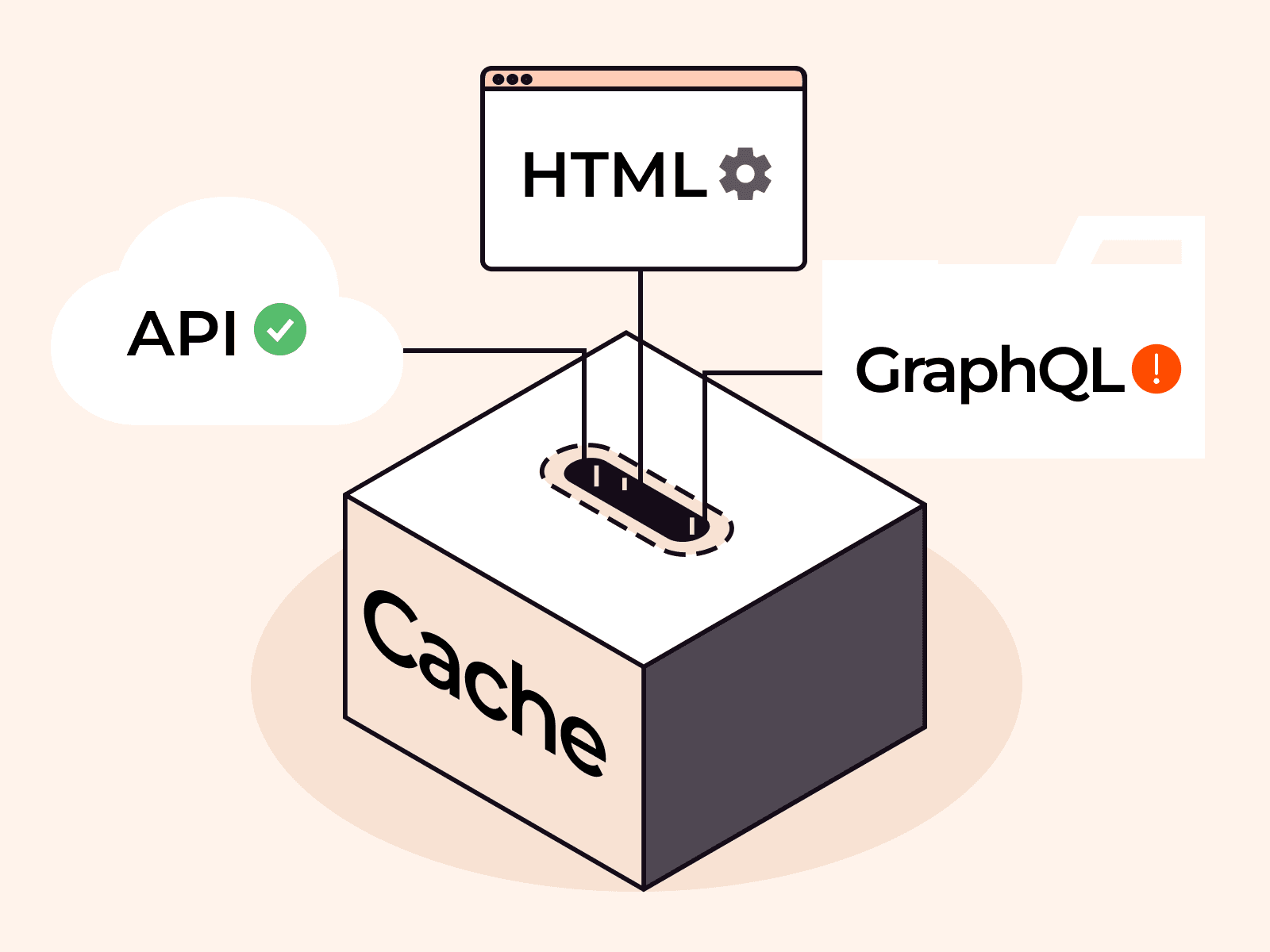

Considering these factors, alternative protocols are worth exploring for playback. HTTP-based protocols like HLS (HTTP Live Streaming) or DASH (Dynamic Adaptive Streaming over HTTP) offer broad device compatibility and adaptive streaming. They adjust video quality to each viewer’s internet connection, delivering smoother playback.

Adaptive HTTP Streaming like HLS or MPEG-DASH

Adaptive HTTP Streaming technologies such as HLS (HTTP Live Streaming) or MPEG-DASH (Dynamic Adaptive Streaming over HTTP) are increasingly popular playback alternatives to RTMP, offering a more flexible and adaptive approach to video streaming.

HLS, developed by Apple, is a robust solution for delivering both live and on-demand content over standard HTTP connections. Its broad compatibility and adaptive streaming capabilities make it an attractive option for a wide range of users. However, it is worth examining how HLS compares with RTMP in terms of performance, ease of use, and overall efficiency.
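HLS’s adaptive streaming rests on a master (multivariant) playlist that lists the same stream at several bitrates, and the player picks among them. The Python sketch below writes a minimal playlist of this kind. The rendition bitrates, resolutions, paths, and names are hypothetical. Production playlists should also include a CODECS attribute, which the HLS specification recommends.

```python
from dataclasses import dataclass


@dataclass
class Rendition:
    bandwidth_bps: int  # peak bitrate of the rendition, in bits per second
    width: int
    height: int
    uri: str            # path to that rendition's media playlist


def master_playlist(renditions: list[Rendition]) -> str:
    """Build a minimal HLS master (multivariant) playlist, lowest bitrate first."""
    lines = ["#EXTM3U"]
    for rendition in sorted(renditions, key=lambda item: item.bandwidth_bps):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={rendition.bandwidth_bps},"
            f"RESOLUTION={rendition.width}x{rendition.height}"
        )
        lines.append(rendition.uri)
    return "\n".join(lines) + "\n"


playlist = master_playlist([
    Rendition(5_000_000, 1920, 1080, "1080p/index.m3u8"),
    Rendition(1_400_000, 854, 480, "480p/index.m3u8"),
])
print(playlist)
```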

DASH, developed under the Moving Picture Experts Group (MPEG) and standardized as ISO/IEC 23009-1, is another popular alternative. It provides adaptive bitrate streaming for seamless viewing under varying network conditions. Its broad compatibility with various devices simplifies distribution, and its reliance on standard HTTP connections streamlines setup and integration.

Unlike RTMP, HLS and MPEG-DASH operate over standard HTTP, which eases integration with existing web technologies. They also support adaptive bitrate streaming, selecting appropriate bitrates based on viewers’ network conditions. They can deliver resolutions up to 4K and 8K and are designed to be codec-agnostic, so they can support new codecs as these become available, improving the efficiency and quality of the stream.
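The exact rule a player uses to pick a bitrate varies by implementation. The hypothetical Python sketch below shows a simple throughput-based heuristic with an assumed 0.8 safety factor. It selects the highest rendition that fits comfortably within the measured download speed. Real HLS and DASH players also weigh buffer level and other signals.

```python
def pick_bitrate(available_bps: list[int], measured_throughput_bps: float,
                 safety_factor: float = 0.8) -> int:
    """Throughput-based ABR heuristic: highest bitrate that fits within a safety margin."""
    if not available_bps:
        raise ValueError("no renditions available")
    budget = measured_throughput_bps * safety_factor
    affordable = [b for b in available_bps if b <= budget]
    # Fall back to the lowest rendition when even that exceeds the budget
    return max(affordable) if affordable else min(available_bps)


ladder = [800_000, 2_500_000, 5_000_000]
print(pick_bitrate(ladder, 4_000_000))  # 2500000 (budget is 3.2 Mbps)
print(pick_bitrate(ladder, 500_000))    # 800000 (nothing fits, use the lowest)
```

The safety margin leaves headroom for throughput dips, so a short slowdown does not immediately drain the playback buffer.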

Other alternatives for playback include:

HESP

High Efficiency Stream Protocol (HESP) delivers ultra-low-latency video streaming over HTTP at resolutions up to 8K. It offers a 20% reduction in bandwidth, supports new codecs, and integrates with DRM systems. Gcore is a member of the HESP Alliance.

HTTP-FLV

HTTP-FLV delivers video in the FLV (Flash Video) container format over HTTP. It offers low latency, because servers can repackage incoming RTMP streams as FLV without re-encoding, and it is firewall-friendly. Advantages include easy delivery, support for HTTP 302 redirects, and broad compatibility.
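Repackaging RTMP as HTTP-FLV is cheap because RTMP audio, video, and data messages map directly onto FLV tags (types 8, 9, and 18). A server can send an FLV file header once, then wrap each incoming RTMP message in a tag and write it to the open HTTP response. The Python sketch below builds those byte structures as described in the FLV file format specification. The function names are hypothetical, and the HTTP-serving part is omitted.

```python
import struct

FLV_TAG_AUDIO, FLV_TAG_VIDEO, FLV_TAG_SCRIPT = 8, 9, 18  # same numbers as RTMP message types


def flv_file_header(has_audio: bool = True, has_video: bool = True) -> bytes:
    """'FLV' signature, version 1, type flags, header size 9, then PreviousTagSize0 = 0."""
    flags = (0x04 if has_audio else 0) | (0x01 if has_video else 0)
    return b"FLV" + bytes([1, flags]) + struct.pack(">I", 9) + struct.pack(">I", 0)


def flv_tag(tag_type: int, timestamp_ms: int, body: bytes) -> bytes:
    """Wrap one media payload in an FLV tag followed by its PreviousTagSize field."""
    if len(body) >= 1 << 24:
        raise ValueError("tag body too large for a 24-bit size field")
    if not 0 <= timestamp_ms < 1 << 32:
        raise ValueError("timestamp must fit in 32 bits")
    header = (bytes([tag_type])
              + len(body).to_bytes(3, "big")
              + (timestamp_ms & 0xFFFFFF).to_bytes(3, "big")  # lower 24 bits
              + bytes([(timestamp_ms >> 24) & 0xFF])           # TimestampExtended (upper 8 bits)
              + (0).to_bytes(3, "big"))                        # StreamID, always 0
    # PreviousTagSize = 11-byte tag header + body length
    return header + body + struct.pack(">I", 11 + len(body))


stream_start = flv_file_header()
first_video_tag = flv_tag(FLV_TAG_VIDEO, 40, b"\x17\x01\x00\x00\x00")
print(len(stream_start), len(first_video_tag))  # 13 20
```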

WebSockets and Media Source Extensions (MSE)

This combination enables low-latency live streams. WebSockets carry media over a persistent, bidirectional connection, and MSE feeds that media into the browser’s video player. It can achieve latencies of around 3 seconds, supports adaptive bitrate streaming, and offers enhanced control over content quality.

WebRTC

WebRTC facilitates real-time web communication, transmitting video, voice, and data using open web protocols. Compatible with modern browsers and native platforms, it supports diverse applications, including video conferencing and peer-to-peer connectivity.

How to Set Up RTMP Streams

When setting up an RTMP stream, several factors need to be considered, including the destination platform and the type of encoder being used. If you choose a hardware encoder, additional steps may be involved, making the setup slightly more complex.

One necessary element for RTMP streaming is the RTMP stream key, a code that authorizes the connection between your encoder and the streaming platform. You’ll also need a server URL, the web address of the platform’s RTMP ingest server to which your broadcast is sent. Typically, you can find both the server URL and the stream key in your chosen platform’s settings. The server URL usually stays the same for every broadcast to the same platform. Depending on the platform, the stream key may be persistent or may change with each new stream.

Once you’re ready to start broadcasting, enter the stream key and server URL from your streaming platform into your encoder. The encoder then connects to the platform and transmits data packets to it, starting your RTMP stream.
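Encoders such as OBS take the server URL and stream key in separate fields, while command-line tools like ffmpeg usually expect them joined into a single publish URL. The Python sketch below assumes the common "server URL + / + stream key" convention; check your platform’s documentation, since some differ. The URL, key, file name, and function names are placeholders.

```python
from urllib.parse import urlsplit


def publish_url(server_url: str, stream_key: str) -> str:
    """Join a platform's RTMP(S) server URL and stream key into one publish URL."""
    if urlsplit(server_url).scheme not in {"rtmp", "rtmps"}:
        raise ValueError("expected an rtmp:// or rtmps:// server URL")
    if not stream_key or "/" in stream_key:
        raise ValueError("stream key must be a single non-empty path segment")
    return server_url.rstrip("/") + "/" + stream_key


def ffmpeg_push_command(source: str, server_url: str, stream_key: str) -> list[str]:
    """Push a local file as a live H.264/AAC stream in the FLV container RTMP expects."""
    return [
        "ffmpeg",
        "-re",                                     # read input at native frame rate, like a live source
        "-i", source,
        "-c:v", "libx264", "-preset", "veryfast",  # H.264 video
        "-c:a", "aac",                             # AAC audio
        "-f", "flv",
        publish_url(server_url, stream_key),
    ]


cmd = ffmpeg_push_command("concert.mp4", "rtmp://live.example.com/app/", "abc123")
print(cmd[-1])  # rtmp://live.example.com/app/abc123
```

Keeping the key out of version control and logs is worth the extra care, since anyone who has it can broadcast to your channel.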

How to Set Up an RTMP Stream with Gcore

Setting up an RTMP stream with Gcore Streaming Platform is a straightforward process that can be completed in just a few steps:

- Create a free account: Sign up for a Gcore account by providing your email and password.

- Activate the service: Choose the Free live plan or another suitable option to activate the service.

- Create a live stream: Access the live streaming section in the Streaming tab and click “Create live stream.” Enter the name for your live stream and click “Create.” If you’ve reached your live stream limit, you will need to delete an existing stream before you can create a new one.

- Turn on low-latency live streaming and choose the stream type: Select either the Push or Pull stream type based on your requirements. If you have your own media server, choose Pull; if not, choose Push. You may also need to edit the name of the stream key or enable additional, previously activated features, such as Record for live stream recording and DVR to allow the broadcast to be paused.

- Set up the live stream: If you chose a Pull stream type, enter the media server link in the URL field. You can specify multiple media servers, with the first as the primary source and the rest as backups in case of signal interruptions. If you chose the Push stream type, choose the encoder from the drop-down list, and copy the server URL and Stream Key. Insert these values into your encoder’s interface as instructed in the “Push live streams software” section.

- Start the live stream: Once everything is configured correctly, begin the live stream on your media server or encoder. A streaming preview will be visible in the player.

- Embed the live stream: Choose the appropriate method to embed the live stream into your web application, either by copying the iFrame code for the built-in player or using the export link in the desired protocol (LL-DASH for non-iOS devices and LL-HLS for iOS viewing).

We offer a comprehensive guide to creating a live stream should you need more detailed information.

Conclusion

RTMP continues to play a vital role in online video broadcasting, offering low-latency streaming and a seamless server-client connection that enables content creators to deliver live streams reliably and efficiently.

Leverage Gcore’s Streaming Platform and take advantage of an all-in-one solution that covers your video streaming needs, regardless of the chosen protocol. From adaptive streaming to secure delivery mechanisms, Gcore empowers content creators to stream a wide range of content, including online games and events, with speed and reliability.

Want to learn more about Gcore’s exceptional Streaming Platform? Talk to us to explore personalized options for your business needs.
