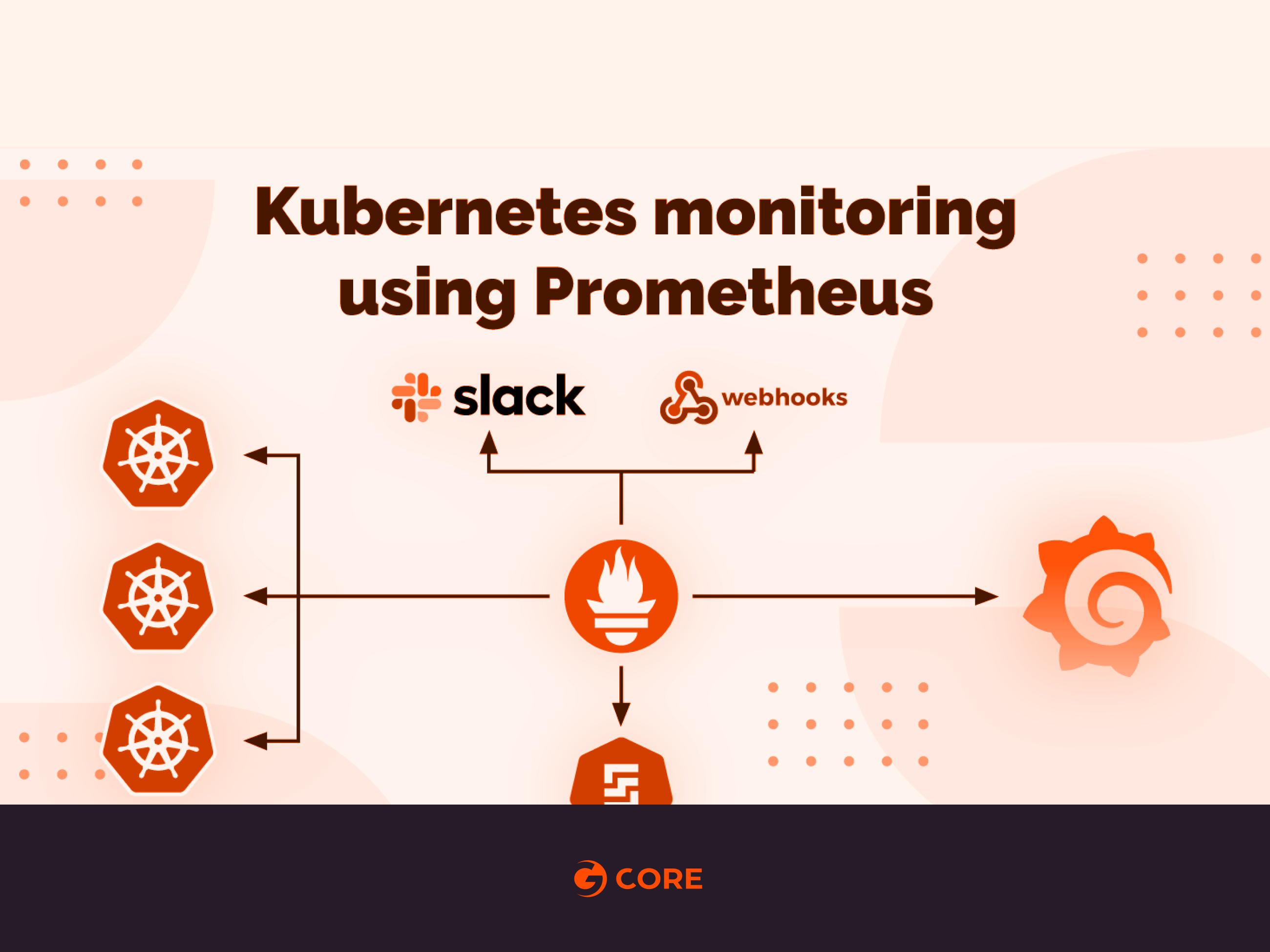

Monitoring is a crucial part of any Ops pipeline. For a technology like Kubernetes, which is all the rage right now, a robust monitoring setup can bolster your confidence when migrating production workloads from VMs to containers.

Today we will deploy a production-grade, Prometheus-based monitoring system in less than 5 minutes.

Prerequisites:

- A running Kubernetes cluster with at least 6 cores and 8 GB of available memory. We’ll be using a 6-node cluster for this tutorial.

- Working knowledge of Kubernetes Deployments and Services.
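To check the capacity requirement before you start, you can sum the allocatable CPU and memory that `kubectl get nodes -o json` reports. The Python sketch below assumes kubectl is on your PATH and points at the target cluster. It only handles the common quantity suffixes: `m` for CPU, and Ki/Mi/Gi/Ti plus k/M/G/T for memory. It also treats 8 GB as 8 GiB. Allocatable capacity does not subtract what running pods already request, so treat the result as an upper bound on what is really available.

```python
import json
import subprocess

# Suffixes Kubernetes uses for memory quantities; binary ones are listed first so
# that "Mi" is tried before "M".
MEMORY_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def parse_cpu(quantity):
    """Convert a CPU quantity such as "4" or "3920m" (millicores) to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity):
    """Convert a memory quantity such as "15335540Ki" or "8Gi" to bytes."""
    for suffix, factor in MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * factor)
    return int(quantity)  # plain byte count, no suffix


def allocatable_totals(nodes_json):
    """Sum allocatable CPU (cores) and memory (GiB) over all nodes in a node list."""
    nodes = json.loads(nodes_json)["items"]
    cores = sum(parse_cpu(n["status"]["allocatable"]["cpu"]) for n in nodes)
    memory = sum(parse_memory(n["status"]["allocatable"]["memory"]) for n in nodes)
    return cores, memory / 2**30


def check_cluster(min_cores=6, min_gib=8):
    """Query the current kubectl context and report whether it meets the minimums."""
    out = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    cores, gib = allocatable_totals(out)
    print(f"allocatable: {cores:.2f} cores, {gib:.1f} GiB")
    return cores >= min_cores and gib >= min_gib
```

Calling `check_cluster()` prints the totals and returns False if the cluster looks too small for this setup.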

Setup:

- Prometheus server with a persistent volume. This will be our metric storage (TSDB).

- Alertmanager server, which will send alerts to Slack/HipChat and/or PagerDuty/VictorOps etc.

- kube-state-metrics server to expose metrics about the state of Kubernetes objects (deployments, pods, containers etc.), complementing the resource usage metrics exposed by cAdvisor on the nodes.

- Grafana server to create dashboards based on Prometheus data.

Note: All the manifests used here are in this GitHub repo. I recommend cloning it before you start.

Deploying Alertmanager

Before deploying, replace the placeholders “<your_slack_hook>”, “<your_victorops_hook>” and “<YOUR_API_KEY>” in the Alertmanager config. If you use a notification channel other than these, follow this documentation and update the config accordingly.
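A missed placeholder only shows up later, as a notification that never arrives. One way to avoid that is to fill the placeholders with a small script that refuses to leave any behind. A minimal Python sketch follows. It assumes the placeholders appear literally in the manifest text. This post does not say which file in k8s/monitoring/alertmanager/ holds them, so the paths you pass to the hypothetical `fill_file` helper are up to you.

```python
import re
from pathlib import Path

# Matches the placeholder styles used in this post, e.g. <your_slack_hook> or <YOUR_API_KEY>.
PLACEHOLDER = re.compile(r"<(?:your_[a-z_]+|YOUR_[A-Z_]+)>")


def fill_placeholders(text, values):
    """Substitute each placeholder and fail loudly if any are left unfilled."""
    for placeholder, value in values.items():
        text = text.replace(placeholder, value)
    leftover = sorted(set(PLACEHOLDER.findall(text)))
    if leftover:
        raise ValueError("unfilled placeholders: " + ", ".join(leftover))
    return text


def fill_file(src, dst, values):
    """Write a filled copy of a manifest, leaving the template itself untouched."""
    Path(dst).write_text(fill_placeholders(Path(src).read_text(), values))
```

Writing to a separate file keeps your real webhook URLs and API keys out of the cloned repo. In that case, apply the filled copy rather than the template.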

```shell
kubectl apply -f k8s/monitoring/alertmanager/
```

This will create the following:

- A monitoring namespace.

- Config map used by Alertmanager to define the alerting channels.

- Alertmanager deployment with 1 replica running.

- Service with a Google internal load balancer IP, which can be accessed from within the VPC (e.g. over a VPN).

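These commands assume your kubectl context's namespace is set to monitoring (e.g. via kubectl config set-context --current --namespace=monitoring). Otherwise, add -n monitoring to each command.

```
root$ kubectl get pods -l app=alertmanager
NAME                            READY   STATUS    RESTARTS   AGE
alertmanager-42s7s25467-b2vqb   1/1     Running   0          2m

root$ kubectl get svc -l name=alertmanager
NAME           TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)          AGE
alertmanager   LoadBalancer   10.12.8.110   10.0.0.6      9093:32634/TCP   2m

root$ kubectl get configmap
NAME           DATA   AGE
alertmanager   1      2m
```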
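Rather than eyeballing the READY and STATUS columns, you can check them programmatically. The sketch below parses the default table printed by kubectl get pods and returns pods that are not Running or whose containers are not all ready. It assumes the header row comes first and the columns are whitespace-separated.

```python
def unready_pods(kubectl_output):
    """Return names of pods that are not Running or have unready containers.

    Expects the default table printed by `kubectl get pods` (header row first).
    """
    lines = [line for line in kubectl_output.strip().splitlines() if line.strip()]
    problems = []
    for line in lines[1:]:  # skip the NAME READY STATUS RESTARTS AGE header
        name, ready, status = line.split()[:3]
        ready_count, total = ready.split("/")
        if status != "Running" or ready_count != total:
            problems.append(name)
    return problems
```

Pods from completed Jobs would also be flagged, since their status is Completed. kubectl has a built-in alternative: kubectl wait --for=condition=Ready pod -l app=alertmanager --timeout=120s blocks until the matching pods are ready.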

In your browser, navigate to http://<Alertmanager-Svc-Ext-Ip>:9093 and you should see the Alertmanager console.
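Alertmanager and Prometheus both expose a /-/healthy endpoint, so this browser check can also be scripted. The minimal sketch below uses only the Python standard library. The base URL is an assumption: use whatever external IP your service received (10.0.0.6:9093 in the output above).

```python
import urllib.request


def health_url(base_url):
    """Build the /-/healthy URL exposed by both Alertmanager and Prometheus."""
    return base_url.rstrip("/") + "/-/healthy"


def is_healthy(base_url, timeout=5.0):
    """Return True if the health endpoint answers with HTTP 200."""
    try:
        with urllib.request.urlopen(health_url(base_url), timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError and socket timeouts are all OSError subclasses.
        return False
```

For example, is_healthy("http://10.0.0.6:9093") should return True once the Alertmanager pod is up.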

Deploying Prometheus

Before deploying, create an EBS volume (AWS) or a pd-ssd disk (GCP) named prometheus-volume. The name is important because the volume manifests look for a disk with exactly this name.
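How the disk name is consumed depends on the manifests in the repo, which aren't reproduced here. As an illustration, assume static provisioning on GCP. A PersistentVolume then refers to the pre-created disk through gcePersistentDisk.pdName, and the PVC binds to that PV. The sketch below builds such a manifest as JSON, which kubectl apply -f also accepts. Only prometheus-volume comes from this post. The PV name, size and storage class name are hypothetical and should match whatever the repo's PVC requests. On AWS, the in-tree awsElasticBlockStore source takes a volume ID (vol-...) rather than a name. The GCP disk itself can be created with gcloud compute disks create prometheus-volume --type=pd-ssd --size=<size> --zone=<zone>.

```python
import json


def gce_pv_manifest(disk_name="prometheus-volume", pv_name="prometheus-pv",
                    size="50Gi", storage_class="prometheus-storage"):
    """Build a PersistentVolume pointing at a pre-created GCE persistent disk.

    pv_name, size and storage_class are hypothetical defaults; align them with the
    PVC and storage class in the repo's manifests.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": pv_name},
        "spec": {
            "capacity": {"storage": size},
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "persistentVolumeReclaimPolicy": "Retain",  # keep the disk if the PV is deleted
            # This is where the disk name matters: GCE looks the disk up by pdName.
            "gcePersistentDisk": {"pdName": disk_name, "fsType": "ext4"},
        },
    }


def manifest_json(manifest):
    """Serialize a manifest so it can be saved to a file for kubectl apply -f."""
    return json.dumps(manifest, indent=2)
```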

```shell
kubectl apply -f k8s/monitoring/prometheus/
```

This will create the following:

- Service account, cluster-role and cluster-role-binding needed for Prometheus.

- Prometheus config map, which defines the scrape configs and the Alertmanager endpoint. Note that we can use the Alertmanager service name directly instead of its IP. To scrape metrics from a specific pod or service, you must add the Prometheus scrape annotations to it. For example:

  ```yaml
  spec:
    replicas: 1
    template:
      metadata:
        annotations:
          prometheus.io/path: <path_to_scrape>
          prometheus.io/port: "80"
          prometheus.io/scrape: "true"
        labels:
          app: myapp
      spec:
        # ...
  ```

- Prometheus config map for the alerting rules. Some basic alerts are already configured in it (such as high CPU and memory usage for containers and nodes). Feel free to add more rules for your use case.

- Storage class, persistent volume and persistent volume claim for the Prometheus server's data directory. This ensures the data persists across pod restarts.

- Prometheus deployment with 1 replica running.

- Service with a Google internal load balancer IP, which can be accessed from within the VPC (e.g. over a VPN).
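The prometheus.io/* annotations are a convention, not a Prometheus built-in. They only take effect because the scrape config contains relabeling rules that look for them. Assuming the repo's config follows the widely used kubernetes-pods job from Prometheus's example Kubernetes configuration, the decision made for each discovered pod can be sketched in Python as:

```python
import re


def scrape_target(discovered_address, annotations):
    """Mimic the usual annotation-based relabeling for one discovered pod.

    Returns (address, metrics_path), or None if the pod has not opted in.
    """
    if annotations.get("prometheus.io/scrape") != "true":
        return None  # the "keep" rule drops pods without scrape: "true"
    address = discovered_address
    port = annotations.get("prometheus.io/port")
    if port:
        # Same idea as regex ([^:]+)(?::\d+)?;(\d+) with replacement $1:$2:
        # keep the host part and swap in the annotated port.
        host = re.match(r"([^:]+)(?::\d+)?$", discovered_address).group(1)
        address = f"{host}:{port}"
    path = annotations.get("prometheus.io/path", "/metrics")  # Prometheus' default path
    return address, path
```

This is why a pod without prometheus.io/scrape: "true" never shows up under Status > Targets, however many metrics it exposes.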

```
root$ kubectl get pods -l app=prometheus-server
NAME                                     READY   STATUS    RESTARTS   AGE
prometheus-deployment-69d6cfb5b7-l7xjj   1/1     Running   0          2m

root$ kubectl get svc -l name=prometheus
NAME                 TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)          AGE
prometheus-service   LoadBalancer   10.12.8.124   10.0.0.7      8080:32731/TCP   2m

root$ kubectl get configmap
NAME                     DATA   AGE
alertmanager             1      5m
prometheus-rules         1      2m
prometheus-server-conf   1      2m
```

In your browser, navigate to http://<Prometheus-Svc-Ext-Ip>:8080 and you should see the Prometheus console. Under Status > Targets you can see all the scraped endpoints, and the Alerts section lists all the configured alerts.
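The same information is available from the Prometheus HTTP API at /api/v1/targets, which is handy for scripted checks. The sketch below fetches the target list and picks out unhealthy targets, using the health, scrapeUrl and lastError fields of each activeTargets entry. The base URL is an assumption: your service's external IP on port 8080.

```python
import json
import urllib.request


def down_targets(targets_payload):
    """List (scrape URL, last error) for active targets whose health is not "up"."""
    active = targets_payload["data"]["activeTargets"]
    return [(t["scrapeUrl"], t.get("lastError", "")) for t in active if t["health"] != "up"]


def fetch_targets(base_url, timeout=5.0):
    """GET /api/v1/targets from the Prometheus HTTP API and decode the JSON body."""
    url = base_url.rstrip("/") + "/api/v1/targets"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)
```

Usage: down_targets(fetch_targets("http://10.0.0.7:8080")). The /api/v1/alerts endpoint returns the currently active alerts in a similar JSON envelope.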

Deploying Kube-State-Metrics

```shell
kubectl apply -f k8s/monitoring/kube-state-metrics/
```

This will create the following:

- Service account, cluster-role and cluster-role-binding needed for kube-state-metrics.

- Kube-state-metrics deployment with 1 replica running.

- In-cluster (ClusterIP) service, which Prometheus scrapes for metrics (note the annotation attached to it).

```
root$ kubectl get pods -l k8s-app=kube-state-metrics
NAME                                  READY   STATUS    RESTARTS   AGE
kube-state-metrics-255m1wq876-fk2q6   2/2     Running   0          2m

root$ kubectl get svc -l k8s-app=kube-state-metrics
NAME                 TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)             AGE
kube-state-metrics   ClusterIP   10.12.8.130   <none>        8080/TCP,8081/TCP   2m
```

Deploying Grafana

By now, we have deployed the core of our monitoring system (metric scraping and storage). It is time to put it all together and create dashboards.

```shell
kubectl apply -f k8s/monitoring/grafana
```

This will create the following:

- Grafana deployment with 1 replica running.

- Service with a Google internal load balancer IP, which can be accessed from within the VPC (e.g. over a VPN).

```
root$ kubectl get pods
NAME                                     READY   STATUS    RESTARTS   AGE
grafana-7x23qlkj3n-vb3er                 1/1     Running   0          2m
kube-state-metrics-255m1wq876-fk2q6      2/2     Running   0          5m
prometheus-deployment-69d6cfb5b7-l7xjj   1/1     Running   0          5m
alertmanager-42s7s25467-b2vqb            1/1     Running   0          2m

root$ kubectl get svc
NAME                 TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)             AGE
grafana              LoadBalancer   10.12.8.132   10.0.0.8      3000:32262/TCP      2m
kube-state-metrics   ClusterIP      10.12.8.130   <none>        8080/TCP,8081/TCP   5m
prometheus-service   LoadBalancer   10.12.8.124   10.0.0.7      8080:30698/TCP      5m
alertmanager         LoadBalancer   10.12.8.110   10.0.0.6      9093:32634/TCP      5m
```

All you need to do now is open Grafana at http://<Grafana-Svc-Ext-Ip>:3000, add the Prometheus server as a data source and start creating dashboards. Use the following config:

- Name: DS_Prometheus

- Type: Prometheus

- URL: http://prometheus-service:8080

Note: We use the Prometheus service name in the URL because Grafana and Prometheus are deployed in the same cluster and namespace. From another namespace, the fully qualified name prometheus-service.monitoring.svc.cluster.local (assuming the default cluster domain) works instead. If Grafana runs outside the cluster, use the Prometheus service’s external IP in the URL.
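To script this step instead, send the same settings to Grafana's HTTP API with POST /api/datasources. The sketch below uses only the standard library. The Grafana URL (http://<Grafana-Svc-Ext-Ip>:3000) and the credentials are assumptions: Grafana defaults to admin/admin unless the deployment overrides it.

```python
import base64
import json
import urllib.request


def datasource_payload(name="DS_Prometheus", url="http://prometheus-service:8080"):
    """Body for Grafana's POST /api/datasources, using the values from this post."""
    # access="proxy" (server mode) makes the Grafana backend inside the cluster send
    # the queries, which is what lets the in-cluster service name resolve.
    return {"name": name, "type": "prometheus", "url": url, "access": "proxy"}


def create_datasource(grafana_url, user, password, payload, timeout=10.0):
    """POST the data source to Grafana with basic auth and return the decoded reply."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        grafana_url.rstrip("/") + "/api/datasources",
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)
```

Usage: create_datasource("http://10.0.0.8:3000", "admin", "<password>", datasource_payload()).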

All the dashboards can be found here. Import the JSON files directly and you are all set.
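Dashboards can also be imported through Grafana's POST /api/dashboards/db endpoint, which expects the dashboard JSON wrapped in a {"dashboard": ..., "overwrite": ...} object. The sketch below assumes the dashboard files are plain exported JSON. If a file contains an __inputs section (a templated data source), importing it through the Grafana UI is simpler, because the UI lets you pick DS_Prometheus there. The Grafana URL and credentials are the same assumptions as for the data source.

```python
import base64
import json
import urllib.request
from pathlib import Path


def dashboard_body(dashboard, overwrite=True):
    """Wrap one exported dashboard for POST /api/dashboards/db."""
    dashboard = dict(dashboard)  # shallow copy so the caller's dict is unchanged
    dashboard["id"] = None       # a null id lets Grafana create it on this instance
    return {"dashboard": dashboard, "overwrite": overwrite}


def import_dashboards(directory, grafana_url, user, password, timeout=10.0):
    """POST every *.json file in `directory`; returns {file name: HTTP status}."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    results = {}
    for path in sorted(Path(directory).glob("*.json")):
        body = dashboard_body(json.loads(path.read_text()))
        req = urllib.request.Request(
            grafana_url.rstrip("/") + "/api/dashboards/db",
            data=json.dumps(body).encode(),
            headers={"Content-Type": "application/json",
                     "Authorization": f"Basic {token}"},
            method="POST",
        )
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            results[path.name] = resp.status
    return results
```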

Note:

- There is no need to add separate dashboards whenever you deploy a new service. All the dashboards are generic and templated.

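- Prometheus supports hot reloads. If you need to update the config or rules file, update the config map and send an HTTP POST request to the Prometheus /-/reload endpoint. This endpoint is only enabled when Prometheus is started with the --web.enable-lifecycle flag. For example: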

  ```shell
  curl -XPOST http://<Prometheus-Svc-Ext-Ip>:8080/-/reload
  ```

  In the Prometheus logs, the reload shows up as:

  ```
  level=info ts=2019-01-17T03:37:50.433940468Z caller=main.go:624 msg="Loading configuration file" filename=/etc/prometheus/prometheus.yml
  level=info ts=2019-01-17T03:37:50.439047381Z caller=kubernetes.go:187 component="discovery manager scrape" discovery=k8s msg="Using pod service account via in-cluster config"
  level=info ts=2019-01-17T03:37:50.439987243Z caller=kubernetes.go:187 component="discovery manager scrape" discovery=k8s msg="Using pod service account via in-cluster config"
  level=info ts=2019-01-17T03:37:50.440631225Z caller=kubernetes.go:187 component="discovery manager scrape" discovery=k8s msg="Using pod service account via in-cluster config"
  level=info ts=2019-01-17T03:37:50.444566424Z caller=main.go:650 msg="Completed loading of configuration file" filename=/etc/prometheus/prometheus.yml
  ```

- The Alertmanager config can be reloaded with a similar API call:

  ```shell
  curl -XPOST http://<Alertmanager-Svc-Ext-Ip>:9093/-/reload
  ```
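Scripting the reload makes it easy to run right after you update a config map. Keep in mind that the kubelet refreshes mounted config maps periodically. The new file can take a minute or so to appear inside the pod, and mounts that use subPath are never refreshed. Reloading too early simply reloads the old file. A minimal sketch, with the base URLs taken from the service outputs earlier in this post:

```python
import urllib.request


def reload_url(base_url):
    """Build the /-/reload URL for Prometheus or Alertmanager."""
    return base_url.rstrip("/") + "/-/reload"


def trigger_reload(base_url, timeout=10.0):
    """POST to /-/reload and return the HTTP status.

    urlopen raises HTTPError on an error response, e.g. when Prometheus runs
    without --web.enable-lifecycle and the endpoint is disabled.
    """
    req = urllib.request.Request(reload_url(base_url), data=b"", method="POST")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status


def reload_completed(log_text):
    """True if a Prometheus log shows a finished configuration load."""
    return 'msg="Completed loading of configuration file"' in log_text
```

For example, call trigger_reload("http://10.0.0.7:8080"). Then pass the output of kubectl logs deployment/prometheus-deployment to reload_completed. The deployment name is inferred from the pod name above.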

We hope this helps you gain insight into your Kubernetes cluster. This setup should be enough to get you started on monitoring your workloads. In the next post, we will look at scaling Prometheus horizontally and ensuring high availability.