Long launch times, video buffering, high latency, broadcast interruptions, and other delays are common issues when developing streaming and live-streaming applications. Anyone who has built such services has run into at least one of them.

In previous articles, we talked about how to develop streaming apps for iOS and Android. Today, we will share the problems we encountered along the way and how we solved them.

Use of a modern streaming platform

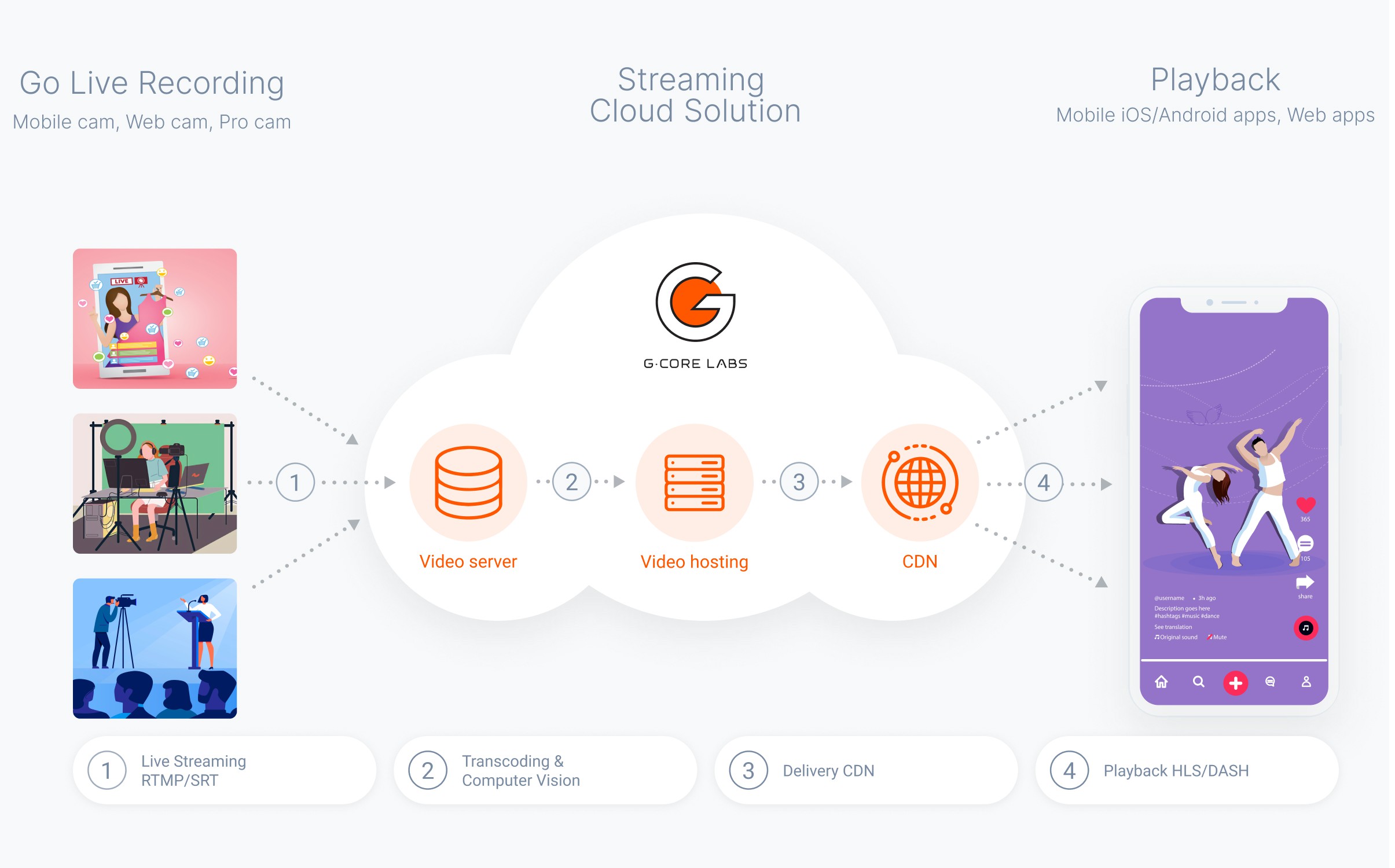

All the mobile app has to do is capture video from the camera and audio from the microphone, form a data stream, and send it to viewers. Distributing content to a wide audience requires a streaming platform.

The only drawback of using a streaming platform is latency. Broadcasting is a complex process, and each of its stages adds some latency.

Our developers assembled a stable, functional, and fast solution: it takes 5 seconds to start all processes, and the end-to-end latency in Low Latency mode is 4 seconds.

The table below shows several platforms that solve the latency reduction problem in their own way. We compared several solutions, studied each one, and found the best approach.

It takes 5 minutes to start streaming on the Gcore Streaming Platform:

- Create a free account. You will need to specify your email and password.

- Activate the service by selecting Free Live or any other suitable plan.

- Create a stream and start broadcasting.

All the processes involved in streaming are inextricably linked: a change to one affects every process that follows. So rather than treating them as separate blocks, we will look at what can be optimized and how.

Reducing the GOP size to speed up stream delivery and reception

To start decoding and processing a video stream, the decoder needs an I-frame (keyframe). After testing, we chose a 2-second I-frame interval as optimal for our apps, although in some cases it can be reduced to 1 second. A shorter GOP means less waiting for the next I-frame, so decoding, and therefore stream processing, starts sooner.

iOS

Set maxKeyFrameIntervalDuration = 2.

Android

Set iFrameIntervalInSeconds = 2.
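Both settings are expressed in seconds, but a GOP is ultimately a number of frames, and a viewer who joins right after an I-frame may wait up to one full GOP before decoding can begin. A minimal Java sketch of that arithmetic, assuming a constant frame rate (the class and method names are hypothetical, not part of either library):

```java
// Hypothetical helper; assumes a constant frame rate.
final class GopMath {
    // Frames between two consecutive I-frames (the GOP length).
    static int gopFrames(int fps, double keyFrameIntervalSeconds) {
        return (int) Math.round(fps * keyFrameIntervalSeconds);
    }

    // Worst-case wait for a viewer who joins just after an I-frame:
    // decoding cannot start until the next I-frame, one full GOP later.
    static double worstCaseJoinWaitSeconds(int fps, double keyFrameIntervalSeconds) {
        return gopFrames(fps, keyFrameIntervalSeconds) / (double) fps;
    }
}
```

At 30 fps, a 2-second interval gives a 60-frame GOP and up to 2 seconds of extra start-up wait; a 1-second interval halves both, at the cost of more I-frames and therefore a higher bitrate for the same quality.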

Background streaming to keep it uninterrupted

If you need a short pause during streaming, for example, to switch to another app, you can keep streaming in the background without breaking the video. This way, we don't waste time reinitializing all processes, and end-to-end latency stays minimal when we return to the air.

iOS

Apple does not allow video capture while the app is in the background. Our initial solution was to disable the camera at the right moment and reconnect it when returning to the air. To do this, we subscribed to the system notifications sent when the app enters and leaves the background.

It didn't work. The connection was not lost, but the library stopped sending video over the RTMP stream. So we decided to modify the library itself.

Here is how our change works. Each time the system delivers an audio buffer to AVCaptureAudioDataOutputSampleBufferDelegate, we check whether all devices except the microphone have been disconnected from the session. If so, we create timingInfo, which holds the duration, decode timestamp (DTS), and presentation timestamp (PTS) of the fragment.

Next, the pushPauseImageIntoVideoStream method of the AVMixer class is called, which checks whether a pause image is set. It then creates a CVPixelBuffer with the image data via the pixelBufferFromCGImage method, builds a CMSampleBuffer from it via the createBuffer method, and sends that buffer to AVCaptureVideoDataOutputSampleBufferDelegate.

Extension for AVMixer:

- hasOnlyMicrophone checks whether all devices except the microphone are disconnected from the session

- func pushPauseImageIntoVideoStream takes data from the audio buffer, creates a video buffer, and sends it to AVCaptureVideoDataOutputSampleBufferDelegate

- private func pixelBufferFromCGImage(image: CGImage) creates and returns a CVPixelBuffer from the image

- createBuffer(pixelBuffer: CVImageBuffer, timingInfo: inout CMSampleTimingInfo) creates and returns a CMSampleBuffer from timingInfo and the CVPixelBuffer
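The steps above amount to a per-audio-buffer decision. The model below is written in Java to keep all sketches in one language, so it illustrates the logic only and is not the Swift code in the library. Device names are hypothetical string labels, timing is in microseconds instead of CMTime, and setting DTS equal to PTS is an assumption:

```java
import java.util.List;

// Platform-neutral model of the pause-image decision; the real change is Swift code
// inside the library's AVMixer and AVAudioIOUnit. Device names are hypothetical labels.
final class PauseFramePlanner {
    // Mirrors the three fields of CMSampleTimingInfo, in microseconds.
    static final class Timing {
        final long durationUs;
        final long ptsUs;
        final long dtsUs;

        Timing(long durationUs, long ptsUs, long dtsUs) {
            this.durationUs = durationUs;
            this.ptsUs = ptsUs;
            this.dtsUs = dtsUs;
        }
    }

    // True when the microphone is the only device still attached to the session.
    static boolean hasOnlyMicrophone(List<String> attachedDevices) {
        return attachedDevices.size() == 1 && "microphone".equals(attachedDevices.get(0));
    }

    // Timing for a pause frame derived from one audio buffer, so the still image
    // stays aligned with the audio that is still being captured.
    static Timing timingFromAudio(int sampleCount, int sampleRateHz, long audioPtsUs) {
        long durationUs = sampleCount * 1_000_000L / sampleRateHz;
        // Assumption: a still image needs no frame reordering, so DTS equals PTS.
        return new Timing(durationUs, audioPtsUs, audioPtsUs);
    }

    // Called for every audio buffer; returns null when no pause frame should be sent.
    static Timing planPauseFrame(List<String> attachedDevices, boolean hasPauseImage,
                                 int sampleCount, int sampleRateHz, long audioPtsUs) {
        if (!hasOnlyMicrophone(attachedDevices) || !hasPauseImage) {
            return null;
        }
        return timingFromAudio(sampleCount, sampleRateHz, audioPtsUs);
    }
}
```

Deriving the video timing from the audio buffer, rather than from a separate clock, keeps the pause frames in step with the audio that viewers keep hearing.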

Add the pauseImage property to the AVMixer class:

In AVAudioIOUnit, add the following functionality to the captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) method:

Android

With Android, things turned out to be simpler. A closer look at the source code of the library we used shows that streaming actually runs on a separate thread.

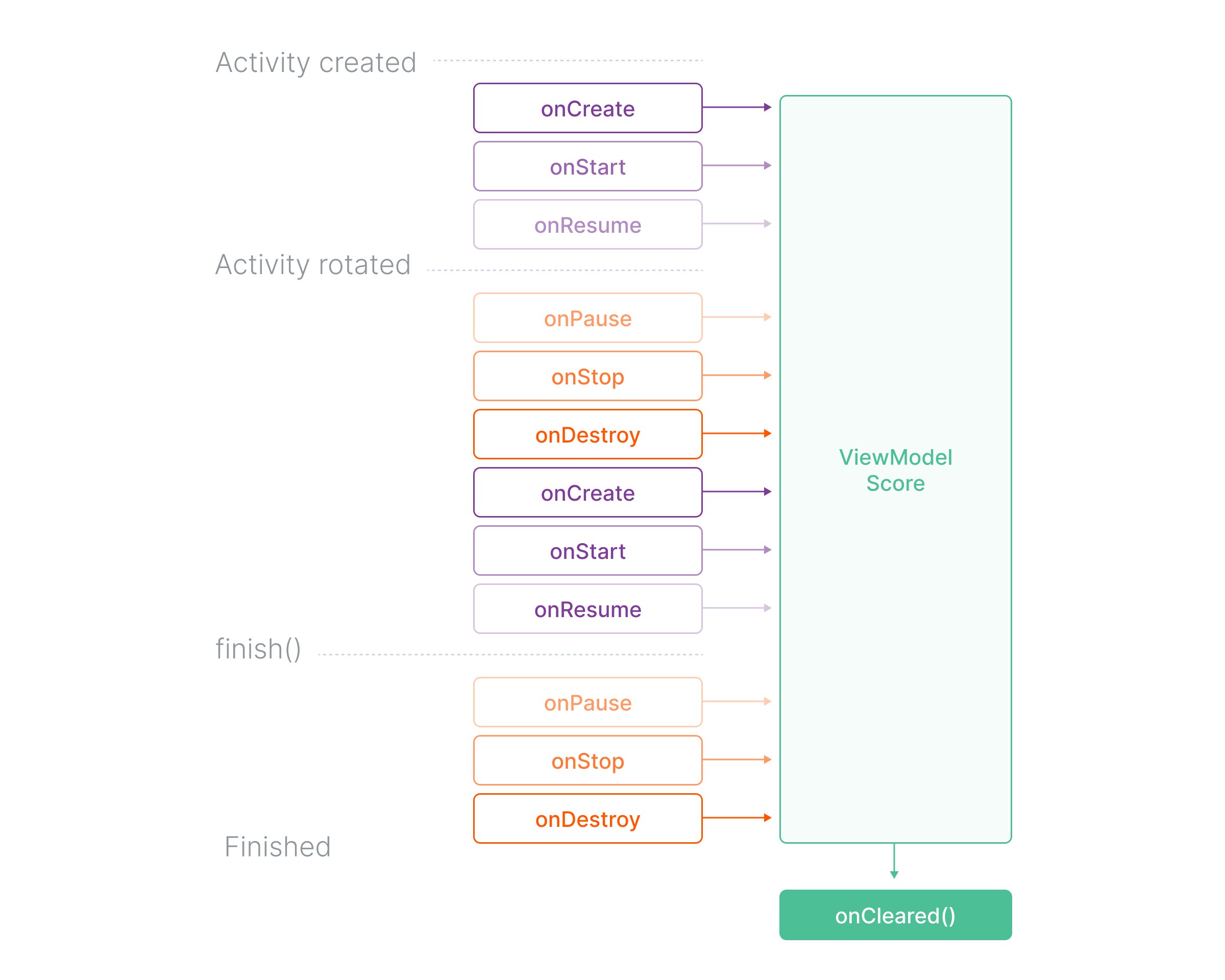

Given the life cycle of the component in which streaming is initialized, we decided to initialize it in the ViewModel, which stays alive throughout the life cycle of the component it is bound to (Activity, Fragment).

The ViewModel's life cycle is unaffected by configuration changes, screen rotation, transitions to the background, and so on.

But there is still a small problem. For streaming, we need to create an RtmpCamera2 object, which depends on an OpenGlView object. OpenGlView is a UI element, so it is destroyed when the app goes to the background, and the streaming process is interrupted.

The solution was quick to find. The library makes it easy to replace the View used by the RtmpCamera2 object, and we can replace it with our app's Context, which lives until the app is killed by the system or closed by the user.

We treat destruction of the OpenGlView as the signal that the app has gone to the background, and its creation as the signal that the app has returned to the foreground. To detect both events, we implement the corresponding callback:

Next, as mentioned above, we need to replace the OpenGlView object with the Context when going to the background, and switch back when returning to the foreground. To do this, we define the required methods in the ViewModel. We also need to stop streaming when the ViewModel is cleared.
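The resulting state transitions can be modeled in a few lines of Java. This is a hypothetical model, not the app's ViewModel: the class and method names are illustrative, and where a comment mentions swapping the render target, the real app would call the library's view-replacement method on RtmpCamera2 (not shown here):

```java
// Hypothetical model of a ViewModel-owned streaming session.
final class BackgroundStreamSession {
    enum RenderTarget { VIEW, CONTEXT }

    private RenderTarget target = RenderTarget.VIEW;
    private boolean streaming;

    void startStreaming() {
        streaming = true;
    }

    // Surface destroyed: treat as "app went to background".
    // Real app: swap the camera's render target from OpenGlView to the app Context here.
    void onSurfaceDestroyed() {
        if (streaming) {
            target = RenderTarget.CONTEXT;
        }
    }

    // Surface created: treat as "app is back in foreground" and reattach the preview view.
    void onSurfaceCreated() {
        target = RenderTarget.VIEW;
    }

    // Mirrors ViewModel.onCleared(): the owner is gone for good, so streaming stops.
    void onCleared() {
        streaming = false;
    }

    RenderTarget target() {
        return target;
    }

    boolean isStreaming() {
        return streaming;
    }
}
```

The key property is that going to the background changes only the render target, never the streaming flag; only onCleared() ends the broadcast.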

If we need to pause streaming without going to the background, we simply turn off the camera and microphone. In this mode, the bitrate drops to 70–80 Kbps, which saves traffic.
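For a sense of scale, the data sent over a pause is simply bitrate × duration. A small hypothetical Java helper comparing a five-minute pause at 80 Kbps with the same five minutes of 720p video at an assumed 2 Mbps:

```java
// Hypothetical helper: data volume at a constant bitrate (decimal units).
final class PauseTraffic {
    // kbit/s × s = kbit; ÷ 8 = kB; ÷ 1000 = MB.
    static double megabytes(double bitrateKbps, double seconds) {
        return bitrateKbps * seconds / 8 / 1000;
    }
}
```

With these inputs, five minutes of 720p video comes to 75 MB, while the paused stream sends about 3 MB.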

Using WebSocket to launch the player at the right time

Use a WebSocket connection to learn when the content is ready for playback, so that the player can start instantly:
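The idea can be sketched in Java with the standard java.net.http.WebSocket client (Java 11+): instead of polling, the player waits for a server message saying the stream is ready and starts exactly once. The message text "stream_ready" and the class name are hypothetical; the actual payload depends on your backend:

```java
import java.net.http.WebSocket;
import java.util.concurrent.CompletionStage;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical readiness gate: starts the player once, on the first "ready" message.
final class PlayerReadinessGate {
    // Assumed payload; the real message format depends on the backend.
    static final String READY_MESSAGE = "stream_ready";

    private final Runnable startPlayer;
    private final AtomicBoolean started = new AtomicBoolean(false);
    private final StringBuilder partial = new StringBuilder();

    PlayerReadinessGate(Runnable startPlayer) {
        this.startPlayer = startPlayer;
    }

    // Feed each text fragment here; 'last' marks the end of a whole message.
    void onText(CharSequence data, boolean last) {
        partial.append(data);
        if (!last) {
            return;
        }
        String message = partial.toString().trim();
        partial.setLength(0);
        // compareAndSet guarantees the player is started at most once.
        if (READY_MESSAGE.equals(message) && started.compareAndSet(false, true)) {
            startPlayer.run();
        }
    }

    boolean isStarted() {
        return started.get();
    }

    // Adapter for java.net.http.WebSocket; the default onOpen already requests the first message.
    WebSocket.Listener asListener() {
        return new WebSocket.Listener() {
            @Override
            public CompletionStage<?> onText(WebSocket webSocket, CharSequence data, boolean last) {
                PlayerReadinessGate.this.onText(data, last);
                webSocket.request(1); // ask for the next message
                return null; // data may be reclaimed immediately
            }
        };
    }
}
```

To connect, pass gate.asListener() to HttpClient.newHttpClient().newWebSocketBuilder().buildAsync(URI.create(endpoint), listener), where endpoint is whatever URL your platform exposes.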

Use of adaptive bitrate and resolution

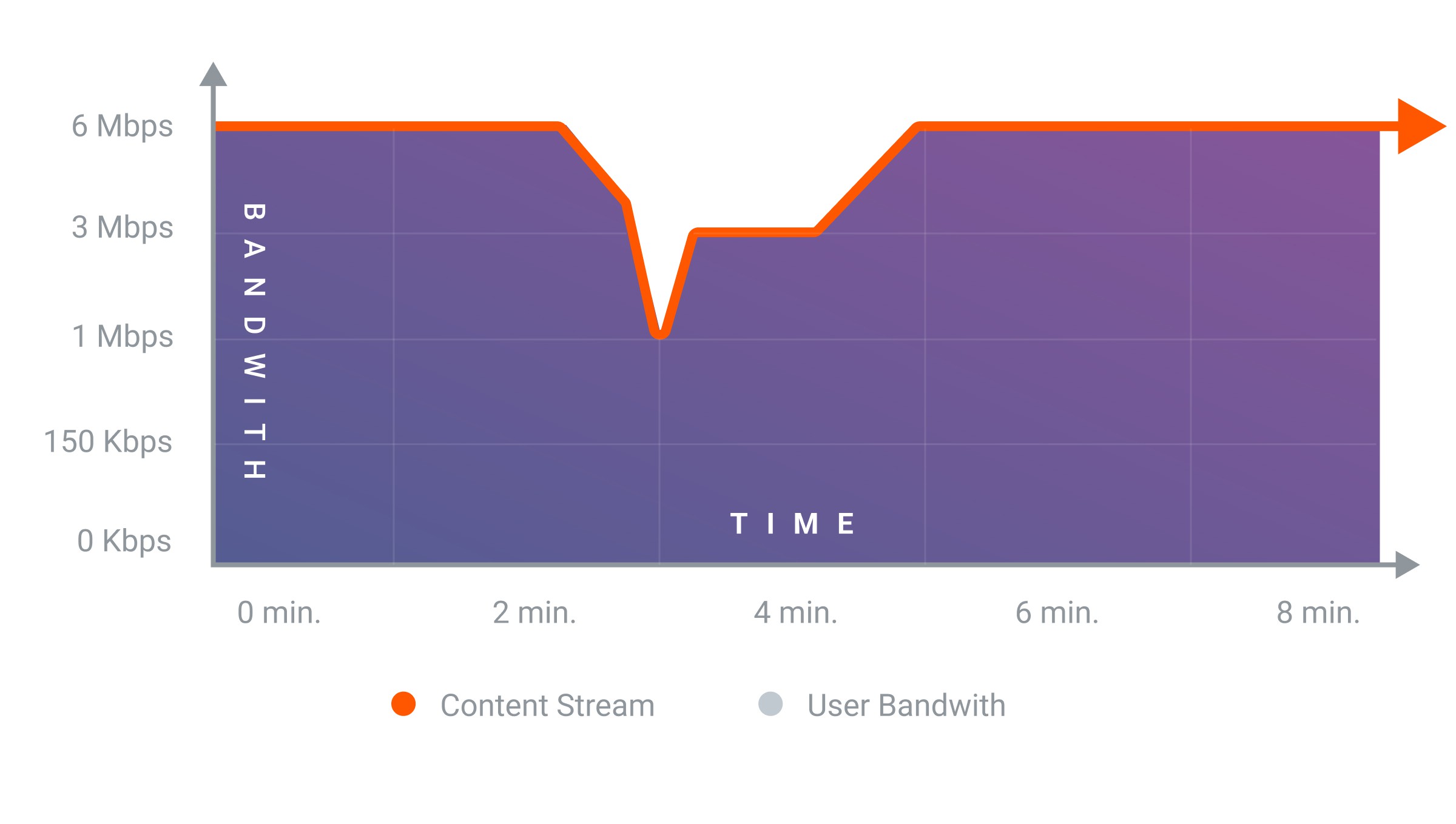

When streaming from a mobile device, video is transmitted over cellular networks. This is the main problem of mobile streaming: signal strength and quality depend on many factors. Therefore, the bitrate and resolution must adapt to the available bandwidth. This helps keep the broadcast stable even when the quality of the streamer's connection changes.

iOS

Two RTMPStreamDelegate methods are used to implement adaptive bitrate:

Examples of implementation:
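The two delegate methods are not named here. Assuming one of them signals insufficient bandwidth and the other signals that bandwidth is sufficient again (HaishinKit's delegate offers callbacks of this kind; check the version you use), the reaction reduces to a small controller. This Java sketch shows the logic only; the class name, the 30% cut, and the 10% step up are hypothetical choices, not the authors' values:

```java
// Hypothetical controller reacting to "insufficient" / "sufficient" bandwidth events.
final class EventDrivenBitrate {
    private final int minKbps;
    private final int maxKbps;
    private int currentKbps;

    EventDrivenBitrate(int minKbps, int maxKbps) {
        this.minKbps = minKbps;
        this.maxKbps = maxKbps;
        this.currentKbps = maxKbps; // start at the highest quality
    }

    // Insufficient bandwidth reported: cut the bitrate by 30%, but not below the floor.
    int onInsufficientBandwidth() {
        currentKbps = Math.max(minKbps, currentKbps * 7 / 10);
        return currentKbps;
    }

    // Sufficient bandwidth reported: raise the bitrate by 10%, but not above the ceiling.
    int onSufficientBandwidth() {
        currentKbps = Math.min(maxKbps, currentKbps + currentKbps / 10);
        return currentKbps;
    }
}
```

Cutting faster than recovering lets the stream react quickly to congestion while avoiding oscillation when the network improves.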

Resolution is adjusted according to the bitrate. We used the following resolution-to-bitrate mapping as a basis:

| Resolution | 1920×1080 | 1280×720 | 854×480 | 640×360 |
| --- | --- | --- | --- | --- |
| Video bitrate | 6 Mbps | 2 Mbps | 0.8 Mbps | 0.4 Mbps |

If the available bitrate drops below a resolution's bitrate by more than half the difference between that bitrate and the next lower one, switch to the lower resolution. When the bitrate rises again, switch back to a higher resolution.
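Under one reading of this rule, the switch point between two adjacent resolutions is the midpoint of their bitrates: 4 Mbps between 1080p and 720p, 1.4 Mbps between 720p and 480p, and 0.6 Mbps between 480p and 360p. A minimal Java sketch of that interpretation using the table's values (the class and method names are hypothetical):

```java
// Hypothetical helper implementing the midpoint rule with the table's values.
final class ResolutionLadder {
    static final class Rung {
        final int width;
        final int height;
        final int bitrateKbps;

        Rung(int width, int height, int bitrateKbps) {
            this.width = width;
            this.height = height;
            this.bitrateKbps = bitrateKbps;
        }
    }

    // Highest quality first, matching the resolution/bitrate table.
    static final Rung[] RUNGS = {
        new Rung(1920, 1080, 6000),
        new Rung(1280, 720, 2000),
        new Rung(854, 480, 800),
        new Rung(640, 360, 400),
    };

    // Picks the highest rung whose switch-down threshold the available bitrate still meets.
    // Threshold = rung bitrate minus half the gap to the next lower rung (the midpoint).
    static Rung forBitrate(int availableKbps) {
        for (int i = 0; i < RUNGS.length - 1; i++) {
            Rung current = RUNGS[i];
            Rung lower = RUNGS[i + 1];
            int threshold = current.bitrateKbps - (current.bitrateKbps - lower.bitrateKbps) / 2;
            if (availableKbps >= threshold) {
                return current;
            }
        }
        return RUNGS[RUNGS.length - 1];
    }
}
```

Because the same threshold is used in both directions, a bitrate hovering around a midpoint would flip resolutions repeatedly; a production version would likely add hysteresis, for example requiring the bitrate to stay above the threshold for a few seconds before switching up.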

Android

To use adaptive bitrate, change the implementation of the ConnectCheckerRtmp interface:
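Assuming the interface's bitrate callback delivers the bitrate actually sent over the last interval, one simple way to react is to back off when the sent bitrate falls well short of the encoder target and to probe upward when it keeps up. The Java sketch below illustrates that approach; the class, the 90% "keeping up" test, and the 25%/10% steps are hypothetical choices, not the library's built-in adapter:

```java
// Hypothetical adapter driven by periodic reports of the bitrate actually sent.
final class SentBitrateAdapter {
    private final long minBps;
    private final long maxBps;
    private long targetBps;

    SentBitrateAdapter(long minBps, long maxBps) {
        this.minBps = minBps;
        this.maxBps = maxBps;
        this.targetBps = maxBps; // start at the ceiling
    }

    // Returns the new encoder target. The sent bitrate can never exceed what the encoder
    // produces, so it is compared with the current target instead of being used directly.
    long onBitrateReport(long sentBps) {
        if (sentBps < targetBps * 9 / 10) {
            // The network could not keep up: back off by 25%, not below the floor.
            targetBps = Math.max(minBps, targetBps * 3 / 4);
        } else {
            // The encoder output was delivered: probe upward by 10%, not above the ceiling.
            targetBps = Math.min(maxBps, targetBps + targetBps / 10);
        }
        return targetBps;
    }
}
```

A healthy connection climbs back toward the ceiling step by step, while a congested one drops quickly; each returned value would be passed to whatever on-the-fly bitrate setter your streaming library provides.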

Summary

Streaming from mobile devices is not a difficult task. With open-source code and our Streaming Platform, it can be done quickly and at minimal cost.

Of course, problems can still arise during development. We hope our solutions will help you simplify the process and complete your tasks faster.

Learn more about developing apps for streaming on iOS and Android in our articles:

Repositories with the source code of mobile streaming apps can be found on GitHub: iOS, Android.

Seamlessly stream on mobile devices using our Streaming Platform.