SQLite is a free, in-process C library that implements a small, efficient SQL database engine. It’s the most widely deployed database engine in the world and is built into most phones, computers, televisions, and other consumer devices. SQLite has few dependencies, a cross-platform file format, and no intermediary server or configuration. It uses memory and execution time at least as efficiently as reading and writing files directly through the file system. It’s also a full-featured database that’s reliable in practice because all changes are atomic, consistent, isolated, and durable (ACID). The billions of deployed instances are further evidence of this reliability.

Despite these benefits, SQLite cannot keep applications running in all possible scenarios. Its dependability comes into question when a system failure occurs, because an SQLite database lives on a single machine. In a production setting, this makes SQLite a single point of failure: if that machine fails, every service connected to the database stops working. In a distributed setting, however, the database is replicated on multiple machines, so if one machine fails, the others keep the system working as expected. It’s therefore worth looking at some options for using SQLite in a distributed manner.

In this article, we compare three tools that help extend SQLite’s functionality so that it can manage distributed databases and have high reliability: Litestream, rqlite, and dqlite.

Comparing the tools

Litestream streams SQLite write-ahead logs to external storage and keeps replicas of them there. rqlite provides a standalone application that controls numerous SQLite database instances across a cluster of servers, using the Raft algorithm to ensure that all instances receive the same updates and hold the same data. dqlite also extends SQLite across a network of servers but keeps SQLite’s embedded, in-process nature intact. It uses C-Raft, an optimized C implementation of the Raft algorithm, to further boost performance.

The following sections compare Litestream, rqlite, and dqlite. We discuss each tool’s functionality and user experience, along with its capacity to offer data guarantees (reliability, availability, and durability).

We compare the tools based on the results of a replication speed test, and each section describes how the test was run for that tool. All of the tests use the Chinook sample database, which consists of eleven tables containing data on customers, employees, invoices, and music-related entities.

Finally, we highlight the most appropriate use case for each tool.

Litestream

Litestream is a disaster recovery tool that asynchronously backs up the write-ahead log of an SQLite database to external storage every ten seconds. All of the database’s features, particularly its read and write speeds, are retained. This gives Litestream an advantage over the other tools because write speed isn’t compromised; the application writes data as fast as SQLite itself allows.
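Because Litestream works from the write-ahead log, the database has to be in SQLite’s WAL journal mode. The following is a minimal sketch of switching a database to WAL mode with Python’s standard ‘sqlite3’ module. The file name ‘example.db’ and the helper ‘enable_wal’ are hypothetical and only serve the illustration; this is not part of the test setup described in this article.

```python
import os
import sqlite3
import tempfile


def enable_wal(db_path):
    """Switch the database at db_path to WAL journal mode and return the active mode."""
    conn = sqlite3.connect(db_path)
    try:
        # The PRAGMA returns the journal mode that is actually in effect afterwards.
        mode = conn.execute("PRAGMA journal_mode=WAL").fetchone()[0]
    finally:
        conn.close()
    return mode


# Hypothetical throwaway database file, created only for this illustration.
demo_dir = tempfile.mkdtemp()
demo_path = os.path.join(demo_dir, "example.db")
wal_mode = enable_wal(demo_path)
print(wal_mode)
```

WAL mode is persistent for a database file, so the setting survives after the connection is closed.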

Litestream also improves SQLite reliability. In the event of a database failure, the write-ahead logs can be used to regenerate the database. Because of their replication, these logs are highly available, as the failure of one node does not affect the others. However, the database itself will suffer some downtime when it’s being restored from the write-ahead logs. The duration of this downtime will depend on the size of the logs that need to be restored. Furthermore, the time lag between backups means that some information can be lost forever if a catastrophic failure occurs. As a result, data availability and durability with Litestream are limited.
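To get a feel for how much data the lag between backups can put at risk, a rough upper bound is the write rate multiplied by the backup interval. The sketch below is a back-of-the-envelope estimate only; the function name and both workloads are hypothetical, and the ten-second interval is the one mentioned above.

```python
def max_writes_at_risk(writes_per_second, sync_interval_seconds):
    """Upper bound on writes not yet copied to the replica when a failure hits."""
    return writes_per_second * sync_interval_seconds


# Hypothetical workloads paired with a ten-second backup interval.
quiet_app = max_writes_at_risk(0.1, 10)  # one write every ten seconds
busy_app = max_writes_at_risk(50, 10)    # fifty writes per second
print(quiet_app, busy_app)
```

The estimate shows why a database with little write traffic loses far less in a catastrophic failure than a busy one.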

In terms of developer experience, using Litestream requires configuring a storage location for the write-ahead log replicas. This destination is then passed to Litestream via a configuration file or the command line. Good documentation makes it quick to get started. Simple commands like ‘litestream restore db_name’ handle recovery, while the application continues to interact with the database directly through the SQLite API.

To test how quickly Litestream replicates the Chinook sample database information, we started a Linux terminal and downloaded the database using the ‘wget’ command:

$ wget -O chinook.db "https://github.com/Fortune-Adekogbe/database-replication-test/blob/main/chinook.db?raw=true"

We followed the Litestream installation page and the getting started guide to set up the test, substituting the Chinook database for the guide’s example database. Following the same documentation, we also set up an Amazon S3 bucket for storing the write-ahead logs.

To identify when replication started, we added the ‘date;’ command to the replication command in the guide:

$ date; litestream replicate chinook.db s3://test-bucket-73/database

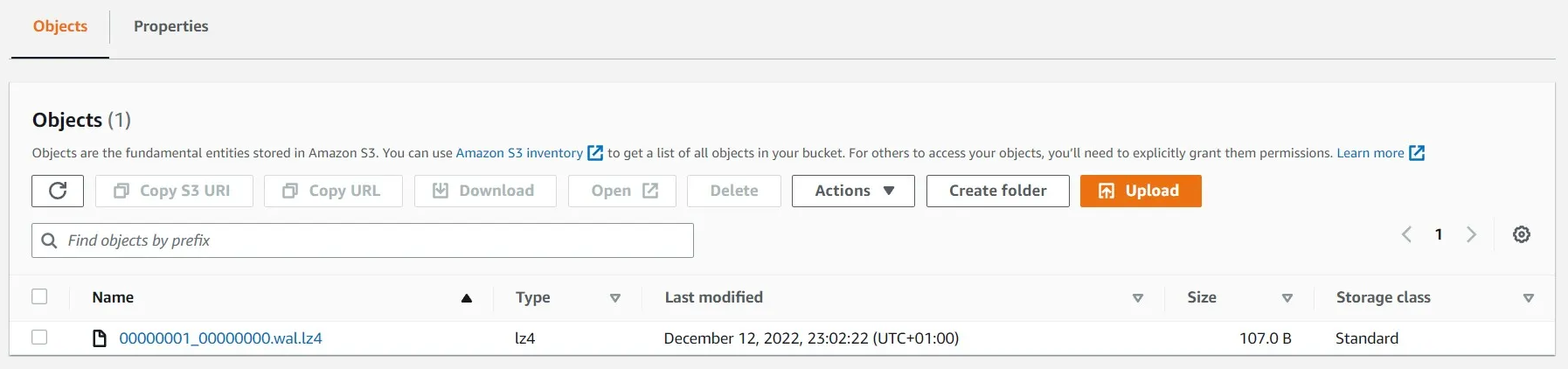

We determined the replication time to be ten seconds by comparing the time the command was executed with the time the bucket received the write-ahead logs, which we noted from the AWS S3 console.

In terms of suitable use cases, Litestream excels where extremely low latency is required but high data guarantees are not the main concern. For example, if there isn’t a lot of database interaction, the likelihood of losing data between backups is diminished. LogPaste, a tool for creating shareable links for text files, is an example use case of Litestream.

rqlite

rqlite is a standalone relational database that employs SQLite as its storage engine. It includes all of the tools you need to read, write, monitor, maintain, and back up your database. rqlite maintains copies of the database across multiple nodes, which can be on the same machine or on different machines (even on different continents). This provides higher availability and durability than Litestream because even if one node fails, the other nodes that hold the same copy of the database keep the service up and running. If the leader node fails, a new leader is elected.

The database has low read latency, but its write latency is much higher than that of a plain SQLite database. There are two reasons for this: rqlite is accessed via an HTTP API, and it uses the Raft consensus algorithm to replicate the database. Under Raft, only the leader node accepts writes, so a write request that arrives at another node must first be passed on to the leader. The leader applies the change only once a majority of nodes have recorded it, and the result is then returned to the client. Thus, database interaction with rqlite is somewhat different from interaction with SQLite.

In terms of user experience, rqlite has a very quick setup process for cluster configuration. However, its documentation is not very thorough. This makes it harder to use than Litestream, as you have to understand how to use its API via the command line or a client library.
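To illustrate what talking to rqlite over HTTP looks like, here is a minimal sketch that builds (but does not send) a write request and a read request using only Python’s standard library. It assumes rqlite’s ‘/db/execute’ and ‘/db/query’ endpoints and a node listening on ‘127.0.0.1:4001’; the helper names and the ‘demo’ table are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def build_execute_request(base_url, statements):
    """Build (but do not send) a write request for rqlite's /db/execute endpoint."""
    body = json.dumps(statements).encode("utf-8")
    return urllib.request.Request(
        base_url + "/db/execute",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_query_request(base_url, sql):
    """Build (but do not send) a read request for rqlite's /db/query endpoint."""
    query = urllib.parse.urlencode({"q": sql})
    return urllib.request.Request(base_url + "/db/query?" + query, method="GET")


# Assumed address of a local rqlite node.
BASE_URL = "http://127.0.0.1:4001"
write_req = build_execute_request(
    BASE_URL, ["CREATE TABLE demo (id INTEGER PRIMARY KEY, name TEXT)"]
)
read_req = build_query_request(BASE_URL, "SELECT * FROM demo")
print(write_req.get_method(), write_req.full_url)
print(read_req.get_method(), read_req.full_url)
# To actually send a request, pass it to urllib.request.urlopen() while a node is running.
```

Every write is a network round trip that must also pass through Raft, which is where the extra write latency comes from.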

We used the same Chinook database to test rqlite’s replication speed. In this case, however, we could not load the database file directly and had to recreate the database by running SQL commands. We saved these commands in a file and downloaded it using the following command:

$ wget https://raw.githubusercontent.com/Fortune-Adekogbe/database-replication-test/main/chinook.sql

The test also required an rqlite server and a client to interact with the server; we used the Python client in this case. If Python’s package manager, pip, is not yet installed, install it by following its documentation. Then run the command below to install the client:

$ pip install pyrqlite

To set up the server, we pulled the rqlite Docker image and created a container instance with it by running the following commands:

$ docker pull rqlite/rqlite
$ docker run -p4001:4001 rqlite/rqlite

Next, we created a Python script named ‘rqlite_test.py’ to connect to the database and record the time it took for the replication to be carried out. To begin, we imported the ‘time’ and ‘pyrqlite’ modules:

import time
import pyrqlite.dbapi2 as dbapi2

Next, we read the SQL commands using the ‘open()’ function in Python. Importantly, to make sure the file would be decodable, we indicated that the encoding was ‘utf-8’:

with open('chinook.sql', 'r', encoding="utf-8") as fp:
    chinookFile = fp.read()

Next, to avoid execution errors, we removed or replaced characters that have a special meaning in SQL parameter syntax (‘?’ and ‘:’). We then split the text into individual commands at every semicolon that was followed by a line break:

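# Remove '?' and replace ':' so they are not mistaken for parameter markers.
chinookFile = chinookFile.replace('?','')
chinookFile = chinookFile.replace(':',' ')
# Drop semicolons that are followed by a space rather than a line break.
chinookFile = chinookFile.replace('; ','')
# Each remaining ';' at the end of a line terminates one command.
commands = chinookFile.split(';\n')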
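As an alternative to replacing characters by hand, Python’s standard library offers ‘sqlite3.complete_statement()’, which reports whether a string ends in a complete SQL statement. The sketch below uses it to split a script without being confused by semicolons inside string literals. This is not what we did in our test; the helper ‘split_statements’ and the ‘notes’ table are hypothetical.

```python
import sqlite3


def split_statements(script):
    """Split a SQL script into statements using SQLite's own completeness check."""
    statements = []
    buffer = ""
    for line in script.splitlines(keepends=True):
        buffer += line
        # complete_statement() understands string literals, so a ';' inside
        # quotes does not end the statement early.
        if sqlite3.complete_statement(buffer):
            statements.append(buffer.strip())
            buffer = ""
    if buffer.strip():
        statements.append(buffer.strip())  # trailing text without a final ';'
    return statements


sample = (
    "CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT);\n"
    "INSERT INTO notes (body) VALUES ('semi;colon\ninside a string');\n"
)
parts = split_statements(sample)
print(len(parts))
```

Because the check runs once per line, several statements written on the same line stay together in one entry, so this sketch suits scripts with one statement per line or statements spanning multiple lines.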

Following this, we connected to the database using the ‘dbapi2.connect()’ method and passed the host connection string and port number as parameters:

connection = dbapi2.connect(
    host='127.0.0.1',
    port=4001,
)

Finally, we set a timer and calculated how long it took to execute the commands using the previously established database connection:

start = time.time()
with connection.cursor() as cursor:
    for command in commands[:-1]:
        cursor.execute(command)
end = time.time() - start
print('Time taken:',end, 'seconds')
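The same timing logic can be wrapped in a small helper so that it can be pointed at different databases for comparison. The sketch below assumes a DB-API 2.0 style connection and uses a local in-memory SQLite database as a stand-in; the helper name ‘time_statements’ and the sample statements are hypothetical, and the resulting time is not a measurement from our test.

```python
import sqlite3
import time


def time_statements(connection, statements):
    """Execute each statement in order and return the elapsed wall-clock seconds."""
    start = time.perf_counter()
    cursor = connection.cursor()
    for statement in statements:
        cursor.execute(statement)
    connection.commit()
    return time.perf_counter() - start


# Local in-memory SQLite stand-in; pass a cluster connection instead to time that.
baseline = sqlite3.connect(":memory:")
demo_statements = [
    "CREATE TABLE artists (id INTEGER PRIMARY KEY, name TEXT)",
    "INSERT INTO artists (name) VALUES ('AC/DC')",
    "INSERT INTO artists (name) VALUES ('Accept')",
]
elapsed = time_statements(baseline, demo_statements)
row_count = baseline.execute("SELECT COUNT(*) FROM artists").fetchone()[0]
print(f"Time taken: {elapsed:.4f} seconds for {row_count} rows")
```

‘time.perf_counter()’ is used here because it is a monotonic clock intended for measuring intervals, whereas ‘time.time()’ can jump if the system clock is adjusted.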

The resulting value was 61.4563 seconds, which was considerably slower than Litestream.

rqlite should be used when high data availability and durability are necessary but write speed (latency) is not a major concern. This might apply in IoT applications where the written information is not urgently required and the delay, however slight, has no negative effects on the user’s experience. rqlite is used in k0s, a Kubernetes distribution that packages all the necessary features for creating a cluster into a single binary.

dqlite

dqlite is an efficient, persistent, and embedded SQL database that essentially extends SQLite to a distributed setting across several nodes. Like SQLite, it runs inside the application, in a thread of the application’s process, rather than as a separate server process. This gives it an advantage in edge applications. SQL requests are sent to the database server thread via the dqlite wire protocol. It also maintains low read latency because read operations can be performed asynchronously from any node. Write operations must be delegated to the leader’s dqlite server thread, which performs the write and replicates it across all nodes. However, unlike rqlite, dqlite uses an optimized C implementation of the Raft algorithm that reduces overall latency, so its operations are faster than those of rqlite.

The distributed setup also ensures dqlite’s high reliability, availability, and durability by preventing service interruption in the event of a node failure. Additionally, dqlite sets up SQLite to use write-ahead logs so that it can efficiently perform atomic commits and rollbacks as needed. This means that it has some of the best features of both Litestream and rqlite. In terms of user experience, dqlite is simple to install, and cluster nodes can be created with simple Linux commands. The ‘dqlite-tools’ package also provides an SQLite-like shell for making queries, making it simple to learn and use, unlike rqlite and Litestream. However, it lacks sufficient detail in its documentation.

We used the same database again for the replication speed test. We installed dqlite in a Linux environment by executing the following commands in a terminal:

$ sudo add-apt-repository ppa:dqlite/dev
$ sudo apt-get update
$ sudo apt-get install libdqlite-dev dqlite-tools

The final command in the code above installed ‘dqlite-tools’, which provided access to the dqlite shell.

We then started three nodes of the dqlite demo application by running the commands below. Since the first was the leader node, we connected the other two to it using the ‘--join’ argument:

$ dqlite-demo --api 127.0.0.1:6011 --db 127.0.0.1:7011 &
$ dqlite-demo --api 127.0.0.1:6012 --db 127.0.0.1:7012 --join 127.0.0.1:7011 &
$ dqlite-demo --api 127.0.0.1:6013 --db 127.0.0.1:7013 --join 127.0.0.1:7011 &

We then executed the command below to launch a shell instance:

$ dqlite -s 127.0.0.1:7011 chinook

In the shell, we recorded the start time using the ‘SELECT time('now')’ command and then pasted in the SQL commands, which we had copied to our clipboard. After the commands finished running, we computed the execution time and subtracted the time it took to paste the commands. The final replication time was twenty-two seconds. This was almost three times as fast as the rqlite implementation (61.5 seconds), but still about twice as slow as Litestream (ten seconds).
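The relative speeds follow directly from the three replication times reported in this article. A short sketch of the arithmetic (the variable names are ours):

```python
# Replication times reported in this article, in seconds.
times = {"Litestream": 10.0, "rqlite": 61.4563, "dqlite": 22.0}

rqlite_vs_dqlite = times["rqlite"] / times["dqlite"]
dqlite_vs_litestream = times["dqlite"] / times["Litestream"]
print(f"rqlite took {rqlite_vs_dqlite:.1f}x as long as dqlite")
print(f"dqlite took {dqlite_vs_litestream:.1f}x as long as Litestream")
```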

As far as use cases go, dqlite is most appropriate for edge computing and IoT-based systems. It’s also generally useful for backend services that need a performant but simple database. dqlite is used extensively by the LXD system container manager in cluster nodes when high availability is required.

Comparison overview

The table below summarizes the comparison result of Litestream, rqlite, and dqlite:

| Factor | Litestream | rqlite | dqlite |
| --- | --- | --- | --- |
| Reliability | High | High | High |
| Availability | Limited | High | High |
| Durability | Limited | High | High |
| User/developer experience | Good | Fair | Fair |
| Replication time in our test (seconds) | 10 | 61.5 | 22 |
| Use case | Storage applications | Edge and IoT applications | Edge and IoT applications |

Conclusion

This article compared three tools that enable SQLite to operate in a distributed fashion, making it more suitable for production. You should now have a better understanding of how these tools function (with a focus on data guarantees), how quickly they replicate data, and which use case suits each tool best.

Though the tools all have similar read latency, Litestream typically has the lowest write latency. rqlite and dqlite, on the other hand, are better suited to situations that require strong data guarantees. dqlite, in particular, offers the best compromise between write latency and data guarantees.

Also, Litestream has the most user-friendly documentation with guides written for different scenarios, while rqlite and dqlite might require some more tweaking and thinking for you to figure out how to best use them.

When low latency is necessary but high availability is not crucial, Litestream works best. On the other hand, rqlite and dqlite perform well in edge and IoT applications that demand extremely high availability and data durability. In terms of latency, dqlite is better than rqlite.

Written by Fortune Adekogbe