In this article, we’ll show you how to integrate video calls into your iOS app in 15 minutes. You don’t have to implement the entire WebRTC stack from scratch; you can just use a ready-made SDK.

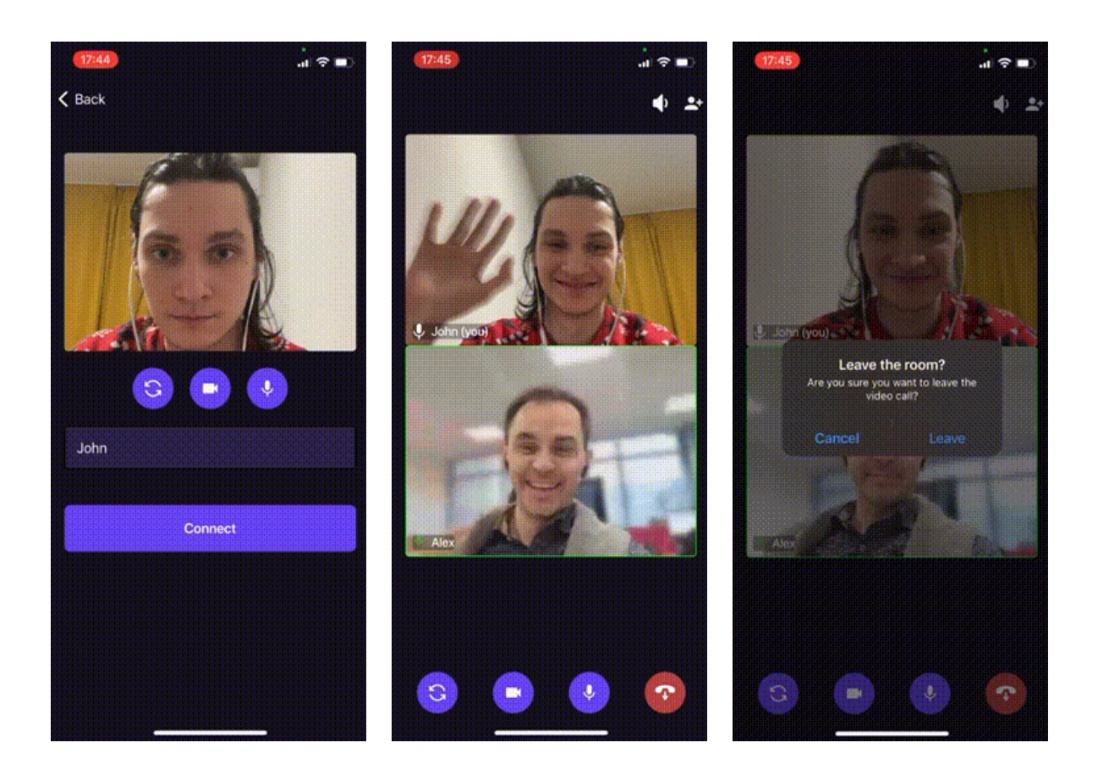

Here is what the result will look like:

You’ll implement the business logic and interface. The video call function will be integrated using GCoreVideoCallsSDK, a GCore framework that takes care of creating and interacting with sockets and WebRTC, connecting/creating a video call room, and interacting with the server.

Note: This article is part of a series about working with iOS. In other articles, we show you how to create a mobile streaming app on iOS, and how to add VOD uploading and smooth scrolling VOD features to an existing app.

What functions you can add with the help of this guide

The solution includes the following:

- Video calling with the camera and microphone.

- Showing the conversation participants using your designs.

- Sending a video stream from the built-in camera to the server.

- Receiving a video stream from the server.

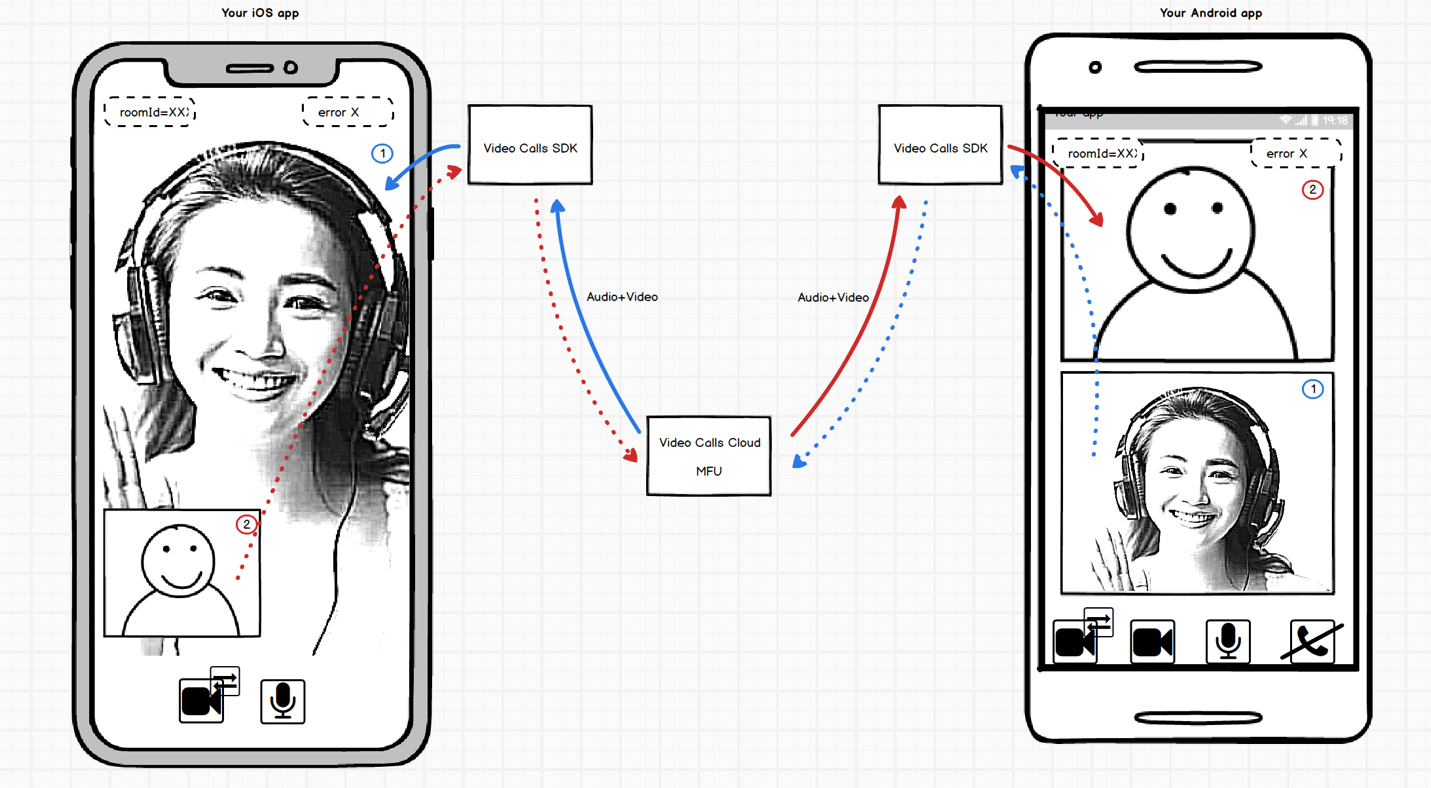

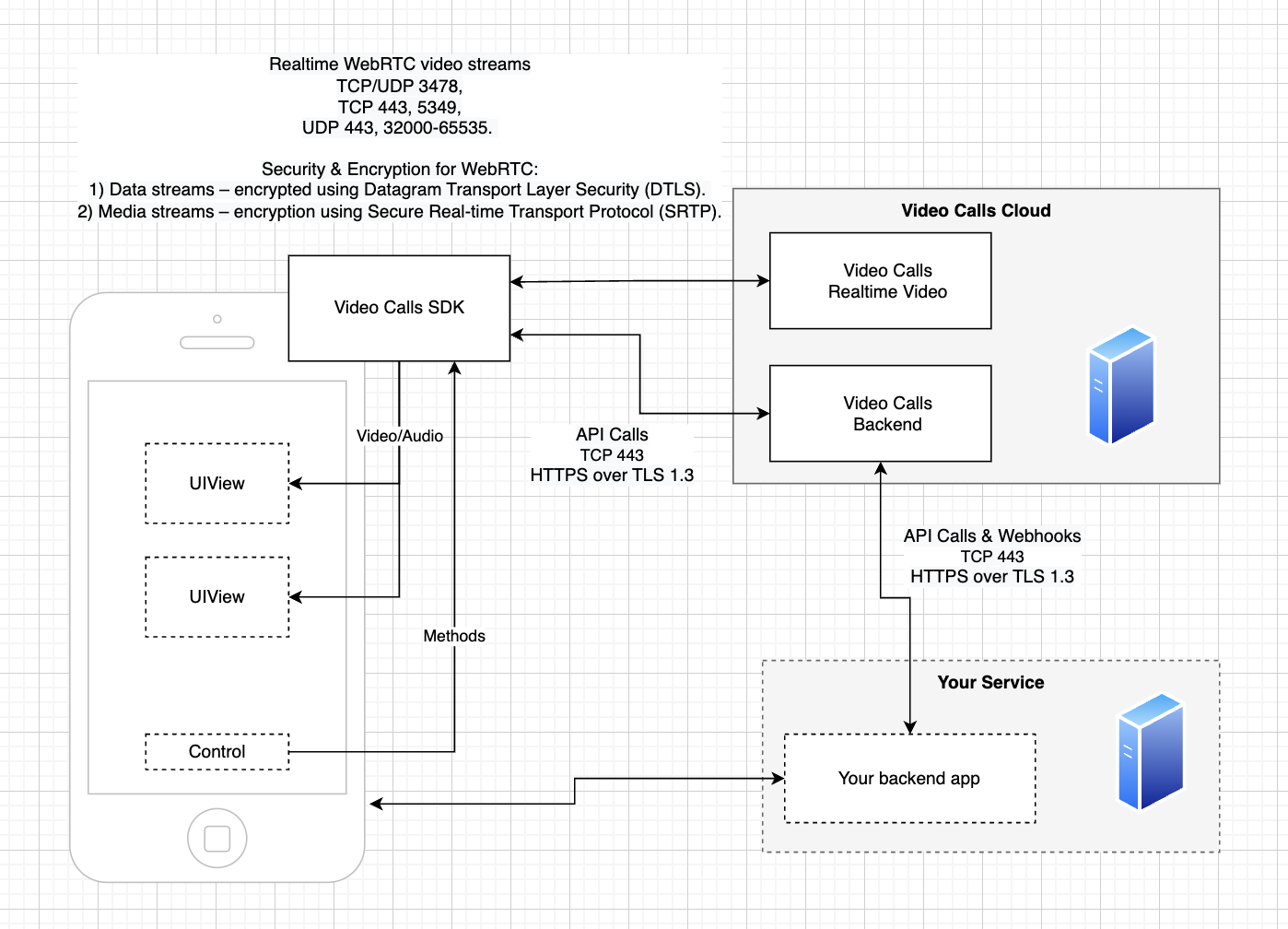

The solution architecture

Here is what the solution architecture for video calls looks like:

How to integrate the video calling feature into your app

Step 1: Prep

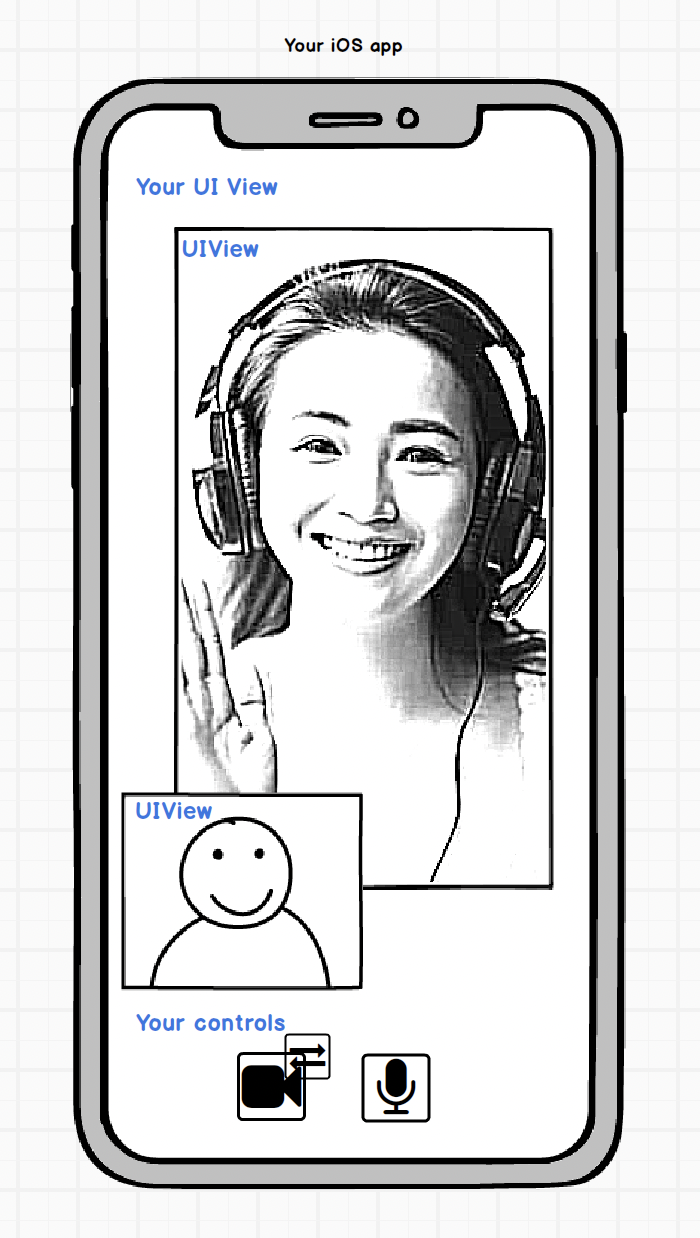

The application must connect to the room and show the user and the speakers on the screen. To accomplish this, we’ll use UICollectionView and UICollectionViewCell to display the participants, and UIView to display the user. WebRTC provides RTCEAGLVideoView to display video streams in the application. We’ll also create a small model to store the data and link everything together. A UIViewController will work with the SDK.

The final application will look something like this:

First, you’ll need to install all the dependencies and request the rights to video/microphone recording from the user.

Dependencies

Use CocoaPods to install Mediasoup iOS Client and GCoreVideoCallsSDK by specifying the following in your Podfile:

source 'https://github.com/g-core/ios-video-calls-SDK.git'

...

pod "mediasoup_ios_client", '1.5.3'

pod "GCoreVideoCallsSDK", '2.6.0'

Permissions

To allow the application to access the camera and microphone, specify the permissions in the project’s Info.plist:

- NSMicrophoneUsageDescription (Privacy – Microphone Usage Description)

- NSCameraUsageDescription (Privacy – Camera Usage Description)
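The Info.plist keys only supply the text of the system prompt; the app still has to ask for access at runtime. Below is a minimal sketch of one way to do that with AVFoundation's AVCaptureDevice.requestAccess(for:completionHandler:). The CaptureKind enum and the missingAccess and requestCallAccess helpers are hypothetical names introduced for illustration and are not part of the SDK. The SDK may also trigger these prompts itself when it first opens the camera.

```swift
import Foundation
#if canImport(AVFoundation)
import AVFoundation
#endif

/// The two kinds of capture access a video call needs (hypothetical helper type).
enum CaptureKind: String, CaseIterable {
    case camera, microphone
}

/// Pure helper: given what the user granted, list what is still missing.
func missingAccess(granted: [CaptureKind: Bool]) -> [CaptureKind] {
    CaptureKind.allCases.filter { granted[$0] != true }
}

#if canImport(AVFoundation)
/// Asks for camera access, then microphone access, and reports what is still missing.
func requestCallAccess(completion: @escaping @Sendable ([CaptureKind]) -> Void) {
    AVCaptureDevice.requestAccess(for: .video) { cameraGranted in
        AVCaptureDevice.requestAccess(for: .audio) { microphoneGranted in
            let missing = missingAccess(granted: [.camera: cameraGranted,
                                                  .microphone: microphoneGranted])
            // The handlers run on an arbitrary queue, so hop to main before touching UI.
            DispatchQueue.main.async { completion(missing) }
        }
    }
}
#endif
```

A controller could call requestCallAccess before starting the connection and show an alert when the returned list is not empty.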

Step 2: Create the UI

Model

A model is required to store any data, in this case, user data.

Create a model file and import GCoreVideoCallsSDK into it. To store user data, create the VideoCallUnit structure, which will contain the user’s ID and name, and RTCEAGLVideoView, which is assigned to it. Here is what the whole file will look like:

import GCoreVideoCallsSDK

final class Model {

var localUser: VideoCallUnit?

var remoteUsers: [VideoCallUnit] = []

}

struct VideoCallUnit {

let peerID: String

let name: String

let view = RTCEAGLVideoView()

}

CollectionCell

CollectionCell will be used to display the speaker.

Cells are reused and can show many different speakers over their lifetime. For this to work, we need a mechanism that removes the previous stream from the screen, puts the new stream on the screen, and sets its position. To implement this mechanism, create a CollectionCell class that subclasses UICollectionViewCell. This class will contain only one property: rtcView.

import GCoreVideoCallsSDK

final class CollectionCell: UICollectionViewCell {

weak var rtcView: RTCEAGLVideoView? {

didSet {

oldValue?.removeFromSuperview()

guard let rtcView = rtcView else { return }

rtcView.frame = self.bounds

addSubview(rtcView)

}

}

}
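The swap relies on Swift's didSet observer, which exposes the previous value as oldValue. Here is a minimal sketch of the same mechanism with stand-in types, so it runs without UIKit or the SDK. FakeVideoView and FakeCell are hypothetical names, and the isOnScreen flag stands in for adding a view to or removing it from a superview.

```swift
/// Stand-in for a video view; tracks only whether it is on screen.
final class FakeVideoView {
    let owner: String
    var isOnScreen = false
    init(owner: String) { self.owner = owner }
}

/// Stand-in for the cell: assigning `videoView` detaches the old view and attaches the new one.
final class FakeCell {
    weak var videoView: FakeVideoView? {
        didSet {
            oldValue?.isOnScreen = false   // plays the role of removeFromSuperview()
            videoView?.isOnScreen = true   // plays the role of addSubview(_:)
        }
    }
}

let alice = FakeVideoView(owner: "alice")
let bob = FakeVideoView(owner: "bob")
let cell = FakeCell()
cell.videoView = alice   // the cell shows Alice
cell.videoView = bob     // the cell is reused for Bob; Alice's view is detached
```

The property is weak because the model, not the cell, owns each view; the cell only borrows it while it is visible.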

ViewController

Set up a controller to manage the entire process:

1. Import GCoreVideoCallsSDK.

import GCoreVideoCallsSDK

2. Create a model property.

let model = Model()

3. Create the property cellID to hold the cell’s reuse identifier, and the lazy property collectionView to manage the cells (it is lazy because its initializer uses cellID, view, and self). Set up the collection layout, assign the controller as dataSource, and register the cell.

let cellID = "CollectionCell" // Any unique string works as the reuse identifier

lazy var collectionView: UICollectionView = {

let layout = UICollectionViewFlowLayout()

layout.itemSize.width = UIScreen.main.bounds.width - 100

layout.itemSize.height = layout.itemSize.width

layout.minimumInteritemSpacing = 10

let collection = UICollectionView(frame: view.bounds, collectionViewLayout: layout)

collection.backgroundColor = .white

collection.dataSource = self

collection.register(CollectionCell.self, forCellWithReuseIdentifier: cellID)

return collection

}()

4. Create the localView property. The layout for this view needs to be made from the code; to do this, create the method initConstraints.

let localView: UIView = {

let view = UIView(frame: .zero)

view.translatesAutoresizingMaskIntoConstraints = false

view.layer.cornerRadius = 10

view.backgroundColor = .black

view.clipsToBounds = true

return view

}()

func initConstraints() {

NSLayoutConstraint.activate([

localView.widthAnchor.constraint(equalToConstant: UIScreen.main.bounds.width / 3),

localView.heightAnchor.constraint(equalTo: localView.widthAnchor, multiplier: 4/3),

localView.leftAnchor.constraint(equalTo: view.leftAnchor, constant: 5),

localView.bottomAnchor.constraint(equalTo: view.bottomAnchor, constant: -5)

])

}
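To see what the flow layout and the constraints above produce, here is the same arithmetic as a small sketch in plain Swift. The function names and the 390-point screen width are assumptions chosen for illustration.

```swift
/// Size of the local preview for a given screen width:
/// one third of the screen wide, with a 4:3 height-to-width ratio.
func localPreviewSize(screenWidth: Double) -> (width: Double, height: Double) {
    let width = screenWidth / 3
    let height = width * 4 / 3
    return (width, height)
}

/// Side of a square remote-participant cell: screen width minus 100 points.
func remoteCellSide(screenWidth: Double) -> Double {
    screenWidth - 100
}

let preview = localPreviewSize(screenWidth: 390)
print(preview.width, preview.height)       // width 130, height about 173.3
print(remoteCellSide(screenWidth: 390))    // 290
```

In the constraint, the literal 4/3 is inferred as a floating-point value, so the multiplier is about 1.33. With Int variables, the same division would truncate to 1.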

5. Create the gcMeet property.

var gcMeet = GCoreMeet.shared

6. In the viewDidLoad method, add collectionView and localView to the main view and initialize the constraints.

override func viewDidLoad() {

super.viewDidLoad()

view.backgroundColor = .white

view.addSubview(collectionView)

view.addSubview(localView)

initConstraints()

}

7. Create an extension to subscribe the controller to UICollectionViewDataSource. This is necessary in order to link the UI to the model and set up cells when they appear.

extension ViewController: UICollectionViewDataSource {

func collectionView(_ collectionView: UICollectionView, numberOfItemsInSection section: Int) -> Int {

return model.remoteUsers.count // Get the number of remote users

}

func collectionView(_ collectionView: UICollectionView, cellForItemAt indexPath: IndexPath) -> UICollectionViewCell {

// Configure the cell

let cell = collectionView.dequeueReusableCell(withReuseIdentifier: cellID, for: indexPath) as! CollectionCell

cell.rtcView = model.remoteUsers[indexPath.row].view

cell.layer.cornerRadius = 10

cell.backgroundColor = .black

cell.clipsToBounds = true

return cell

}

}

The UI is now ready to display the call. Now we need to set up the relationship with the SDK.

Initializing GCoreMeet

The connection to the server is made through GCoreMeet.shared. The parameters for the local user, room, and camera are passed to it. You also need to call the method that activates the audio session, which lets the app pick up connected headphones. The SDK passes data from the server to the application through listeners: RoomListener and ModeratorListener.

Add all this to the viewDidLoad method:

override func viewDidLoad() {

super.viewDidLoad()

view.backgroundColor = .white

view.addSubview(collectionView)

view.addSubview(localView)

let userParams = GCoreLocalUserParams(name: "EvgenMob", role: .moderator)

let roomParams = GCoreRoomParams(id: "serv1z3snbnoq")

gcMeet.connectionParams = (userParams, roomParams)

gcMeet.audioSessionActivate()

gcMeet.moderatorListener = self

gcMeet.roomListener = self

try? gcMeet.startConnection()

initConstraints()

}
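The try? in front of startConnection() silently discards any error. If you would rather tell the user that the call could not start, a do/catch block is one option. Here is a minimal sketch of the pattern with a stand-in throwing function; StubConnectionError, startStubConnection, and connect are hypothetical names, and the SDK's actual error types are not shown in this article.

```swift
/// Hypothetical error type; the SDK's real errors are not covered here.
enum StubConnectionError: Error {
    case missingRoomID
}

/// Stand-in for a throwing connection call such as `gcMeet.startConnection()`.
func startStubConnection(roomID: String) throws {
    if roomID.isEmpty { throw StubConnectionError.missingRoomID }
}

/// Runs the throwing call and turns the outcome into a message the UI could show.
func connect(roomID: String) -> String {
    do {
        try startStubConnection(roomID: roomID)
        return "connecting"
    } catch {
        return "could not start the call: \(error)"
    }
}

print(connect(roomID: "serv1z3snbnoq"))
print(connect(roomID: ""))
```

In the controller, the catch branch would typically present an alert or a retry button instead of returning a string.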

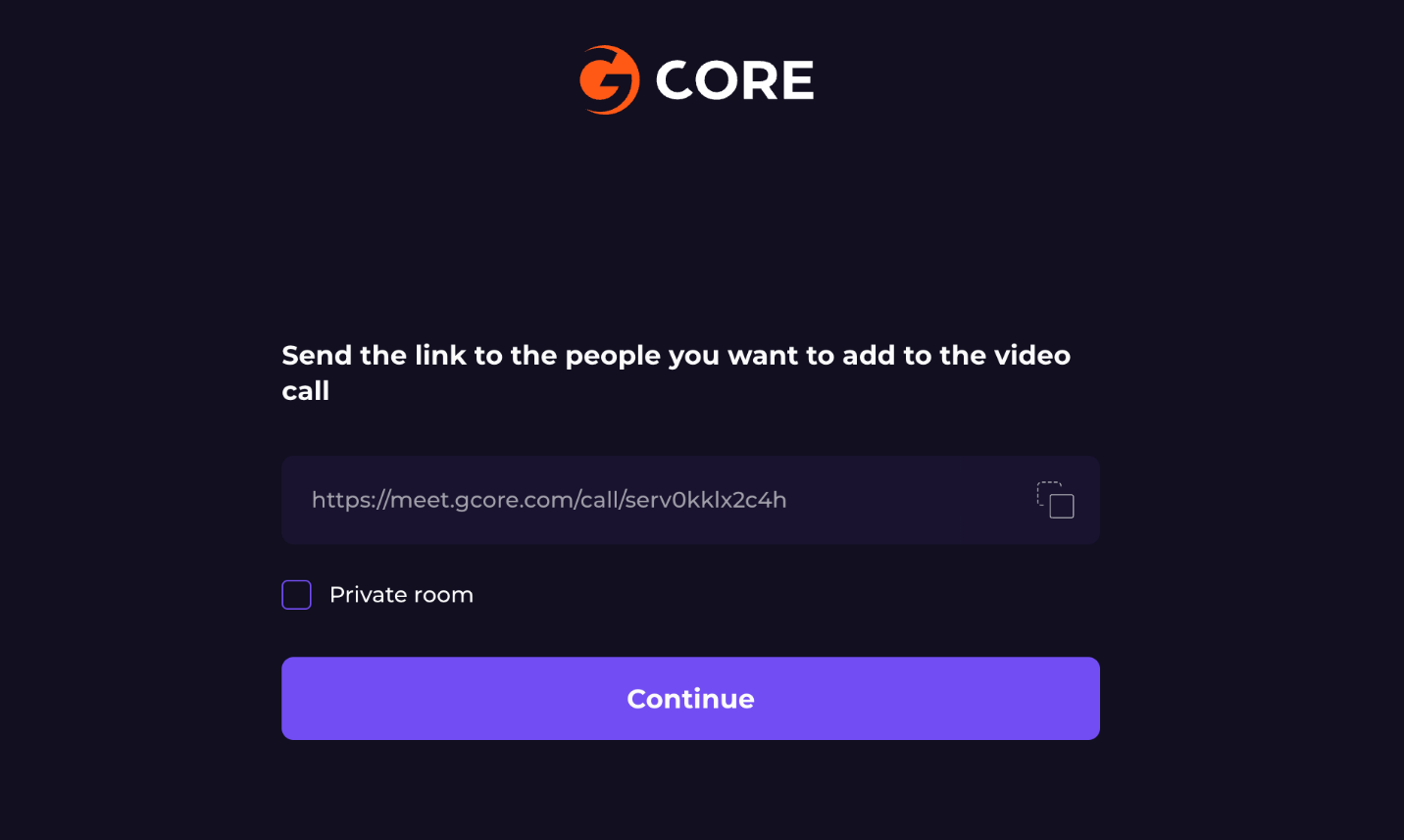

The roomID can be taken from https://meet.gcore.com by clicking on the “Create a room for free” button and selecting a conference.

In the RoomListener methods, you will also receive video streams (both your own and remote users’), audio streams, and information about users and moderator actions, which will allow you to render the necessary UI. This will be discussed further later in the article.

Through the ModeratorListener, you will receive requests from other users to enable streams and be notified when new users join the waiting room. The MeetRoomParameters can also use the following parameters:

- clientHostName: You can leave this nil, in which case the default will be meet.gcore.com

- peerId: If you leave this nil, the ID will be auto-generated by the SDK.

Step 3: Interacting with the Gcore server

Interaction with the server is carried out through an object that conforms to the RoomListener protocol. The protocol has a number of methods. You can find more details about these, as well as about the SDK, in the SDK readme. Below is an image of what the process of this interaction looks like:

For the simplest implementation, you need only a few methods:

func roomClientHandle(_ client: GCoreRoomClient, forAllRoles joinData: GCoreJoinData) // To get data at the moment of entering the room

func roomClientHandle(_ client: GCoreRoomClient, connectionEvent: GCoreRoomConnectionEvent) // To update the status of the connection to the room

func roomClientHandle(_ client: GCoreRoomClient, remoteUsersEvent: GCoreRemoteUsersEvent) // To respond to actions related to remote users, not related to video/audio streams

func roomClientHandle(_ client: GCoreRoomClient, mediaEvent: GCoreMediaEvent) // To respond to receiving and disabling video/audio streams

1. First, subscribe the controller to the RoomListener protocol:

extension ViewController: RoomListener {

}

2. Add the required methods to the extension. You can start with func roomClientHandle(_ client: GCoreRoomClient, forAllRoles joinData: GCoreJoinData). This is called when joining a room and sends initial data related to room permissions, a list of participants, and information about the local user. We’re interested in the user list:

func roomClientHandle(_ client: GCoreRoomClient, forAllRoles joinData: GCoreJoinData) {

switch joinData {

case .othersInRoom(let remoteUsers):

remoteUsers.forEach {

model.remoteUsers += [ .init(peerID: $0.id, name: $0.displayName ?? "") ]

}

collectionView.reloadData()

default:

break

}

}
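In a Swift switch, an associated value is bound with the `case .name(let value)` form. Here is a minimal sketch with stand-in types that runs without the SDK. StubRemoteUser, StubJoinData, and participantNames are hypothetical names that mirror only the fields used in this article.

```swift
/// Stand-in for a remote user; only the fields used in the article.
struct StubRemoteUser {
    let id: String
    let displayName: String?
}

/// Stand-in for the join payload, with one case that carries the participant list.
enum StubJoinData {
    case othersInRoom(remoteUsers: [StubRemoteUser])
    case other
}

/// Extracts display names, falling back to an empty string when a name is missing.
func participantNames(from joinData: StubJoinData) -> [String] {
    switch joinData {
    case .othersInRoom(let remoteUsers):
        return remoteUsers.map { $0.displayName ?? "" }
    case .other:
        return []
    }
}

let names = participantNames(from: .othersInRoom(remoteUsers: [
    StubRemoteUser(id: "1", displayName: "Ann"),
    StubRemoteUser(id: "2", displayName: nil)
]))
print(names)
```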

3. The next method is func roomClientHandle(_ client: GCoreRoomClient, connectionEvent: GCoreRoomConnectionEvent). This is called when the connection status of the room changes. The device’s camera and microphone will turn on once successfully connected.

func roomClientHandle(_ client: GCoreRoomClient, connectionEvent: GCoreRoomConnectionEvent) {

// Turn on the camera and microphone here once the event reports a successful connection.

// See the SDK readme for the event's cases and the calls that toggle media.

}

4. The func roomClientHandle(_ client: GCoreRoomClient, remoteUsersEvent: GCoreRemoteUsersEvent) method is called for events related to remote user data but not related to media. You will use it to add and remove users during the call.

func roomClientHandle(_ client: GCoreRoomClient, remoteUsersEvent: GCoreRemoteUsersEvent) {

switch remoteUsersEvent {

case .handleRemote(let user):

model.remoteUsers += [.init(peerID: user.id, name: user.displayName ?? "")]

collectionView.reloadData()

case .closedRemote(let userId):

model.remoteUsers.removeAll(where: { $0.peerID == userId })

collectionView.reloadData()

default:

break

}

}
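Because users are appended both when joining the room and when later events arrive, it can help to guard against adding the same peer twice. Whether the SDK can actually report a user more than once is an assumption here. The sketch below uses the hypothetical stand-in types Participant and ParticipantList, and runs without the SDK.

```swift
/// Stand-in for VideoCallUnit without the video view.
struct Participant: Equatable {
    let peerID: String
    let name: String
}

/// Keeps at most one entry per peerID.
struct ParticipantList {
    private(set) var items: [Participant] = []

    /// Adds the participant unless the peerID is already present.
    /// Returns true if the list changed, so the caller knows whether to reload the UI.
    @discardableResult
    mutating func add(_ participant: Participant) -> Bool {
        guard !items.contains(where: { $0.peerID == participant.peerID }) else { return false }
        items.append(participant)
        return true
    }

    /// Removes the participant with the given peerID. Returns true if the list changed.
    @discardableResult
    mutating func remove(peerID: String) -> Bool {
        let before = items.count
        items.removeAll { $0.peerID == peerID }
        return items.count != before
    }
}

var list = ParticipantList()
list.add(Participant(peerID: "a", name: "Ann"))
list.add(Participant(peerID: "a", name: "Ann"))  // ignored, same peerID
list.add(Participant(peerID: "b", name: "Bob"))
list.remove(peerID: "a")
```

Returning a Bool from add and remove lets the controller call reloadData() only when something actually changed.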

5. The func roomClientHandle(_ client: GCoreRoomClient, mediaEvent: GCoreMediaEvent) method is called when the SDK is ready to provide the user’s video stream and when videos from the speakers arrive. You’ll use it to render the UI.

func roomClientHandle(_ client: GCoreRoomClient, mediaEvent: GCoreMediaEvent) {

switch mediaEvent {

case .produceLocalVideo(let track):

guard let localUser = model.localUser else { return }

track.add(localUser.view)

localUser.view.frame = self.localView.bounds

localView.addSubview(localUser.view)

case .handledRemoteVideo(let videoObject):

guard let user = model.remoteUsers.first(where: { $0.peerID == videoObject.peerId }) else { return }

videoObject.rtcVideoTrack.add(user.view)

default:

break

}

}
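The remote-video branch returns early when no user with a matching peerId is in the model yet. If a stream could arrive before its owner is known (an assumption about event ordering that this article does not confirm), one option is to hold the stream until the user appears. Here is a minimal sketch with the hypothetical stand-in types StubVideoStream and VideoRouter:

```swift
/// Stand-in for an incoming remote video stream; only the owner's ID matters here.
struct StubVideoStream: Equatable {
    let peerId: String
}

/// Holds streams that arrive before their owner is known and hands them over later.
struct VideoRouter {
    private(set) var attached: [String: StubVideoStream] = [:]
    private var pending: [String: StubVideoStream] = [:]
    private var knownPeers: Set<String> = []

    /// Registers a user and attaches any stream that was waiting for them.
    mutating func userJoined(peerID: String) {
        knownPeers.insert(peerID)
        if let stream = pending.removeValue(forKey: peerID) {
            attached[peerID] = stream
        }
    }

    /// Attaches the stream right away if its owner is known, otherwise parks it.
    mutating func videoArrived(_ stream: StubVideoStream) {
        if knownPeers.contains(stream.peerId) {
            attached[stream.peerId] = stream
        } else {
            pending[stream.peerId] = stream
        }
    }
}

var router = VideoRouter()
router.videoArrived(StubVideoStream(peerId: "a"))  // owner unknown, stream is parked
let attachedBeforeJoin = router.attached.count
router.userJoined(peerID: "a")                     // parked stream is attached now
```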

6. Let Xcode fill in the rest of the methods, leaving their bodies empty.

7. Setup is complete! The project is ready to run on a real device (running it in the simulator is not recommended).

Results

Your app now has a video calling feature. Here is what the implementation of this feature looks like in our demo application.

Developer notes

You can get a pixelBuffer from the SDK, which references an image frame received from the camera, so you can process the frame however you like. To do this, make the controller conform to MediaCapturerBufferDelegate and implement the mediaCapturerDidBuffer method. The example below adds a blur before the frame is sent to the server:

extension ViewController: MediaCapturerBufferDelegate {

func mediaCapturerDidBuffer(_ pixelBuffer: CVPixelBuffer) {

let image = CIImage(cvPixelBuffer: pixelBuffer).applyingGaussianBlur(sigma: 10)

CIContext().render(image, to: pixelBuffer)

}

}

Conclusion

Using the SDK and Gcore services, you can easily and quickly integrate video calling functionality into your application. Users will be pleased; they’re used to video calls in popular services like Instagram, WhatsApp, and Facebook, and now they will see a familiar feature in your application.

You can check out the source code for our project here: ios-demo-video-calls. There you can also take a peek at the implementation of other methods, moderator mode, screen preview, etc.

Mediasoup iOS Client was used to implement WebRTC on iOS.

GCoreVideoCallsSDK is used to connect and interact with the room, as well as to create sockets.

The article is based on the GcoreVideoCalls application.